In Kubernetes, a Pod is a group of containers: a single Pod can contain multiple containers. These containers share resources such as the network and storage, which gives applications a more flexible environment to run in.

A container is essentially a special process: Linux namespaces isolate its runtime environment, cgroups limit its resource usage, and Linux network virtualisation techniques handle its network communication.
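
On a Linux host, the namespaces a process belongs to can be inspected under /proc. The following is a small illustrative sketch, not part of the original walkthrough; `show_namespaces` is a hypothetical helper name, and it assumes a kernel that exposes /proc/<pid>/ns.

```shell
# Print the namespaces of a process (default: the current shell).
# Each link target has the form "type:[inode]"; two processes share a
# namespace exactly when the inode numbers for that type are equal.
show_namespaces() {
  ns_pid="${1:-$$}"
  for ns_link in /proc/"$ns_pid"/ns/*; do
    # Skip silently when the process or the ns directory does not exist.
    [ -e "$ns_link" ] || [ -L "$ns_link" ] || continue
    printf '%s -> %s\n' "${ns_link##*/}" "$(readlink "$ns_link")"
  done
}
```

Calling `show_namespaces` for two different PIDs and comparing the inode numbers shows whether the two processes are isolated from each other or share a namespace.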

What a Pod does is let multiple containers join the same namespaces so that they can share resources.

Next, we use Docker to reproduce how a Pod is implemented.

Shared NameSpace for containers

We will now build a Pod-like setup with two containers, nginx and busybox: the former is the main application providing web services, and the latter is a sidecar container used for debugging.

First start the nginx container:
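
A minimal sketch of such a command. The container name `nginx`, the host directory `$PWD/log`, the published port 8080 and the `start_nginx` wrapper are all assumptions made for illustration; the `DOCKER` variable exists only so that the command can be printed with `DOCKER=echo` instead of being executed.

```shell
# Start nginx as the container that owns the namespaces.
#   --ipc=shareable  lets other containers join its IPC namespace
#   -v               bind-mounts a host directory over the nginx log directory
#   -p               publishes container port 80 on host port 8080
start_nginx() {
  "${DOCKER:-docker}" run -d --name nginx \
    --ipc=shareable \
    -v "$PWD/log:/var/log/nginx" \
    -p 8080:80 \
    nginx
}
```

Run it with `start_nginx`, or preview the command with `DOCKER=echo start_nginx`.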

By default, the IPC namespace of a Docker container is private; --ipc="shareable" explicitly allows other containers to join it. The -v parameter mounts a volume and needs no further explanation.

Next, we start the busybox container and join it to the NET, IPC and PID namespaces of the nginx container. We also share the volumes of the nginx container so that busybox can access the nginx log files.
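
A sketch of what this step could look like, assuming the nginx container from the previous step is named `nginx`. The `start_busybox` wrapper and the `sleep 3600` command that keeps the container alive are assumptions.

```shell
# Start busybox inside the namespaces owned by the nginx container.
#   --net/--ipc/--pid=container:nginx  join the existing namespaces
#   --volumes-from nginx               mount the same volumes as nginx
start_busybox() {
  "${DOCKER:-docker}" run -d --name busybox \
    --net=container:nginx \
    --ipc=container:nginx \
    --pid=container:nginx \
    --volumes-from nginx \
    busybox sleep 3600
}
```

No port is published here: the busybox container has no network namespace of its own, so any port mapping belongs to the container that owns the namespace.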

Once both containers are started, you can debug the resources of the nginx container directly in the busybox container.
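
For example, the three kinds of sharing could be checked roughly as follows. This is a sketch that assumes the container names `nginx` and `busybox` used above; `debug_from_busybox` is a hypothetical helper name.

```shell
debug_from_busybox() {
  # Processes: nginx is visible because the PID namespace is shared.
  "${DOCKER:-docker}" exec busybox ps
  # Network: localhost:80 reaches nginx because the network namespace is shared.
  "${DOCKER:-docker}" exec busybox wget -qO- http://localhost
  # Storage: the log directory comes from the volume mounted in nginx.
  "${DOCKER:-docker}" exec busybox ls /var/log/nginx
}
```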

In the busybox container, not only can you see the processes of the nginx container, but you can also directly access the nginx services and shared log file directories.

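However, because the namespaces were created by the nginx container, they depend on it: if nginx crashes unexpectedly, the namespaces are torn down with it and the busybox container is terminated as well. In the shared PID namespace nginx is PID 1, and when PID 1 exits the kernel kills every other process in that namespace.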

Clearly, it is not desirable to have a business container act as the shared base container; the containers should be peers of one another, not parent and child. Kubernetes takes this into account as well.

Pause containers

The Pause container is also known as the Infra container. To avoid relying on a business container as the shared base, Kubernetes starts an additional Infra container in each Pod to hold the namespaces shared by the entire Pod.

The Pause container is started when the Pod is created. It creates the namespaces, and its network namespace is where the Pod's IP address, routes and other network settings are configured. In effect, the lifecycle of the Pause container is the lifecycle of the Pod.

Once the Pause container has been started, other containers are started and share the Namespace with the Pause container, so that each container can access the resources of the other containers in the Pod.

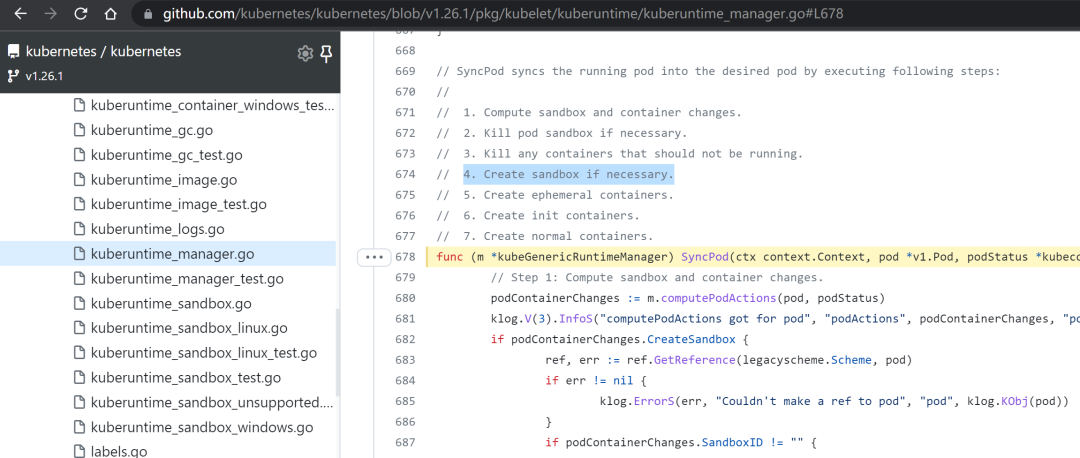

The creation process can be found in the kubelet source code: pkg/kubelet/kuberuntime/kuberuntime_manager.go#L678

The Sandbox container created in step 4 is in fact the Pause container. Only after it exists are the init containers and then the regular containers created.

The Pause container, which plays such an important role, also has its own characteristics.

-

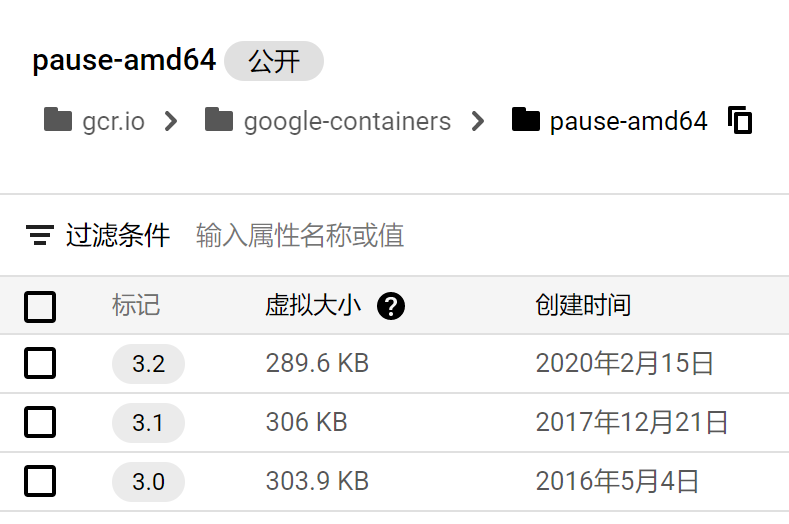

the image is very small: gcr.io/google_containers/pause-amd64

-

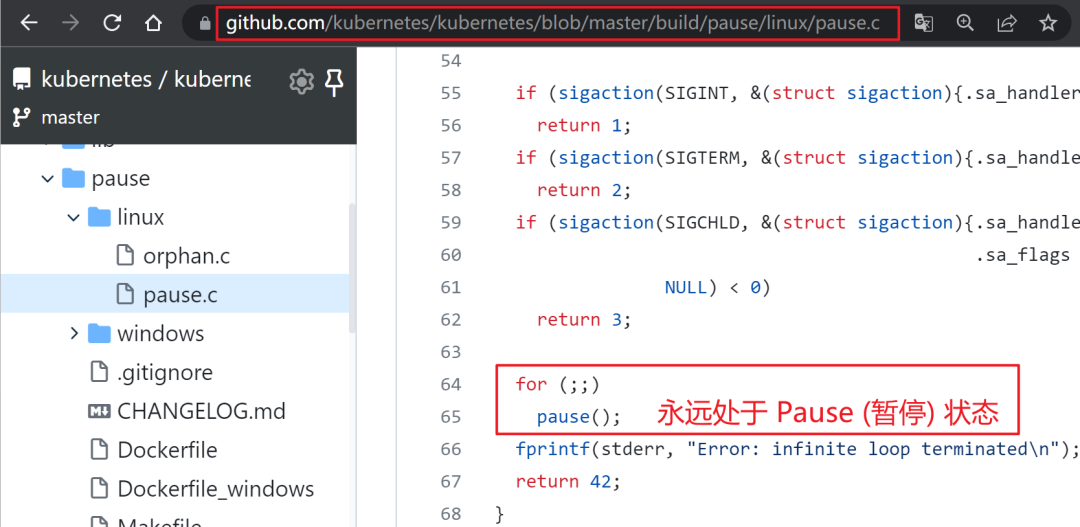

The performance overhead is almost negligible: pause.c

Now that we understand the Pause container, let’s repeat our Pod experiment.

Starting with a new environment, the first thing we should start this time is the Pause container.
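
A minimal sketch of this step. The image reference `registry.k8s.io/pause:3.9` is an assumption (override it with `PAUSE_IMAGE` to use another pause image), as are the container name `pause`, the published port and the `start_pause` wrapper.

```shell
# Start the pause container first so that it owns all shared namespaces.
# The port is published here because the containers that join later
# reuse this network namespace and cannot publish ports themselves.
start_pause() {
  "${DOCKER:-docker}" run -d --name pause \
    --ipc=shareable \
    -p 8080:80 \
    "${PAUSE_IMAGE:-registry.k8s.io/pause:3.9}"
}
```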

Then start the nginx container again and add it to the Namespace of the Pause container.
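
As a sketch, assuming the Pause container is named `pause` and the logs are again kept in the hypothetical host directory `$PWD/log`:

```shell
# Start nginx as an ordinary member of the namespaces owned by pause.
start_nginx_in_pause() {
  "${DOCKER:-docker}" run -d --name nginx \
    --net=container:pause \
    --ipc=container:pause \
    --pid=container:pause \
    -v "$PWD/log:/var/log/nginx" \
    nginx
}
```

Unlike in the first experiment, nginx no longer creates any shared namespace itself; it only joins the ones that already exist.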

Ditto for the busybox container.
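
A sketch under the same assumptions (container names `pause` and `nginx`, a `sleep 3600` command to keep busybox alive):

```shell
# Start busybox in the namespaces owned by pause; only the volumes
# still come from the nginx container.
start_busybox_in_pause() {
  "${DOCKER:-docker}" run -d --name busybox \
    --net=container:pause \
    --ipc=container:pause \
    --pid=container:pause \
    --volumes-from nginx \
    busybox sleep 3600
}
```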

You will now see both the pause and nginx container processes in the busybox container.

And even if nginx unexpectedly crashes, it won’t affect the busybox container.
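
Both observations could be checked with something like the following sketch. It assumes the three containers are named `pause`, `nginx` and `busybox`; `check_crash_isolation` is a hypothetical helper name, and `docker kill` stands in for an unexpected crash.

```shell
check_crash_isolation() {
  # Before the crash: list every process visible from busybox.
  "${DOCKER:-docker}" exec busybox ps
  # Simulate a crash by killing the nginx container (SIGKILL by default).
  "${DOCKER:-docker}" kill nginx
  # After the crash: ask Docker whether busybox is still running.
  "${DOCKER:-docker}" inspect -f '{{.State.Running}}' busybox
}
```

Since the namespaces now belong to the Pause container, the last command is expected to keep reporting a running busybox container.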

Back to Kubernetes

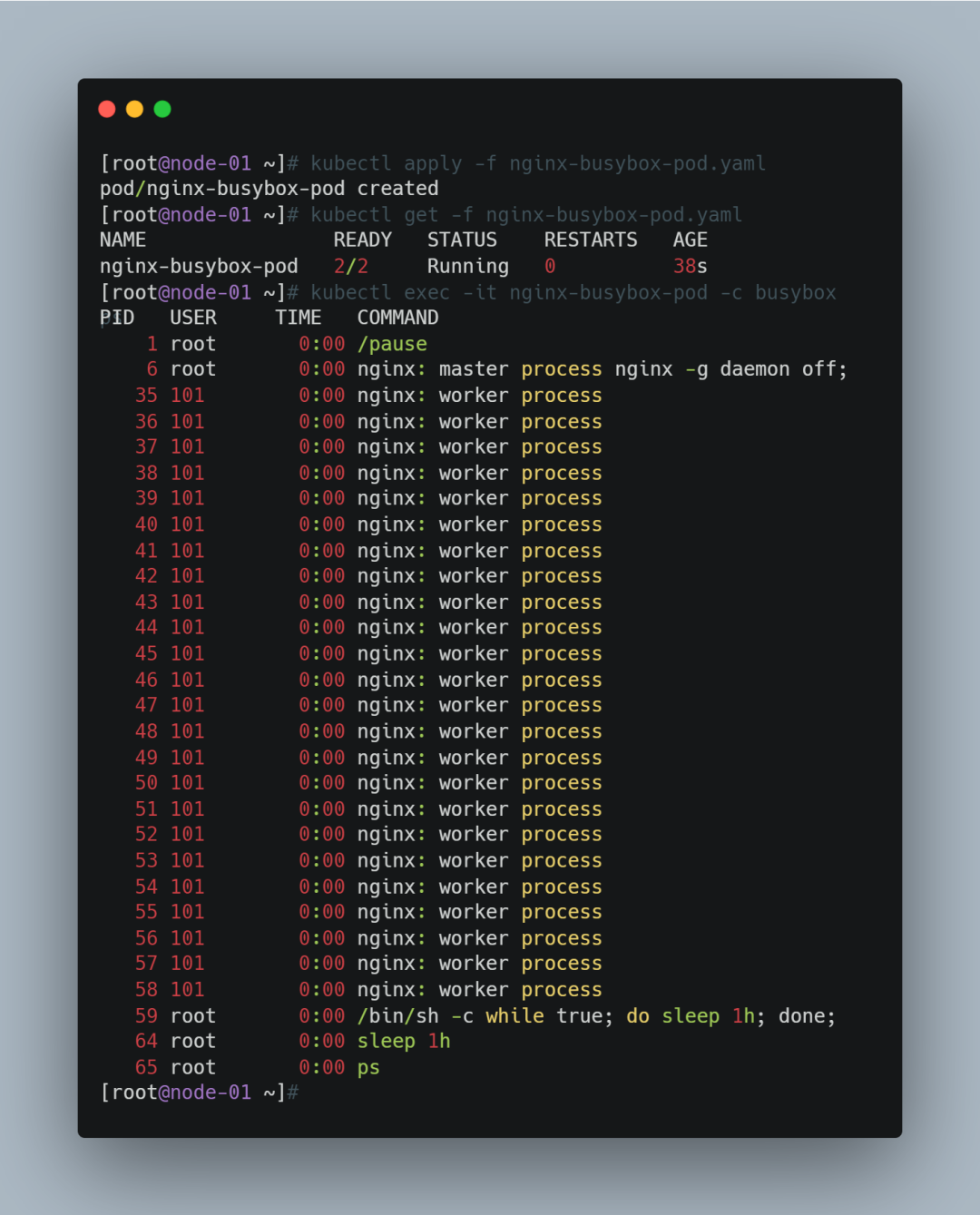

I’m sure you now understand what happens when you execute the kubectl apply -f nginx-busybox-pod.yaml command in a Kubernetes cluster.
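
For reference, a manifest equivalent to the Docker experiment might look like the sketch below. Everything in it is an assumption rather than the original file: the Pod name, the `emptyDir` volume, the `sleep` command and the `shareProcessNamespace` setting, which is added here to mirror the shared PID namespace of the experiment. `write_pod_manifest` is a hypothetical helper that only writes the file.

```shell
# Write an illustrative Pod manifest (default: nginx-busybox-pod.yaml).
write_pod_manifest() {
  cat > "${1:-nginx-busybox-pod.yaml}" <<'EOF'
apiVersion: v1
kind: Pod
metadata:
  name: nginx-busybox
spec:
  # Counterpart of --pid=container:pause in the Docker experiment.
  shareProcessNamespace: true
  containers:
    - name: nginx
      image: nginx
      ports:
        - containerPort: 80
      volumeMounts:
        - name: nginx-log
          mountPath: /var/log/nginx
    - name: busybox
      image: busybox
      command: ["sleep", "3600"]
      volumeMounts:
        - name: nginx-log
          mountPath: /var/log/nginx
  volumes:
    - name: nginx-log
      emptyDir: {}
EOF
}
```

After `write_pod_manifest`, the file can be applied with `kubectl apply -f nginx-busybox-pod.yaml`. The network namespace needs no extra setting, because containers in a Pod always share it.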

The principle is as simple as that.