Introduction

The other day I was tinkering with Ubuntu and wanted to use the NVIDIA graphics card in my old computer to run code on the GPU and experience the joy of many-core parallelism.

Luckily, the card supports CUDA.

    $ sudo lshw -C display
      *-display
           description: 3D controller
           product: GK208M [GeForce GT 740M]
           vendor: NVIDIA Corporation
           physical id: 0
           bus info: pci@0000:01:00.0
           version: a1
           width: 64 bits
           clock: 33MHz
           capabilities: pm msi pciexpress bus_master cap_list rom
           configuration: driver=nouveau latency=0
           resources: irq:35 memory:f0000000-f0ffffff memory:c0000000-cfffffff memory:d0000000-d1ffffff ioport:6000(size=128)
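The two fields worth reading in this output are the product name and the kernel driver in use. As a minimal sketch, the snippet below pulls both out of a trimmed copy of the text above; parse_display_info is a hypothetical helper name, not part of lshw or any library. A driver value of nouveau means the open-source driver is active, and CUDA requires the proprietary NVIDIA driver instead.

```python
import re

# Trimmed copy of the lshw output shown above.
LSHW_OUTPUT = """\
  *-display
       description: 3D controller
       product: GK208M [GeForce GT 740M]
       vendor: NVIDIA Corporation
       configuration: driver=nouveau latency=0
"""


def parse_display_info(text):
    """Return the product name and kernel driver found in lshw display output."""
    product = re.search(r"^\s*product:\s*(.+)$", text, re.MULTILINE)
    driver = re.search(r"\bdriver=(\S+)", text)
    return {
        "product": product.group(1).strip() if product else None,
        "driver": driver.group(1) if driver else None,
    }


info = parse_display_info(LSHW_OUTPUT)
print(info)
if info["driver"] == "nouveau":
    # CUDA cannot use the open-source nouveau driver.
    print("nouveau is active; CUDA needs the proprietary NVIDIA driver")
```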

First, install the CUDA development tools with the following command.

    $ sudo apt install nvidia-cuda-toolkit

Check the installed version.

    $ nvcc --version
    nvcc: NVIDIA (R) Cuda compiler driver
    Copyright (c) 2005-2021 NVIDIA Corporation
    Built on Thu_Nov_18_09:45:30_PST_2021
    Cuda compilation tools, release 11.5, V11.5.119
    Build cuda_11.5.r11.5/compiler.30672275_0

Install the relevant dependency packages via Conda.

    conda install numba && conda install cudatoolkit

Installing via pip works just as well.

Testing and driver installation

A quick test raised an exception.

    $ /home/larry/anaconda3/bin/python /home/larry/code/pkslow-samples/python/src/main/python/cuda/test1.py
    Traceback (most recent call last):
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 246, in ensure_initialized
        self.cuInit(0)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 319, in safe_cuda_api_call
        self._check_ctypes_error(fname, retcode)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 387, in _check_ctypes_error
        raise CudaAPIError(retcode, msg)
    numba.cuda.cudadrv.driver.CudaAPIError: [100] Call to cuInit results in CUDA_ERROR_NO_DEVICE

    During handling of the above exception, another exception occurred:

    Traceback (most recent call last):
      File "/home/larry/code/pkslow-samples/python/src/main/python/cuda/test1.py", line 15, in <module>
        gpu_print[1, 2]()
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/compiler.py", line 862, in __getitem__
        return self.configure(*args)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/compiler.py", line 857, in configure
        return _KernelConfiguration(self, griddim, blockdim, stream, sharedmem)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/compiler.py", line 718, in __init__
        ctx = get_context()
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/devices.py", line 220, in get_context
        return _runtime.get_or_create_context(devnum)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/devices.py", line 138, in get_or_create_context
        return self._get_or_create_context_uncached(devnum)
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/devices.py", line 153, in _get_or_create_context_uncached
        with driver.get_active_context() as ac:
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 487, in __enter__
        driver.cuCtxGetCurrent(byref(hctx))
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 284, in __getattr__
        self.ensure_initialized()
      File "/home/larry/anaconda3/lib/python3.9/site-packages/numba/cuda/cudadrv/driver.py", line 250, in ensure_initialized
        raise CudaSupportError(f"Error at driver init: {description}")
    numba.cuda.cudadrv.error.CudaSupportError: Error at driver init: Call to cuInit results in CUDA_ERROR_NO_DEVICE (100)

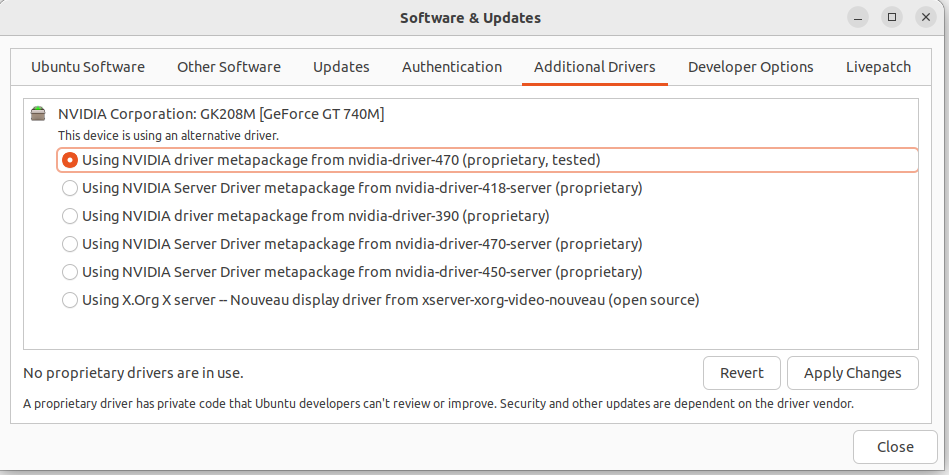

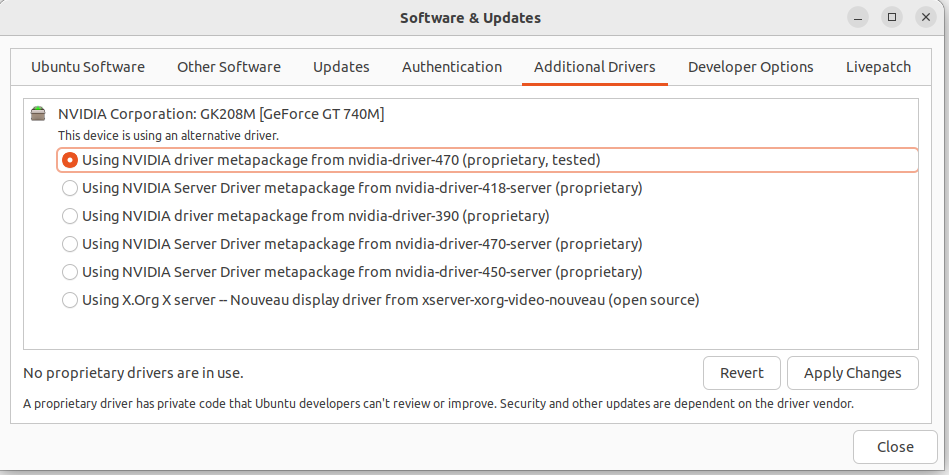

After searching online, I found that it was a driver issue, so I installed the graphics card driver with the tool that comes with Ubuntu.

It still failed.

    $ nvidia-smi
    NVIDIA-SMI has failed because it couldn't communicate with the NVIDIA driver. Make sure that the latest NVIDIA driver is installed and running.

Finally, the problem was successfully solved by installing the driver from the command line.

    $ sudo apt install nvidia-driver-470

Checking again with nvidia-smi, everything now looks normal.

    $ nvidia-smi
    Wed Dec  7 22:13:49 2022
    +-----------------------------------------------------------------------------+
    | NVIDIA-SMI 470.161.03   Driver Version: 470.161.03   CUDA Version: 11.4     |
    |-------------------------------+----------------------+----------------------+
    | GPU  Name        Persistence-M| Bus-Id        Disp.A | Volatile Uncorr. ECC |
    | Fan  Temp  Perf  Pwr:Usage/Cap|         Memory-Usage | GPU-Util  Compute M. |
    |                               |                      |               MIG M. |
    |===============================+======================+======================|
    |   0  NVIDIA GeForce ...  Off  | 00000000:01:00.0 N/A |                  N/A |
    | N/A   51C    P8    N/A /  N/A |      4MiB /  2004MiB |     N/A      Default |
    |                               |                      |                  N/A |
    +-------------------------------+----------------------+----------------------+

    +-----------------------------------------------------------------------------+
    | Processes:                                                                  |
    |  GPU   GI   CI        PID   Type   Process name                  GPU Memory |
    |        ID   ID                                                   Usage      |
    |=============================================================================|
    |  No running processes found                                                 |
    +-----------------------------------------------------------------------------+

Run Python code

Print ID

Prepare the following code.

    from numba import cuda


    def cpu_print():
        print('cpu print')


    @cuda.jit
    def gpu_print():
        # Global index of this thread across all blocks
        dataIndex = cuda.threadIdx.x + cuda.blockIdx.x * cuda.blockDim.x
        print('gpu print ', cuda.threadIdx.x, cuda.blockIdx.x, cuda.blockDim.x, dataIndex)


    if __name__ == '__main__':
        # Launch the kernel with 4 blocks of 4 threads each
        gpu_print[4, 4]()
        # Wait for the GPU to finish before continuing on the CPU
        cuda.synchronize()
        cpu_print()

This code has two functions that each print something: one runs on the CPU and the other on the GPU. The key is the @cuda.jit decorator, which compiles the function as a kernel that can be executed on the GPU. The result of the run is as follows.

    $ /home/larry/anaconda3/bin/python /home/larry/code/pkslow-samples/python/src/main/python/cuda/print_test.py
    gpu print 0 3 4 12
    gpu print 1 3 4 13
    gpu print 2 3 4 14
    gpu print 3 3 4 15
    gpu print 0 2 4 8
    gpu print 1 2 4 9
    gpu print 2 2 4 10
    gpu print 3 2 4 11
    gpu print 0 1 4 4
    gpu print 1 1 4 5
    gpu print 2 1 4 6
    gpu print 3 1 4 7
    gpu print 0 0 4 0
    gpu print 1 0 4 1
    gpu print 2 0 4 2
    gpu print 3 0 4 3
    cpu print

You can see that the GPU prints 16 times in total: the launch configuration [4, 4] starts 4 blocks of 4 threads each, and every thread executes the function once. The order of the lines may differ from run to run, because the threads run in parallel and there is no guarantee which one executes first. Kernel launches are also asynchronous, so cuda.synchronize() must be called to make sure the GPU has finished before the CPU code continues.
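The index arithmetic can be checked without a GPU. The sketch below replays the same formula on the CPU for a launch of 4 blocks with 4 threads each; simulate_launch is a hypothetical helper written for illustration, and unlike the real kernel it always visits the threads in the same order.

```python
def simulate_launch(blocks_per_grid, threads_per_block):
    """Enumerate (threadIdx.x, blockIdx.x, blockDim.x, dataIndex) on the CPU."""
    rows = []
    for block_idx in range(blocks_per_grid):
        for thread_idx in range(threads_per_block):
            # Same formula as in the kernel: offset of the block plus the
            # position of the thread inside the block.
            data_index = thread_idx + block_idx * threads_per_block
            rows.append((thread_idx, block_idx, threads_per_block, data_index))
    return rows


rows = simulate_launch(4, 4)
for row in rows:
    print("simulated", *row)
```

Each of the 16 threads gets a distinct dataIndex from 0 to 15, which is what lets every thread work on its own array element.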

Next, let's try to measure the speedup with the following code.

    from numba import jit, cuda
    import numpy as np
    # to measure exec time
    from timeit import default_timer as timer


    # normal function to run on cpu
    def func(a):
        for i in range(10000000):
            a[i] += 1


    # function intended to run on gpu
    # (note: target_backend is not a documented numba.jit option)
    @jit(target_backend='cuda')
    def func2(a):
        for i in range(10000000):
            a[i] += 1


    if __name__ == "__main__":
        n = 10000000
        a = np.ones(n, dtype=np.float64)

        start = timer()
        func(a)
        print("without GPU:", timer() - start)

        start = timer()
        func2(a)
        print("with GPU:", timer() - start)

The results are as follows.

    $ /home/larry/anaconda3/bin/python /home/larry/code/pkslow-samples/python/src/main/python/cuda/time_test.py
    without GPU: 3.7136273959999926
    with GPU: 0.4040513340000871

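You can see that the plain Python loop takes about 3.7 seconds, while the Numba-compiled version takes only about 0.4 seconds, roughly nine times faster. One caveat: target_backend is not a documented option of numba.jit, so func2 was most likely compiled for and run on the CPU, in which case the speedup comes from JIT compilation rather than from the GPU. The 0.4 seconds also includes the one-time compilation triggered by the first call. In any case, a GPU is not necessarily faster than a CPU; it depends on the type of task.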
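For comparison, a version that explicitly runs on the GPU can be written with @cuda.jit, as in the print example. The following is a minimal sketch, not the code that produced the timings above. The helper names blocks_needed and increment_on_gpu and the block size of 256 are assumptions for illustration, and the function returns None when Numba or a CUDA device is unavailable.

```python
def blocks_needed(n, threads_per_block=256):
    """Smallest number of blocks such that blocks * threads_per_block >= n."""
    return (n + threads_per_block - 1) // threads_per_block


def increment_on_gpu(n=10_000_000, threads_per_block=256):
    """Add 1 to every element of an array of ones on the GPU.

    Returns the resulting NumPy array, or None if Numba or CUDA is unavailable.
    """
    try:
        import numpy as np
        from numba import cuda
    except ImportError:
        return None
    if not cuda.is_available():
        return None

    @cuda.jit
    def add_one(a):
        i = cuda.grid(1)   # global index of this thread
        if i < a.size:     # skip threads past the end of the array
            a[i] += 1

    d_a = cuda.to_device(np.ones(n, dtype=np.float64))  # copy input to the GPU
    add_one[blocks_needed(n, threads_per_block), threads_per_block](d_a)
    cuda.synchronize()                                   # wait for the kernel
    return d_a.copy_to_host()                            # copy result back


if __name__ == "__main__":
    result = increment_on_gpu()
    print("GPU not available" if result is None else result[:4])
```

The bounds check matters because the grid is rounded up to whole blocks, so the last block usually contains threads whose index is past the end of the array.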