Interrupts and exceptions can be summarized as an event-handling mechanism. An interrupt or exception raises a signal; the processor then suspends the current operation, the operating system finds and runs the handler that corresponds to that signal, and, depending on the result, execution returns to the original program.

Different books define exceptions and interrupts in slightly different ways, although they all describe the same thing. Here I use the definitions from the Intel 64 and IA-32 Architectures Software Developer's Manual.

- An interrupt is an asynchronous event that is typically triggered by an I/O device.

- An exception is a synchronous event that is generated when the processor detects one or more predefined conditions while executing an instruction. The IA-32 architecture specifies three classes of exceptions: faults, traps, and aborts.

We can broadly understand an interrupt as an asynchronous event triggered by an I/O device, such as a user's keyboard input. It is an electrical signal that is generated by a hardware device and passed to the CPU via the interrupt controller. The CPU has two special pins, NMI and INTR, to receive interrupt signals.

An exception is a synchronous event, usually generated by an error in the program or by an exceptional condition that the kernel must handle, such as a page fault or a system call. Exceptions are classified as faults, traps, or aborts.

An exception is called synchronous because the CPU raises it as a direct result of executing an instruction, as with a system call, so it occurs at a predictable point in the instruction stream. An interrupt is called asynchronous because it originates outside the running program and can arrive at any time, regardless of which instruction is executing; the CPU acts on it at the boundary between two instructions.

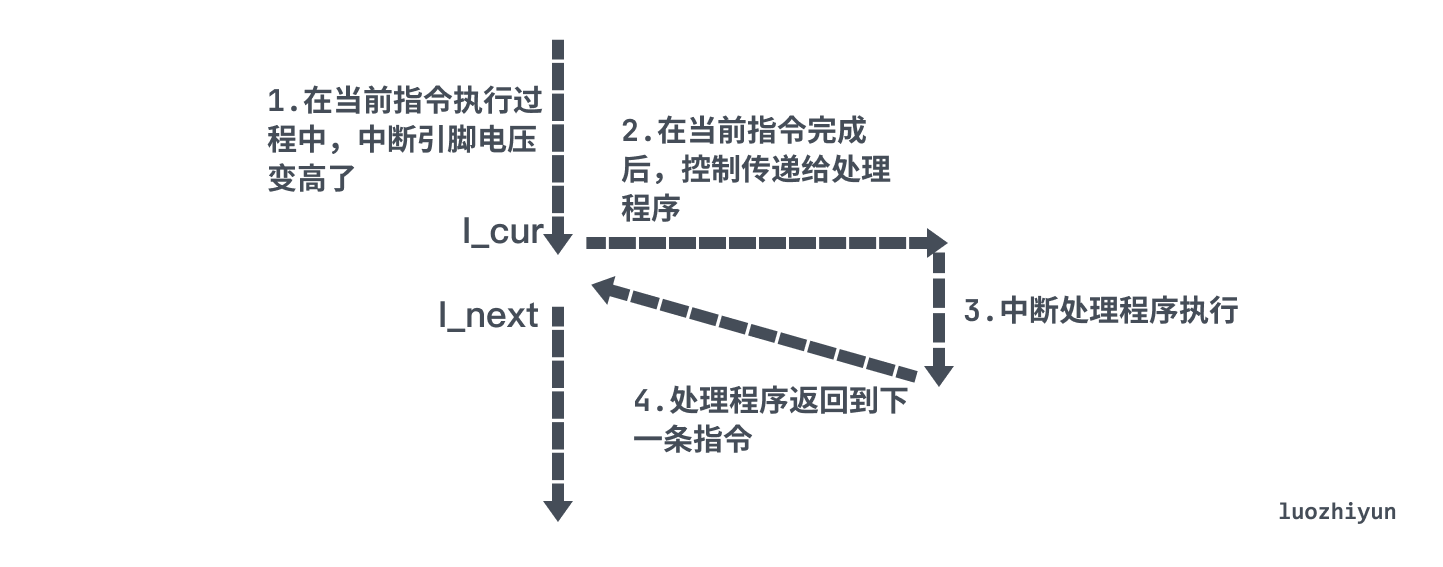

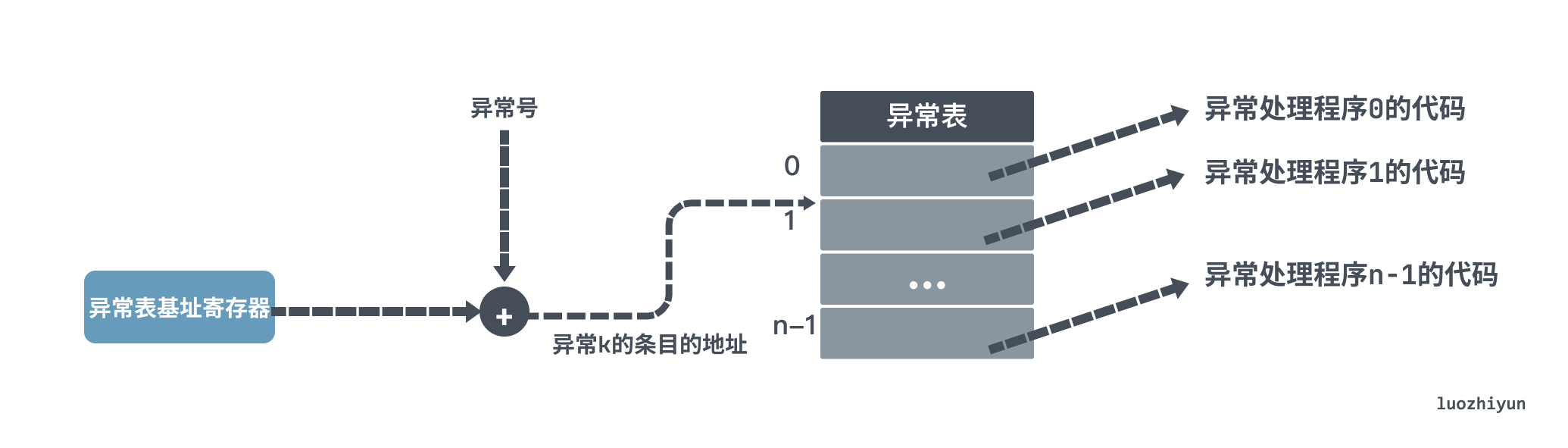

When an exception or interrupt occurs, the processor stops executing the current program or task and instead runs a handler written specifically for that interrupt or exception. The processor looks up the corresponding handler in the interrupt descriptor table (IDT), and when the handler finishes, control returns to the interrupted program or task, which continues executing.

In Linux, an interrupt handler is a C function, except that these functions must be declared with a specific type so that the kernel can pass information about the handler in a standard way. Interrupt handlers run in interrupt context, and code executing in that context must not block.

On x86 systems, the IDT holds up to 256 entries, one per interrupt vector. Vectors 0 to 31 are reserved for the exceptions and interrupts defined by Intel, and vectors 32 to 255 are available for interrupts defined by the operating system.
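
The lookup described above can be sketched as a toy model in Python. This is only an illustration: a real IDT holds gate descriptors set up by the kernel, not Python callables, and the names `idt`, `register_handler`, `is_architecture_defined`, and `dispatch` are hypothetical.

```python
# Toy model of an interrupt descriptor table: 256 slots indexed by vector.
# All names are illustrative; a real IDT holds gate descriptors.
NUM_VECTORS = 256
FIRST_OS_VECTOR = 32  # vectors 0-31 are reserved by the architecture

idt = [None] * NUM_VECTORS


def register_handler(vector, handler):
    """Install a handler for a vector in the range 0..255."""
    if not 0 <= vector < NUM_VECTORS:
        raise ValueError(f"vector {vector} out of range")
    idt[vector] = handler


def is_architecture_defined(vector):
    """True for the vectors reserved by Intel (0-31)."""
    return 0 <= vector < FIRST_OS_VECTOR


def dispatch(vector, interrupted_pc):
    """Look up the handler, run it, then resume the interrupted program."""
    handler = idt[vector]
    if handler is None:
        raise RuntimeError(f"no handler installed for vector {vector}")
    handler(vector)
    # Simplification: always resume at the saved address. On real hardware
    # a fault re-executes the faulting instruction, while a trap continues
    # with the instruction after it.
    return interrupted_pc


log = []
register_handler(14, lambda v: log.append(f"page fault (vector {v})"))
register_handler(0x80, lambda v: log.append(f"system call (vector {v})"))

resume_at = dispatch(14, interrupted_pc=0x4000)
```

After the call, `log` holds one entry for the page fault and `resume_at` is the address that was passed in, mirroring the "handle, then return" flow.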

| Vector | Mnemonic | Description | Source |
|---|---|---|---|
| 0 | #DE | Divide Error | DIV and IDIV instructions. |
| 13 | #GP | General Protection | Any memory reference and other protection checks. |
| 14 | #PF | Page Fault | Any memory reference. |
| 18 | #MC | Machine Check | Error codes (if any) and source are model dependent. |
| 32-255 | - | Maskable Interrupts | External interrupt from INTR pin or INT n instruction. |

The full table can be found in the Intel 64 and IA-32 Architectures Software Developer's Manual. I have listed only some common exceptions above: divide error, general protection, page fault, and machine check.

Here are a few more high-level exceptions and interrupts: system calls, context switches, signals, etc.

System call

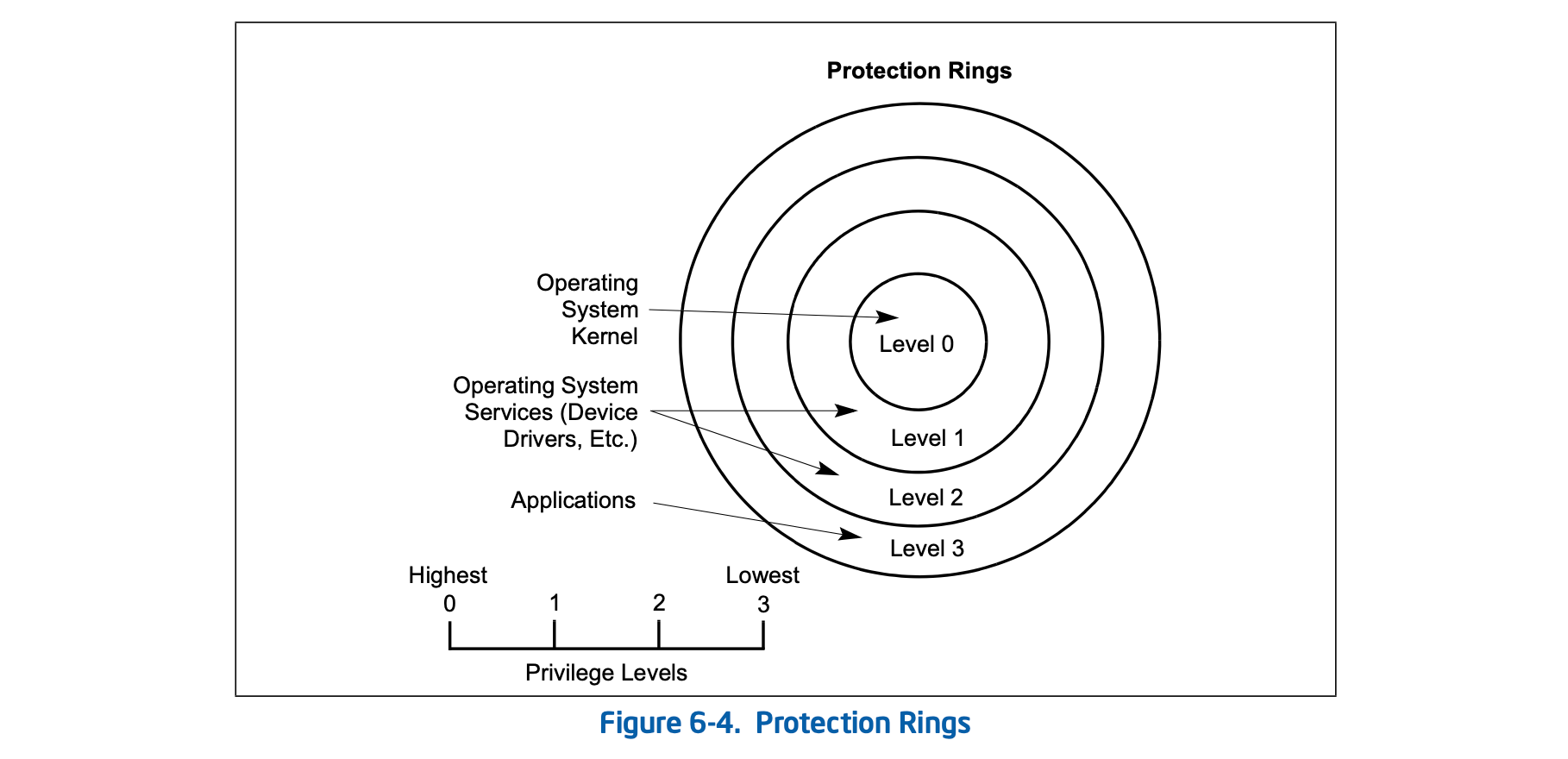

The processor provides the operating system with four privilege levels, numbered from 0 (most privileged) to 3 (least privileged).

- Level 0: Provides direct access to all hardware resources. Limited to the lowest level of operating system functionality, such as the BIOS and memory management.

- Level 1: Access to hardware resources is somewhat restricted. May be used by library programs and software that controls I/O devices.

- Level 2: More restricted access to hardware resources. May be used by library programs and software that controls I/O devices.

- Level 3: No direct access to hardware resources. Applications operate at this level.

For the security and stability of the system, user-space code cannot execute kernel code directly; to make a system call it must switch to kernel mode. The application therefore triggers an exception with the int $n instruction, where n is the vector number in the IDT described above. Older Linux kernels use the int $0x80 instruction: it switches the system to kernel mode and runs the corresponding exception handler.

On Linux, each system call is assigned a system call number, and the kernel keeps a list of all registered system calls in the system call table, sys_call_table. Since all system calls trap into the kernel in the same way, the kernel needs the number to tell them apart, and it is passed via the eax register. User space puts the number of the desired system call into eax before trapping into the kernel.

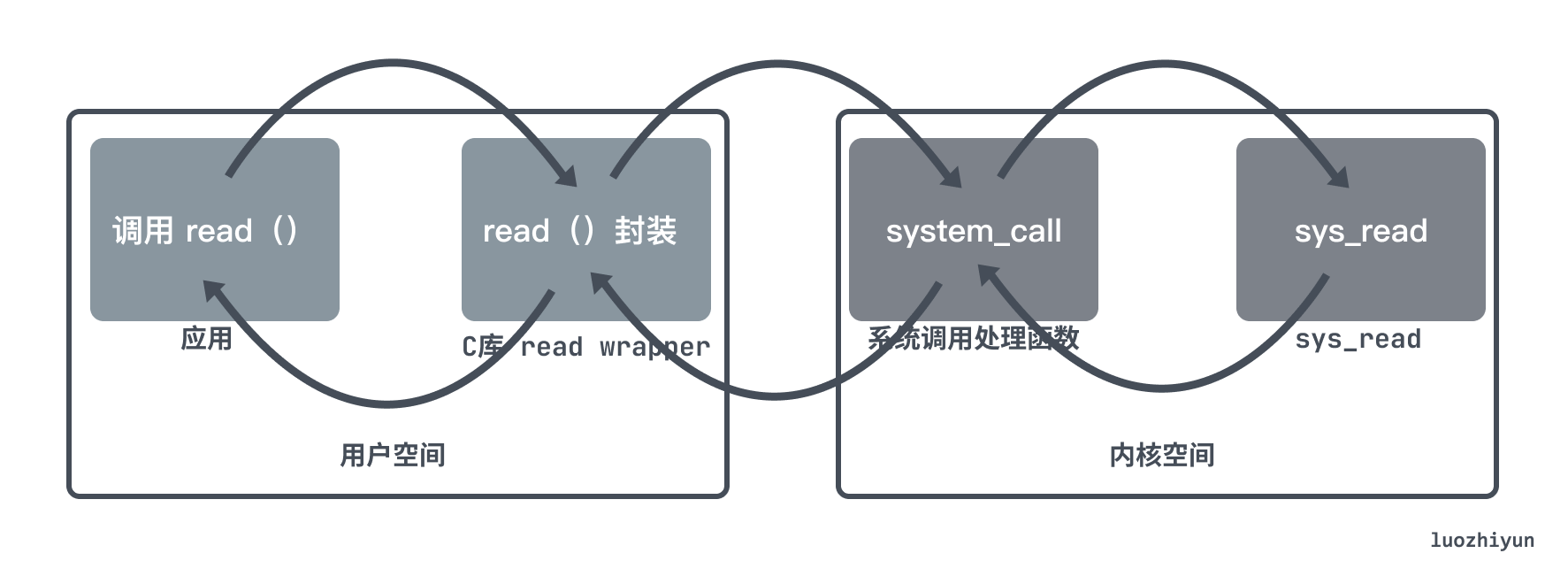

So the system call execution flow is as follows.

- The application code calls read, a library function that wraps the system call.

- The library function ( read ) prepares the parameters to be passed to the kernel and triggers a software interrupt to switch to the kernel.

- The CPU takes the interrupt and executes the interrupt handler, i.e. the system call handler ( system_call ).

- The system call handler calls the system call service routine ( sys_read ), which does the actual work of the system call.
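
The four steps above can be modeled in a few lines of Python. This is a sketch under assumptions, not the real Linux ABI: the names `sys_call_table`, `system_call`, and `sys_read` mirror the text, while `int_0x80`, `open_files`, the register dictionary, and the use of ebx, ecx, and edx for the arguments (the usual 32-bit x86 convention) are choices made for this example.

```python
import errno

# Toy model of the int $0x80 path; the "file" contents are invented.
SYS_READ = 3  # system call number used in this sketch

open_files = {0: b"hello, kernel\n"}  # fd -> bytes still to be read


def sys_read(fd, buf, count):
    """Service routine: does the actual work in 'kernel mode'."""
    data = open_files.get(fd)
    if data is None:
        return -errno.EBADF
    n = min(count, len(data), len(buf))
    buf[:n] = data[:n]          # copy into the caller's buffer
    open_files[fd] = data[n:]   # advance the file position
    return n


sys_call_table = {SYS_READ: sys_read}


def system_call(regs):
    """System call handler: dispatch on the number held in eax."""
    service = sys_call_table.get(regs["eax"])
    if service is None:
        regs["eax"] = -errno.ENOSYS
    else:
        regs["eax"] = service(regs["ebx"], regs["ecx"], regs["edx"])
    return regs


def int_0x80(regs):
    """Stand-in for the trap instruction: enter the kernel via vector 0x80."""
    return system_call(regs)


def read(fd, buf, count):
    """Library wrapper: load the registers, trap, return the result.

    A real C library wrapper would also turn a negative result into -1
    and set errno; that step is omitted here.
    """
    regs = {"eax": SYS_READ, "ebx": fd, "ecx": buf, "edx": count}
    return int_0x80(regs)["eax"]


buf = bytearray(5)
n = read(0, buf, len(buf))
```

The return value travels back in the same register that carried the system call number, which is why the wrapper reads `eax` after the trap.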

Context switch

A context switch is a higher-level form of exceptional control flow through which the operating system implements multitasking. In other words, context switching is built on top of the lower-level exception mechanism.

The kernel uses a scheduler to control whether the current process can be preempted. If it is preempted, the kernel selects a new process to run, saves the context of the old process, restores the context of the new process, and then passes control to the new process, which is called context switching.

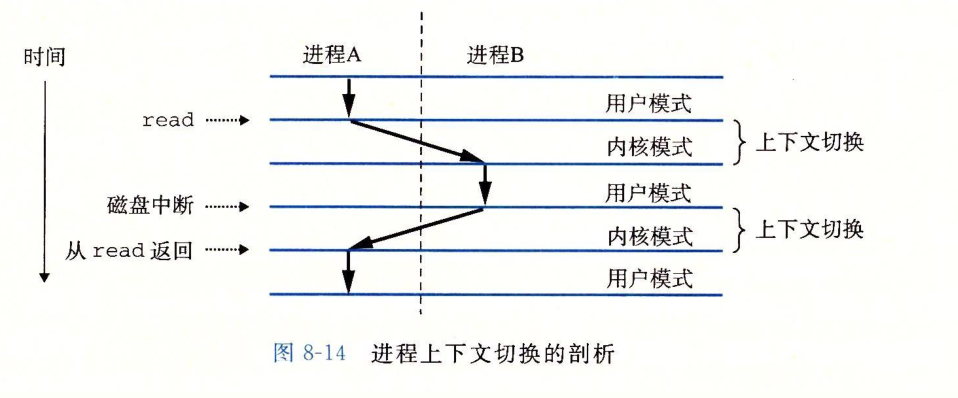

A context switch may occur while a system call is executing. For example, if a process blocks on a read system call that accesses the disk, the kernel may put the current process to sleep and switch to another process. Context switches can also occur as a result of interrupts, such as when the periodic system timer interrupt fires and the kernel decides that the current process has run long enough and switches to a new one.

The process switch occurs only in the kernel state. Before the process switch is performed, the contents of all registers used by the user state process are saved on the kernel state stack.

The figure above shows an example of switching between processes A and B. Process A is initially in user mode until it enters the kernel by executing the system call read. Since it takes some time for the disk to read the data, the kernel switches from process A to process B. After the disk data is ready, the disk controller signals an interrupt to indicate that the data has been transferred from disk to memory. The kernel then switches from process B back to process A and executes the instruction in process A that follows the read call.
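
The save-and-restore idea can be imitated with Python generators, whose suspended frames play the role of a saved context. This is only an analogy, assuming a simple round-robin policy; `process` and `run` are hypothetical names, and a real kernel saves registers and switches stacks instead.

```python
from collections import deque


def process(name, steps):
    """A 'process' whose saved context is the generator's suspended frame."""
    for i in range(steps):
        # Each yield models entering the kernel (a system call or a timer
        # interrupt), which gives the scheduler a chance to switch.
        yield f"{name}{i}"


def run(processes):
    """Round-robin scheduler: resume one process, then switch to the next."""
    ready = deque(processes)
    trace = []
    while ready:
        current = ready.popleft()        # pick the next process to run
        try:
            trace.append(next(current))  # restore its context and run it
        except StopIteration:
            continue                     # process exited; do not requeue
        ready.append(current)            # context stays saved in the generator
    return trace


trace = run([process("A", 3), process("B", 2)])
# trace == ["A0", "B0", "A1", "B1", "A2"]
```

Each process resumes exactly where it left off, which is the property a context switch has to preserve.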

Signals

Signals are a software form of exception that allows processes and the kernel to interrupt other processes and can notify the process that an event of some type has occurred on the system. Each signal type corresponds to some type of system event. The underlying hardware exceptions are handled by kernel exception handlers and are normally invisible to the user process. However, signals can inform the user process that an exception has occurred, e.g., if a process tries to divide by 0, the kernel sends it a SIGFPE signal; if a process executes an illegal instruction, the kernel sends a SIGILL signal, etc.

Delivering a signal involves two steps: sending the signal and receiving it.

A signal that has been sent but not yet received is called a pending signal. There can be at most one pending signal of any given type. If a process already has a pending signal of type k, any subsequent signals of type k sent to that process are simply discarded rather than queued.

A process can also block the reception of certain signals. When a signal is blocked, it can still be sent, but not received, until the process unblocks the signal.
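
Blocking can be observed from Python on a Unix-like system with the standard `signal` module. The sketch below assumes Python 3.8 or later and that it runs in the main thread; `on_usr1` and `demo_blocked_signal` are names made up for this example.

```python
import signal

received = []


def on_usr1(signum, frame):
    """Handler: just record that the signal was received."""
    received.append(signum)


def demo_blocked_signal():
    """Block SIGUSR1, send it twice, then unblock and count deliveries."""
    received.clear()
    old_handler = signal.signal(signal.SIGUSR1, on_usr1)
    old_mask = signal.pthread_sigmask(signal.SIG_BLOCK, {signal.SIGUSR1})
    try:
        signal.raise_signal(signal.SIGUSR1)
        signal.raise_signal(signal.SIGUSR1)  # same type while one is pending
        pending_while_blocked = signal.SIGUSR1 in signal.sigpending()
        delivered_while_blocked = len(received)
    finally:
        # Restoring the old mask unblocks SIGUSR1, so the pending signal
        # is received here.
        signal.pthread_sigmask(signal.SIG_SETMASK, old_mask)
        signal.signal(signal.SIGUSR1, old_handler)
    return pending_while_blocked, delivered_while_blocked, len(received)


pending, during, after = demo_blocked_signal()
```

While the signal is blocked it shows up as pending and the handler does not run. Once it is unblocked the handler runs a single time, even though the signal was sent twice, because the second one was discarded.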

The kernel maintains a collection of pending signals in the pending bit vector and a collection of blocked signals in the blocked bit vector for each process.

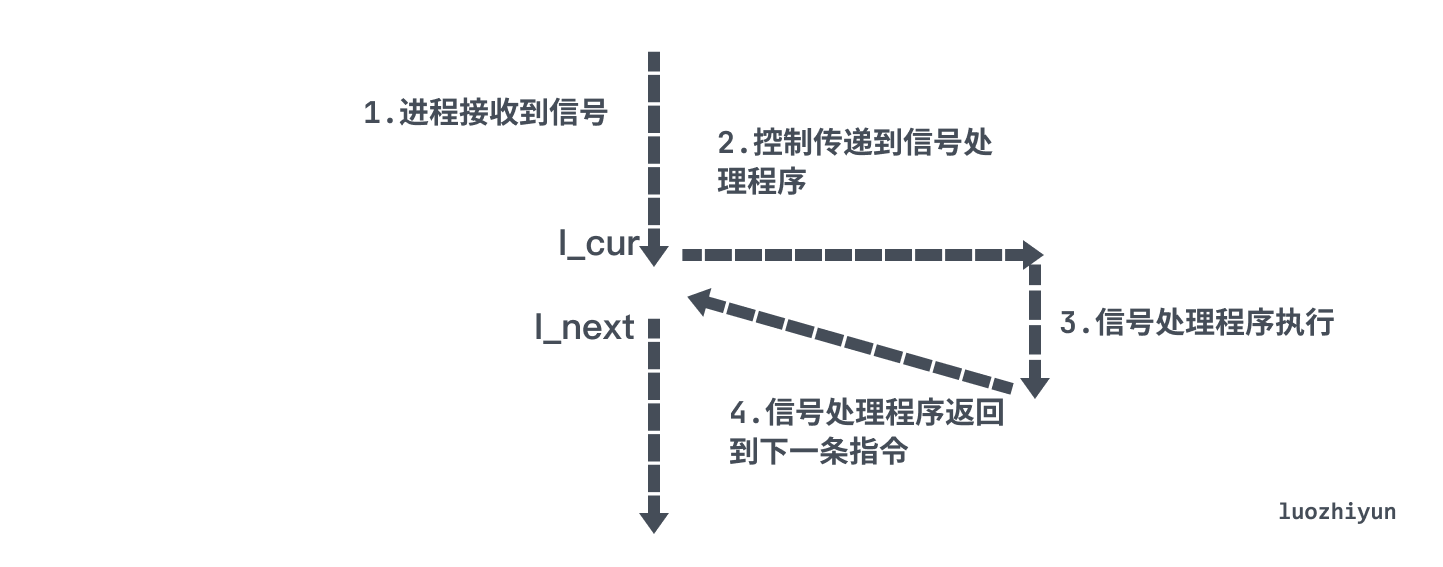

When the kernel switches a process from kernel mode back to user mode, it checks the set of unblocked pending signals (pending & ~blocked) for that process. If the set is empty, the kernel passes control to the next instruction of the process (I_next). If it is non-empty, the kernel selects a signal k from the set (usually the smallest k) and forces the process to receive it. The process then performs some action in response to the signal and, when that is done, returns to its control flow to execute the next instruction (I_next).
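
The bookkeeping above fits in a small Python model. This is a toy sketch, not kernel code: the class `SignalState` and its method names are hypothetical, and signal k is simply mapped to bit k of an integer.

```python
class SignalState:
    """Per-process signal bookkeeping as two bit vectors (toy model)."""

    def __init__(self):
        self.pending = 0
        self.blocked = 0

    def send(self, k):
        # Setting a bit that is already set changes nothing, so a second
        # signal of type k is effectively discarded.
        self.pending |= 1 << k

    def block(self, k):
        self.blocked |= 1 << k

    def unblock(self, k):
        self.blocked &= ~(1 << k)

    def next_signal(self):
        """Return the smallest unblocked pending signal, or None."""
        deliverable = self.pending & ~self.blocked
        if deliverable == 0:
            return None
        k = (deliverable & -deliverable).bit_length() - 1  # lowest set bit
        self.pending &= ~(1 << k)  # receiving clears the pending bit
        return k


state = SignalState()
state.send(10)
state.send(10)   # discarded: signal 10 is already pending
state.send(2)
state.block(2)

first = state.next_signal()    # 10, because 2 is blocked
second = state.next_signal()   # None: only the blocked signal 2 remains
state.unblock(2)
third = state.next_signal()    # 2
```

Because each signal type has a single bit, the model cannot count repeated signals, which matches the rule that a type has at most one pending signal.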

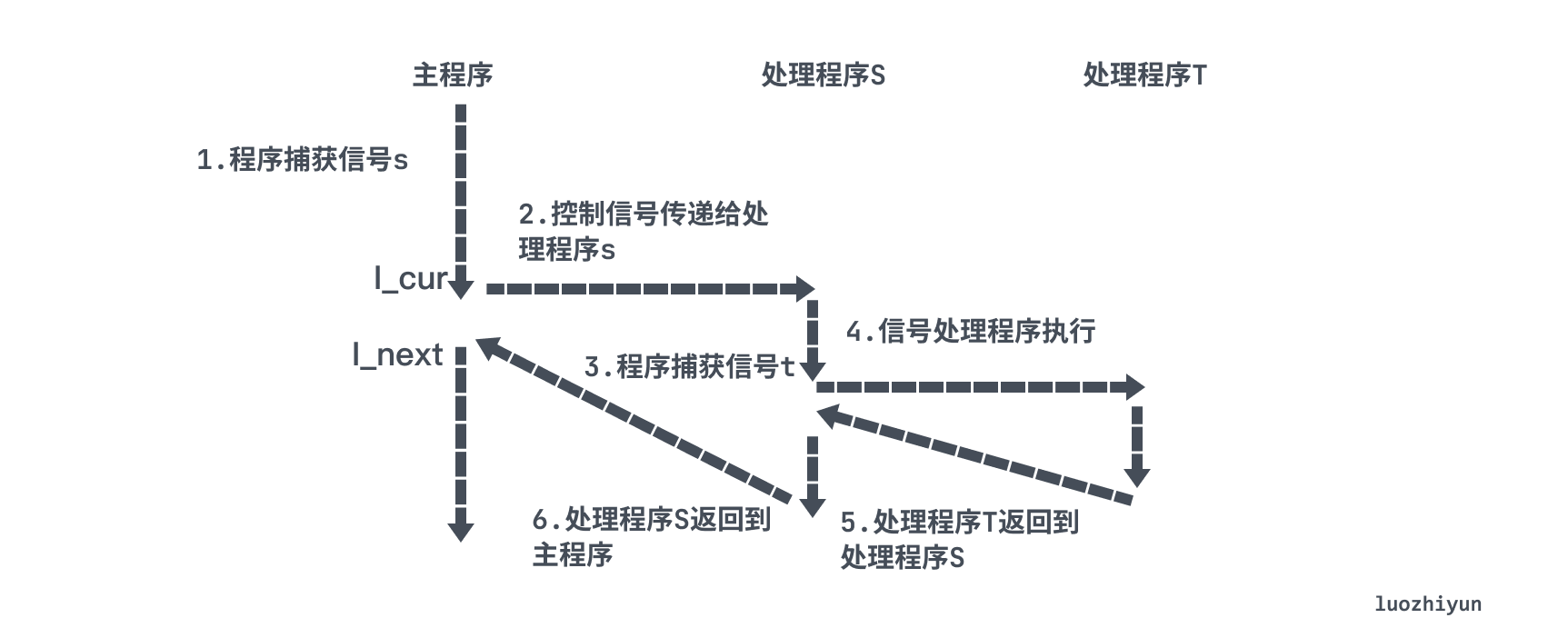

As shown above, signal handlers can be interrupted by other signal handlers.

Summary

This article describes how program execution is controlled in a computer system through exceptions and interrupts. Exceptional control flow occurs at all levels of a computer system and is the basic mechanism for providing concurrency. At the hardware level, interrupt controllers send signals to the processor, allowing a fast response to I/O events. At the operating system level, exceptions bring the program into kernel mode so that it can make system calls. Interrupts also suspend the execution of the current process, which makes switching between processes possible. Finally, signals allow processes and the kernel to interrupt other processes, enabling inter-process control.