Service Overview

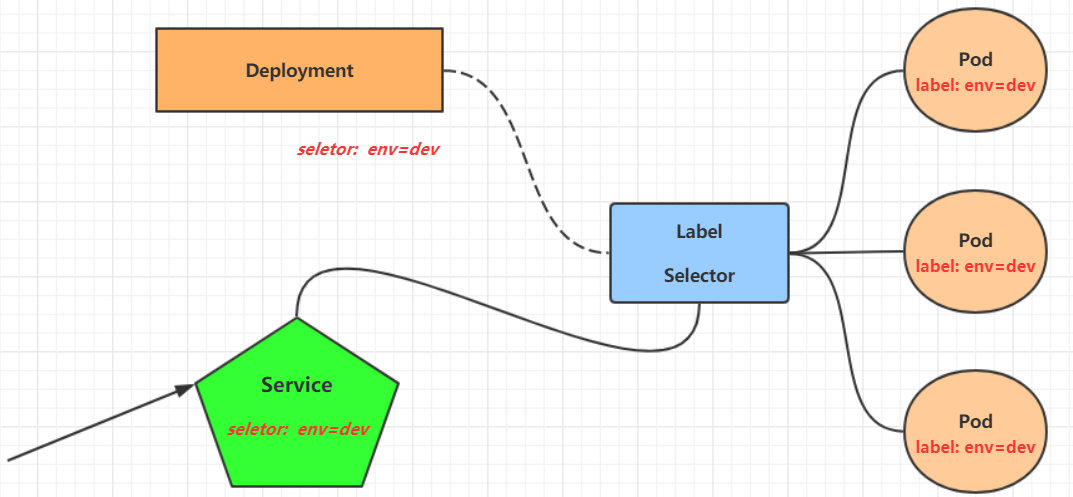

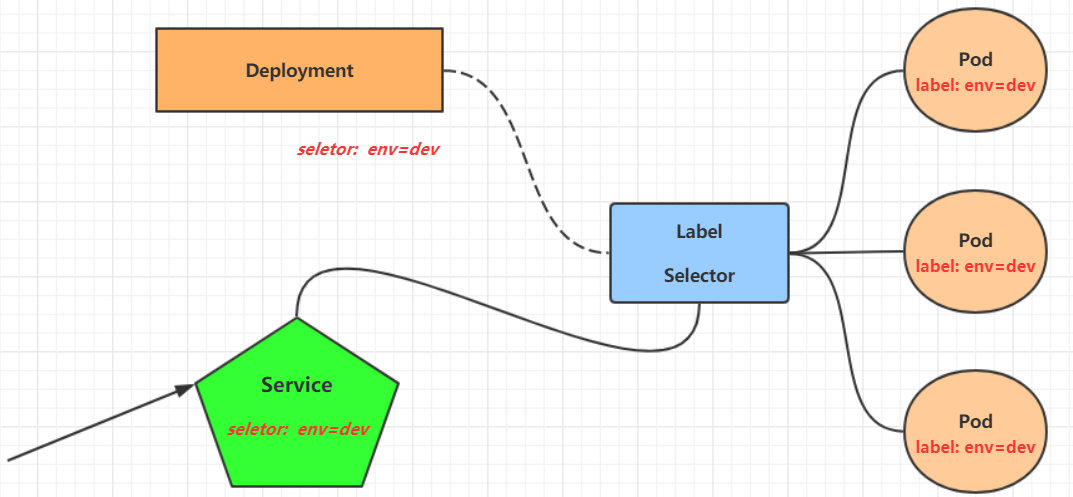

In Kubernetes, a Pod hosts an application, and the application can be reached through the Pod's IP address. However, a Pod's IP address is not fixed: it changes whenever the Pod is recreated, so addressing a Pod directly is not a reliable way to reach a service. To solve this problem, Kubernetes provides the Service resource, which groups multiple Pods that provide the same service and exposes them behind a single, stable entry address. By accessing the Service's entry address, you reach the Pods behind it.

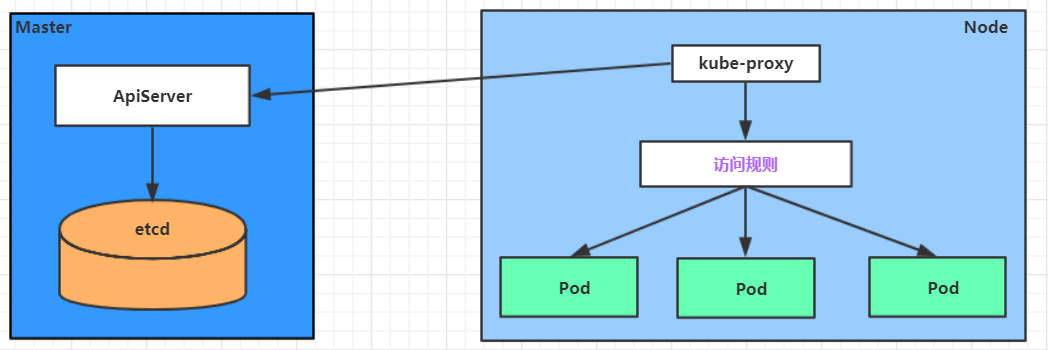

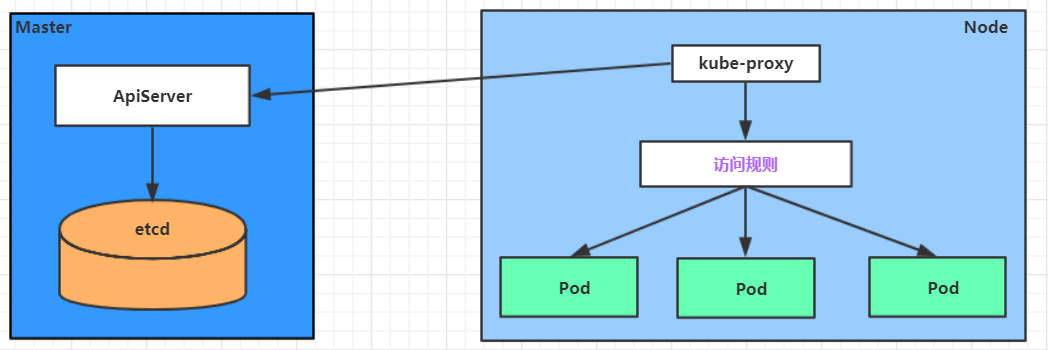

In many respects a Service is only an abstraction; the actual work is done by the kube-proxy process, which runs on every Node. When a Service is created, its definition is written to etcd through the api-server. kube-proxy discovers such changes through a watch mechanism and then converts the latest Service information into the corresponding access rules.
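As a rough illustration of this watch-and-sync behaviour, the following Python sketch keeps an in-memory rule table up to date from a stream of Service events. The event fields (`type`, `name`, `cluster_ip`, `endpoints`) and the function name `apply_event` are hypothetical and chosen only for this example; the real kube-proxy uses the Kubernetes watch API and programs iptables or ipvs rules rather than a dictionary.

```python
from typing import Dict, List, TypedDict


class ServiceEvent(TypedDict):
    type: str             # "ADDED", "MODIFIED" or "DELETED"
    name: str             # Service name (hypothetical field)
    cluster_ip: str       # virtual IP of the Service
    endpoints: List[str]  # backend "ip:port" strings


def apply_event(rules: Dict[str, dict], event: ServiceEvent) -> None:
    """Update the rule table in place for one watch event."""
    if event["type"] == "DELETED":
        rules.pop(event["name"], None)
    elif event["type"] in ("ADDED", "MODIFIED"):
        # Both event types simply replace whatever rule was stored before.
        rules[event["name"]] = {
            "vip": event["cluster_ip"],
            "backends": sorted(event["endpoints"]),
        }


rules: Dict[str, dict] = {}
apply_event(rules, {"type": "ADDED", "name": "web", "cluster_ip": "10.97.1.1",
                    "endpoints": ["10.244.67.84:80", "10.244.52.222:80"]})
apply_event(rules, {"type": "MODIFIED", "name": "web", "cluster_ip": "10.97.1.1",
                    "endpoints": ["10.244.67.84:80"]})
print(rules)
```

The point of the sketch is only that the rule table is derived state: it can always be rebuilt from the latest Service information.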

Working mode

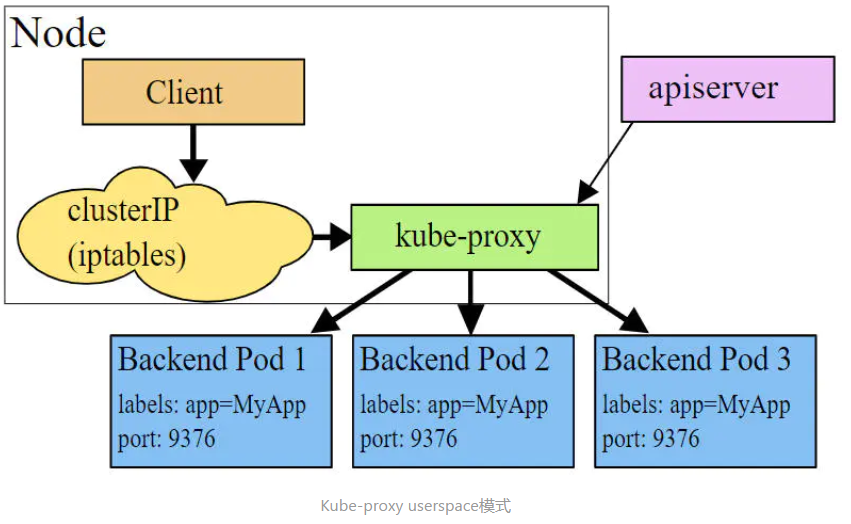

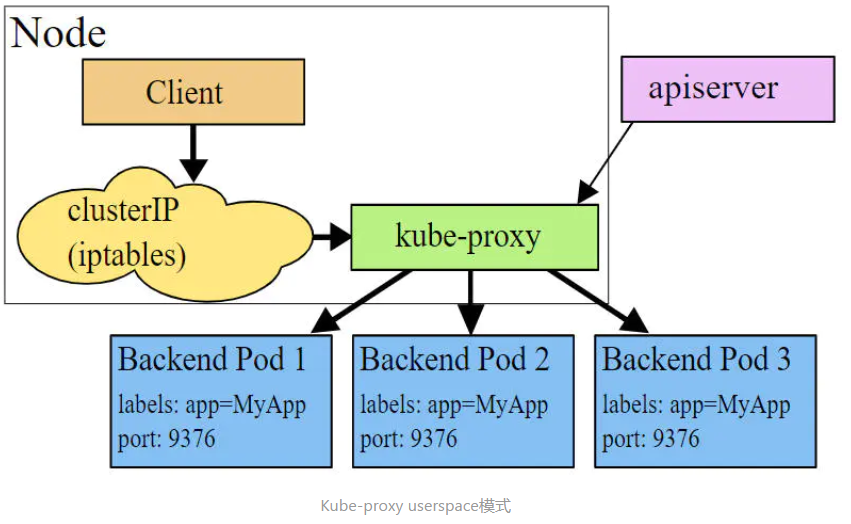

userspace mode

In userspace mode, kube-proxy opens a listening port for each Service, and iptables rules redirect requests sent to the Cluster IP to that port. kube-proxy then selects a Pod that provides the service according to its load-balancing (LB) algorithm, establishes a connection to it, and forwards the request. In this mode, kube-proxy itself acts as a Layer 4 load balancer. Because kube-proxy runs in userspace, every forwarded request involves extra copying of data between the kernel and userspace, so this mode is stable but relatively inefficient.
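The selection step can be sketched in a few lines of Python. This is a simplified model, not kube-proxy's code: it assumes a round-robin LB algorithm and a hypothetical `is_up` callback that stands in for "the connection to the Pod succeeded". Because the proxy process makes the connection itself, it can move on to the next Pod when one is unreachable.

```python
from itertools import cycle
from typing import Callable, Iterator


def forward(picker: Iterator[str], is_up: Callable[[str], bool], attempts: int) -> str:
    """Pick backends round-robin and retry until one accepts the connection."""
    for _ in range(attempts):
        backend = next(picker)
        if is_up(backend):
            return backend
    raise ConnectionError("no healthy backend found")


backends = ["10.244.52.222:80", "10.244.67.79:80", "10.244.67.84:80"]
picker = cycle(backends)
down = {"10.244.52.222:80"}  # pretend this Pod is unreachable

chosen = [forward(picker, lambda b: b not in down, attempts=len(backends))
          for _ in range(3)]
print(chosen)
```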

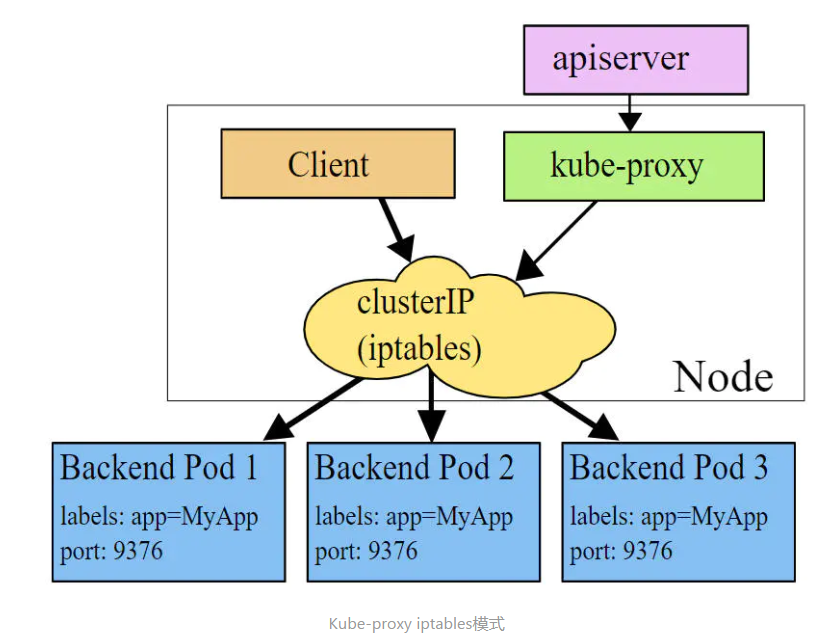

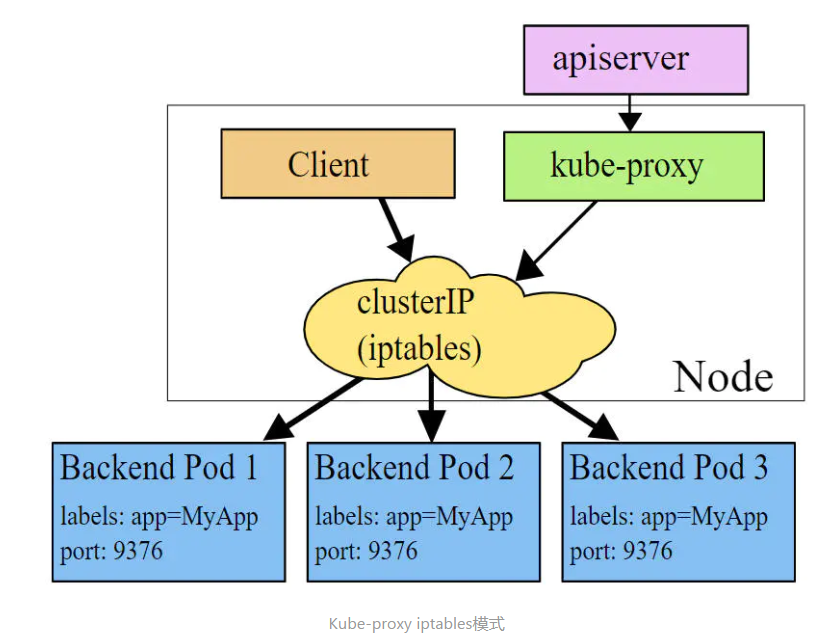

iptables mode

In iptables mode, kube-proxy creates iptables rules for each backend Pod of a Service, and requests to the Cluster IP are redirected directly to a Pod IP. kube-proxy does not act as a Layer 4 load balancer in this mode; it only maintains the iptables rules. This is more efficient than userspace mode, but it does not offer flexible LB policies and cannot retry when the selected backend Pod is unavailable.
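To give a feel for how a plain rule chain can still spread traffic evenly, here is a small Python sketch. It assumes the commonly described scheme in which the rules for a Service are evaluated top to bottom and the i-th of n rules matches with probability 1/(n-i), the last one unconditionally. The function names are made up for this example.

```python
from fractions import Fraction
from typing import List


def rule_probabilities(n: int) -> List[Fraction]:
    """Match probability of each rule in the chain, evaluated top to bottom."""
    return [Fraction(1, n - i) for i in range(n)]


def effective_share(probs: List[Fraction]) -> List[Fraction]:
    """Overall share of traffic that each rule ends up receiving."""
    shares, remaining = [], Fraction(1)
    for p in probs:
        shares.append(remaining * p)  # traffic that reaches this rule and matches
        remaining *= 1 - p            # traffic that falls through to the next rule
    return shares


probs = rule_probabilities(3)
print(probs)                   # per-rule probabilities: 1/3, 1/2, 1
print(effective_share(probs))  # every backend receives 1/3 of the traffic
```

Each packet is matched once and sent to exactly one Pod, which is why this mode has no opportunity to retry on another backend.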

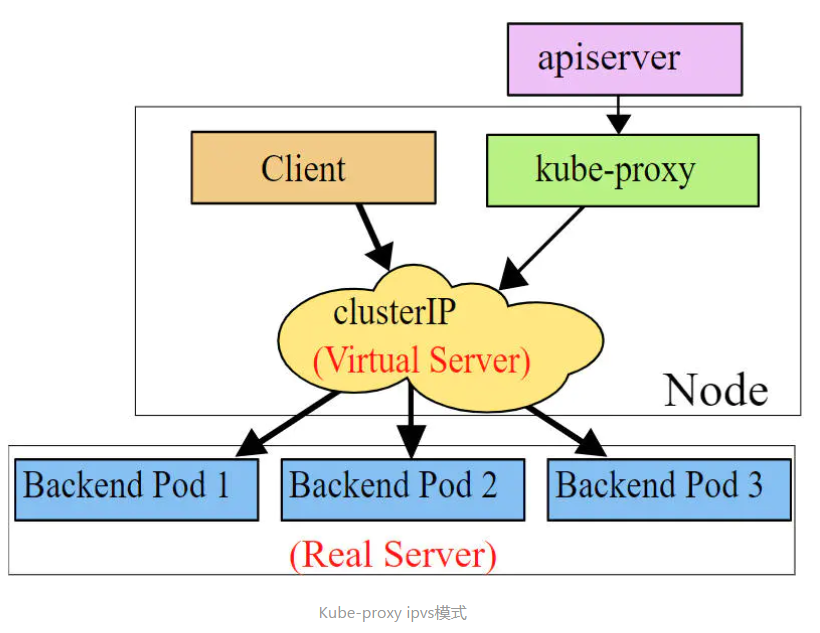

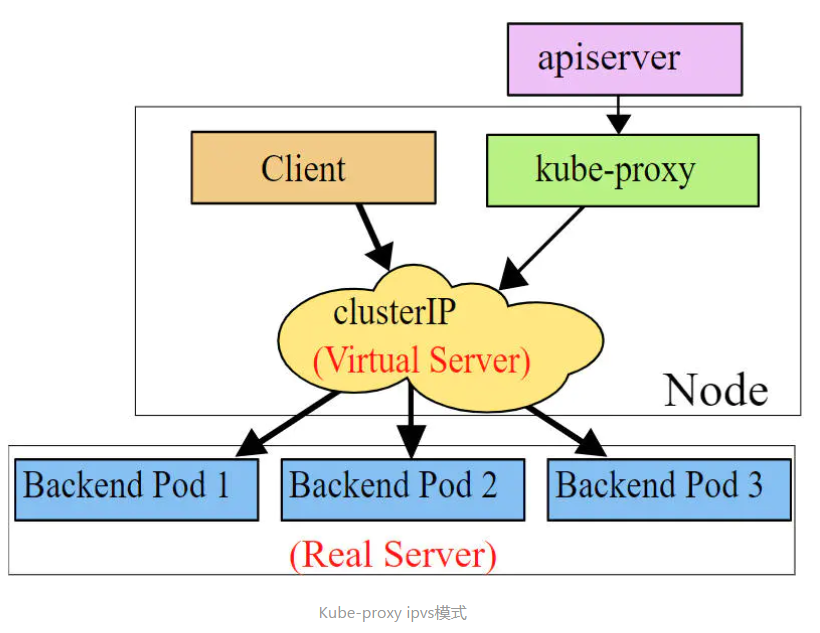

ipvs mode

ipvs mode is similar to iptables mode: kube-proxy watches for Pod changes and creates ipvs rules accordingly. ipvs forwards traffic more efficiently than iptables and also supports more LB algorithms.
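One example of such an algorithm is least-connection scheduling, which sends each new connection to the backend that currently has the fewest. The Python sketch below is a deliberately simplified model (real ipvs also weighs inactive connections and server weights), and the function name `least_connection` is hypothetical.

```python
from typing import Dict


def least_connection(active: Dict[str, int]) -> str:
    """Return the backend with the fewest active connections.

    Ties are broken by the lexicographically smallest address string.
    """
    if not active:
        raise ValueError("no backends available")
    return min(sorted(active), key=lambda backend: active[backend])


conns = {"10.244.52.222:80": 4, "10.244.67.79:80": 1, "10.244.67.84:80": 1}
pick = least_connection(conns)
conns[pick] += 1  # the new connection now counts against the chosen backend
print(pick)
```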

Set working mode


    # This example uses ipvs. The ipvs kernel modules must be installed first
    # (they were already installed when the cluster was set up).

    # Edit the kube-proxy ConfigMap and change mode to "ipvs"
    [root@master yaml]# kubectl edit cm kube-proxy -n kube-system
    configmap/kube-proxy edited
    ......
    mode: "ipvs"
    ......

    # Delete the kube-proxy Pods so that they restart automatically with the new configuration
    [root@master yaml]# kubectl delete pod -l k8s-app=kube-proxy -n kube-system
    pod "kube-proxy-5r4xw" deleted
    pod "kube-proxy-qww6b" deleted
    pod "kube-proxy-th7hm" deleted

    # View the ipvs rules; the output shows that the configuration has taken effect
    [root@master yaml]# ipvsadm -Ln
    IP Virtual Server version 1.2.1 (size=4096)
    Prot LocalAddress:Port Scheduler Flags
      -> RemoteAddress:Port           Forward Weight ActiveConn InActConn
    TCP  10.96.0.1:443 rr
      -> 192.16.1.10:6443             Masq    1      0          0
    TCP  10.96.0.10:53 rr
      -> 10.244.52.213:53             Masq    1      0          0
      -> 10.244.52.218:53             Masq    1      0          0
    TCP  10.96.0.10:9153 rr
      -> 10.244.52.213:9153           Masq    1      0          0
      -> 10.244.52.218:9153           Masq    1      0          0
    UDP  10.96.0.10:53 rr
      -> 10.244.52.213:53             Masq    1      0          0
      -> 10.244.52.218:53             Masq    1      0          0

Service Resource Manifest


    kind: Service
    apiVersion: v1
    metadata:
      name: service
      namespace: <namespace>
    spec:
      selector: # Label selector that determines which Pods the Service proxies
      type: <Service type>
      clusterIP: <IP address of the virtual service>
      sessionAffinity: # Session affinity; supports two options: ClientIP and None
      ports:
        - protocol: <protocol>
          port: <service port>
          targetPort: <Pod port>
          nodePort: <Node port>
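The effect of setting sessionAffinity to ClientIP can be pictured with a small Python model: requests from the same client IP keep going to the same backend until the affinity entry expires. This is only a sketch under stated assumptions. The class name, the round-robin choice for new clients, and the rule that the timer is refreshed on every request are all made up for illustration; the 10800-second default mirrors the documented default timeout for ClientIP affinity.

```python
from itertools import cycle
from typing import Dict, Iterator, List, Tuple


class ClientIPAffinity:
    """Toy model of sticky backend selection keyed by client IP."""

    def __init__(self, backends: List[str], timeout_seconds: float = 10800.0):
        self._picker: Iterator[str] = cycle(backends)
        self._timeout = timeout_seconds
        # client IP -> (backend, time of the last request)
        self._table: Dict[str, Tuple[str, float]] = {}

    def pick(self, client_ip: str, now: float) -> str:
        entry = self._table.get(client_ip)
        if entry is not None and now - entry[1] <= self._timeout:
            backend = entry[0]            # still sticky: reuse the backend
        else:
            backend = next(self._picker)  # new or expired: choose a fresh one
        self._table[client_ip] = (backend, now)
        return backend


lb = ClientIPAffinity(
    ["10.244.52.222:80", "10.244.67.79:80", "10.244.67.84:80"],
    timeout_seconds=60,
)
print(lb.pick("192.168.1.5", now=0))    # first request from this client
print(lb.pick("192.168.1.5", now=30))   # same backend, within the timeout
print(lb.pick("192.168.1.9", now=31))   # a different client gets the next backend
print(lb.pick("192.168.1.5", now=200))  # entry expired, a new backend is chosen
```

With sessionAffinity set to None, every request would simply go through the normal LB choice.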

The service types are as follows:

- ClusterIP: the default. Kubernetes automatically assigns a virtual IP that can only be accessed from inside the cluster.
- NodePort: exposes the Service outside the cluster through a port on each Node.
- LoadBalancer: uses an external load balancer to distribute traffic to the Service. Note that this type requires support from an external cloud environment.
- ExternalName: maps a service outside the cluster to a name inside the cluster so that it can be used directly.

Service usage

Experimental environment

Before using a Service, first create three Pods with a Deployment. Note that the Pods must carry the app=service-env label, which the Services below use as their selector.

Create Service-Env.yaml with the following content


    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: service-env
      namespace: default
    spec:
      replicas: 3
      selector:
        matchLabels:
          app: service-env
      template:
        metadata:
          labels:
            app: service-env
        spec:
          containers:
            - name: nginx
              image: docker.io/library/nginx:1.23.1
              ports:
                - containerPort: 80


    # Create the Deployment
    [root@master yaml]# kubectl create -f Service-Env.yaml
    deployment.apps/service-env created

    # View Pod details
    [root@master yaml]# kubectl get pods -n default -o wide --show-labels
    NAME                           READY   STATUS    RESTARTS   AGE    IP              NODE             NOMINATED NODE   READINESS GATES   LABELS
    service-env-77bd9f74d4-7qntr   1/1     Running   0          108s   10.244.52.222   work2.host.com   <none>           <none>
    service-env-77bd9f74d4-9hs5k   1/1     Running   0          108s   10.244.67.84    work1.host.com   <none>           <none>
    service-env-77bd9f74d4-s5hh5   1/1     Running   0          108s   10.244.67.79    work1.host.com   <none>           <none>

    # To make testing easier, replace each Pod's nginx index page with its Pod IP
    # Modify the containers one by one
    [root@master yaml]# kubectl exec -it service-env-77bd9f74d4-7qntr -n default -- /bin/sh
    # echo 10.244.52.222 > /usr/share/nginx/html/index.html
    # exit
    [root@master yaml]# kubectl exec -it service-env-77bd9f74d4-9hs5k -n default -- /bin/sh
    # echo 10.244.67.84 > /usr/share/nginx/html/index.html
    # exit
    [root@master yaml]# kubectl exec -it service-env-77bd9f74d4-s5hh5 -n default -- /bin/sh
    # echo 10.244.67.79 > /usr/share/nginx/html/index.html
    # exit

    # Test
    [root@master yaml]# curl 10.244.52.222
    10.244.52.222
    [root@master yaml]# curl 10.244.67.84
    10.244.67.84
    [root@master yaml]# curl 10.244.67.79
    10.244.67.79

ClusterIP

Create Service-Clusterip.yaml with the following content


    apiVersion: v1
    kind: Service
    metadata:
      name: service-clusterip
      namespace: default
    spec:
      selector:
        app: service-env
      clusterIP: 10.97.1.1 # IP address of the Service; if omitted, one is assigned automatically
      type: ClusterIP
      ports:
        - port: 80 # Service port
          targetPort: 80 # Pod port


    # Create the Service
    [root@master yaml]# kubectl create -f Service-Clusterip.yaml
    service/service-clusterip created

    # View the Service
    [root@master yaml]# kubectl get svc -n default -o wide
    NAME                TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE     SELECTOR
    kubernetes          ClusterIP   10.96.0.1    <none>        443/TCP   2d18h   <none>
    service-clusterip   ClusterIP   10.97.1.1    <none>        80/TCP    4m42s   app=service-env

    # View the Service details
    # The Endpoints field lists the Pod addresses behind the Service
    [root@master yaml]# kubectl describe svc service-clusterip -n default
    Name:              service-clusterip
    Namespace:         default
    Labels:            <none>
    Annotations:       <none>
    Selector:          app=service-env
    Type:              ClusterIP
    IP Family Policy:  SingleStack
    IP Families:       IPv4
    IP:                10.97.1.1
    IPs:               10.97.1.1
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.52.222:80,10.244.67.79:80,10.244.67.84:80
    Session Affinity:  None
    Events:            <none>

    # View the ipvs mapping rules
    [root@master yaml]# ipvsadm -Ln
    ......
    TCP  10.97.1.1:80 rr
      -> 10.244.52.222:80             Masq    1      0          0
      -> 10.244.67.79:80              Masq    1      0          0
      -> 10.244.67.84:80              Masq    1      0          0
    ......

    # Test by accessing http://10.97.1.1:80
    [root@master yaml]# curl http://10.97.1.1:80
    10.244.67.84
    [root@master yaml]# curl http://10.97.1.1:80
    10.244.67.79
    [root@master yaml]# curl http://10.97.1.1:80
    10.244.52.222

Headless

A headless Service is one that is not assigned a cluster IP; a DNS query for its name returns the addresses of the backing Pods directly. Those addresses come from Endpoints.

Endpoints is a resource object in Kubernetes, stored in etcd, that records the access addresses of all Pods corresponding to a Service. It is generated from the selector in the Service definition. A Service fronts a set of Pods, and those Pods are exposed through Endpoints, the set of addresses that actually implement the service. In other words, the connection between a Service and its Pods is realized through Endpoints.
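The relationship between a selector and the resulting Endpoints can be sketched in Python. This is a simplified model with hypothetical function names and Pod dictionaries: a Pod is included when it is ready and carries every label in the selector. The Pod with IP 10.244.1.9 and the `ready: False` entry are invented purely to show what gets filtered out.

```python
from typing import Dict, List


def matches(selector: Dict[str, str], labels: Dict[str, str]) -> bool:
    """A Pod matches when it carries every key/value pair in the selector."""
    return all(labels.get(key) == value for key, value in selector.items())


def build_endpoints(selector: Dict[str, str], pods: List[dict], target_port: int) -> List[str]:
    """Return the sorted "ip:port" addresses of ready Pods matching the selector."""
    if not selector:
        return []  # in this model, a Service without a selector gets no Endpoints
    return sorted(
        f"{pod['ip']}:{target_port}"
        for pod in pods
        if pod["ready"] and matches(selector, pod["labels"])
    )


pods = [
    {"ip": "10.244.52.222", "labels": {"app": "service-env"}, "ready": True},
    {"ip": "10.244.67.84", "labels": {"app": "service-env"}, "ready": True},
    {"ip": "10.244.1.9", "labels": {"app": "other"}, "ready": True},          # wrong label
    {"ip": "10.244.67.79", "labels": {"app": "service-env"}, "ready": False},  # not ready
]
print(build_endpoints({"app": "service-env"}, pods, 80))
```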

Create Service-Headliness.yaml with the following content


    apiVersion: v1
    kind: Service
    metadata:
      name: service-headliness
      namespace: default
    spec:
      selector:
        app: service-env
      clusterIP: None # Setting clusterIP to None creates a headless Service
      type: ClusterIP
      ports:
        - port: 80
          targetPort: 80


    # Create the Service
    [root@master yaml]# kubectl create -f Service-Headliness.yaml
    service/service-headliness created

    # Check the Service; note that no CLUSTER-IP is assigned
    [root@master yaml]# kubectl get svc service-headliness -n default -o wide
    NAME                 TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE   SELECTOR
    service-headliness   ClusterIP   None         <none>        80/TCP    43s   app=service-env

    # View the Service details
    [root@master yaml]# kubectl describe svc service-headliness -n default
    Name:              service-headliness
    Namespace:         default
    Labels:            <none>
    Annotations:       <none>
    Selector:          app=service-env
    Type:              ClusterIP
    IP Family Policy:  SingleStack
    IP Families:       IPv4
    IP:                None
    IPs:               None
    Port:              <unset>  80/TCP
    TargetPort:        80/TCP
    Endpoints:         10.244.52.222:80,10.244.67.79:80,10.244.67.84:80
    Session Affinity:  None
    Events:            <none>

    # Check the DNS configuration inside a Pod
    [root@master yaml]# kubectl exec -it pc-deployment-6895856946-9b24j -n default -- /bin/sh
    # cat /etc/resolv.conf
    search default.svc.cluster.local svc.cluster.local cluster.local host.com
    nameserver 10.96.0.10
    options ndots:5
    # exit

    # Resolve the Service name; the Pod IPs are returned directly
    [root@master yaml]# dig @10.96.0.10 service-headliness.default.svc.cluster.local +short
    10.244.67.84
    10.244.52.222
    10.244.67.79
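The difference between the DNS answers for a normal ClusterIP Service and a headless one can be summarised with a tiny Python model. It is a sketch only: the function names are hypothetical, `None` stands in for `clusterIP: None`, and the real answers come from the cluster DNS server rather than from code like this.

```python
from typing import List, Optional


def service_fqdn(name: str, namespace: str, cluster_domain: str = "cluster.local") -> str:
    """Build the in-cluster DNS name of a Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


def dns_a_records(cluster_ip: Optional[str], endpoint_ips: List[str]) -> List[str]:
    """A records returned for the Service name in this simplified model."""
    if cluster_ip is None:           # headless: answer with the Pod IPs themselves
        return sorted(endpoint_ips)
    return [cluster_ip]              # normal Service: answer with the single virtual IP


pod_ips = ["10.244.67.84", "10.244.52.222", "10.244.67.79"]
print(service_fqdn("service-headliness", "default"))
print(dns_a_records("10.97.1.1", pod_ips))  # ClusterIP Service
print(dns_a_records(None, pod_ips))         # headless Service
```

With a headless Service the client receives every Pod address and decides for itself which one to connect to.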

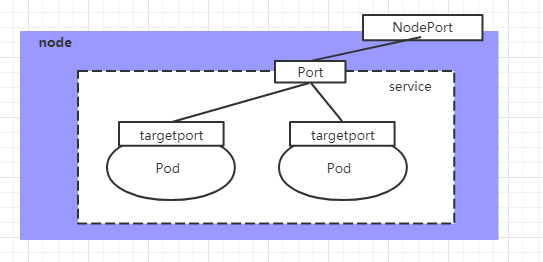

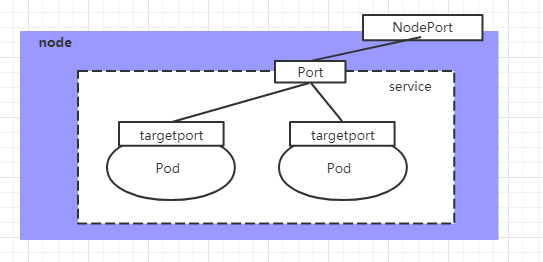

NodePort

To expose a Service outside the cluster, use another Service type called NodePort. It works by mapping the Service port to a port on each Node, after which the Service can be reached at NodeIP:NodePort.

Create Service-Nodeport.yaml, with the following content


    apiVersion: v1
    kind: Service
    metadata:
      name: service-nodeport
      namespace: default
    spec:
      selector:
        app: service-env
      type: NodePort # Service type
      ports:
        - port: 80
          nodePort: 30002 # Node port to bind (default range: 30000-32767); if omitted, one is assigned automatically
          targetPort: 80


    # Create the Service
    [root@master yaml]# kubectl create -f Service-Nodeport.yaml
    service/service-nodeport created

    # Check the Service
    [root@master yaml]# kubectl get svc -n default -o wide
    NAME               TYPE       CLUSTER-IP     EXTERNAL-IP   PORT(S)        AGE   SELECTOR
    service-nodeport   NodePort   10.101.89.79   <none>        80:30002/TCP   20s   app=service-env

    # Test by accessing port 30002 on each node
    [root@master yaml]# curl http://master.host.com:30002
    10.244.67.84
    [root@master yaml]# curl http://work1.host.com:30002
    10.244.67.79
    [root@master yaml]# curl http://work2.host.com:30002
    10.244.67.84
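As a small companion to the test above, the Python sketch below checks a requested node port against the default 30000-32767 range and builds the NodeIP:NodePort URLs for a list of nodes. The helper names are hypothetical, and a cluster can be configured with a different range, so treat the constant as an assumption.

```python
from typing import List

# Default NodePort range, 30000-32767 inclusive (assumed; it is configurable).
DEFAULT_NODE_PORT_RANGE = range(30000, 32768)


def validate_node_port(node_port: int) -> None:
    """Raise ValueError when the port lies outside the default NodePort range."""
    if node_port not in DEFAULT_NODE_PORT_RANGE:
        raise ValueError(
            f"nodePort {node_port} is outside "
            f"{DEFAULT_NODE_PORT_RANGE.start}-{DEFAULT_NODE_PORT_RANGE.stop - 1}"
        )


def node_port_urls(node_hosts: List[str], node_port: int) -> List[str]:
    """Every node answers on the same port, so build one URL per node."""
    validate_node_port(node_port)
    return [f"http://{host}:{node_port}" for host in node_hosts]


urls = node_port_urls(["master.host.com", "work1.host.com", "work2.host.com"], 30002)
print(urls)
```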

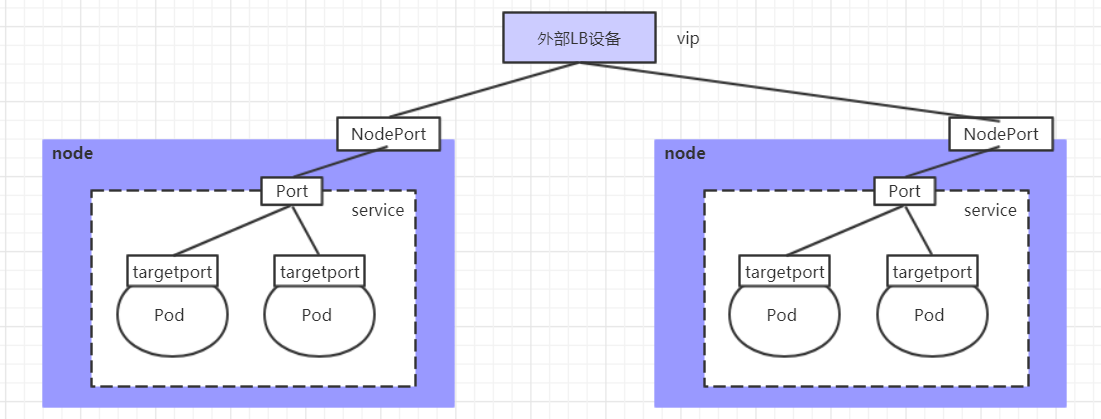

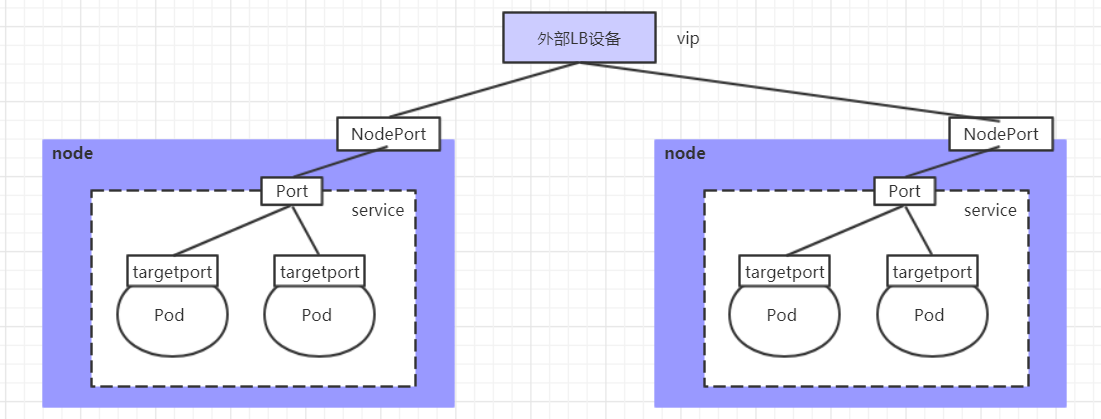

LoadBalancer

LoadBalancer is similar to NodePort in that both expose a port outside the cluster. The difference is that LoadBalancer places a load-balancing device in front of the cluster. This device must be provided by the external environment; requests sent to it from outside are load balanced and then forwarded into the cluster. Because LoadBalancer requires such an external device, it is not demonstrated here.

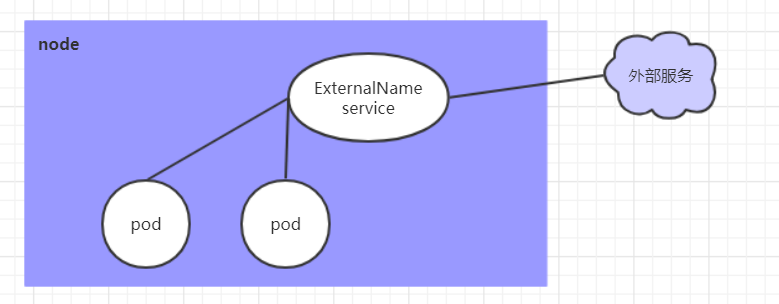

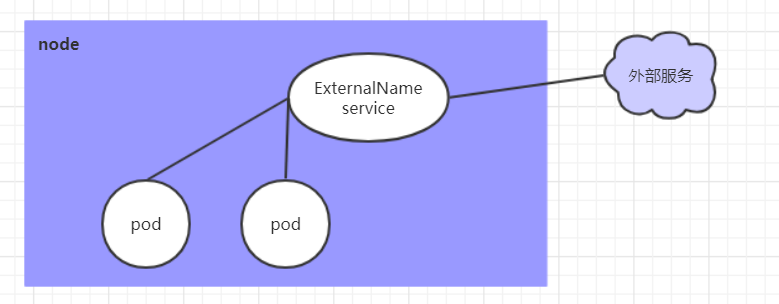

ExternalName

A Service of type ExternalName brings a service outside the cluster into the cluster. It specifies the address of the external service through the externalName attribute, after which Pods inside the cluster can reach the external service by accessing this Service.

Create a Service-Externalname.yaml with the following content


    apiVersion: v1
    kind: Service
    metadata:
      name: service-externalname
      namespace: default
    spec:
      type: ExternalName # Service type
      externalName: www.baidu.com # DNS name of the external service; an IP address string is treated as a DNS name, not as an IP


    # Create the Service
    [root@master yaml]# kubectl create -f Service-Externalname.yaml
    service/service-externalname created

    # Check DNS resolution
    [root@master yaml]# dig @10.96.0.10 service-externalname.default.svc.cluster.local +short
    www.baidu.com.
    39.156.66.14
    39.156.66.18
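The dig output above shows the Service name answering with a CNAME that is then resolved to addresses. The Python sketch below models that lookup against a hand-written zone table; the `resolve` function and the `zone` dictionary are hypothetical, and the records are copied from the output above rather than fetched from a real DNS server.

```python
from typing import Dict, List, Tuple

Record = Tuple[str, str]  # (record type, value)


def resolve(name: str, zone: Dict[str, List[Record]], max_hops: int = 8) -> List[str]:
    """Follow CNAME records until A records are found; return the addresses."""
    for _ in range(max_hops):
        records = zone.get(name, [])
        cnames = [value for rtype, value in records if rtype == "CNAME"]
        if not cnames:
            return [value for rtype, value in records if rtype == "A"]
        name = cnames[0]  # continue the lookup at the alias target
    raise RuntimeError("CNAME chain too long")


zone = {
    "service-externalname.default.svc.cluster.local.": [("CNAME", "www.baidu.com.")],
    "www.baidu.com.": [("A", "39.156.66.14"), ("A", "39.156.66.18")],
}
print(resolve("service-externalname.default.svc.cluster.local.", zone))
```

No proxying is involved for this Service type: once the name is resolved, the client talks to the external addresses directly.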