Previously we explained how to use a Jenkins pipeline to implement CI/CD for Kubernetes applications. Now we will migrate that pipeline to Tekton. The overall idea is the same: divide the workflow into separate tasks. The earlier workflow had the following stages: clone code -> unit testing -> compile and package -> Docker image build/push -> kubectl/Helm deployment.

In Tekton we can convert these stages directly into Tasks. Cloning code does not strictly require a dedicated task; we could instead specify a git input resource on the task that needs the code. Here we will convert the workflow above into a Tekton pipeline step by step, using the same code repository as before: http://git.k8s.local/course/devops-demo.git.

Clone Code

Although we could use a git-type input resource instead of a separate clone task, several tasks here need the code, so the better approach is to clone it once and share it with the other tasks through a Workspace. We can use the git-clone Task from the Tekton Catalog directly and customize it as needed. The corresponding Task is as follows.

```yaml
# task-clone.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: git-clone
  labels:
    app.kubernetes.io/version: "0.8"
  annotations:
    tekton.dev/pipelines.minVersion: "0.29.0"
    tekton.dev/categories: Git
    tekton.dev/tags: git
    tekton.dev/displayName: "git clone"
    tekton.dev/platforms: "linux/amd64,linux/s390x,linux/ppc64le,linux/arm64"
spec:
  description: >-
    These Tasks are Git tasks to work with repositories used by other tasks
    in your Pipeline.

    The git-clone Task will clone a repo from the provided url into the
    output Workspace. By default the repo will be cloned into the root of
    your Workspace. You can clone into a subdirectory by setting this Task's
    subdirectory param. This Task also supports sparse checkouts. To perform
    a sparse checkout, pass a list of comma separated directory patterns to
    this Task's sparseCheckoutDirectories param.
  workspaces:
    - name: output
      description: The git repo will be cloned onto the volume backing this Workspace.
    - name: ssh-directory
      optional: true
      description: |
        A .ssh directory with private key, known_hosts, config, etc. Copied to
        the user's home before git commands are executed. Used to authenticate
        with the git remote when performing the clone. Binding a Secret to this
        Workspace is strongly recommended over other volume types.
    - name: basic-auth
      optional: true
      description: |
        A Workspace containing a .gitconfig and .git-credentials file. These
        will be copied to the user's home before any git commands are run. Any
        other files in this Workspace are ignored. It is strongly recommended
        to use ssh-directory over basic-auth whenever possible and to bind a
        Secret to this Workspace over other volume types.
    - name: ssl-ca-directory
      optional: true
      description: |
        A workspace containing CA certificates, this will be used by Git to
        verify the peer with when fetching or pushing over HTTPS.
  params:
    - name: url
      description: Repository URL to clone from.
      type: string
    - name: revision
      description: Revision to checkout. (branch, tag, sha, ref, etc...)
      type: string
      default: ""
    - name: refspec
      description: Refspec to fetch before checking out revision.
      default: ""
    - name: submodules
      description: Initialize and fetch git submodules.
      type: string
      default: "true"
    - name: depth
      description: Perform a shallow clone, fetching only the most recent N commits.
      type: string
      default: "1"
    - name: sslVerify
      description: Set the `http.sslVerify` global git config. Setting this to `false` is not advised unless you are sure that you trust your git remote.
      type: string
      default: "true"
    - name: crtFileName
      description: file name of mounted crt using ssl-ca-directory workspace. default value is ca-bundle.crt.
      type: string
      default: "ca-bundle.crt"
    - name: subdirectory
      description: Subdirectory inside the `output` Workspace to clone the repo into.
      type: string
      default: ""
    - name: sparseCheckoutDirectories
      description: Define the directory patterns to match or exclude when performing a sparse checkout.
      type: string
      default: ""
    - name: deleteExisting
      description: Clean out the contents of the destination directory if it already exists before cloning.
      type: string
      default: "true"
    - name: httpProxy
      description: HTTP proxy server for non-SSL requests.
      type: string
      default: ""
    - name: httpsProxy
      description: HTTPS proxy server for SSL requests.
      type: string
      default: ""
    - name: noProxy
      description: Opt out of proxying HTTP/HTTPS requests.
      type: string
      default: ""
    - name: verbose
      description: Log the commands that are executed during `git-clone`'s operation.
      type: string
      default: "true"
    - name: gitInitImage
      description: The image providing the git-init binary that this Task runs.
      type: string
      default: "cnych/tekton-git-init:v0.29.0"
    - name: userHome
      description: |
        Absolute path to the user's home directory.
      type: string
      default: "/home/nonroot"
  results:
    - name: commit
      description: The precise commit SHA that was fetched by this Task.
    - name: url
      description: The precise URL that was fetched by this Task.
  steps:
    - name: clone
      image: "$(params.gitInitImage)"
      env:
        - name: HOME
          value: "$(params.userHome)"
        - name: PARAM_URL
          value: $(params.url)
        - name: PARAM_REVISION
          value: $(params.revision)
        - name: PARAM_REFSPEC
          value: $(params.refspec)
        - name: PARAM_SUBMODULES
          value: $(params.submodules)
        - name: PARAM_DEPTH
          value: $(params.depth)
        - name: PARAM_SSL_VERIFY
          value: $(params.sslVerify)
        - name: PARAM_CRT_FILENAME
          value: $(params.crtFileName)
        - name: PARAM_SUBDIRECTORY
          value: $(params.subdirectory)
        - name: PARAM_DELETE_EXISTING
          value: $(params.deleteExisting)
        - name: PARAM_HTTP_PROXY
          value: $(params.httpProxy)
        - name: PARAM_HTTPS_PROXY
          value: $(params.httpsProxy)
        - name: PARAM_NO_PROXY
          value: $(params.noProxy)
        - name: PARAM_VERBOSE
          value: $(params.verbose)
        - name: PARAM_SPARSE_CHECKOUT_DIRECTORIES
          value: $(params.sparseCheckoutDirectories)
        - name: PARAM_USER_HOME
          value: $(params.userHome)
        - name: WORKSPACE_OUTPUT_PATH
          value: $(workspaces.output.path)
        - name: WORKSPACE_SSH_DIRECTORY_BOUND
          value: $(workspaces.ssh-directory.bound)
        - name: WORKSPACE_SSH_DIRECTORY_PATH
          value: $(workspaces.ssh-directory.path)
        - name: WORKSPACE_BASIC_AUTH_DIRECTORY_BOUND
          value: $(workspaces.basic-auth.bound)
        - name: WORKSPACE_BASIC_AUTH_DIRECTORY_PATH
          value: $(workspaces.basic-auth.path)
        - name: WORKSPACE_SSL_CA_DIRECTORY_BOUND
          value: $(workspaces.ssl-ca-directory.bound)
        - name: WORKSPACE_SSL_CA_DIRECTORY_PATH
          value: $(workspaces.ssl-ca-directory.path)
      securityContext:
        runAsNonRoot: true
        runAsUser: 65532
      script: |
        #!/usr/bin/env sh
        set -eu

        if [ "${PARAM_VERBOSE}" = "true" ] ; then
          set -x
        fi

        if [ "${WORKSPACE_BASIC_AUTH_DIRECTORY_BOUND}" = "true" ] ; then
          cp "${WORKSPACE_BASIC_AUTH_DIRECTORY_PATH}/.git-credentials" "${PARAM_USER_HOME}/.git-credentials"
          cp "${WORKSPACE_BASIC_AUTH_DIRECTORY_PATH}/.gitconfig" "${PARAM_USER_HOME}/.gitconfig"
          chmod 400 "${PARAM_USER_HOME}/.git-credentials"
          chmod 400 "${PARAM_USER_HOME}/.gitconfig"
        fi

        if [ "${WORKSPACE_SSH_DIRECTORY_BOUND}" = "true" ] ; then
          cp -R "${WORKSPACE_SSH_DIRECTORY_PATH}" "${PARAM_USER_HOME}"/.ssh
          chmod 700 "${PARAM_USER_HOME}"/.ssh
          chmod -R 400 "${PARAM_USER_HOME}"/.ssh/*
        fi

        if [ "${WORKSPACE_SSL_CA_DIRECTORY_BOUND}" = "true" ] ; then
          export GIT_SSL_CAPATH="${WORKSPACE_SSL_CA_DIRECTORY_PATH}"
          if [ "${PARAM_CRT_FILENAME}" != "" ] ; then
            export GIT_SSL_CAINFO="${WORKSPACE_SSL_CA_DIRECTORY_PATH}/${PARAM_CRT_FILENAME}"
          fi
        fi

        CHECKOUT_DIR="${WORKSPACE_OUTPUT_PATH}/${PARAM_SUBDIRECTORY}"

        cleandir() {
          # Delete any existing contents of the repo directory if it exists.
          #
          # We don't just "rm -rf ${CHECKOUT_DIR}" because ${CHECKOUT_DIR} might be "/"
          # or the root of a mounted volume.
          if [ -d "${CHECKOUT_DIR}" ] ; then
            # Delete non-hidden files and directories
            rm -rf "${CHECKOUT_DIR:?}"/*
            # Delete files and directories starting with . but excluding ..
            rm -rf "${CHECKOUT_DIR}"/.[!.]*
            # Delete files and directories starting with .. plus any other character
            rm -rf "${CHECKOUT_DIR}"/..?*
          fi
        }

        if [ "${PARAM_DELETE_EXISTING}" = "true" ] ; then
          cleandir
        fi

        test -z "${PARAM_HTTP_PROXY}" || export HTTP_PROXY="${PARAM_HTTP_PROXY}"
        test -z "${PARAM_HTTPS_PROXY}" || export HTTPS_PROXY="${PARAM_HTTPS_PROXY}"
        test -z "${PARAM_NO_PROXY}" || export NO_PROXY="${PARAM_NO_PROXY}"

        /ko-app/git-init \
          -url="${PARAM_URL}" \
          -revision="${PARAM_REVISION}" \
          -refspec="${PARAM_REFSPEC}" \
          -path="${CHECKOUT_DIR}" \
          -sslVerify="${PARAM_SSL_VERIFY}" \
          -submodules="${PARAM_SUBMODULES}" \
          -depth="${PARAM_DEPTH}" \
          -sparseCheckoutDirectories="${PARAM_SPARSE_CHECKOUT_DIRECTORIES}"
        cd "${CHECKOUT_DIR}"
        RESULT_SHA="$(git rev-parse HEAD)"
        EXIT_CODE="$?"
        if [ "${EXIT_CODE}" != 0 ] ; then
          exit "${EXIT_CODE}"
        fi
        printf "%s" "${RESULT_SHA}" > "$(results.commit.path)"
        printf "%s" "${PARAM_URL}" > "$(results.url.path)"
```

In general we only need to bind the output workspace, where the cloned code is persisted, and pass the url and revision parameters; the remaining parameters can keep their defaults.
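To try the clone task on its own before wiring it into a pipeline, a TaskRun along the following lines could be used. This is a minimal sketch, not part of the original setup: the file name and the generateName prefix are hypothetical, and it assumes the go-repo-pvc claim and the tekton-build-sa ServiceAccount defined later in this article already exist.

```yaml
# taskrun-clone.yaml (hypothetical file name)
apiVersion: tekton.dev/v1beta1
kind: TaskRun
metadata:
  generateName: git-clone-run- # hypothetical name prefix
spec:
  serviceAccountName: tekton-build-sa # assumed to carry the GitLab credentials
  taskRef:
    name: git-clone
  workspaces:
    - name: output # the only workspace that is not optional
      persistentVolumeClaim:
        claimName: go-repo-pvc
  params:
    - name: url
      value: http://git.k8s.local/course/devops-demo.git
    - name: revision
      value: main
```

Because the manifest uses generateName, it has to be submitted with kubectl create rather than kubectl apply.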

Unit Testing

The unit test stage is simple: normally it just runs a test command. We are not running real unit tests here, so a placeholder Task like the one below is enough.

```yaml
# task-test.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: test
spec:
  steps:
    - name: test
      image: golang:1.14.2-alpine3.11
      command: ["echo"]
      args: ["this is a test task"]
```
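If the repository does contain Go tests, the placeholder could be replaced by something like the sketch below. It is an assumption rather than part of the original pipeline: it adds a go-repo workspace so that the step can see the cloned code, which means the pipeline would also have to bind that workspace to the test task. CGO_ENABLED is set to "0" on the assumption that the Alpine-based Go image ships without a C compiler.

```yaml
# task-test.yaml (variant that runs real tests; a sketch)
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: test
spec:
  workspaces:
    - name: go-repo # must be bound to the workspace holding the cloned code
  steps:
    - name: test
      image: golang:1.14.2-alpine3.11
      workingDir: $(workspaces.go-repo.path)
      env:
        - name: GOPROXY
          value: https://goproxy.cn
        - name: CGO_ENABLED # assumption: no C toolchain in the image
          value: "0"
      script: |
        #!/bin/sh
        set -e
        go test -v ./...
```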

Compile and package

The next stage is compile and package. Since our Dockerfile is not a multi-stage build, we first need a task that compiles the application into a binary, and then pass that binary to the next task for the image build.

With the goal of this stage defined, writing the task is straightforward. Create the Task as shown below; it declares a workspace so that it can access the code checked out by the clone task.

```yaml
# task-build.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: build
spec:
  workspaces:
    - name: go-repo
      mountPath: /workspace/repo
  steps:
    - name: build
      image: golang:1.14.2-alpine3.11
      workingDir: /workspace/repo
      script: |
        go build -v -o demo-app
      env:
        - name: GOPROXY
          value: https://goproxy.cn
        - name: GOOS
          value: linux
        - name: GOARCH
          value: amd64
```

The build task is also simple. The environment variables it needs are injected through env, though setting them inside script would work too, as would running the build with command instead of script. The generated demo-app binary is kept in the root of the code directory so that it can be shared through the workspace as well.
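For comparison, the alternative mentioned above, setting the variables inside script instead of env, might look like the following sketch. The behavior is intended to be the same as the Task above; only the place where the variables are defined changes.

```yaml
# task-build.yaml (variant with the variables exported inside the script; a sketch)
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: build
spec:
  workspaces:
    - name: go-repo
      mountPath: /workspace/repo
  steps:
    - name: build
      image: golang:1.14.2-alpine3.11
      workingDir: /workspace/repo
      script: |
        #!/bin/sh
        set -e
        # Same values as the env entries in the original Task
        export GOPROXY=https://goproxy.cn
        export GOOS=linux
        export GOARCH=amd64
        go build -v -o demo-app
```

Keeping the values in env makes them visible in the Task definition at a glance, while the script form keeps everything in one place.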

Docker image

Next we build and push the Docker image. We have previously introduced three ways to build images: Kaniko, DooD, and DinD. Here we use DinD. The Dockerfile for the image is very simple.

```dockerfile
FROM alpine
WORKDIR /home

# Switch the Alpine package source to the USTC mirror
RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.ustc.edu.cn/g' /etc/apk/repositories && \
  apk update && \
  apk upgrade && \
  apk add ca-certificates && update-ca-certificates && \
  apk add --update tzdata && \
  rm -rf /var/cache/apk/*

COPY demo-app /home/
ENV TZ=Asia/Shanghai

EXPOSE 8080

ENTRYPOINT ./demo-app
```

The Dockerfile copies the compiled binary directly into the image, so this task also needs the Workspace to get the output of the build task. Here we use a sidecar to implement the DinD mode; the task is shown below.

```yaml
# task-docker.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: docker
spec:
  workspaces:
    - name: go-repo
  params:
    - name: image
      description: Reference of the image docker will produce.
    - name: registry_mirror
      description: Specify the docker registry mirror
      default: ""
    - name: registry_url
      description: private docker images registry url
  steps:
    - name: docker-build # Build step
      image: docker:stable
      env:
        - name: DOCKER_HOST # Connect to the sidecar over TCP with TLS
          value: tcp://localhost:2376
        - name: DOCKER_TLS_VERIFY # Verify TLS
          value: "1"
        - name: DOCKER_CERT_PATH # Certificates generated by the sidecar daemon
          value: /certs/client
        - name: DOCKER_PASSWORD
          valueFrom:
            secretKeyRef:
              name: harbor-auth
              key: password
        - name: DOCKER_USERNAME
          valueFrom:
            secretKeyRef:
              name: harbor-auth
              key: username
      workingDir: $(workspaces.go-repo.path)
      script: | # docker build commands
        docker login $(params.registry_url) -u $DOCKER_USERNAME -p $DOCKER_PASSWORD
        docker build --no-cache -f ./Dockerfile -t $(params.image) .
        docker push $(params.image)
      volumeMounts: # Mount the certificate directory
        - mountPath: /certs/client
          name: dind-certs
  sidecars: # The sidecar provides the docker daemon, giving a true DinD mode
    - image: docker:dind
      name: server
      args:
        - --storage-driver=vfs
        - --userland-proxy=false
        - --debug
        - --insecure-registry=$(params.registry_url)
        - --registry-mirror=$(params.registry_mirror)
      securityContext:
        privileged: true
      env:
        - name: DOCKER_TLS_CERTDIR # Write the generated certificates to a path shared with the client
          value: /certs
      volumeMounts:
        - mountPath: /certs/client
          name: dind-certs
        - mountPath: /var/lib/docker
          name: docker-root
      readinessProbe: # Wait for the dind daemon to generate the certificates it shares with the client
        periodSeconds: 1
        exec:
          command: ["ls", "/certs/client/ca.pem"]
  volumes:
    - name: dind-certs # An emptyDir is enough for the certificates
      emptyDir: {}
    - name: docker-root
      persistentVolumeClaim:
        claimName: docker-root-pvc
```

The key point of this task is that it declares a Workspace; when it runs, it must be bound to the same Workspace as the build task so that it can pick up the demo-app binary compiled above.

Deployment

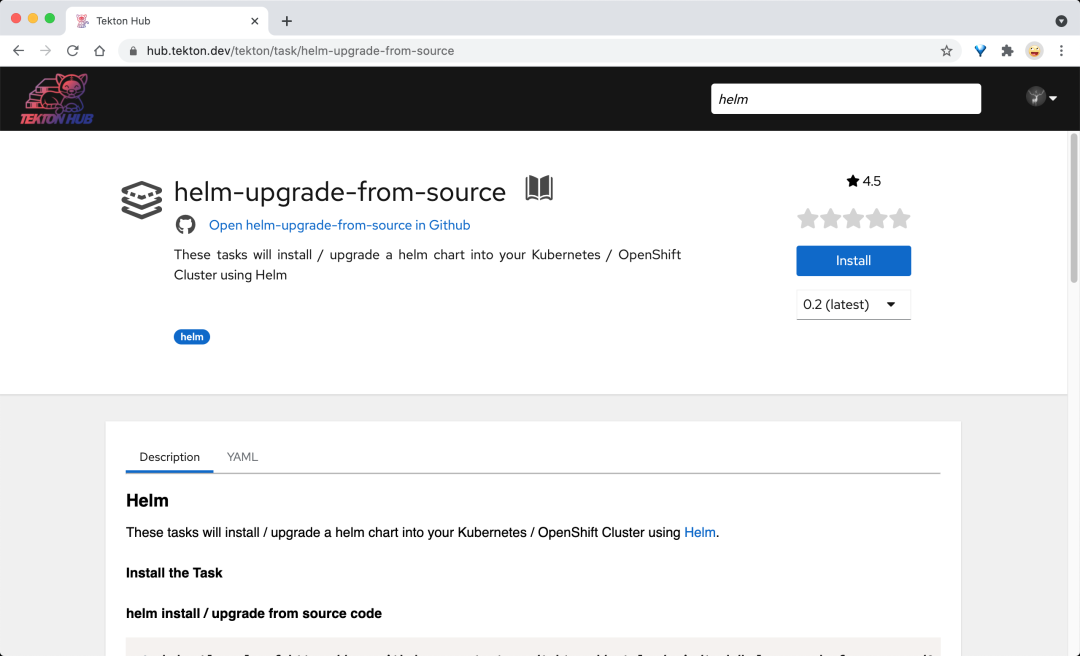

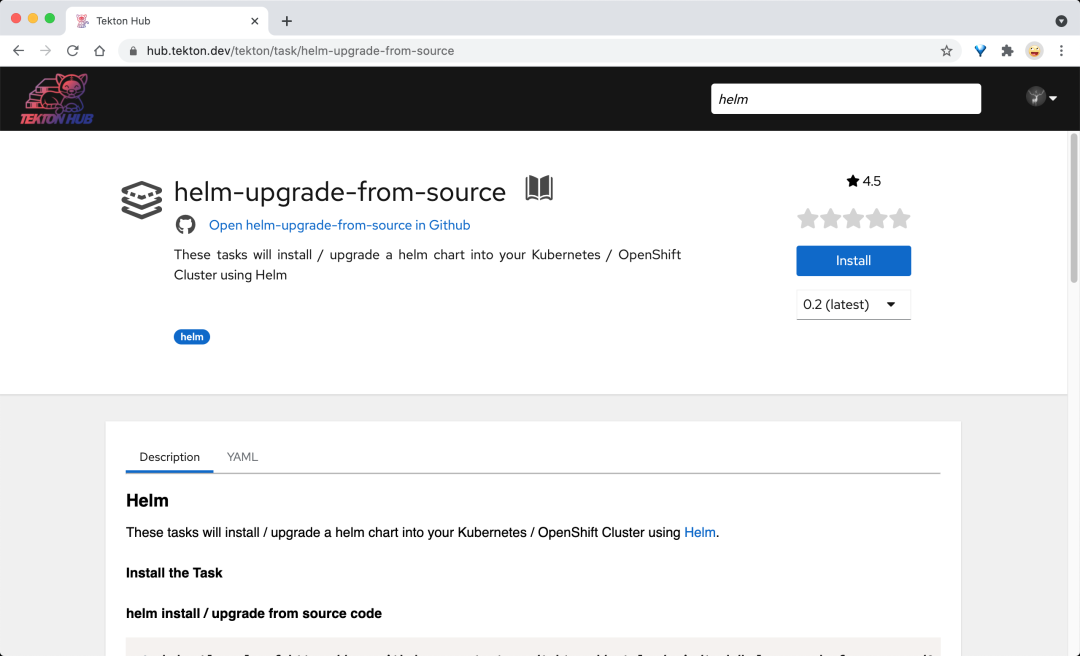

The next stage is deployment. Here too we can follow the earlier Jenkins pipeline: the project includes a Helm chart, so we can deploy with Helm directly. To do that we need an image containing the helm command. We could write such a task ourselves, or look in the Catalog on hub.tekton.dev, which has many common tasks; helm-upgrade-from-source is one that fully meets our needs.

The Catalog entry also comes with full documentation. We can download the task and customize it to suit our needs, as shown below.

```yaml
# task-deploy.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: deploy
  labels:
    app.kubernetes.io/version: "0.3"
  annotations:
    tekton.dev/pipelines.minVersion: "0.12.1"
    tekton.dev/categories: Deployment
    tekton.dev/tags: helm
    tekton.dev/platforms: "linux/amd64,linux/s390x,linux/ppc64le,linux/arm64"
spec:
  description: >-
    These tasks will install / upgrade a helm chart into your Kubernetes /
    OpenShift Cluster using Helm
  params:
    - name: charts_dir
      description: The directory in source that contains the helm chart
    - name: release_version
      description: The helm release version in semantic versioning format
      default: "v1.0.0"
    - name: release_name
      description: The helm release name
      default: "helm-release"
    - name: release_namespace
      description: The helm release namespace
      default: ""
    - name: overwrite_values
      description: "Specify the values you want to overwrite, comma separated: autoscaling.enabled=true,replicas=1"
      default: ""
    - name: values_file
      description: "The values file to be used"
      default: "values.yaml"
    - name: helm_image
      description: "helm image to be used"
      default: "docker.io/lachlanevenson/k8s-helm@sha256:5c792f29950b388de24e7448d378881f68b3df73a7b30769a6aa861061fd08ae" #tag: v3.6.0
    - name: upgrade_extra_params
      description: "Extra parameters passed for the helm upgrade command"
      default: ""
  workspaces:
    - name: source
  results:
    - name: helm-status
      description: Helm deploy status
  steps:
    - name: upgrade
      image: $(params.helm_image)
      workingDir: /workspace/source
      script: |
        echo current installed helm releases
        helm list --namespace "$(params.release_namespace)"
        echo installing helm chart...
        helm upgrade --install --wait --values "$(params.charts_dir)/$(params.values_file)" --namespace "$(params.release_namespace)" --version "$(params.release_version)" "$(params.release_name)" "$(params.charts_dir)" --debug --set "$(params.overwrite_values)" $(params.upgrade_extra_params)
        status=`helm status $(params.release_name) --namespace "$(params.release_namespace)" | awk '/STATUS/ {print $2}'`
        echo ${status} | tr -d "\n" | tee $(results.helm-status.path)
```

Since our Helm chart lives in the code repository, we don't need to fetch it from a chart repository; we only need to specify the chart path. All other configurable options are exposed through params, which makes the task flexible. Finally, the task also reads the status of the Helm release and writes it to a result for use by subsequent tasks.
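As an illustration of how a later task could consume that result, the fragment below passes helm-status into a small inline task inside a Pipeline. It is only a sketch: the report task, its status parameter, and the alpine image are hypothetical and not part of the original pipeline.

```yaml
# Fragment of spec.tasks in a Pipeline (hypothetical "report" task)
- name: report
  params:
    - name: status
      # Referencing the result also makes this task wait for deploy to finish
      value: $(tasks.deploy.results.helm-status)
  taskSpec:
    params:
      - name: status
        type: string
    steps:
      - name: print
        image: alpine # assumption: any image with a shell works
        script: |
          echo "helm release status: $(params.status)"
```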

Rollback

After deployment completes, the application may need to be rolled back, because the deployed release may be faulty. Ideally a rollback is triggered manually, but in some scenarios, for example when the Helm deployment has clearly failed, we can roll back automatically. That means we must detect a failed deployment and only then run the rollback; the task does not always run. In a pipeline this can be done with WhenExpressions: to run a task only when certain conditions are met, guard it with the when field, which holds a list of WhenExpressions.

A WhenExpression consists of three components: Input, Operator, and Values.

- Input is the input to the WhenExpression. It can be a static value or a variable (Params or Results); if no input is provided, it defaults to an empty string.
- Operator expresses the relationship between Input and Values. The valid operators are in and notin.
- Values is an array of strings. It must be provided and must not be empty; it can contain static values or variables (Params, Results, or Workspace bindings).

When WhenExpressions are configured on a task, they are evaluated before the task is executed. If the result is true, the task runs; if it is false, the task is skipped.
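The three components can be seen together in a short fragment such as the one below. It is a sketch only: the auto_rollback pipeline parameter is hypothetical, and "deployed" is assumed to be the status Helm reports for a healthy release. When several expressions are listed, all of them must evaluate to true for the task to run.

```yaml
# Fragment of spec.tasks in a Pipeline (illustrative only)
- name: rollback
  taskRef:
    name: rollback
  when:
    # Static value compared against a (hypothetical) pipeline parameter
    - input: "$(params.auto_rollback)"
      operator: in
      values: ["true"]
    # Result of an earlier task; runs only if the status is NOT "deployed"
    - input: "$(tasks.deploy.results.helm-status)"
      operator: notin
      values: ["deployed"]
```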

The rollback task we created here is shown below.

```yaml
# task-rollback.yaml
apiVersion: tekton.dev/v1beta1
kind: Task
metadata:
  name: rollback
spec:
  params:
    - name: release_name
      description: The helm release name
    - name: release_namespace
      description: The helm release namespace
      default: ""
    - name: helm_image
      description: "helm image to be used"
      default: "docker.io/lachlanevenson/k8s-helm@sha256:5c792f29950b388de24e7448d378881f68b3df73a7b30769a6aa861061fd08ae" #tag: v3.6.0
  steps:
    - name: rollback
      image: $(params.helm_image)
      script: |
        echo rollback current installed helm releases
        helm rollback $(params.release_name) --namespace $(params.release_namespace)
```

Pipeline

Now that all the tasks in the workflow have been created, we can chain them together into a Pipeline by referencing the Tasks defined above and declaring the resources and workspaces they use.

```yaml
# pipeline.yaml
apiVersion: tekton.dev/v1beta1
kind: Pipeline
metadata:
  name: pipeline
spec:
  workspaces:
    - name: go-repo-pvc
  params:
    # Define the code repository parameters
    - name: git_url
    - name: revision
      type: string
      default: "main"
    # Define the image parameters
    - name: image
    - name: registry_url
      type: string
      default: "harbor.k8s.local"
    - name: registry_mirror
      type: string
      default: "https://mirror.baidubce.com"
    # Define the Helm chart parameters
    - name: charts_dir
    - name: release_name
    - name: release_namespace
      default: "default"
    - name: overwrite_values
      default: ""
    - name: values_file
      default: "values.yaml"
  tasks: # Add the tasks to the pipeline
    - name: clone
      taskRef:
        name: git-clone
      workspaces:
        - name: output
          workspace: go-repo-pvc
      params:
        - name: url
          value: $(params.git_url)
        - name: revision
          value: $(params.revision)
    - name: test
      taskRef:
        name: test
      runAfter:
        - clone
    - name: build # Compile the binary
      taskRef:
        name: build
      runAfter: # The build task runs only after the test and clone tasks have finished
        - test
        - clone
      workspaces: # Pass the workspace on
        - name: go-repo
          workspace: go-repo-pvc
    - name: docker # Build and push the Docker image
      taskRef:
        name: docker
      runAfter:
        - build
      workspaces:
        - name: go-repo
          workspace: go-repo-pvc
      params:
        - name: image
          value: $(params.image)
        - name: registry_url
          value: $(params.registry_url)
        - name: registry_mirror
          value: $(params.registry_mirror)
    - name: deploy
      taskRef:
        name: deploy
      runAfter:
        - docker
      workspaces:
        - name: source
          workspace: go-repo-pvc
      params:
        - name: charts_dir
          value: $(params.charts_dir)
        - name: release_name
          value: $(params.release_name)
        - name: release_namespace
          value: $(params.release_namespace)
        - name: overwrite_values
          value: $(params.overwrite_values)
        - name: values_file
          value: $(params.values_file)
    - name: rollback
      taskRef:
        name: rollback
      when:
        - input: "$(tasks.deploy.results.helm-status)"
          operator: in
          values: ["failed"]
      params:
        - name: release_name
          value: $(params.release_name)
        - name: release_namespace
          value: $(params.release_namespace)
```

The overall flow is simple: the Pipeline first declares the Workspaces, Params, and other resources it uses, and then passes them on to each Task. Note the final rollback task: whether it runs depends on the result of the preceding deploy task, so we use the when property with $(tasks.deploy.results.helm-status) to read the deployment status.
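One caveat: depending on how the deploy step handles errors, a failed helm upgrade may fail the deploy task before helm-status is ever written, in which case a rollback task that depends on that result would not run. A possible alternative, sketched below under the assumption that the installed Tekton version supports when expressions and the $(tasks.<name>.status) variable in finally tasks, is to move the rollback into the finally section and check the execution status of deploy instead.

```yaml
# Fragment of pipeline.yaml (alternative rollback wiring; a sketch)
spec:
  # ... workspaces, params and tasks as above, without the rollback task ...
  finally:
    - name: rollback
      taskRef:
        name: rollback
      when:
        # Execution status of the deploy task: Succeeded, Failed or None
        - input: "$(tasks.deploy.status)"
          operator: in
          values: ["Failed"]
      params:
        - name: release_name
          value: $(params.release_name)
        - name: release_namespace
          value: $(params.release_namespace)
```

Tasks under finally run after all regular tasks have finished, whether they succeeded or failed, which is why the status of deploy is available there.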

Executing the Pipeline

Now we can execute the pipeline to see whether it meets our requirements. First we need to create the other resource objects it depends on, such as the PVCs for the Workspace and the Docker daemon, and the authentication information for GitLab and Harbor.

```yaml
# other.yaml
apiVersion: v1
kind: Secret
metadata:
  name: gitlab-auth
  annotations:
    tekton.dev/git-0: http://git.k8s.local
type: kubernetes.io/basic-auth
stringData:
  username: root
  password: admin321
---
apiVersion: v1
kind: Secret
metadata:
  name: harbor-auth
  annotations:
    tekton.dev/docker-0: http://harbor.k8s.local
type: kubernetes.io/basic-auth
stringData:
  username: admin
  password: Harbor12345
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tekton-build-sa
secrets:
  - name: harbor-auth
  - name: gitlab-auth
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tekton-clusterrole-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: tekton-build-sa
    namespace: default
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: go-repo-pvc
spec:
  resources:
    requests:
      storage: 1Gi
  volumeMode: Filesystem
  storageClassName: nfs-client # Automatic PV generation using StorageClass
  accessModes:
    - ReadWriteOnce
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: docker-root-pvc
spec:
  resources:
    requests:
      storage: 2Gi
  volumeMode: Filesystem
  storageClassName: nfs-client # Automatic PV generation using StorageClass
  accessModes:
    - ReadWriteOnce
```

Besides these resource objects, the ServiceAccount above also needs permissions: the Helm container operates on cluster resources, so it must be authorized first. Here the ClusterRoleBinding binds tekton-build-sa to the edit ClusterRole.
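If cluster-wide edit rights are broader than needed, the same ClusterRole can be granted in a single namespace with a RoleBinding instead. The sketch below assumes the release is deployed into the kube-ops namespace and that the chart creates only namespaced resources; the binding name is hypothetical.

```yaml
# Namespaced alternative to the ClusterRoleBinding (a sketch)
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tekton-deploy-edit # hypothetical name
  namespace: kube-ops # namespace the Helm release is deployed into
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: edit
subjects:
  - kind: ServiceAccount
    name: tekton-build-sa
    namespace: default
```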

We can then create a PipelineRun resource object to trigger our pipeline build.

```yaml
# pipelinerun.yaml
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  name: pipelinerun
spec:
  serviceAccountName: tekton-build-sa
  pipelineRef:
    name: pipeline
  workspaces:
    - name: go-repo-pvc
      persistentVolumeClaim:
        claimName: go-repo-pvc
  params:
    - name: git_url
      value: http://git.k8s.local/course/devops-demo.git
    - name: image
      value: "harbor.k8s.local/course/devops-demo:v0.1.0"
    - name: charts_dir
      value: "./helm"
    - name: release_name
      value: devops-demo
    - name: release_namespace
      value: "kube-ops"
    - name: overwrite_values
      value: "image.repository=harbor.k8s.local/course/devops-demo,image.tag=v0.1.0"
    - name: values_file
      value: "my-values.yaml"
```

Creating the resource object above starts our Pipeline.

```shell
$ kubectl apply -f pipelinerun.yaml
$ tkn pr describe pipelinerun
Name:              pipelinerun
Namespace:         default
Pipeline Ref:      pipeline
Service Account:   tekton-build-sa
Timeout:           1h0m0s
Labels:
 tekton.dev/pipeline=pipeline

🌡️  Status

STARTED         DURATION   STATUS
4 minutes ago   2m30s      Succeeded(Completed)

⚓ Params

 NAME                  VALUE
 ∙ git_url             http://git.k8s.local/course/devops-demo.git
 ∙ image               harbor.k8s.local/course/devops-demo:v0.1.0
 ∙ charts_dir          ./helm
 ∙ release_name        devops-demo
 ∙ release_namespace   kube-ops
 ∙ overwrite_values    image.repository=harbor.k8s.local/course/devops-demo,image.tag=v0.1.0
 ∙ values_file         my-values.yaml

📂 Workspaces

 NAME            SUB PATH   WORKSPACE BINDING
 ∙ go-repo-pvc   ---        PersistentVolumeClaim (claimName=go-repo-pvc)

🗂  Taskruns

 NAME                   TASK NAME   STARTED         DURATION   STATUS
 ∙ pipelinerun-deploy   deploy      3 minutes ago   1m14s      Succeeded
 ∙ pipelinerun-docker   docker      4 minutes ago   55s        Succeeded
 ∙ pipelinerun-build    build       4 minutes ago   11s        Succeeded
 ∙ pipelinerun-test     test        4 minutes ago   4s         Succeeded
 ∙ pipelinerun-clone    clone       4 minutes ago   6s         Succeeded

⏭️  Skipped Tasks

 NAME
 ∙ rollback

# The deployment succeeded
$ curl devops-demo.k8s.local
{"msg":"Hello DevOps On Kubernetes"}
```

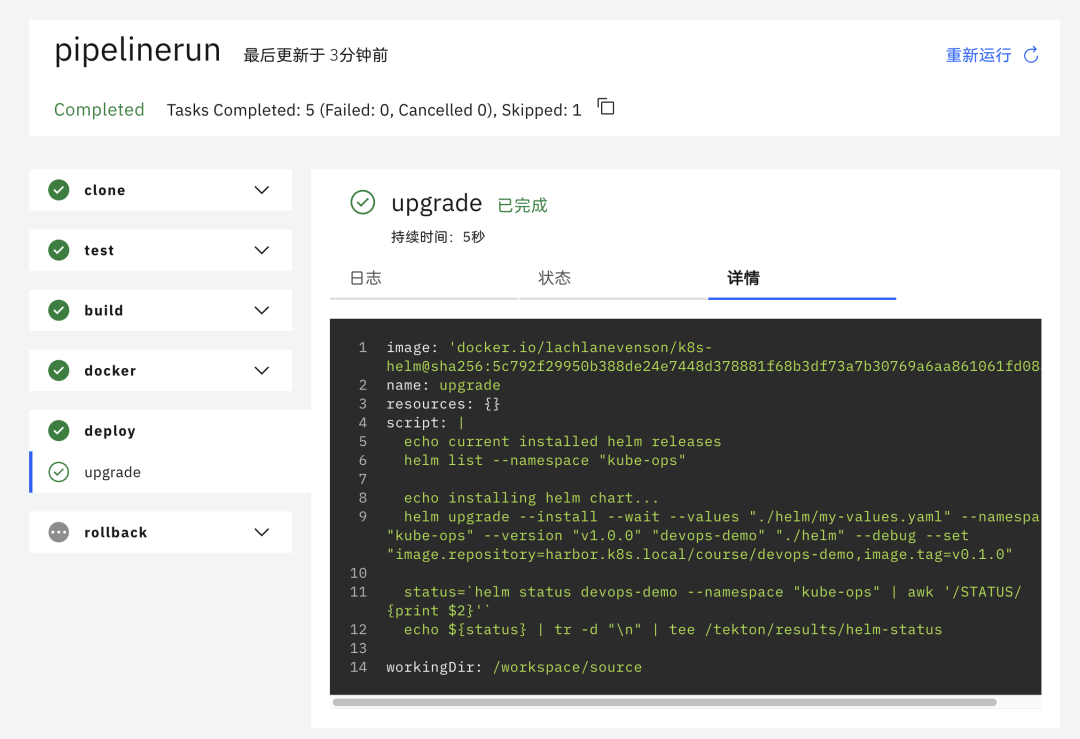

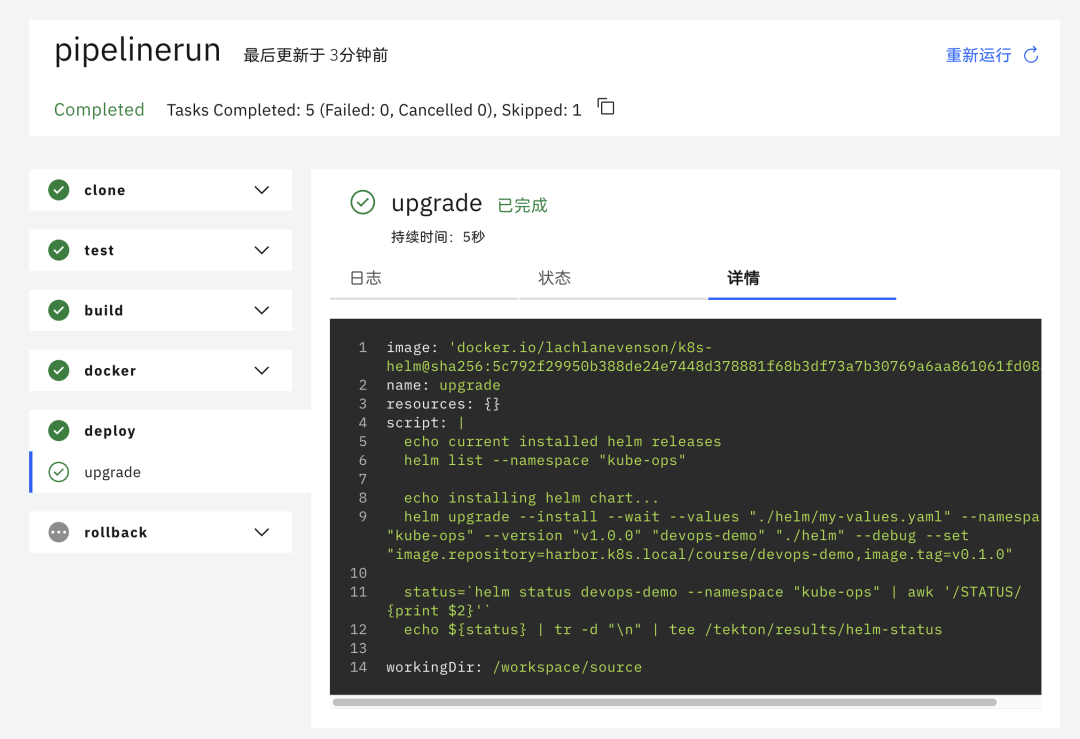

You can also see on the Dashboard that the pipeline executed normally, and that the rollback task was skipped because the deployment succeeded.
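The PipelineRun above has a fixed name and binds a fixed PVC, so it can only be created once, and repeated runs would share the same volume. A variant that can be submitted repeatedly might look like the sketch below. It relies on assumptions not in the original setup: generateName for unique run names, and a volumeClaimTemplate so that each run gets its own volume from the nfs-client StorageClass.

```yaml
# pipelinerun.yaml (repeatable variant; a sketch)
apiVersion: tekton.dev/v1beta1
kind: PipelineRun
metadata:
  generateName: pipelinerun- # a random suffix is appended for every run
spec:
  serviceAccountName: tekton-build-sa
  pipelineRef:
    name: pipeline
  workspaces:
    - name: go-repo-pvc
      volumeClaimTemplate: # a fresh PVC is created for each run
        spec:
          storageClassName: nfs-client
          accessModes:
            - ReadWriteOnce
          resources:
            requests:
              storage: 1Gi
  params:
    - name: git_url
      value: http://git.k8s.local/course/devops-demo.git
    - name: image
      value: "harbor.k8s.local/course/devops-demo:v0.1.0"
    - name: charts_dir
      value: "./helm"
    - name: release_name
      value: devops-demo
    - name: release_namespace
      value: "kube-ops"
    - name: overwrite_values
      value: "image.repository=harbor.k8s.local/course/devops-demo,image.tag=v0.1.0"
    - name: values_file
      value: "my-values.yaml"
```

A manifest that uses generateName has to be submitted with kubectl create rather than kubectl apply.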

Triggers

Now that the whole pipeline runs successfully, the last step is to connect GitLab to Tekton so that Tekton Triggers starts the build automatically. We have already covered Tekton Triggers in detail, so we won't repeat the details here.

The first step is to add a Secret holding the token used for GitLab webhook access. This Secret also needs to be associated with the ServiceAccount used above, which in turn needs the corresponding RBAC permissions.

```yaml
# other.yaml
# ......
apiVersion: v1
kind: Secret
metadata:
  name: gitlab-secret
type: Opaque
stringData:
  secretToken: "1234567"
---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: tekton-build-sa
secrets:
  - name: harbor-auth
  - name: gitlab-auth
  - name: gitlab-secret
---
apiVersion: rbac.authorization.k8s.io/v1
kind: Role
metadata:
  name: tekton-triggers-gitlab-minimal
rules:
  # EventListeners need to be able to fetch all namespaced resources
  - apiGroups: ["triggers.tekton.dev"]
    resources:
      ["eventlisteners", "triggerbindings", "triggertemplates", "triggers"]
    verbs: ["get", "list", "watch"]
  - apiGroups: [""]
    # configmaps is needed for updating logging config
    resources: ["configmaps"]
    verbs: ["get", "list", "watch"]
  # Permissions to create resources in associated TriggerTemplates
  - apiGroups: ["tekton.dev"]
    resources: ["pipelineruns", "pipelineresources", "taskruns"]
    verbs: ["create"]
  - apiGroups: [""]
    resources: ["serviceaccounts"]
    verbs: ["impersonate"]
  - apiGroups: ["policy"]
    resources: ["podsecuritypolicies"]
    resourceNames: ["tekton-triggers"]
    verbs: ["use"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: RoleBinding
metadata:
  name: tekton-triggers-gitlab-binding
subjects:
  - kind: ServiceAccount
    name: tekton-build-sa
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: Role
  name: tekton-triggers-gitlab-minimal
---
kind: ClusterRole
apiVersion: rbac.authorization.k8s.io/v1
metadata:
  name: tekton-triggers-gitlab-clusterrole
rules:
  # EventListeners need to be able to fetch any clustertriggerbindings
  - apiGroups: ["triggers.tekton.dev"]
    resources: ["clustertriggerbindings", "clusterinterceptors"]
    verbs: ["get", "list", "watch"]
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: tekton-triggers-gitlab-clusterbinding
subjects:
  - kind: ServiceAccount
    name: tekton-build-sa
    namespace: default
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: tekton-triggers-gitlab-clusterrole
```

Next, create an EventListener resource object to receive Push events from GitLab, as shown below.

```yaml
# gitlab-listener.yaml
apiVersion: triggers.tekton.dev/v1beta1
kind: EventListener
metadata:
  name: gitlab-listener # This event listener creates a Service object named el-gitlab-listener
spec:
  serviceAccountName: tekton-build-sa
  triggers:
    - name: gitlab-push-events-trigger
      interceptors:
        - ref:
            name: gitlab
          params:
            - name: secretRef # Reference the secretToken value in the gitlab-secret Secret
              value:
                secretName: gitlab-secret
                secretKey: secretToken
            - name: eventTypes
              value:
                - Push Hook # Receive only GitLab Push events
      bindings: # Define the TriggerBinding parameters inline
        - name: gitrevision
          value: $(body.checkout_sha)
        - name: gitrepositoryurl
          value: $(body.repository.git_http_url)
      template:
        ref: gitlab-template
```

Above we defined two parameters, gitrevision and gitrepositoryurl, through the trigger's bindings. Their values are extracted from the POST request sent by GitLab and are then passed to the TriggerTemplate object. The template here is simply a template of the PipelineRun object we defined above, with the git_url and image tag parameters replaced, as shown below.

```yaml
# gitlab-template.yaml
apiVersion: triggers.tekton.dev/v1beta1
kind: TriggerTemplate
metadata:
  name: gitlab-template
spec:
  params: # Define the parameters, consistent with those in the bindings
    - name: gitrevision
    - name: gitrepositoryurl
  resourcetemplates: # Define the resource templates
    - apiVersion: tekton.dev/v1beta1
      kind: PipelineRun # Define the PipelineRun template
      metadata:
        generateName: gitlab-run- # PipelineRun name prefix
      spec:
        serviceAccountName: tekton-build-sa
        pipelineRef:
          name: pipeline
        workspaces:
          - name: go-repo-pvc
            persistentVolumeClaim:
              claimName: go-repo-pvc
        params:
          - name: git_url
            value: $(tt.params.gitrepositoryurl)
          - name: image
            value: "harbor.k8s.local/course/devops-demo:$(tt.params.gitrevision)"
          - name: charts_dir
            value: "./helm"
          - name: release_name
            value: devops-demo
          - name: release_namespace
            value: "kube-ops"
          - name: overwrite_values
            value: "image.repository=harbor.k8s.local/course/devops-demo,image.tag=$(tt.params.gitrevision)"
          - name: values_file
            value: "my-values.yaml"
```
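Note that the template tags the image with the pushed commit SHA, while the pipeline's revision parameter keeps its default of main. If the build should check out exactly the commit that triggered it, the revision could be passed through as well. The fragment below is an optional sketch; it assumes the GitLab server allows fetching a commit by its SHA with the shallow clone that git-clone performs by default.

```yaml
# Fragment of the PipelineRun params in gitlab-template.yaml (optional refinement)
params:
  - name: git_url
    value: $(tt.params.gitrepositoryurl)
  - name: revision # check out the pushed commit instead of the default branch
    value: $(tt.params.gitrevision)
  - name: image
    value: "harbor.k8s.local/course/devops-demo:$(tt.params.gitrevision)"
  # ... remaining params unchanged ...
```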

Create the new resource objects above; this also creates an EventListener service to receive webhook requests.

```shell
$ kubectl get eventlistener gitlab-listener
NAME              ADDRESS                                                    AVAILABLE   REASON                     READY   REASON
gitlab-listener   http://el-gitlab-listener.default.svc.cluster.local:8080   True        MinimumReplicasAvailable   True
```

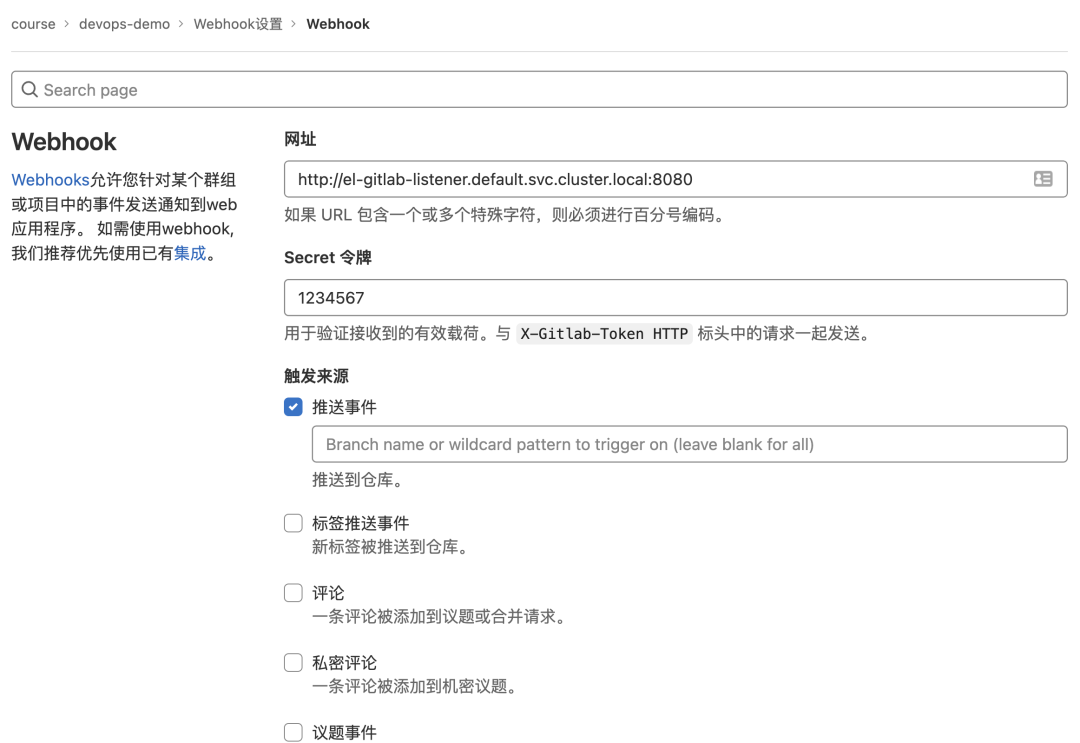

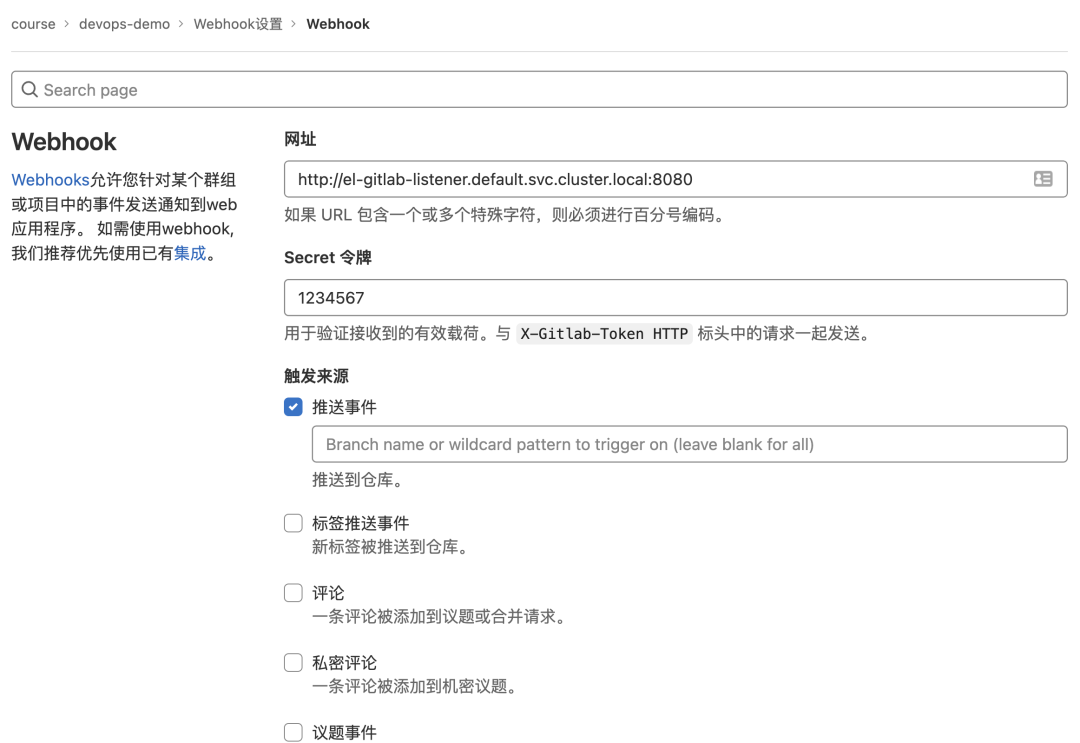

Then make sure you also configure the webhook in your GitLab repository.

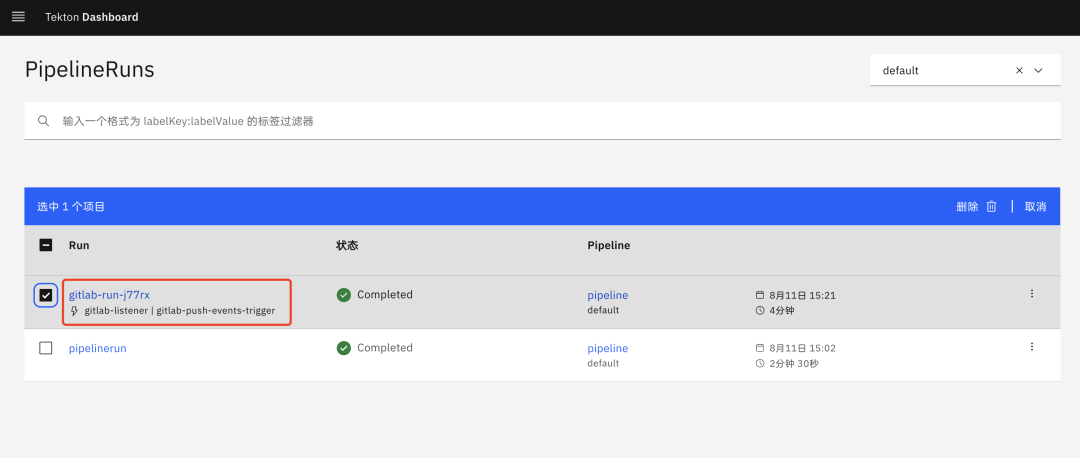

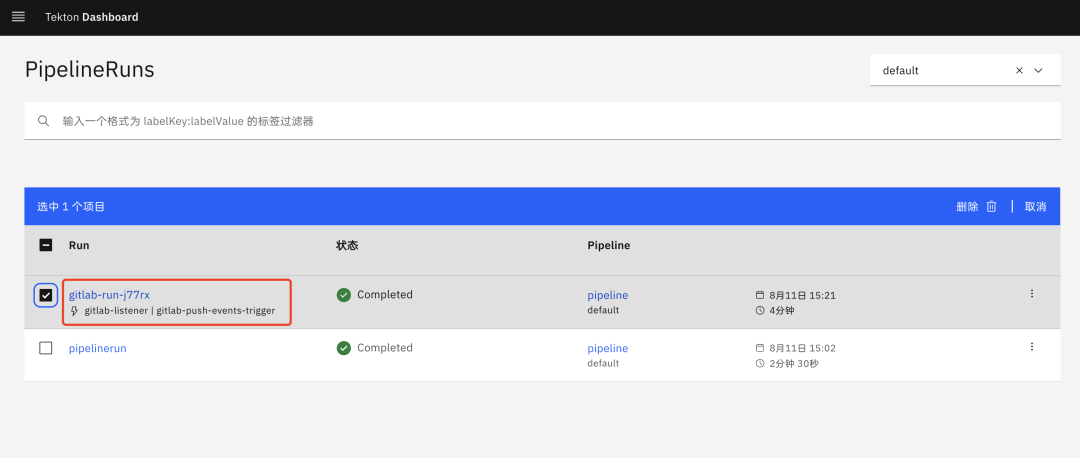
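If GitLab cannot reach the in-cluster address of the listener, the el-gitlab-listener Service has to be exposed in some way. One option is an Ingress like the sketch below; the host name and the nginx ingress class are assumptions made for illustration and need to be adapted to the actual environment.

```yaml
# gitlab-listener-ingress.yaml (hypothetical file; a sketch)
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: el-gitlab-listener
spec:
  ingressClassName: nginx # assumption: an nginx ingress controller is installed
  rules:
    - host: tekton-trigger.k8s.local # hypothetical host name
      http:
        paths:
          - path: /
            pathType: Prefix
            backend:
              service:
                name: el-gitlab-listener # Service created by the EventListener
                port:
                  number: 8080
```

The webhook URL configured in GitLab would then point at that host, with the Secret token set to the value stored in gitlab-secret.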

With that, the trigger and listener are fully configured. Next, modify the project code and push a commit; once the push goes through, a PipelineRun object is created in the cluster to execute our pipeline.

```shell
$ kubectl get pipelinerun
NAME               SUCCEEDED   REASON      STARTTIME   COMPLETIONTIME
gitlab-run-j77rx   True        Completed   4m46s       46s
$ curl devops-demo.k8s.local
{"msg":"Hello Tekton On Kubernetes"}
```

You can see that after the pipeline ran successfully, the application is serving our newly committed code. With that, we have finished refactoring the project's pipeline with Tekton.