Linkerd is a fully open source service mesh for Kubernetes. It makes running services easier and safer by giving you runtime debugging, observability, reliability, and security, all without requiring any changes to your code.

Linkerd works by installing a set of ultralight, transparent proxies next to each service instance. These proxies automatically handle all traffic to and from the service. Because they are transparent, they act as highly instrumented out-of-process network stacks, sending telemetry data to, and receiving control signals from, the control plane. This design allows Linkerd to measure and manipulate traffic to and from your service without introducing excessive latency. To be as small, lightweight, and safe as possible, Linkerd’s proxies are written in Rust.

Feature Overview

- Automatic mTLS: Linkerd automatically enables mutual Transport Layer Security (mTLS) for all communication between meshed applications.
- Automatic proxy injection: Linkerd automatically injects the data plane proxy into pods based on annotations.
- Container Network Interface (CNI) plugin: Linkerd can be configured to run a CNI plugin that automatically rewrites each pod’s iptables rules.
- Dashboard and Grafana: Linkerd provides a web dashboard as well as preconfigured Grafana dashboards.
- Distributed tracing: You can enable distributed tracing support in Linkerd.
- Fault injection: Linkerd provides mechanisms for programmatically injecting faults into services.
- High availability: The Linkerd control plane can run in high availability (HA) mode.
- HTTP, HTTP/2, and gRPC proxying: Linkerd automatically enables advanced features (including metrics, load balancing, and retries) for HTTP, HTTP/2, and gRPC connections.
- Ingress: Linkerd can work with the ingress controller of your choice.
- Load balancing: Linkerd automatically load balances requests across all destination endpoints for HTTP, HTTP/2, and gRPC connections.
- Multi-cluster communication: Linkerd can transparently and securely connect services running in different clusters.
- Retries and timeouts: Linkerd can perform service-specific retries and timeouts.
- Service profiles: Linkerd’s service profiles enable per-route metrics as well as retries and timeouts.
- TCP proxying and protocol detection: Linkerd can proxy all TCP traffic, including TLS connections, WebSockets, and HTTP tunneling.
- Telemetry and monitoring: Linkerd automatically collects metrics from all services that send traffic through it.
- Traffic splitting (canary, blue/green deployments): Linkerd can dynamically send a portion of traffic to a different service.

Installation

To install Linkerd’s control plane into a Kubernetes cluster, we first install the Linkerd CLI locally.

First, run kubectl locally to make sure you have access to a working Kubernetes cluster. If you don’t have one, you can quickly create a local cluster with KinD.

```shell
$ kubectl version --short
Client Version: v1.23.5
Server Version: v1.22.8
```
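If any of the tools used in this walkthrough are missing, later steps fail with less obvious errors. The following is a minimal POSIX shell sketch of a preflight check; require_cmds is a hypothetical helper name, not part of Linkerd or kubectl. If you need a local cluster, kind create cluster creates a single-node KinD cluster with default settings.

```shell
#!/bin/sh
# Hypothetical helper: report every command in "$@" that is not on PATH.
# Returns 0 when all commands are found, 1 otherwise.
require_cmds() {
  missing=0
  for cmd in "$@"; do
    if ! command -v "$cmd" >/dev/null 2>&1; then
      echo "missing: $cmd" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage (add kind if you plan to create a local cluster):
#   require_cmds kubectl curl || exit 1
```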

You can use the following command to install Linkerd’s CLI tools locally.

```shell
$ curl --proto '=https' --tlsv1.2 -sSfL https://run.linkerd.io/install | sh
```
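The install script places the linkerd binary under $HOME/.linkerd2/bin and asks you to add that directory to your PATH. A small sketch, assuming a POSIX shell, that appends the directory only when it is not already present, so it is safe to run repeatedly (for example from a shell profile); path_append is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: append a directory to PATH only if it is not already there.
path_append() {
  case ":$PATH:" in
    *":$1:"*) ;;                      # already present: leave PATH unchanged
    *) PATH="${PATH:+$PATH:}$1" ;;    # append, avoiding a leading ":" if PATH was empty
  esac
  export PATH
}

# The install script puts the linkerd binary in $HOME/.linkerd2/bin.
path_append "$HOME/.linkerd2/bin"
```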

On macOS, you can also install it with Homebrew in a single command: brew install linkerd.

Alternatively, you can download a binary directly from the Linkerd release page at https://github.com/linkerd/linkerd2/releases/ and install it.

After installation, use the following command to verify that the CLI tools are installed successfully.

```shell
$ linkerd version
Client version: stable-2.11.1
Server version: unavailable
```

The client version is shown as expected, but the server version is reported as unavailable because we have not yet installed the control plane on the Kubernetes cluster. Next, we install the server side.
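In a script you may want to detect this state rather than read it by eye. A minimal sketch that extracts the server version from linkerd version output, assuming the two-line format shown above; server_version is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `linkerd version` output on stdin and print the
# server version ("unavailable" before the control plane is installed).
server_version() {
  sed -n 's/^Server version: //p'
}

# Usage (requires the linkerd CLI and cluster access):
#   if [ "$(linkerd version | server_version)" = "unavailable" ]; then
#     echo "control plane not installed yet"
#   fi
```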

Kubernetes clusters can be configured in many different ways, so before installing the Linkerd control plane we should verify that the cluster is configured correctly. To check whether the cluster is ready for Linkerd, run the following command:

```shell
$ linkerd check --pre
Linkerd core checks
===================

kubernetes-api
--------------
√ can initialize the client
√ can query the Kubernetes API

kubernetes-version
------------------
√ is running the minimum Kubernetes API version
√ is running the minimum kubectl version

pre-kubernetes-setup
--------------------
√ control plane namespace does not already exist
√ can create non-namespaced resources
√ can create ServiceAccounts
√ can create Services
√ can create Deployments
√ can create CronJobs
√ can create ConfigMaps
√ can create Secrets
√ can read Secrets
√ can read extension-apiserver-authentication configmap
√ no clock skew detected

linkerd-version
---------------
√ can determine the latest version
‼ cli is up-to-date
    is running version 2.11.1 but the latest stable version is 2.11.4
    see https://linkerd.io/2.11/checks/#l5d-version-cli for hints

Status check results are √
```
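linkerd check exits with a non-zero status only when a check fails, so the pre-check can gate the installation in a script. A minimal sketch, assuming result lines start with the √ or ‼ markers shown above; check_warnings is a hypothetical helper that lists the warnings so they are not silently ignored.

```shell
#!/bin/sh
# Hypothetical helper: print the title of every warning (‼) result from
# `linkerd check` output read on stdin, one per line.
check_warnings() {
  sed -n 's/^‼ //p'
}

# Usage sketch: install only if the pre-checks pass, but still list warnings.
#   if out=$(linkerd check --pre); then
#     printf '%s\n' "$out" | check_warnings
#     linkerd install | kubectl apply -f -
#   else
#     printf '%s\n' "$out"
#   fi
```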

If all checks pass, you can install the Linkerd control plane with a single command. (The ‼ entry above is only a warning that a newer stable release, 2.11.4, is available; it does not fail the check.)

```shell
$ linkerd install | kubectl apply -f -
```

In this command, linkerd install generates a Kubernetes resource manifest file containing all the necessary control plane resources, which can then be installed into the Kubernetes cluster using the kubectl apply command.
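If you prefer to review what will be created before applying it, you can write the manifest to a file first. A minimal sketch that summarizes the resource kinds in such a manifest, assuming each document’s top-level kind: line starts at column 0 (as in ordinary generated YAML); manifest_kinds is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: count the top-level resource kinds in a multi-document
# YAML manifest read on stdin. Indented "kind:" keys are ignored.
manifest_kinds() {
  grep '^kind:' | awk '{print $2}' | sort | uniq -c | awk '{print $2, $1}'
}

# Usage: render, review, then apply.
#   linkerd install > linkerd.yaml
#   manifest_kinds < linkerd.yaml
#   kubectl apply -f linkerd.yaml
```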

The control plane is installed into a namespace named linkerd, and once the installation completes the following Pods are running:

```shell
$ kubectl get pods -n linkerd
NAME                                      READY   STATUS    RESTARTS   AGE
linkerd-destination-79d6fc496f-dcgfx      4/4     Running   0          166m
linkerd-identity-6b78ff444f-jwp47         2/2     Running   0          166m
linkerd-proxy-injector-86f7f649dc-v576m   2/2     Running   0          166m
```
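kubectl wait --for=condition=Ready pod --all -n linkerd is the built-in way to block until these Pods are ready. For a quick check of a listing like the one above, a small awk sketch can flag Pods that are not fully ready; it assumes the default kubectl get pods columns (NAME READY STATUS RESTARTS AGE), and not_ready_pods is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: read `kubectl get pods` output on stdin and print every
# Pod whose READY column is not "n/n" or whose STATUS is not Running.
# Pods in Completed state (e.g. from CronJobs) are skipped.
# Exits 1 if any Pod was printed, 0 otherwise.
not_ready_pods() {
  awk 'NR > 1 {
         if ($3 == "Completed") next
         split($2, r, "/")
         if (r[1] != r[2] || $3 != "Running") { print $1; bad = 1 }
       }
       END { exit (bad ? 1 : 0) }'
}

# Usage:
#   kubectl get pods -n linkerd | not_ready_pods && echo "control plane Pods ready"
```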

After the installation completes, run the following command to wait for the control plane to become ready and verify that it was installed correctly:

```shell
$ linkerd check
Linkerd core checks
===================

kubernetes-api
--------------
√ can initialize the client
√ can query the Kubernetes API

kubernetes-version
------------------
√ is running the minimum Kubernetes API version
√ is running the minimum kubectl version

linkerd-existence
-----------------
√ 'linkerd-config' config map exists
√ heartbeat ServiceAccount exist
√ control plane replica sets are ready
√ no unschedulable pods
√ control plane pods are ready
√ cluster networks contains all node podCIDRs

linkerd-config
--------------
√ control plane Namespace exists
√ control plane ClusterRoles exist
√ control plane ClusterRoleBindings exist
√ control plane ServiceAccounts exist
√ control plane CustomResourceDefinitions exist
√ control plane MutatingWebhookConfigurations exist
√ control plane ValidatingWebhookConfigurations exist

linkerd-identity
----------------
√ certificate config is valid
√ trust anchors are using supported crypto algorithm
√ trust anchors are within their validity period
√ trust anchors are valid for at least 60 days
√ issuer cert is using supported crypto algorithm
√ issuer cert is within its validity period
√ issuer cert is valid for at least 60 days
√ issuer cert is issued by the trust anchor

linkerd-webhooks-and-apisvc-tls
-------------------------------
√ proxy-injector webhook has valid cert
√ proxy-injector cert is valid for at least 60 days
√ sp-validator webhook has valid cert
√ sp-validator cert is valid for at least 60 days
√ policy-validator webhook has valid cert
√ policy-validator cert is valid for at least 60 days

linkerd-version
---------------
√ can determine the latest version
‼ cli is up-to-date
    is running version 2.11.1 but the latest stable version is 2.11.4
    see https://linkerd.io/2.11/checks/#l5d-version-cli for hints

control-plane-version
---------------------
√ can retrieve the control plane version
‼ control plane is up-to-date
    is running version 2.11.1 but the latest stable version is 2.11.4
    see https://linkerd.io/2.11/checks/#l5d-version-control for hints
√ control plane and cli versions match

linkerd-control-plane-proxy
---------------------------
√ control plane proxies are healthy
‼ control plane proxies are up-to-date
    some proxies are not running the current version:
    * linkerd-destination-79d6fc496f-dcgfx (stable-2.11.1)
    * linkerd-identity-6b78ff444f-jwp47 (stable-2.11.1)
    * linkerd-proxy-injector-86f7f649dc-v576m (stable-2.11.1)
    see https://linkerd.io/2.11/checks/#l5d-cp-proxy-version for hints
√ control plane proxies and cli versions match

Status check results are √
```

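When the Status check results are √ message appears, the Linkerd control plane has been installed successfully. Besides the CLI, we can also install the control plane with the Helm chart. Unlike linkerd install, the chart does not generate the identity certificates for you: the commands below expect a trust anchor certificate (ca.crt) and an issuer certificate and key (issuer.crt, issuer.key) to exist already. The Linkerd documentation describes generating them with the step CLI.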

```shell
$ helm repo add linkerd https://helm.linkerd.io/stable

# set the expiry date one year from now, on macOS:
$ exp=$(date -v+8760H +"%Y-%m-%dT%H:%M:%SZ")

# on Linux:
$ exp=$(date -d '+8760 hour' +"%Y-%m-%dT%H:%M:%SZ")

$ helm install linkerd2 \
  --set-file identityTrustAnchorsPEM=ca.crt \
  --set-file identity.issuer.tls.crtPEM=issuer.crt \
  --set-file identity.issuer.tls.keyPEM=issuer.key \
  --set identity.issuer.crtExpiry=$exp \
  linkerd/linkerd2
```
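The two expiry commands above differ between macOS and Linux, and both format local time while appending a literal Z, which denotes UTC. A hedged sketch of a portable alternative, assuming GNU date on Linux and BSD date on macOS; it passes -u so the Z suffix is accurate. one_year_from_now is a hypothetical helper name.

```shell
#!/bin/sh
# Hypothetical helper: print a UTC timestamp 8760 hours (about one year) from
# now, in the format used for identity.issuer.crtExpiry above.
one_year_from_now() {
  fmt='+%Y-%m-%dT%H:%M:%SZ'
  if date --version >/dev/null 2>&1; then
    date -u -d '+8760 hour' "$fmt"   # GNU coreutils date (typical on Linux)
  else
    date -u -v+8760H "$fmt"          # BSD date (macOS)
  fi
}

exp=$(one_year_from_now)
echo "$exp"
```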

The chart also contains a values-ha.yaml file that overrides some default values for high availability deployments, similar to the --ha option of linkerd install. You can get values-ha.yaml by fetching the chart:

```shell
$ helm fetch --untar linkerd/linkerd2
```

Then pass it with the -f flag to override the default values, for example:

```shell
# see above for how to set $exp
$ helm install linkerd2 \
  --set-file identityTrustAnchorsPEM=ca.crt \
  --set-file identity.issuer.tls.crtPEM=issuer.crt \
  --set-file identity.issuer.tls.keyPEM=issuer.key \
  --set identity.issuer.crtExpiry=$exp \
  -f linkerd2/values-ha.yaml \
  linkerd/linkerd2
```

Either method works. With Linkerd installed, run linkerd version again to see the server-side version:

```shell
$ linkerd version
Client version: stable-2.11.1
Server version: stable-2.11.1
```

Example

Next we install a simple example application, Emojivoto: a standalone Kubernetes application that uses a mix of gRPC and HTTP calls to let users vote for their favorite emoji.

Emojivoto can be installed into the emojivoto namespace by running the following command.

```shell
$ curl -fsL https://run.linkerd.io/emojivoto.yml | kubectl apply -f -
namespace/emojivoto created
serviceaccount/emoji created
serviceaccount/voting created
serviceaccount/web created
service/emoji-svc created
service/voting-svc created
service/web-svc created
deployment.apps/emoji created
deployment.apps/vote-bot created
deployment.apps/voting created
deployment.apps/web created
```

The application consists of four Pods, exposed through three Services:

```shell
$ kubectl get pods -n emojivoto
NAME                        READY   STATUS    RESTARTS   AGE
emoji-66ccdb4d86-6vqvt      1/1     Running   0          91s
vote-bot-69754c864f-k26fb   1/1     Running   0          91s
voting-f999bd4d7-k44nb      1/1     Running   0          91s
web-79469b946f-bz295        1/1     Running   0          91s

$ kubectl get svc -n emojivoto
NAME         TYPE        CLUSTER-IP       EXTERNAL-IP   PORT(S)             AGE
emoji-svc    ClusterIP   10.111.7.125     <none>        8080/TCP,8801/TCP   2m49s
voting-svc   ClusterIP   10.105.192.118   <none>        8080/TCP,8801/TCP   2m48s
web-svc      ClusterIP   10.111.236.171   <none>        80/TCP              2m48s
```

We can expose the web-svc service via port-forward and then access the application in the browser.

```shell
$ kubectl -n emojivoto port-forward svc/web-svc 8080:80
```

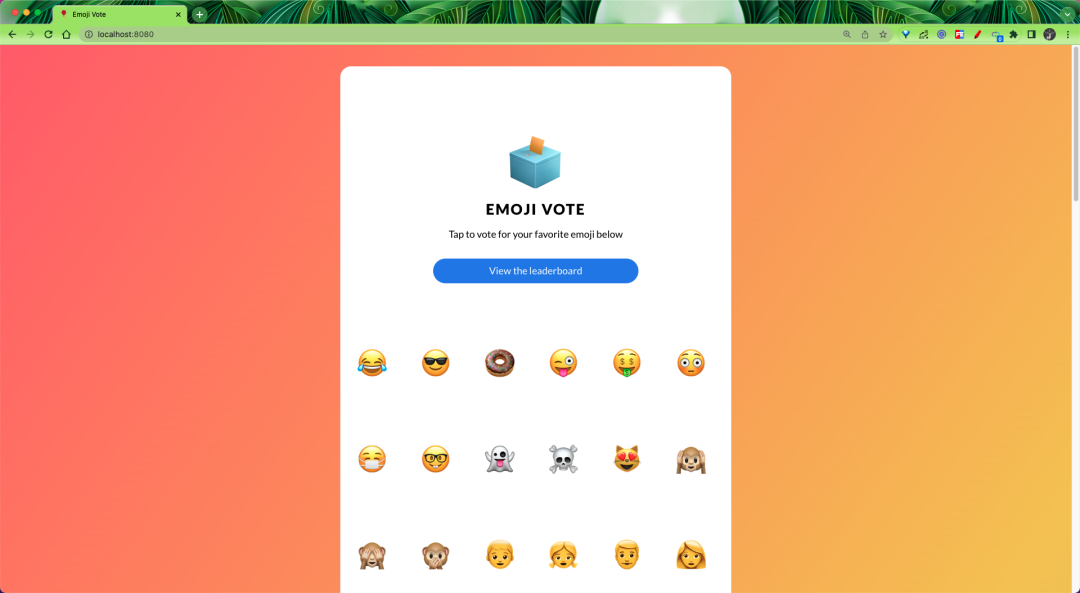

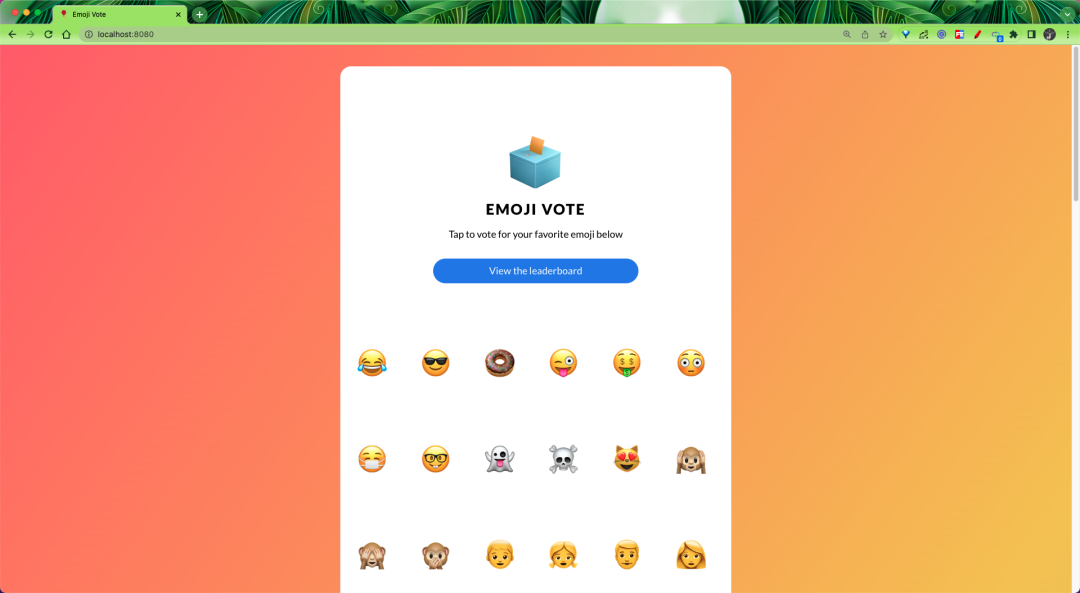

Now we can access the Emojivoto application from our browser via http://localhost:8080.

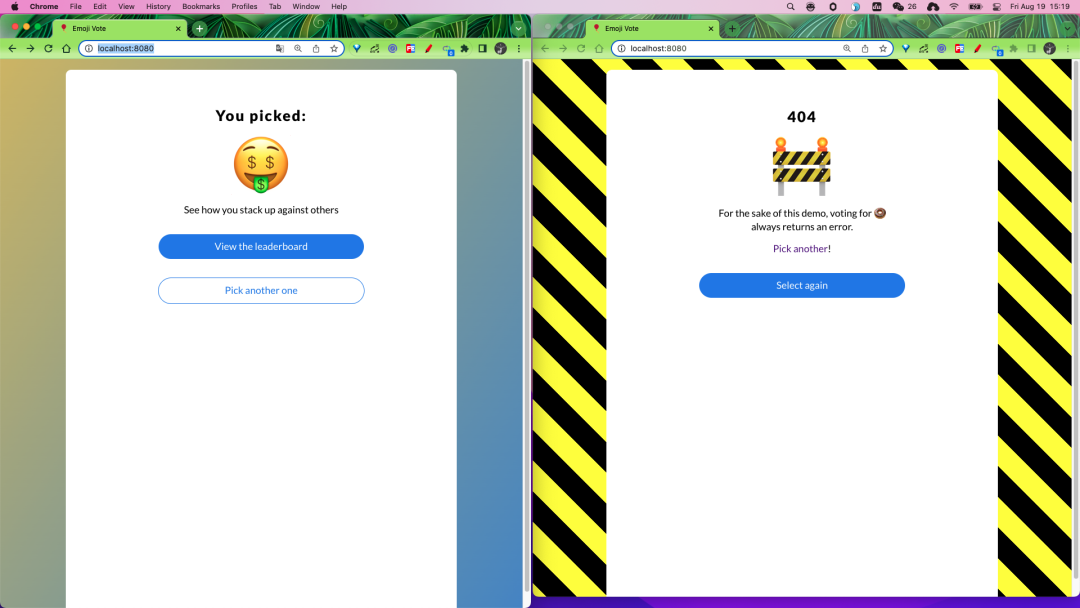

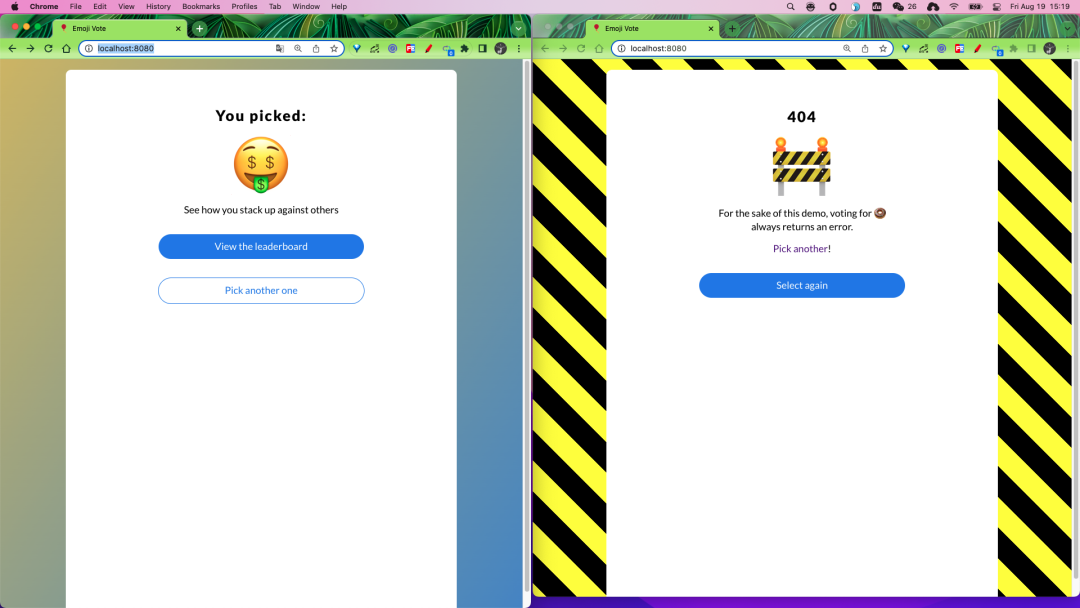

You can vote for your favorite emoji on the page, but some selections produce errors; for example, clicking the donut emoji returns a 404 page.

Don’t worry: this error is left in the application deliberately, so that we can use Linkerd to track it down later.

Next, we add the example application to the service mesh by injecting Linkerd’s data plane proxy into it. Run the following command to mesh the Emojivoto application:

```shell
$ kubectl get -n emojivoto deploy -o yaml \
  | linkerd inject - \
  | kubectl apply -f -

deployment "emoji" injected
deployment "vote-bot" injected
deployment "voting" injected
deployment "web" injected

deployment.apps/emoji configured
deployment.apps/vote-bot configured
deployment.apps/voting configured
deployment.apps/web configured
```

The above command first fetches all Deployments running in the emojivoto namespace, then runs their manifests through linkerd inject and reapplies them to the cluster.

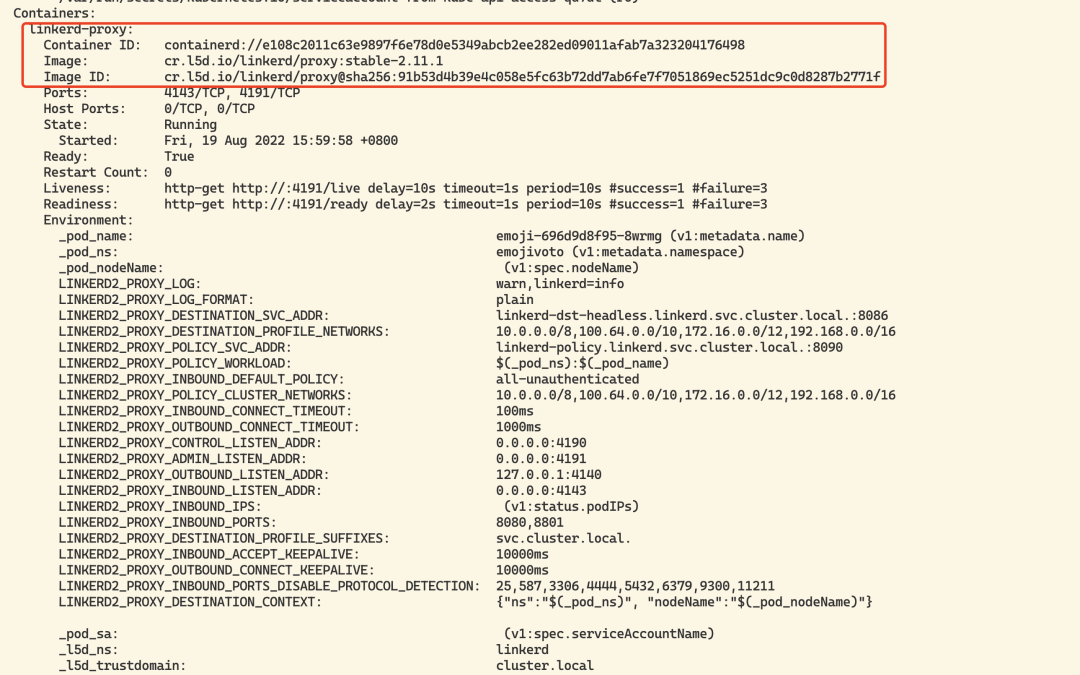

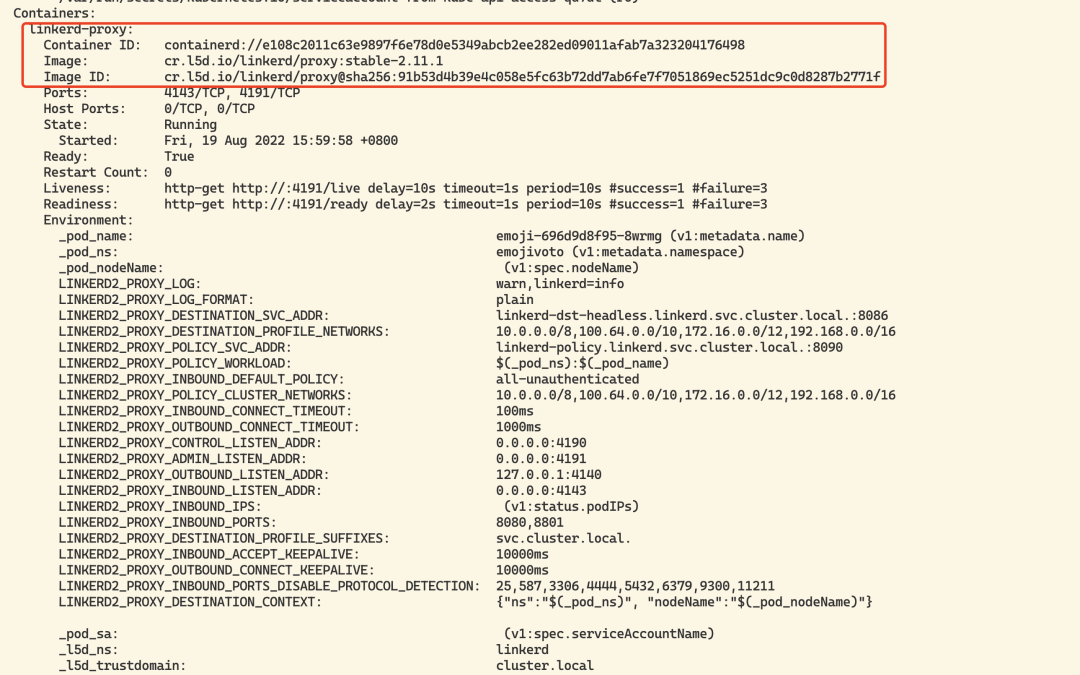

Note that linkerd inject does not add the sidecar container itself; it only adds a linkerd.io/inject: enabled annotation to the Pod template. That annotation tells Linkerd’s proxy injector to add the proxy when each Pod is created, so once the command above has been applied and the Pods are recreated, every application Pod gets a sidecar proxy container.
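To confirm what linkerd inject changed without applying anything, you can count the annotations in its output. A minimal sketch, assuming the annotation is rendered as linkerd.io/inject: enabled as described above; count_inject_annotations is a hypothetical helper. As an alternative to piping manifests through linkerd inject, the same annotation can be set on the namespace with kubectl annotate namespace emojivoto linkerd.io/inject=enabled, after which Pods created there (for example via kubectl rollout restart deploy -n emojivoto) are injected.

```shell
#!/bin/sh
# Hypothetical helper: count lines carrying the linkerd.io/inject: enabled
# annotation in YAML read on stdin (for example, the output of linkerd inject).
# Matches the annotation as a fixed string, not a regular expression.
count_inject_annotations() {
  grep -cF 'linkerd.io/inject: enabled'
}

# Usage: preview the injected manifests without applying them.
#   kubectl get -n emojivoto deploy -o yaml | linkerd inject - | count_inject_annotations
```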

```shell
$ kubectl get pods -n emojivoto
NAME                        READY   STATUS    RESTARTS   AGE
emoji-696d9d8f95-8wrmg      2/2     Running   0          34m
vote-bot-6d7677bb68-c98kb   2/2     Running   0          34m
voting-ff4c54b8d-rdtmk      2/2     Running   0          34m
web-5f86686c4d-qh5bz        2/2     Running   0          34m
```

Each Pod now reports two containers: the application container plus the Linkerd sidecar proxy.
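To check a single Pod programmatically, you can list its container names with kubectl’s JSONPath output and look for the sidecar, which Linkerd names linkerd-proxy. A minimal sketch; has_linkerd_proxy is a hypothetical helper, and the Pod name in the usage comment is taken from the listing above.

```shell
#!/bin/sh
# Hypothetical helper: succeed if a space-separated list of container names
# (as printed by jsonpath '{.spec.containers[*].name}') contains linkerd-proxy.
has_linkerd_proxy() {
  case " $1 " in
    *" linkerd-proxy "*) return 0 ;;
    *) return 1 ;;
  esac
}

# Usage:
#   names=$(kubectl get pod web-5f86686c4d-qh5bz -n emojivoto \
#     -o jsonpath='{.spec.containers[*].name}')
#   has_linkerd_proxy "$names" && echo "meshed"
```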

Once the rollout completes, the application is part of the Linkerd service mesh. The new proxy containers make up the data plane, and we can check its status with the following command:

```shell
$ linkerd -n emojivoto check --proxy
Linkerd core checks
===================

kubernetes-api
--------------
√ can initialize the client
√ can query the Kubernetes API

kubernetes-version
------------------
√ is running the minimum Kubernetes API version
√ is running the minimum kubectl version

linkerd-existence
-----------------
√ 'linkerd-config' config map exists
√ heartbeat ServiceAccount exist
√ control plane replica sets are ready
√ no unschedulable pods
√ control plane pods are ready
√ cluster networks contains all node podCIDRs

linkerd-config
--------------
√ control plane Namespace exists
√ control plane ClusterRoles exist
√ control plane ClusterRoleBindings exist
√ control plane ServiceAccounts exist
√ control plane CustomResourceDefinitions exist
√ control plane MutatingWebhookConfigurations exist
√ control plane ValidatingWebhookConfigurations exist

linkerd-identity
----------------
√ certificate config is valid
√ trust anchors are using supported crypto algorithm
√ trust anchors are within their validity period
√ trust anchors are valid for at least 60 days
√ issuer cert is using supported crypto algorithm
√ issuer cert is within its validity period
√ issuer cert is valid for at least 60 days
√ issuer cert is issued by the trust anchor

linkerd-webhooks-and-apisvc-tls
-------------------------------
√ proxy-injector webhook has valid cert
√ proxy-injector cert is valid for at least 60 days
√ sp-validator webhook has valid cert
√ sp-validator cert is valid for at least 60 days
√ policy-validator webhook has valid cert
√ policy-validator cert is valid for at least 60 days

linkerd-identity-data-plane
---------------------------
√ data plane proxies certificate match CA

linkerd-version
---------------
√ can determine the latest version
‼ cli is up-to-date
    is running version 2.11.1 but the latest stable version is 2.11.4
    see https://linkerd.io/2.11/checks/#l5d-version-cli for hints

linkerd-control-plane-proxy
---------------------------
√ control plane proxies are healthy
‼ control plane proxies are up-to-date
    some proxies are not running the current version:
    * linkerd-destination-79d6fc496f-dcgfx (stable-2.11.1)
    * linkerd-identity-6b78ff444f-jwp47 (stable-2.11.1)
    * linkerd-proxy-injector-86f7f649dc-v576m (stable-2.11.1)
    see https://linkerd.io/2.11/checks/#l5d-cp-proxy-version for hints
√ control plane proxies and cli versions match

linkerd-data-plane
------------------
√ data plane namespace exists
√ data plane proxies are ready
‼ data plane is up-to-date
    some proxies are not running the current version:
    * emoji-696d9d8f95-8wrmg (stable-2.11.1)
    * vote-bot-6d7677bb68-c98kb (stable-2.11.1)
    * voting-ff4c54b8d-rdtmk (stable-2.11.1)
    * web-5f86686c4d-qh5bz (stable-2.11.1)
    see https://linkerd.io/2.11/checks/#l5d-data-plane-version for hints
√ data plane and cli versions match
√ data plane pod labels are configured correctly
√ data plane service labels are configured correctly
√ data plane service annotations are configured correctly
√ opaque ports are properly annotated

Status check results are √
```

We can still access the application at http://localhost:8080, and from a user’s point of view nothing has changed. To see what the application is actually doing, however, we need to install an extension. Linkerd’s core control plane is deliberately lightweight, and extensions add non-critical but often useful functionality, including various dashboards. Here we install the viz extension, which contains Linkerd’s observability and visualization components.

The installation command is shown below.

```shell
$ linkerd viz install | kubectl apply -f -
```

The above command creates a namespace named linkerd-viz where monitoring-related applications such as Prometheus, Grafana, etc. will be installed.

```shell
$ kubectl get pods -n linkerd-viz
NAME                            READY   STATUS    RESTARTS   AGE
grafana-8d54d5f6d-wwtdz         2/2     Running   0          3h1m
metrics-api-6c59967bf4-pjwdq    2/2     Running   0          4h22m
prometheus-7bbc4d8c5b-5rc8r     2/2     Running   0          4h22m
tap-599c774dfb-m2kqz            2/2     Running   0          4h22m
tap-injector-748d54b7bc-jvshs   2/2     Running   0          4h22m
web-db97ff489-5599v             2/2     Running   0          4h22m
```

Once the installation is complete, we can use the following command to open a dashboard page.

```shell
$ linkerd viz dashboard &
```

With the viz extension deployed, this command opens the Linkerd dashboard in your browser.

Alternatively, we can expose the viz dashboard through an Ingress by creating the following resource (this example assumes the ingress-nginx controller):

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: web-ingress
  namespace: linkerd-viz
  annotations:
    nginx.ingress.kubernetes.io/upstream-vhost: $service_name.$namespace.svc.cluster.local:8084
    nginx.ingress.kubernetes.io/configuration-snippet: |
      proxy_set_header Origin "";
      proxy_hide_header l5d-remote-ip;
      proxy_hide_header l5d-server-id;
spec:
  ingressClassName: nginx
  rules:
  - host: linkerd.k8s.local
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: web
            port:
              number: 8084
```

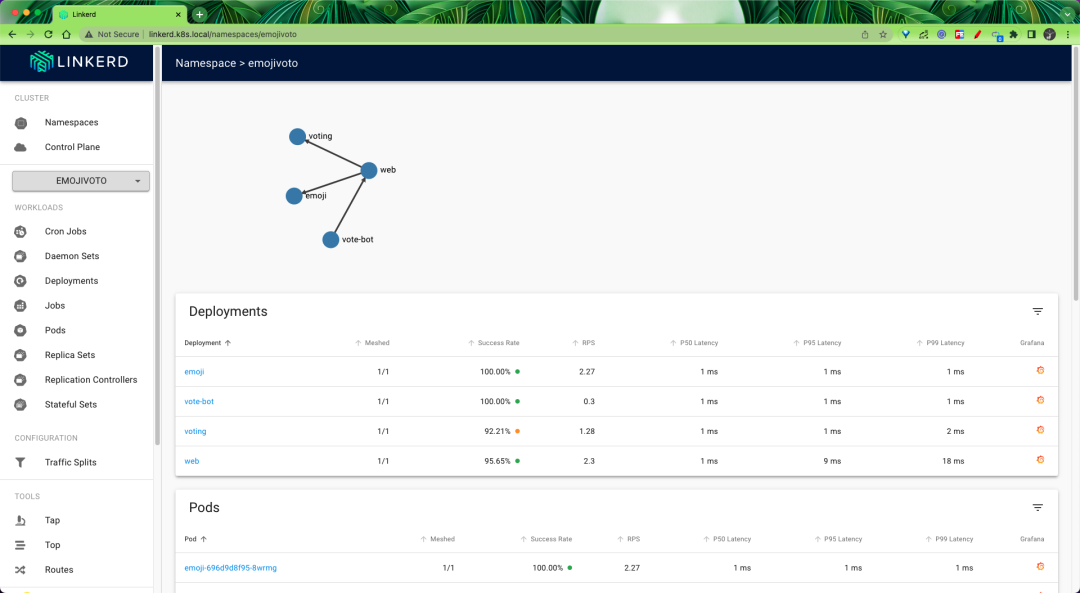

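Once the Ingress is applied, and provided linkerd.k8s.local resolves to your ingress controller (for example through an /etc/hosts entry), you can open the dashboard at http://linkerd.k8s.local.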

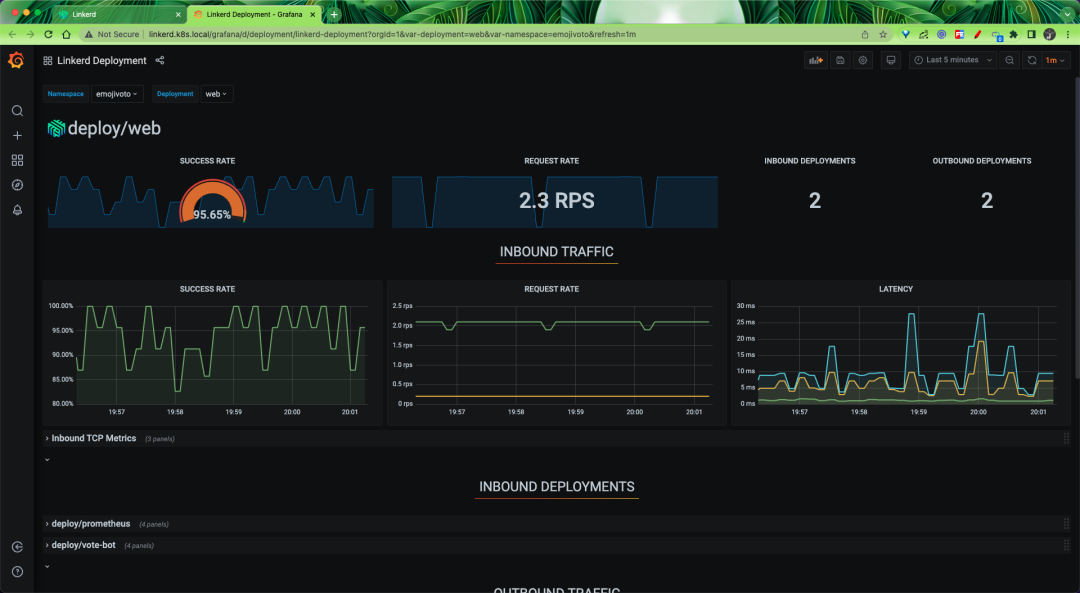

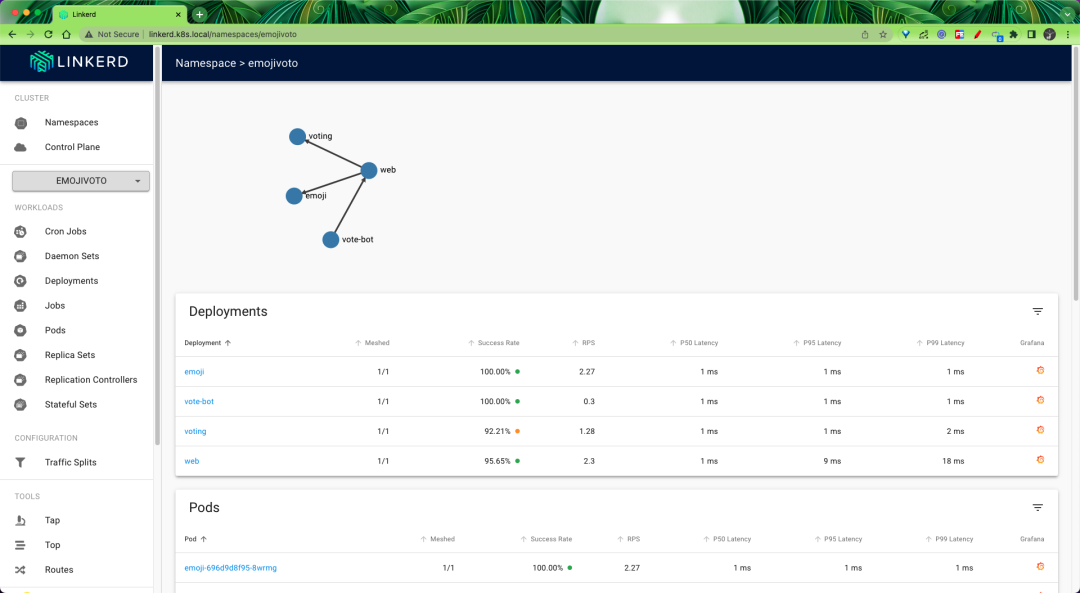

An automatically generated topology diagram can also be displayed.

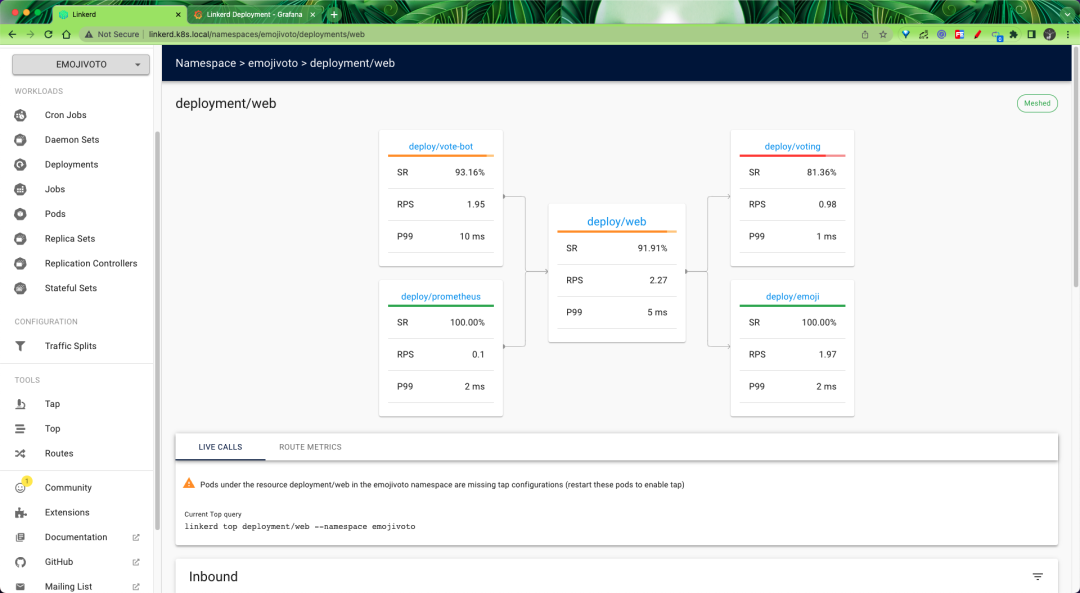

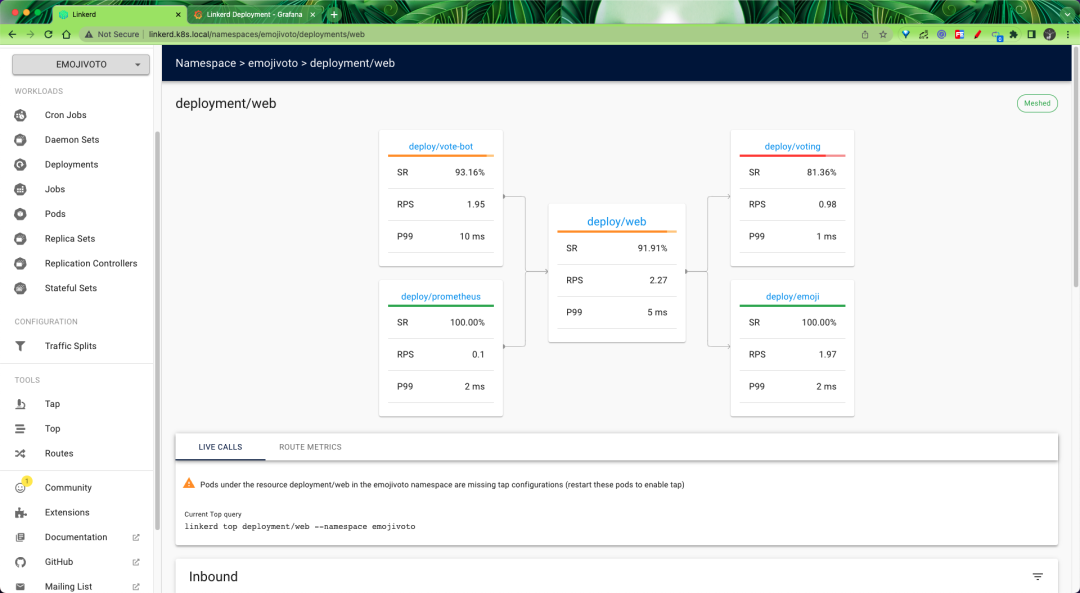

The page shows real-time metrics for each Emojivoto component, which lets us identify the component that is partially failing and then focus on that problem.
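The same per-deployment metrics are also available from the CLI with linkerd viz stat deploy -n emojivoto. A minimal sketch that flags deployments whose success rate is below a threshold, assuming the SUCCESS value is the third column and printed as a percentage, and that rows without traffic show a dash; check this against the stat output of your Linkerd version. low_success is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: read `linkerd viz stat` output on stdin and print
# "NAME SUCCESS" for every row whose success rate is below $1 percent
# (default 100). Assumes column 3 is SUCCESS, e.g. "93.72%"; rows where
# it is "-" (no traffic) are skipped.
low_success() {
  awk -v min="${1:-100}" 'NR > 1 && $3 != "-" {
    rate = $3
    sub(/%$/, "", rate)
    if (rate + 0 < min + 0) print $1, $3
  }'
}

# Usage:
#   linkerd viz stat deploy -n emojivoto | low_success 100
```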

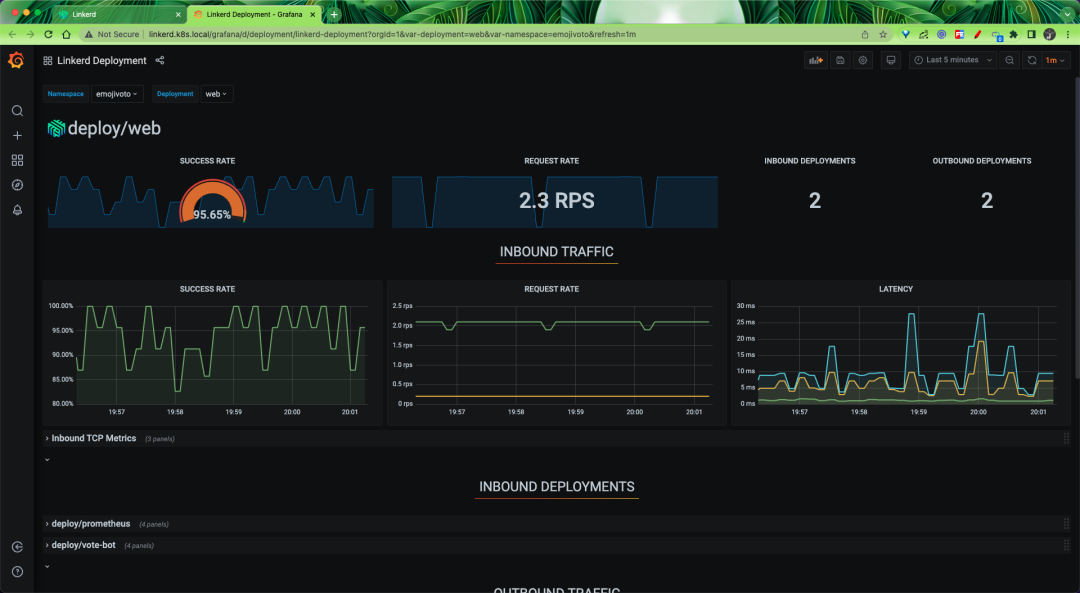

Each resource also has a Grafana icon; clicking it takes you to the corresponding Grafana monitoring dashboard.