Image credit: “Kubernetes Operators Explained”

A few years ago, I called Kubernetes the de facto standard for service orchestration and container scheduling, and today K8s is the unchallenged leader of the space. Although Kubernetes has grown very complex, its original data model, application model, and scaling approach remain valid, and application models and scaling methods such as the Operator are becoming increasingly popular with developers and operations engineers.

We run stateful backend services on our platform. Deploying and maintaining stateful services is exactly what k8s operators specialize in, so it is time to study operators.

1. Advantages of Operator

The concept of the Kubernetes operator originally came from CoreOS, a container technology company later acquired by Red Hat.

CoreOS introduced the operator concept along with the first reference implementations: the etcd operator and the Prometheus operator.

Note: etcd was released as open source by CoreOS in 2013; Prometheus, a time-series data storage and monitoring system for cloud-native services, was started at SoundCloud in 2012.

Here is how CoreOS described the Operator concept: an Operator represents, in software, the human operational knowledge needed to reliably manage an application.

Screenshot from the official CoreOS blog archive

The original purpose of Operator was to free up operations staff, and today Operator is becoming increasingly popular with cloud-native operations developers.

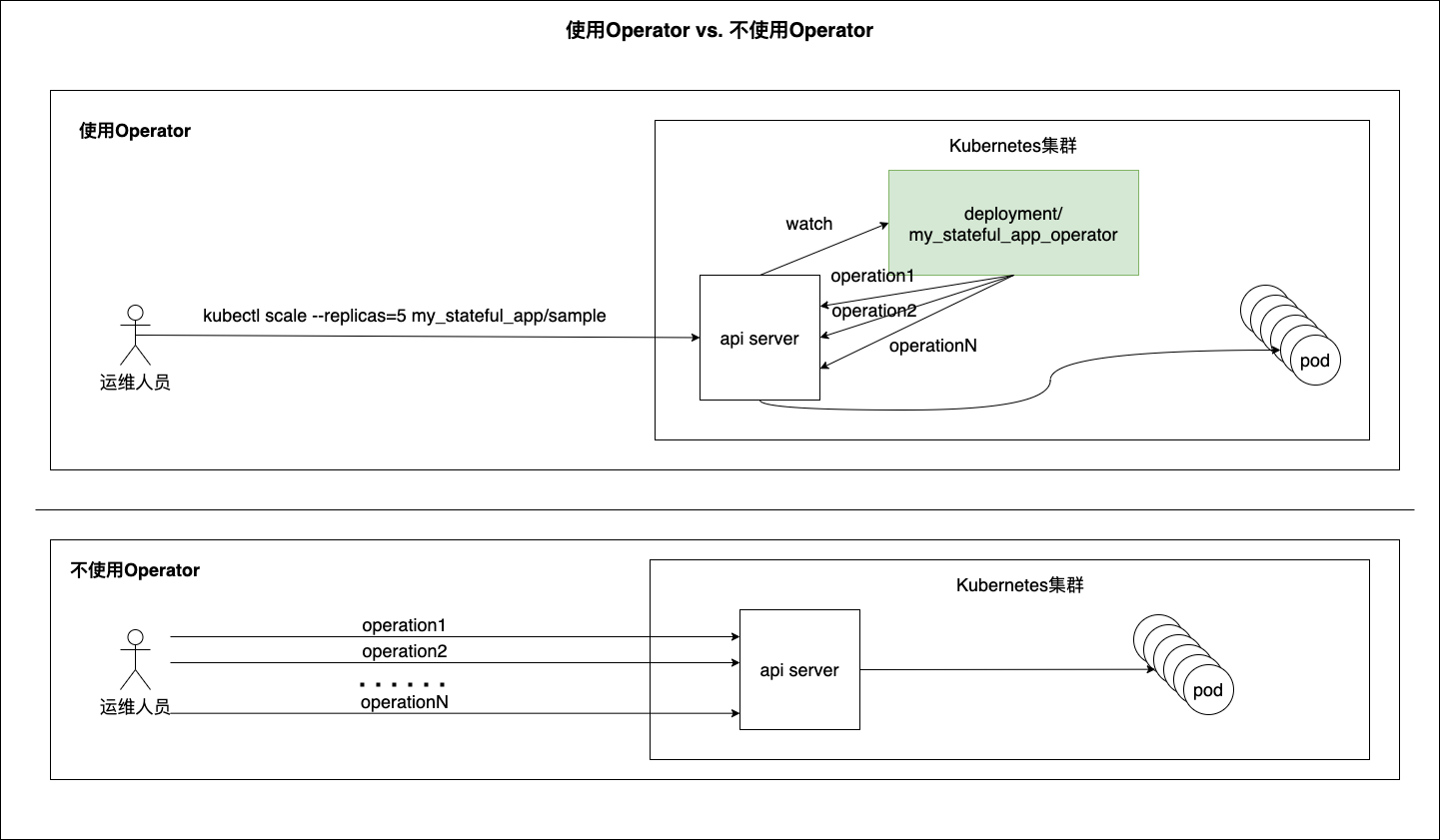

So what are the benefits of Operator? The following diagram compares the benefits of using Operator with those of not using it.

This diagram gives you an idea of the benefits of operators, even if you don’t know much about them.

With an operator, a single command is enough to scale a stateful application (scale-out in this case, but the same holds for other “complex” operations on stateful applications, such as version upgrades). Ops staff do not need to know how k8s scales a stateful application internally.

Without an operator, Ops staff need deep knowledge of every step involved in scaling a stateful application: they must run the commands one by one in sequence, check each response, and retry on failure until the scaling succeeds.

We can think of an operator as an experienced operations engineer built into k8s: it watches the state of the target object at all times, keeps the complexity to itself, and gives human operators a simple interface to interact with. It also reduces the chance of operational mistakes caused by human error.

However, good as operators are, the barrier to developing one is not low. It shows up in at least the following aspects.

- Understanding the operator concept builds on understanding k8s, which has grown increasingly complex since it was open-sourced in 2014 and takes some time to learn.

- Writing an operator by hand from scratch is very verbose and almost nobody does it; most developers learn a development framework or tool instead, such as kubebuilder or the Operator Framework SDK.

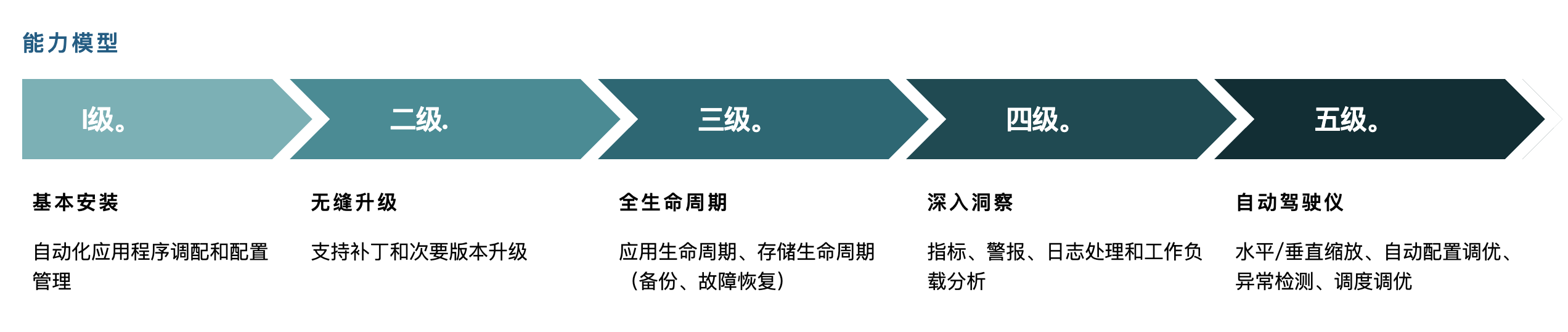

- Operators also differ in capability. The Operator Framework proposes a Capability Model with five levels of operator capability (see the following figure). Developing operators at the higher capability levels in Go requires a deep understanding of client-go, the official Kubernetes Go client library.

Screenshot from operator framework official website

Of these barriers, understanding the operator concept is both the foundation and the prerequisite, and it in turn presupposes a solid understanding of several Kubernetes concepts, especially resource, resource type, API, and controller, and the relationships between them. Let’s take a quick look at these concepts.

2. Introduction to Kubernetes resource, resource type, API and controller

Kubernetes has evolved to the point where its essence has become apparent.

- Kubernetes is a “database” (the data is actually stored persistently in etcd).

- Its API is a “SQL statement”.

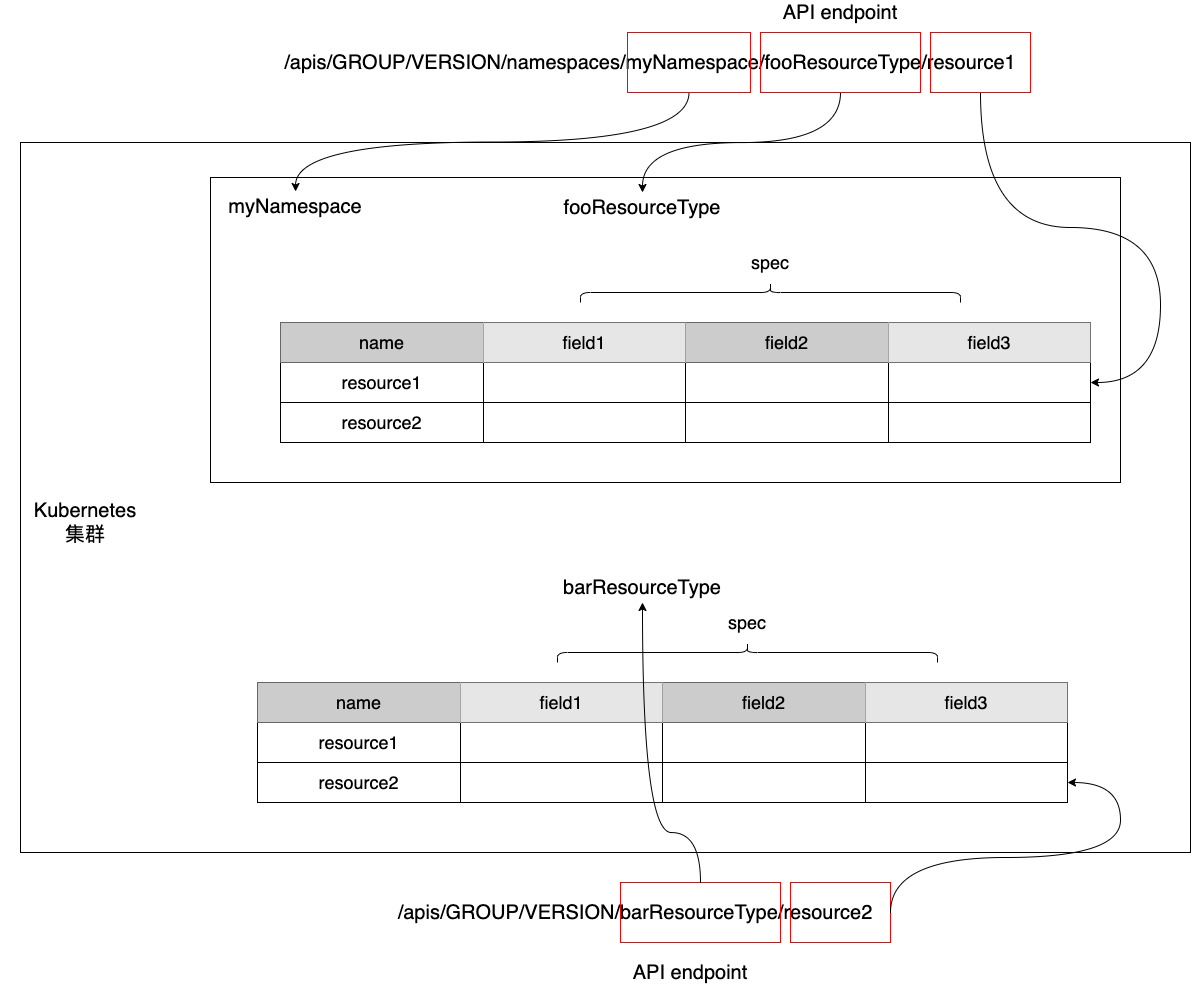

- The API is designed in a resource-based RESTful style, with the resource type being the endpoint of the API.

- Each type of resource (i.e. Resource Type) is a “table”, and the spec of the Resource Type corresponds to the “table structure” information (schema).

- A row in each “table” is a resource, i.e., an instance of the Resource Type corresponding to that table.

- Kubernetes has many built-in “tables”, such as Pod, Deployment, DaemonSet, ReplicaSet, etc.

The following is a schematic representation of the relationship between the Kubernetes API and resources.

There are two kinds of resource types. The first is namespace-scoped, and we manipulate instances of such resource types through an API of the following form.

The second kind is independent of namespaces, i.e., cluster-scoped, and we operate on instances of such resource types through an API of the following form.
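The two API forms can be illustrated with a small Go sketch. It follows the path layout described in the Kubernetes API concepts documentation (`/apis/GROUP/VERSION/namespaces/NAMESPACE/RESOURCETYPE/NAME` for namespace-scoped types, without the `namespaces/NAMESPACE` segment for cluster-scoped ones). The helper name `resourcePath` and the example resource names are hypothetical and only serve the illustration.

```go
package main

import (
	"fmt"
	"path"
)

// resourcePath is a hypothetical helper that builds the REST path of a resource.
// An empty group means the legacy core group, which is served under /api.
// An empty namespace means the resource type is cluster-scoped.
// An empty name yields the path of the whole collection.
func resourcePath(group, version, namespace, resourceType, name string) string {
	prefix := path.Join("/apis", group, version)
	if group == "" {
		prefix = path.Join("/api", version)
	}
	if namespace != "" {
		prefix = path.Join(prefix, "namespaces", namespace)
	}
	// path.Join ignores empty elements, so an empty name is simply dropped.
	return path.Join(prefix, resourceType, name)
}

func main() {
	// Namespace-scoped resource types.
	fmt.Println(resourcePath("apps", "v1", "default", "deployments", "nginx"))
	fmt.Println(resourcePath("", "v1", "default", "pods", ""))
	// Cluster-scoped resource types.
	fmt.Println(resourcePath("rbac.authorization.k8s.io", "v1", "", "clusterroles", "view"))
	fmt.Println(resourcePath("", "v1", "", "nodes", "node-1"))
}
```

The only difference between the two forms is the `namespaces/NAMESPACE` segment, which is why the sketch treats an empty namespace as "cluster-scoped".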

Kubernetes is of course more than a “database”: it is a platform standard for service orchestration and container scheduling. Its basic scheduling unit is the Pod (itself a resource type), which is a collection of containers. How are Pods created, updated, and deleted? That is the job of a controller. Each resource type has its own controller. Take the resource type Pod as an example: its controller is an instance of ReplicaSet.

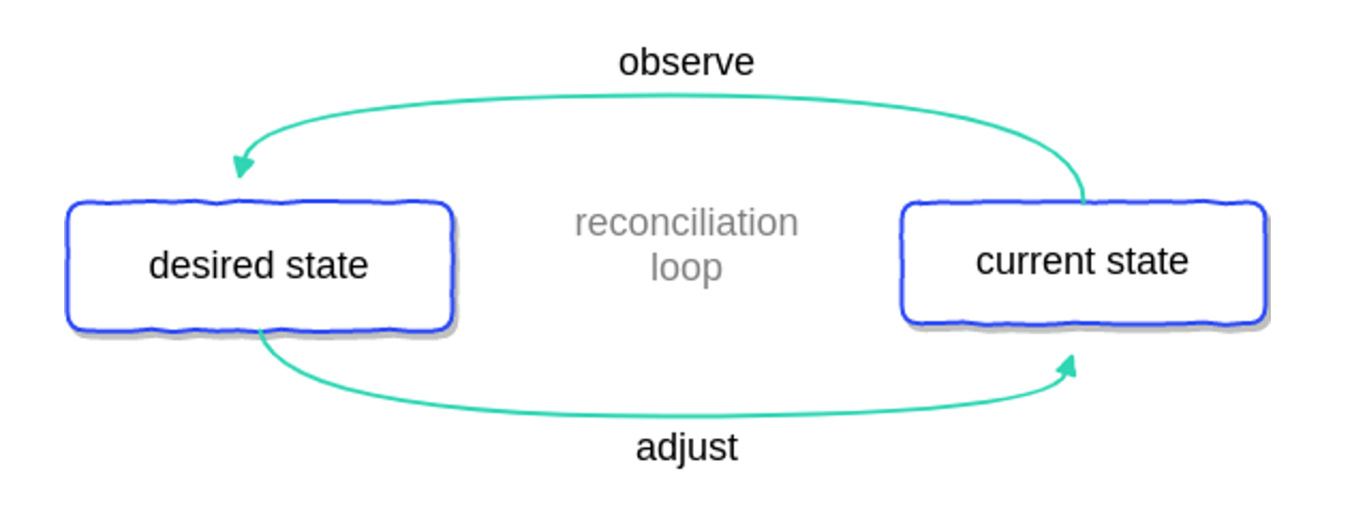

The operating logic of the controller is shown in the following diagram.

Quoted from the article “Kubernetes Operators Explained”

Once the controller starts, it fetches the current state of the resource and compares it with the desired state (spec) of the resource stored in k8s. If the two do not match, the controller calls the corresponding API to adjust the resource and bring the current state in line with the desired state. This adjustment process is called reconciliation, and its pseudo-code logic is as follows.
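The reconciliation process described above can be sketched in a few lines of Go. This is a toy model, not the code of any real controller: the `state` type, which reduces a resource to a replica count, and the `reconcile` function are assumptions made for illustration.

```go
package main

import "fmt"

// state is a toy stand-in for the state of a resource: just a replica count.
type state struct{ replicas int }

// reconcile compares the desired state with the current state and moves the
// current state one step closer. It reports whether the two now match.
func reconcile(desired state, current *state) bool {
	switch {
	case current.replicas < desired.replicas:
		current.replicas++ // e.g. create one more pod
	case current.replicas > desired.replicas:
		current.replicas-- // e.g. delete one pod
	}
	return current.replicas == desired.replicas
}

func main() {
	desired := state{replicas: 3}
	current := &state{replicas: 0}
	// A real controller loops forever; this sketch stops once the states agree.
	for round := 1; ; round++ {
		done := reconcile(desired, current)
		fmt.Printf("round %d: current=%d desired=%d\n", round, current.replicas, desired.replicas)
		if done {
			break
		}
	}
}
```

Note that `reconcile` never looks at what changed, only at the difference between the desired and the current state, so running it again after convergence is harmless.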

Note: k8s also has the concept of an object. So what is an object? It is similar to the Object base class in Java or the Object superclass in Ruby. Not only is a resource (an instance of a resource type) an object (is-a), but the resource type itself is also an object, which is an instance of a Kubernetes concept.

With the above initial understanding of these concepts of k8s, let’s understand what Operator really is!

3. Operator pattern = operation object (CRD) + control logic (controller)

If operations staff work directly with the built-in resource types (such as deployment and pod), which is the second case in the earlier “using operator vs. not using operator” comparison chart, they face a very complex situation, and their operations become complicated and error-prone.

So if we do not want to work with the built-in resource types directly, how do we define our own? Kubernetes provides the Custom Resource Definition (CRD) for customizing resource types. (When CoreOS first introduced the operator concept, the predecessor of the CRD was known as Third Party Resource, TPR.)

Following our understanding of resource types, defining a CRD is equivalent to creating a new “table” (resource type). Once the CRD is created, k8s automatically generates a corresponding API endpoint for it, and we can manipulate this “table” through yaml or the API. We can “insert” data into the “table”, i.e., create a Custom Resource (CR) based on the CRD, just as creating a Deployment instance inserts data into the Deployment “table”.

As with the native built-in resource types, a CR that merely stores the state of the object is not enough. Each native resource type has a corresponding controller that coordinates the creation, scaling, and deletion of its instances, and a CR needs such a “coordinator” too. That is, we also need to define a controller that watches the CR state, manages the creation, scaling, and deletion of CRs, and keeps the current state consistent with the desired state (spec). This controller no longer targets instances of a native resource type; it targets CRs, the instances of the CRD.

A custom operation object type (CRD) together with a controller for instances of that type is packaged into one concept: the “Operator pattern”. The controller in the operator pattern is itself also called the operator, and it is the component in the cluster that performs maintenance operations on CRs.
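To make the idea of a controller that listens for CR changes more concrete, here is a minimal Go sketch. It assumes that each change event carries only the identity of the CR (namespace and name), as reconcile requests in controller-runtime do, and that the controller looks up the full state itself. The `request` and `controller` types and the channel-based event source are hypothetical simplifications, not part of any real library.

```go
package main

import "fmt"

// request identifies the custom resource that changed; it carries no state.
type request struct{ namespace, name string }

// controller reacts to change events for custom resources.
type controller struct {
	reconciled []string // keys reconciled so far, kept only for illustration
}

// reconcile would read the CR's spec and adjust the cluster accordingly;
// in this sketch it only records which CR it was asked to look at.
func (c *controller) reconcile(req request) {
	c.reconciled = append(c.reconciled, req.namespace+"/"+req.name)
}

// run drains the event channel and reconciles each request until the
// channel is closed.
func (c *controller) run(events <-chan request) {
	for req := range events {
		c.reconcile(req)
	}
}

func main() {
	events := make(chan request, 2)
	events <- request{"default", "webserver-sample"} // CR created
	events <- request{"default", "webserver-sample"} // CR spec updated
	close(events)

	c := &controller{}
	c.run(events)
	fmt.Println(c.reconciled)
}
```

Because the event only says which CR to look at, the same reconcile logic handles creation, updates, and repairs alike.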

4. Developing a webserver operator with kubebuilder

Assumptions: At this point, your local development environment has everything you need to access your experimental k8s environment, and you can manipulate k8s at will through the kubectl tool.

Hands-on experience helps more than further explanation of the concept, so let’s develop a simple Operator.

As mentioned before, operator development is very verbose, so the community provides tools and frameworks to simplify the development process. The mainstream ones today are the Operator Framework SDK and kubebuilder. The former is a set of tools open-sourced and maintained by Red Hat that supports operator development with Go, Ansible, and Helm (of which only Go can produce operators up to capability level 5; the other two cannot). kubebuilder is an operator development tool maintained by an official Kubernetes SIG (special interest group). Currently, when you develop operators with the Operator Framework SDK and Go, the SDK also uses kubebuilder under the hood, so here we will use kubebuilder directly.


According to the operator capability model, our operator sits at roughly level 2. We define a resource type called WebServer, which represents an nginx-based webserver cluster. Our operator supports creating WebServer instances (an nginx cluster), scaling the nginx cluster, and upgrading the nginx version in the cluster.

Let’s use kubebuilder to implement this operator!

1. Installing kubebuilder

Here we use the source code build method to install, and the steps are as follows.

Then just copy bin/kubebuilder to one of the paths in your PATH environment variable.

2. Creating the webserver-operator project

Next, we can use kubebuilder to create the webserver-operator project.

Note: --repo specifies the module root path in go.mod; you can define your own module root path.

3. Creating the API and generating the initial CRD

The Operator consists of a CRD and a controller. Here we create our own CRD, i.e., a custom resource type, which is also the endpoint of the API. We use the following kubebuilder create api command to complete this step.

After that, we execute make manifests to generate the yaml file corresponding to the final CRD.

At this moment, the directory file layout of the entire project is as follows.

4. The basic structure of the webserver-operator

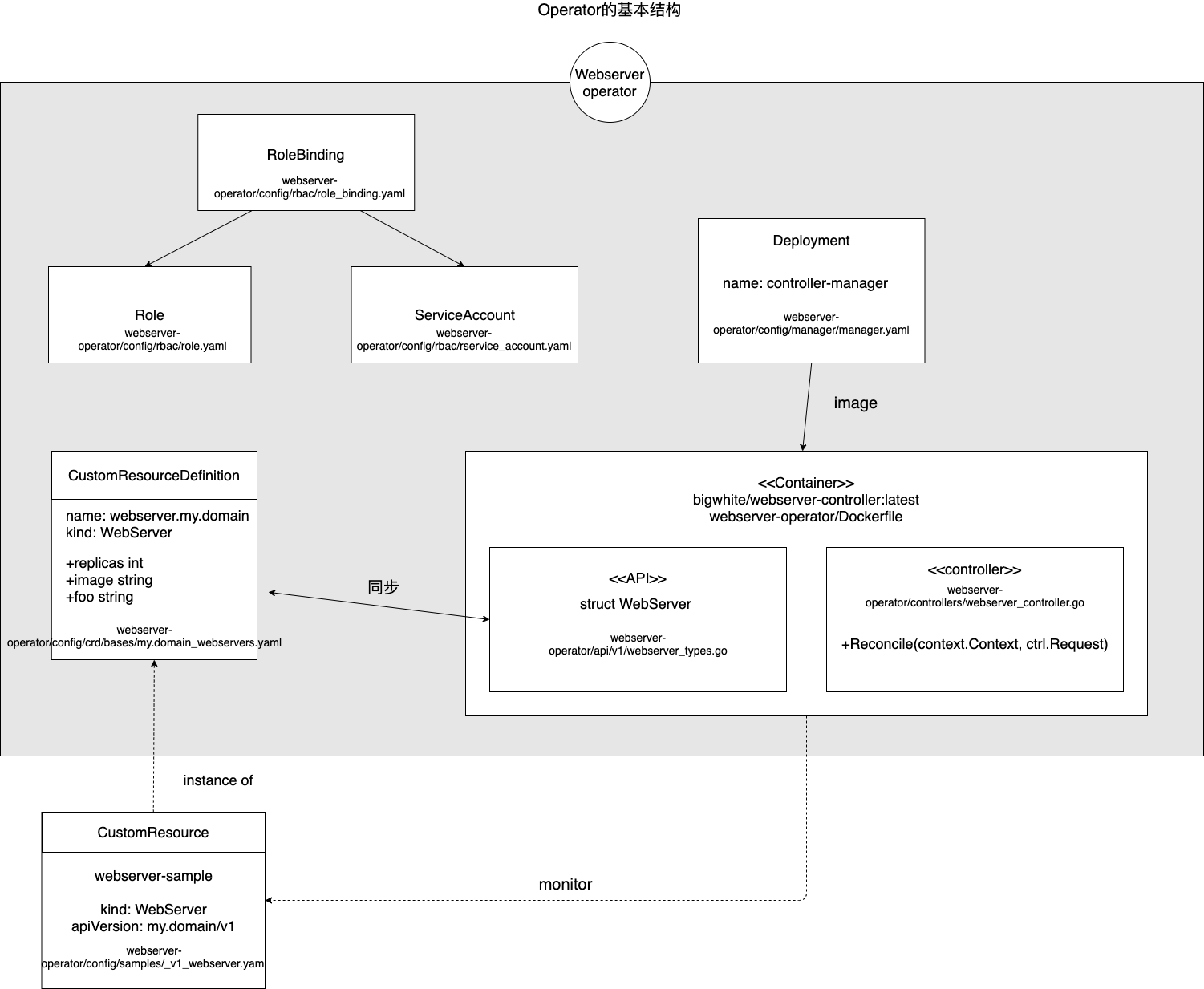

Ignoring things like leader election, auth_proxy, etc. that we don’t care about this time, I’ve organized the main parts of this operator example into the following diagram.

The parts of the diagram are the basic structure of the operator generated using kubebuilder.

The webserver operator consists mainly of a CRD and a controller.

- CRD

The box in the lower left corner of the diagram is the CRD yaml file generated above: config/crd/bases/my.domain_webservers.yaml. The CRD is closely tied to api/v1/webserver_types.go: we define the spec fields for the CRD in api/v1/webserver_types.go, and the make manifests command then parses the changes in webserver_types.go and updates the CRD yaml file.

- controller

The right part of the diagram shows that the controller itself runs in the k8s cluster as a deployment. It watches the state of the CRs (the instances of the CRD) and, in its Reconcile method, checks whether the desired state matches the current state; if not, it performs the relevant actions.

- Other

The top left corner of the diagram covers the controller’s permission settings. The controller accesses the k8s API server through a service account, and its role and permissions are set through role.yaml and role_binding.yaml.

5. Adding fields to the CRD spec

To achieve the functional goals of the webserver operator, we need to add some fields to the CRD spec. As mentioned before, the CRD is kept in sync with the webserver_types.go file under api, so we only need to modify webserver_types.go. We add Replicas and Image fields to the WebServerSpec struct, which indicate the number of replicas of the webserver instance and the container image to use, respectively.
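As a rough sketch of what the modified struct might look like: the field names Replicas and Image come from the text above, while the field types and JSON tags are assumptions. In the real project the struct lives in api/v1/webserver_types.go inside the scaffolded package; the `main` function and the `specJSON` helper here exist only to make the sketch runnable on its own.

```go
package main

import (
	"encoding/json"
	"fmt"
)

// WebServerSpec defines the desired state of a WebServer.
// Field types and JSON tags are assumptions made for this sketch.
type WebServerSpec struct {
	// Replicas is the desired number of webserver instances.
	Replicas int `json:"replicas,omitempty"`
	// Image is the container image used by each instance.
	Image string `json:"image,omitempty"`
}

// specJSON is a hypothetical helper that renders a spec as JSON, i.e. the
// shape the fields would take under "spec" in a CR manifest.
func specJSON(s WebServerSpec) string {
	out, err := json.Marshal(s)
	if err != nil {
		return ""
	}
	return string(out)
}

func main() {
	fmt.Println(specJSON(WebServerSpec{Replicas: 3, Image: "nginx:1.23.1"}))
}
```

The JSON tags determine the field names that appear in the generated CRD schema and in CR manifests, which is why they are lowercase here.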

After saving the changes, execute make manifests to regenerate config/crd/bases/my.domain_webservers.yaml.

Once the CRD is defined, we can install it into k8s.

Check the installation.

6. Modifying role.yaml

Before we start developing the controller, let’s “pave the way” for running it later, i.e., set the appropriate permissions.

In the controller we will create the corresponding deployments and services for each CRD instance, which requires the controller to have permission to operate on deployments and services. We therefore modify role.yaml to grant the controller-manager service account permission to manipulate deployments and services.

We leave the modified role.yaml in place for now; it will be deployed to k8s together with the controller.

7. Implementing the controller’s Reconcile (coordination) logic

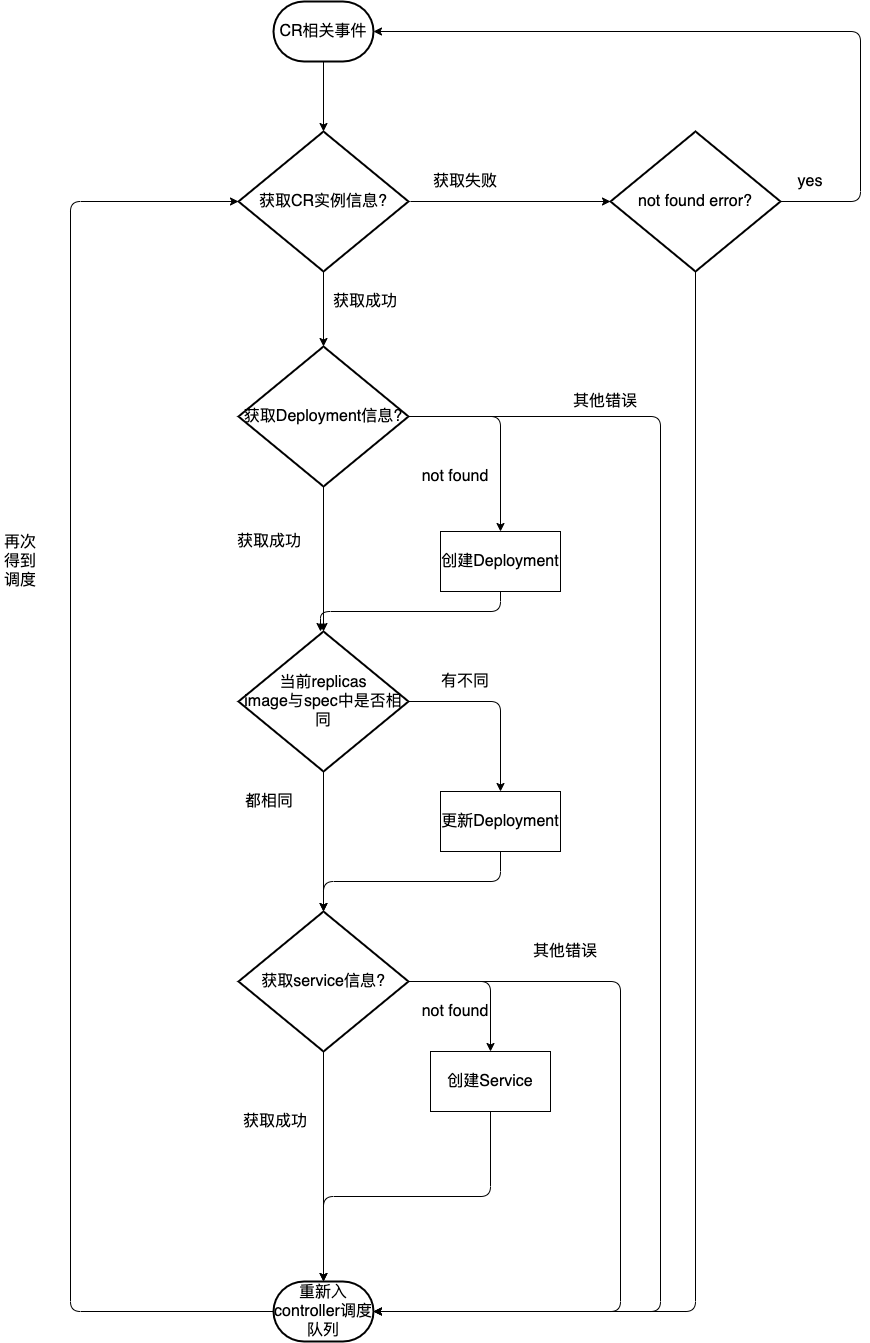

kubebuilder has generated the code skeleton of the controller for us; we only need to implement the Reconcile method of WebServerReconciler in controllers/webserver_controller.go. Here is a simple flowchart of Reconcile; the code is much easier to understand with this diagram in mind.

The following is the code for the corresponding Reconcile method.
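The sketch below models the decision flow of such a Reconcile method in plain Go against an in-memory fake instead of a real API server. It is not the article's actual controller code: the types `WebServer`, `Deployment`, and `cluster`, the function `reconcile`, and the action strings are all hypothetical. The flow itself (create the deployment if missing, otherwise sync replicas and image, then create the service if missing) and the "-service" name suffix are assumptions inferred from the controller logs shown later in this article.

```go
package main

import "fmt"

// WebServer is a simplified stand-in for the custom resource (CR).
type WebServer struct {
	Replicas int
	Image    string
}

// Deployment is a simplified stand-in for the native Deployment resource.
type Deployment struct {
	Replicas int
	Image    string
}

// cluster is an in-memory fake of the API server, keyed by object name.
type cluster struct {
	webservers  map[string]WebServer
	deployments map[string]*Deployment
	services    map[string]bool
}

// reconcile drives the Deployment and Service toward the state declared in
// the CR and returns the actions it took.
func reconcile(c *cluster, name string) []string {
	ws, ok := c.webservers[name]
	if !ok {
		return nil // the CR is gone; deletion is not handled in this sketch
	}
	var actions []string
	dep, ok := c.deployments[name]
	if !ok {
		c.deployments[name] = &Deployment{Replicas: ws.Replicas, Image: ws.Image}
		actions = append(actions, "create deployment")
	} else {
		if dep.Replicas != ws.Replicas {
			dep.Replicas = ws.Replicas
			actions = append(actions, "update replicas")
		}
		if dep.Image != ws.Image {
			dep.Image = ws.Image
			actions = append(actions, "update image")
		}
	}
	svc := name + "-service"
	if !c.services[svc] {
		c.services[svc] = true
		actions = append(actions, "create service")
	}
	return actions
}

func main() {
	c := &cluster{
		webservers:  map[string]WebServer{"webserver-sample": {Replicas: 3, Image: "nginx:1.23.1"}},
		deployments: map[string]*Deployment{},
		services:    map[string]bool{},
	}
	fmt.Println(reconcile(c, "webserver-sample")) // first run creates both objects

	c.webservers["webserver-sample"] = WebServer{Replicas: 4, Image: "nginx:1.23.1"}
	fmt.Println(reconcile(c, "webserver-sample")) // scale-out

	delete(c.services, "webserver-sample-service")
	fmt.Println(reconcile(c, "webserver-sample")) // self-healing of the service
}
```

In the real controller, each map lookup or update corresponds to a call against the k8s API server, but the comparison logic keeps the same shape.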

You may notice here that the CRD controller ultimately translates the CR into native k8s resources such as service and deployment. State changes in the CR (here, replicas and image) are ultimately converted into operations on native resources such as the deployment. This is the essence of an operator! Once you understand this, the operator is no longer an unapproachable concept.

Some readers may also notice that the flowchart above does not handle the deployment and service when the CR instance is deleted. That is true. But for a 7×24 service running in the background, we care more about changes, scaling, upgrades, and similar operations; deletion is the lowest-priority requirement.

8. Building the controller image

Once the controller code is written, we build the controller image. As we saw earlier, the controller actually runs as a pod in k8s, managed by a deployment, so we need to build its image and deploy it to k8s through a deployment.

The operator project created by kubebuilder contains a Makefile, and the controller image can be built with make docker-build. docker-build uses the golang builder image to compile the controller source code, but unless you modify the Dockerfile slightly, the build is likely to fail, because the default GOPROXY is not accessible in China. The easiest fix is to build from a vendor directory; here is the modified Dockerfile.

The following are the steps of the build.

Note: Before executing the make command, change the initial value of the IMG variable in the Makefile to IMG ?= bigwhite/webserver-controller:latest

After a successful build, execute make docker-push to push the image to the image repository (the public repository provided by docker is used here).

9. Deploying the controller

We have already installed the CRD into k8s by make install, next we deploy the controller to k8s and our operator is deployed. Execute make deploy to achieve deployment.

We see that deploy not only installs the controller, serviceaccount, role, and rolebinding, but also creates the namespace and installs the CRD again. In other words, deploy is a complete operator installation command.

Note: Use make undeploy to completely uninstall operator-related resources.

We use kubectl logs to view the controller’s operational logs.

As you can see, the controller has been successfully started and is waiting for a WebServer CR related event (e.g. creation). Let’s create a WebServer CR!

10. Creating the WebServer CR

There is a CR sample in the webserver-operator project, located under config/samples, which we modify to add the fields we added in the spec.

We create this WebServer CR via kubectl.

Observe the controller’s log.

We see that when the CR is created, the controller picks up the relevant event and creates the corresponding Deployment and service. Let’s take a look at the Deployment, the three Pods, and the service created for the CR.

Let’s request the service.

The service returns a response as expected!

11. Scaling, Changing Versions, and Service Self-Healing

Next, let’s do some common O&M operations on CR.

- Number of replicas changed from 3 to 4

We change the replicas of the CR from 3 to 4 to scale out the webserver instances.

Then make it effective by kubectl apply.

After the above command is executed, we observe the controller log of operator as follows.

    1.660208962767797e+09 INFO controllers.WebServer Deployment spec.replicas change {"Webserver": "default/webserver-sample", "from": 3, "to": 4}

Later, check the number of pods.

The number of webserver pod replicas was successfully expanded from 3 to 4.

- Changing the webserver image version

We change the image version in the CR from nginx:1.23.1 to nginx:1.23.0 and then execute kubectl apply to make it take effect.

We check the response log of the controller as follows.

    1.6602090494113188e+09 INFO controllers.WebServer Deployment spec.template.spec.container[0].image change {"Webserver": "default/webserver-sample", "from": "nginx:1.23.1", "to": "nginx:1.23.0"}

The controller updates the deployment, causing a rolling upgrade of the pods under its jurisdiction.

    $kubectl get pods
    NAME                               READY   STATUS              RESTARTS   AGE
    webserver-sample-bc698b9fb-8gq2h   1/1     Running             0          10m
    webserver-sample-bc698b9fb-vk6gw   1/1     Running             0          10m
    webserver-sample-bc698b9fb-xgrgb   1/1     Running             0          10m
    webserver-sample-ffcf549ff-g6whk   0/1     ContainerCreating   0          12s
    webserver-sample-ffcf549ff-ngjz6   0/1     ContainerCreating   0          12s

After waiting patiently for a short while, the final pod list is as follows.

- Service self-healing: restoring a service that was deleted by mistake

Let’s make a “mistake” and delete webserver-sample-service to see whether the controller can help the service heal itself.

View controller logs.

    1.6602096994710526e+09 INFO controllers.WebServer Creating a new Service {"Webserver": "default/webserver-sample", "Service.Namespace": "default", "Service.Name": "webserver-sample-service"}

We see that the controller detected that the service had been deleted and created a new one!

Request the newly created service.

    curl http://192.168.10.182:30010
    <!DOCTYPE html>
    <html>
    <head>
    <title>Welcome to nginx!</title>
    <style>
    html { color-scheme: light dark; }
    body { width: 35em; margin: 0 auto;
    font-family: Tahoma, Verdana, Arial, sans-serif; }
    </style>
    </head>
    <body>
    <h1>Welcome to nginx!</h1>
    <p>If you see this page, the nginx web server is successfully installed and
    working. Further configuration is required.</p>

    <p>For online documentation and support please refer to
    <a href="http://nginx.org/">nginx.org</a>.<br/>
    Commercial support is available at
    <a href="http://nginx.com/">nginx.com</a>.</p>

    <p><em>Thank you for using nginx.</em></p>
    </body>
    </html>

You can see that the service has finished healing itself with the help of the controller!

5. Summary

In this article, we have introduced the concept and advantages of Kubernetes Operator, and developed a level 2 operator based on kubebuilder, which is still a long way from perfection.

I believe that after reading this article, you will have a clear understanding of the operator, especially its basic structure, and will be able to develop a simple operator!

The source code for this article can be downloaded from here.

6. References

- https://tonybai.com/2022/08/15/developing-kubernetes-operators-in-go-part1/

- Kubernetes Operators 101, Part 1: Overview and key features

- Kubernetes Operators 101, Part 2: How operators work

- Operator SDK: Build Kubernetes Operators

- Kubernetes doc: Custom Resources

- Kubernetes doc: Operator pattern

- Kubernetes doc: API concepts

- Introducing Operators: Putting Operational Knowledge into Software

- CNCF Operator White Paper v1.0

- Best practices for building Kubernetes Operators and stateful apps

- A deep dive into Kubernetes controllers

- Kubernetes Operators Explained

- Books: Kubernetes Operator

- Books: Programming Kubernetes

- Operator SDK Reaches v1.0

- What is the difference between kubebuilder and operator-sdk

- Kubernetes Operators in Depth

- Get started using Kubernetes Operators

- Use Kubernetes operators to extend Kubernetes’ functionality

- memcached operator