When learning C, you run into a strange keyword, volatile. What exactly is it for?

volatile and the compiler

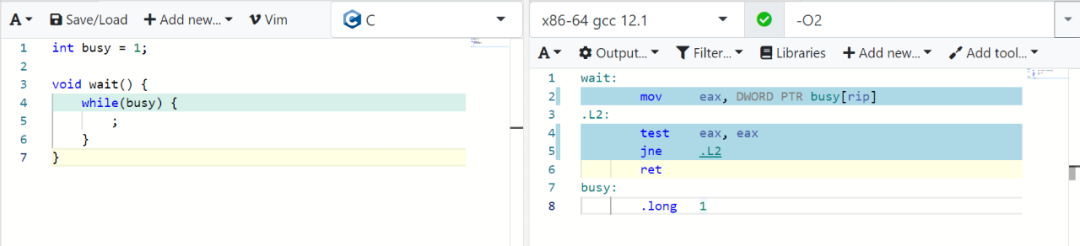

First, consider a piece of code like this.
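The original listing is not reproduced in this text. The following is a minimal sketch of the kind of code being discussed, assuming a global int flag busy that some other party is expected to clear; the function names wait_busy and signal_busy are hypothetical (chosen to avoid clashing with POSIX wait() and signal()).

```c
/* Hypothetical reconstruction: a plain (non-volatile) flag that some
 * other party is expected to clear. */
int busy = 1;

/* Spin until busy becomes 0. Because busy is not volatile and the loop
 * body never writes it, an optimizing compiler (e.g. gcc -O2) may load
 * busy once and then keep testing that cached copy. */
void wait_busy(void)
{
    while (busy) {
        /* busy-wait */
    }
}

/* Meant to be called from somewhere else (another thread, for example)
 * to release the waiter. */
void signal_busy(void)
{
    busy = 0;
}
```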

Compile it with optimization enabled; note that -O2 is used here:

Let’s take a closer look at the generated assembly.

As you can see, the decision whether to leave the loop is made by testing the eax register, not by re-reading the memory that actually holds the variable busy.
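In C terms, the optimized code behaves roughly as if the loop had been rewritten as below. This is only an illustrative model of the transformation, not literal compiler output, and the name wait_busy_as_optimized is hypothetical.

```c
int busy = 1;

/* Roughly what the optimized loop does: busy is loaded from memory a
 * single time (into a register such as eax) and the loop then tests
 * only that copy. If busy was nonzero on entry, the loop never ends,
 * no matter who changes busy afterwards. */
void wait_busy_as_optimized(void)
{
    int cached = busy;      /* the one and only load from memory */
    while (cached) {
        /* busy is never re-read here */
    }
}
```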

Note that this optimization is correct for the code as written. The problem is that if some other code modifies busy, the optimization means that modification never takes effect as far as the loop is concerned, as in the following scenario.

If the machine instructions for the while loop in the wait function read busy only from a register, then even when thread B’s signal function modifies busy, the wait function will never leave the loop.

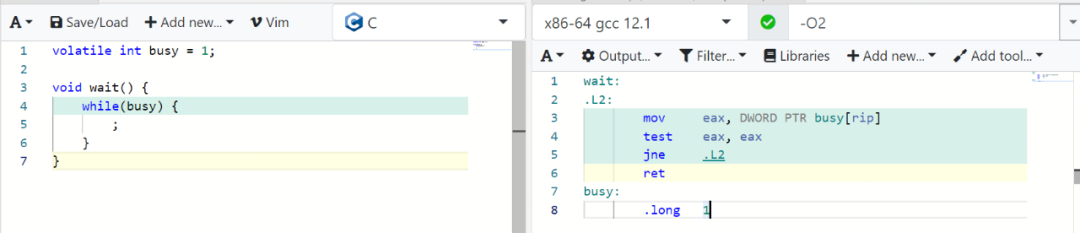

If you declare busy with the volatile qualifier, the generated instructions look like this.

Note that the loop at label .L2 now loads busy from memory into eax on every iteration before testing it, which guarantees that the latest value of busy is read each time.
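A minimal sketch of the volatile-qualified version in C, assuming a global int flag busy and hypothetical helper names wait_busy and signal_busy:

```c
/* The flag is now volatile: every read and write of busy that appears
 * in the source must actually be performed on memory. */
volatile int busy = 1;

/* Spin until busy becomes 0; each iteration reloads busy from memory,
 * so a change made elsewhere is eventually noticed. */
void wait_busy(void)
{
    while (busy) {
        /* busy-wait */
    }
}

/* A volatile write: the compiler may not drop or defer this store. */
void signal_busy(void)
{
    busy = 0;
}
```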

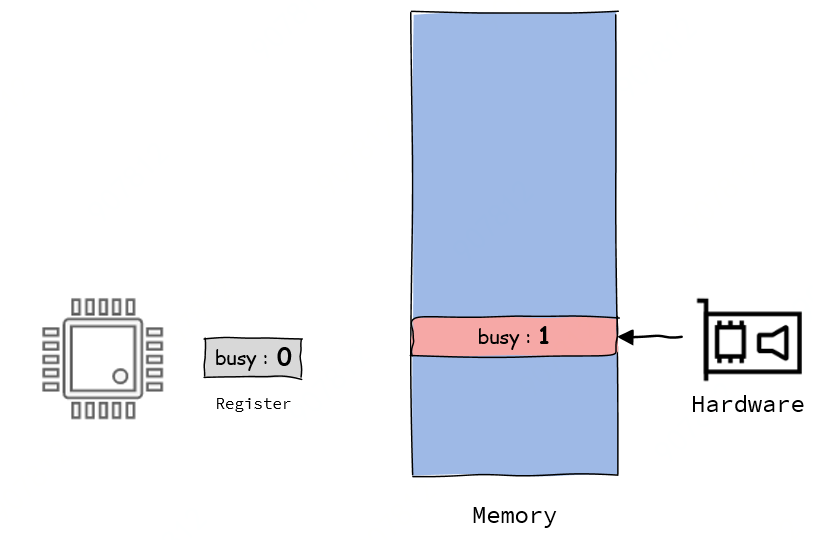

In fact, you can think of the register eax as a cache for the memory that holds busy. As long as the cache (the register) and memory agree, there is no problem; but when they disagree (memory has been updated while the register still holds the old value), the program often behaves unexpectedly.

Besides the multithreaded case, the same problem arises when a variable is modified by a signal handler or by hardware (common when C code interacts with devices). If the compiler generates instructions like the ones at the beginning of the article, the waiting code will never notice the change made by the signal handler or the hardware.
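For the signal-handler case, the standard C idiom is a flag of type volatile sig_atomic_t. Below is a minimal sketch; the names got_signal, on_sigint and wait_for_sigint_demo are hypothetical, and raise() merely stands in for a signal arriving from outside.

```c
#include <signal.h>

/* volatile: the handler can change the flag between two reads in the
 * normal flow of the program, so every read must go to memory.
 * sig_atomic_t: the integer type C provides for flags shared with a
 * signal handler. */
static volatile sig_atomic_t got_signal = 0;

static void on_sigint(int signo)
{
    (void)signo;
    got_signal = 1;
}

/* Install the handler, deliver SIGINT to ourselves, then poll the flag
 * the way a main loop would. Returns 1 once the flag is seen, or -1 if
 * the handler could not be installed. */
int wait_for_sigint_demo(void)
{
    if (signal(SIGINT, on_sigint) == SIG_ERR) {
        return -1;
    }
    raise(SIGINT);  /* raise() returns only after the handler has run */
    while (!got_signal) {
        /* a real program would do other work or sleep here */
    }
    return 1;
}
```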

So here we need to tell the compiler: “Don’t be clever. Don’t just read this value from a register; it may have been modified elsewhere, so fetch the latest value from memory every time you use it.”

To briefly summarize: volatile only stops the compiler from optimizing away accesses to the variable. Every read (and every write) of it in the source must actually be performed on memory.

volatile and multithreading

Be sure to note that volatile only ensures the visibility of a variable; it has nothing at all to do with atomic access to that variable. These are two completely different problems.

Suppose there is a fairly complex structure, struct foo.

Declaring the variable foo volatile only ensures that after thread1 modifies it, thread2 reads the latest value. It does not solve the problem of concurrent reads and writes from multiple threads, which require atomic access to foo as a whole.

Atomic access is generally ensured with locks. A lock also provides what volatile would have given you, namely visibility of the variable: data written before the lock is released is visible to the next thread that acquires it. So there is no need for volatile when locks are used.
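A minimal sketch of the lock-based approach using POSIX threads. The fields of struct foo and the helper names foo_update and foo_snapshot are hypothetical, since the original definition is not shown; compile with -pthread on systems that need it.

```c
#include <pthread.h>

/* Hypothetical stand-in for the article's "very complex" struct foo. */
struct foo {
    int  id;
    long balance;
};

/* No volatile needed: every access goes through the mutex, and
 * locking/unlocking already makes the latest writes visible to the
 * next thread that takes the lock. */
static struct foo shared_foo;
static pthread_mutex_t foo_lock = PTHREAD_MUTEX_INITIALIZER;

/* Update several fields as one indivisible step. */
void foo_update(int id, long balance)
{
    pthread_mutex_lock(&foo_lock);
    shared_foo.id = id;
    shared_foo.balance = balance;
    pthread_mutex_unlock(&foo_lock);
}

/* Copy the whole struct under the lock, so a reader never sees a
 * half-updated mix of old and new fields. */
struct foo foo_snapshot(void)
{
    pthread_mutex_lock(&foo_lock);
    struct foo copy = shared_foo;
    pthread_mutex_unlock(&foo_lock);
    return copy;
}
```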

volatile and memory order

Some of you may be thinking: what if the variable I declare volatile is not that complicated, just an int, like this?

Could thread A keep reading busy while thread B updates it, and have A perform some specific action once it detects the change? Since volatile guarantees that busy is read from memory every time, it seems this should work.

However, computers may be simple in concept, but they are complex in engineering practice.

Because of the large speed gap between the CPU and memory, there is a layer of cache between them, and the CPU does not actually read memory directly. Caches complicate the problem further, but for reasons of space and scope this article will not go into them here.

To speed up memory access, the CPU may also reorder memory read and write operations. The consequence is this: suppose line N and line N+1 of thread 1 execute in that order; thread 2 may nevertheless observe line N+1 taking effect first. Assume X is initially 0 and busy is initially 1.

So when thread 2 reads X after seeing busy become 0, it may still read the old value 0.
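A minimal sketch of the two threads’ code, assuming that lines N and N+1 are the store to X and the clearing of busy (the function names thread1_publish and thread2_consume are hypothetical):

```c
/* Hypothetical reconstruction: X is ordinary data, busy is a volatile
 * flag. Initially X == 0 and busy == 1. */
int X = 0;
volatile int busy = 1;

/* Thread 1: publish the data, then clear the flag. */
void thread1_publish(void)
{
    X = 1;      /* line N   */
    busy = 0;   /* line N+1 */
}

/* Thread 2: wait for the flag, then read the data.
 * BROKEN when run concurrently: volatile forces busy to be re-read,
 * but nothing orders the store to X before the store to busy. The
 * compiler may move the non-volatile store to X, and a weakly ordered
 * CPU (ARM, for example) may make the stores, or the two loads here,
 * take effect out of order, so this can return 0. */
int thread2_consume(void)
{
    while (busy) {
        /* spin */
    }
    return X;
}
```

Called one after the other on a single thread, this always returns 1; the problem only shows up when the two functions run concurrently on different cores.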

What solves this problem is not volatile, which does nothing about reordering, but a memory barrier.

A memory barrier is a class of machine instructions that constrains how the processor may order the memory operations before and after the barrier, so that the reordering problem above cannot occur.
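In portable C11, a barrier can be written with atomic_thread_fence from stdatomic.h. This is a swapped-in standard-library mechanism rather than raw barrier instructions: the compiler emits whatever barrier instruction, if any, the target CPU needs. A minimal sketch, with hypothetical names:

```c
#include <stdatomic.h>

/* The flag becomes a C11 atomic; X stays an ordinary int.
 * Initially X == 0 and busy == 1. */
int X = 0;
atomic_int busy = 1;

/* Thread 1: the release fence prevents the store to X from being
 * reordered after the store to busy (by the compiler or the CPU). */
void thread1_publish(void)
{
    X = 1;
    atomic_thread_fence(memory_order_release);
    atomic_store_explicit(&busy, 0, memory_order_relaxed);
}

/* Thread 2: the acquire fence prevents the read of X from happening
 * before busy was observed as 0. Once the loop exits, the store
 * X = 1 is guaranteed to be visible. */
int thread2_consume(void)
{
    while (atomic_load_explicit(&busy, memory_order_relaxed) != 0) {
        /* spin */
    }
    atomic_thread_fence(memory_order_acquire);
    return X;
}
```

Marking the store itself memory_order_release and the load memory_order_acquire, without separate fences, gives the same guarantee and is the more common style.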

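A memory barrier (and the atomic operations that usually come with it) also stops the compiler from keeping the variable cached in a register across it, so it already covers what volatile provided, and volatile is not needed.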

As you can see, there is almost never a reason to use the volatile keyword in multithreaded code.