Preface

This article compares Python's concurrency options in depth, weighing their advantages and disadvantages, with a focus on asyncio.

Note: The code in this article requires Python 3.10 or later.

Python Concurrency and Parallelism Schemes

Python offers three main approaches to concurrency and parallelism:

- multi-threading

- multiprocessing

- asynchronous IO (asyncio)

Note: I won't explain the difference between concurrency and parallelism yet; it is easier to explain with examples at the end. concurrent.futures is also discussed later, but it is not a standalone approach, so it is not listed here.

These approaches address performance bottlenecks with different characteristics. There are two main types of performance problems:

- CPU-bound (CPU-intensive): tasks dominated by heavy computation. Examples include executing the methods of Python's built-in objects, scientific computing, and video transcoding.

- I/O-bound (I/O-intensive): tasks involving network access, disk I/O, and similar operations. They consume little CPU and spend most of their time waiting for I/O operations to complete. Examples include database queries, web services, and reading and writing files.

If you are unsure which type a task is, a rule of thumb I use is to ask: would it run faster on a faster CPU? If so, it is CPU-bound; otherwise, it is I/O-bound.

For CPU-bound tasks, only one of the three options helps: multiprocessing, which puts multiple CPU cores to work on the task together to speed it up. For I/O-bound tasks, all three options work.

Let's use a small example, crawling web pages and saving them locally (a typical I/O-bound task), to compare these options one by one.

Let’s look at the example first:


In this example, the requests library is used to crawl 25 pages of the Douban Top250 (each page shows 10 movies). The different pages are requested sequentially, and it takes 3.9 seconds in total.

That is already fairly fast, partly because Douban's site is well optimized and partly because my home connection is fast. Next, let's optimize it with each of the three options and compare the results.
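To make comparisons reproducible without network access, here is a minimal sketch of the sequential pattern under stated assumptions: a hypothetical fetch_page function sleeps for a fixed delay in place of a real requests call to Douban, and pages are returned in memory instead of written to disk. The URL pattern and the 0.1-second delay are illustrative assumptions, not the article's code.

```python
import time

PAGE_COUNT = 25          # the article crawls 25 pages
SIMULATED_DELAY = 0.1    # assumption: seconds of "network" latency per page


def fetch_page(page: int) -> str:
    """Hypothetical stand-in for requests.get(url).text: sleeps instead of doing real I/O."""
    url = f"https://movie.douban.com/top250?start={page * 10}"  # illustrative URL only
    time.sleep(SIMULATED_DELAY)  # like blocking I/O, time.sleep releases the GIL
    return f"<html>{url}</html>"


def crawl_sequential() -> list[str]:
    # One request after another: total time is roughly PAGE_COUNT * SIMULATED_DELAY.
    return [fetch_page(page) for page in range(PAGE_COUNT)]


if __name__ == "__main__":
    start = time.perf_counter()
    pages = crawl_sequential()
    print(f"{len(pages)} pages in {time.perf_counter() - start:.2f}s")
```

With these assumed values the sequential crawl takes about 2.5 seconds, because every simulated wait happens in turn.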

Multiprocess version

A Python program normally runs in a single process, which wastes capacity on a multi-core server or computer, so running multiple processes can speed it up.

Note: Code unchanged from the previous example is omitted; only the changed part is shown here.

This uses a process pool without specifying the number of processes, so it starts one process per CPU core: 10 on my MacBook. Here is how long it takes:

In theory, with 10 processes working at once, multiprocessing could be up to 10 times faster. In practice that is not achievable here: the 25 tasks do not divide evenly among 10 processes, and each request is too fast for the multi-process approach to show its full benefit. It takes 1 second, roughly a 4x speedup.

The multi-process approach has no obvious drawback: as long as the machine is powerful enough, it gets faster.
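A minimal sketch of the process-pool pattern, assuming a hypothetical fetch_page that sleeps instead of making a real HTTP request. As in the article, Pool() is created without a processes argument, so it starts os.cpu_count() workers. The worker function sits at module level and the entry point is guarded with if __name__ == "__main__" because, with the spawn start method (the default on macOS and Windows), each worker re-imports the main module.

```python
import os
import time
from multiprocessing import Pool

SIMULATED_DELAY = 0.1  # assumption: stands in for one HTTP request


def fetch_page(page: int) -> str:
    """Hypothetical fetch; must be defined at module level so workers can unpickle it."""
    time.sleep(SIMULATED_DELAY)
    return f"page {page} fetched by pid {os.getpid()}"


def crawl_with_processes(page_count: int = 25) -> list[str]:
    # No processes argument: Pool starts os.cpu_count() worker processes.
    with Pool() as pool:
        # map splits the page numbers across the workers and returns results in input order.
        return pool.map(fetch_page, range(page_count))


if __name__ == "__main__":
    start = time.perf_counter()
    results = crawl_with_processes()
    print(f"{len(results)} pages in {time.perf_counter() - start:.2f}s")
```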

Multi-threaded version

The CPython interpreter's internals are not thread-safe, so CPython uses a Global Interpreter Lock (GIL): a thread must hold the GIL to execute Python bytecode and access Python objects. As a result, only one thread executes Python code at any moment, which protects the interpreter's own state (such as reference counts) from race conditions; it does not protect your application's data. The GIL has many well-known problems, but it also has advantages, such as simplifying memory management. These are not the focus of this article, so I won't expand on them.

This raises a question: if only one thread is running at any moment, how can multiple threads improve throughput?

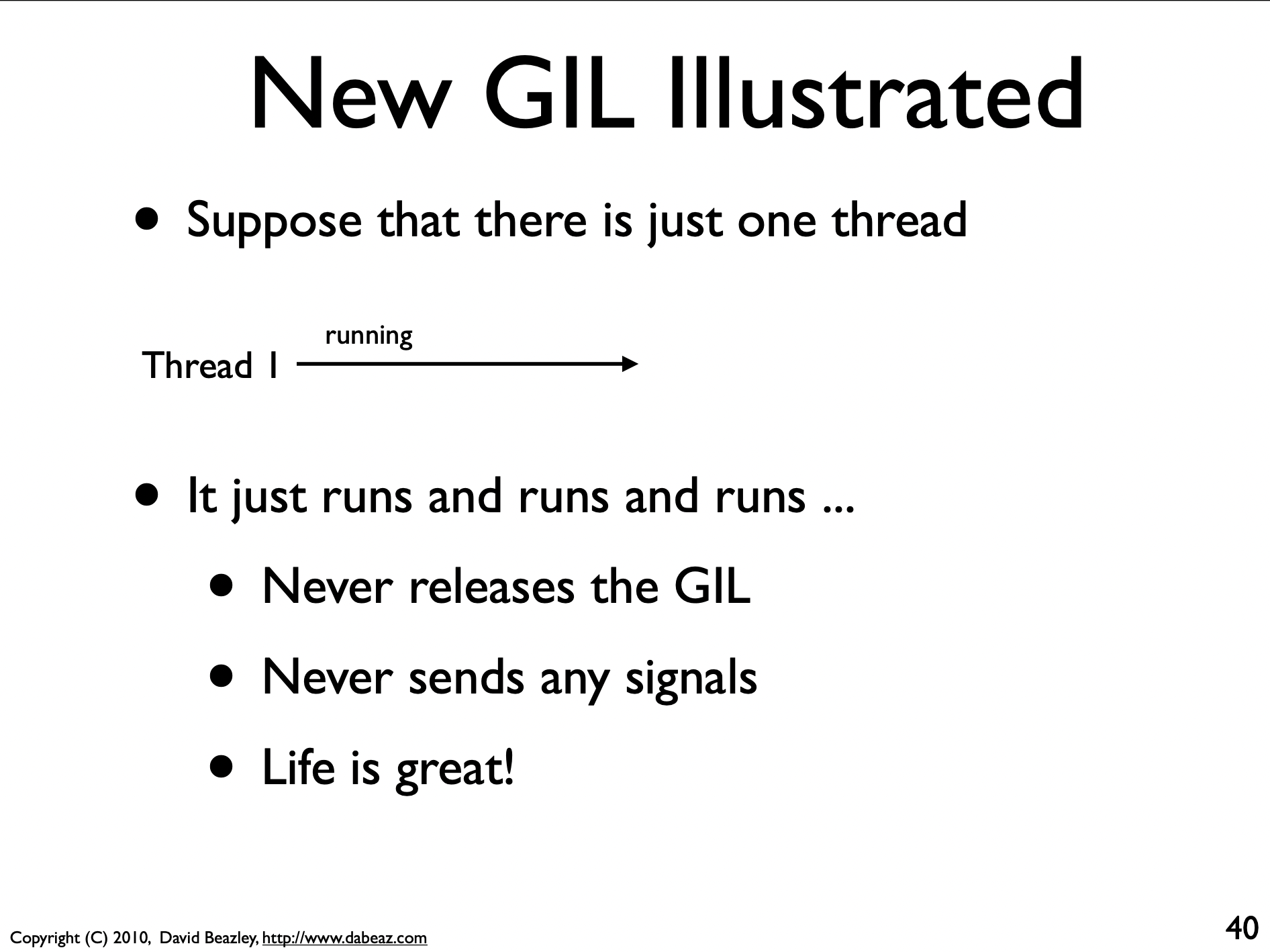

Understanding the Python GIL (extended reading link 1) explains this well. Note that it covers the new GIL introduced in Python 3.2, not the old Python 2 GIL; many descriptions online are outdated. The article at extended reading link 2 can also help you understand the differences. I'll use a few slides from the deck to illustrate.

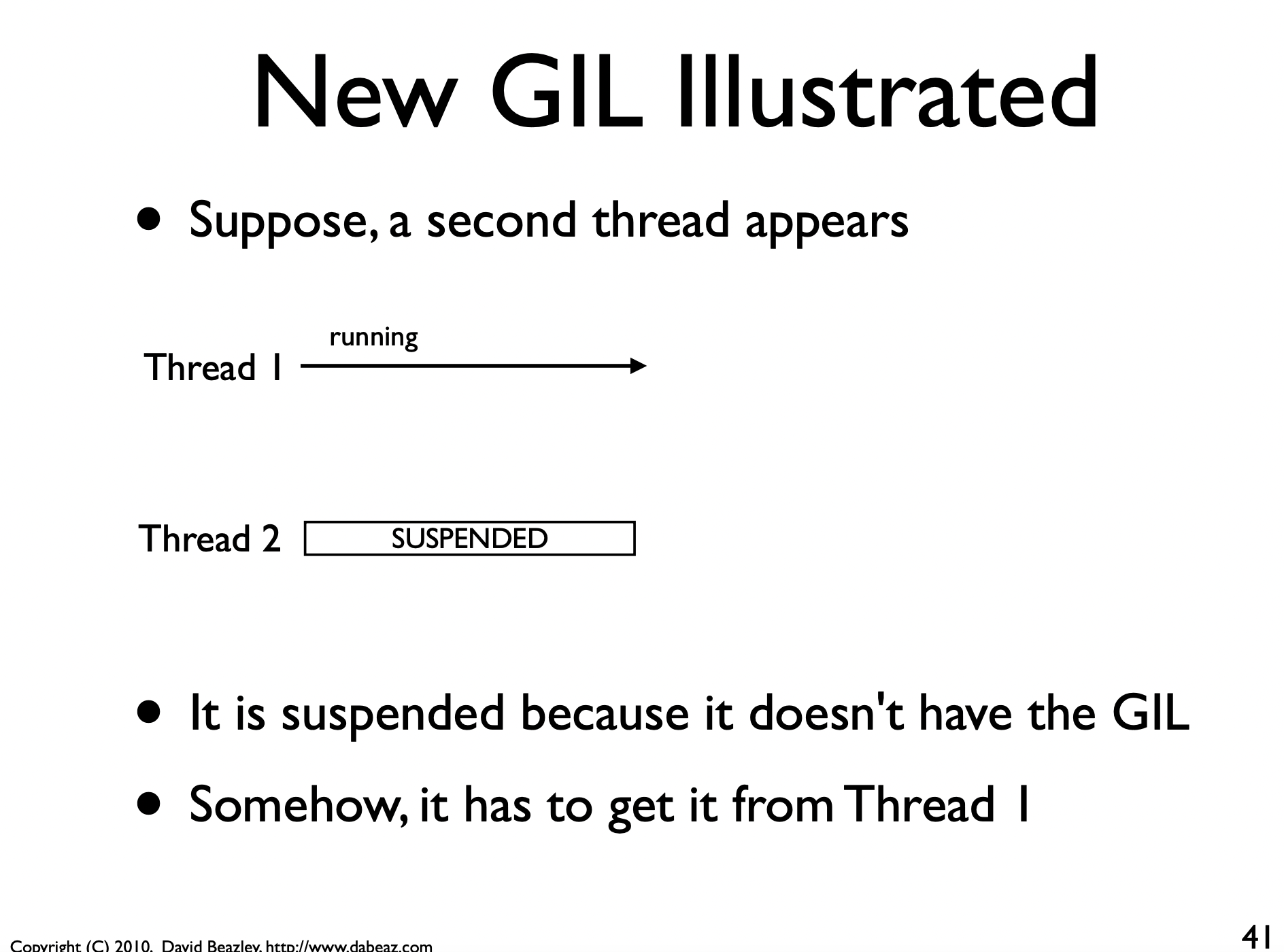

In the diagram above, there is at first only one thread, so it never needs to release or acquire the GIL. Then a second thread is created, and thread 2 is initially suspended because it does not hold the GIL.

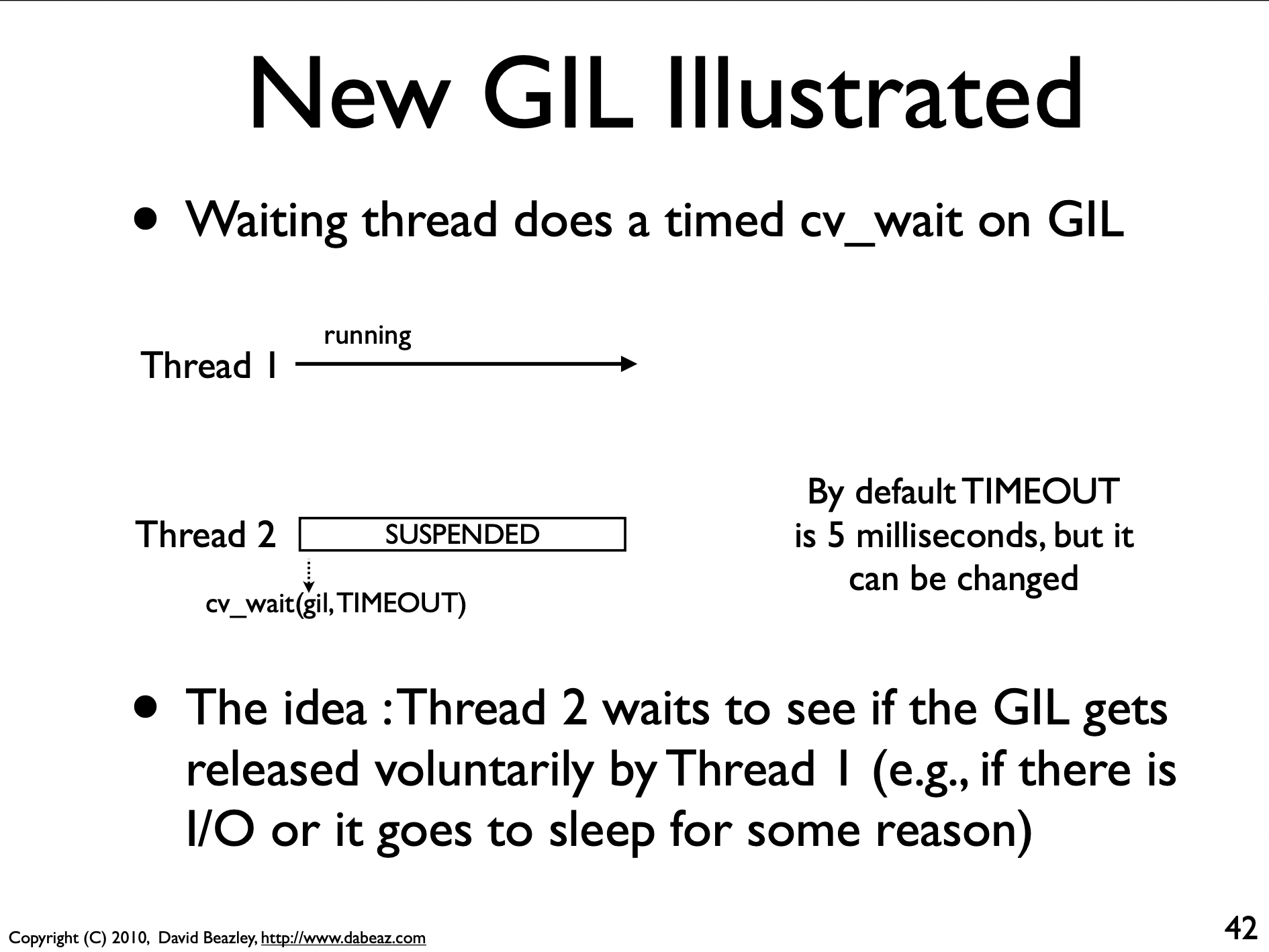

While thread 2 waits (cv_wait), thread 1 gives up the GIL either voluntarily, when it blocks on I/O, or when thread 2's wait times out, which forces a release. (A thread cannot hold the GIL indefinitely: even if it never blocks on I/O, it must give up execution after the switch interval, 5 milliseconds by default, which can be changed with sys.setswitchinterval. Make sure you know what you are doing before changing it.)

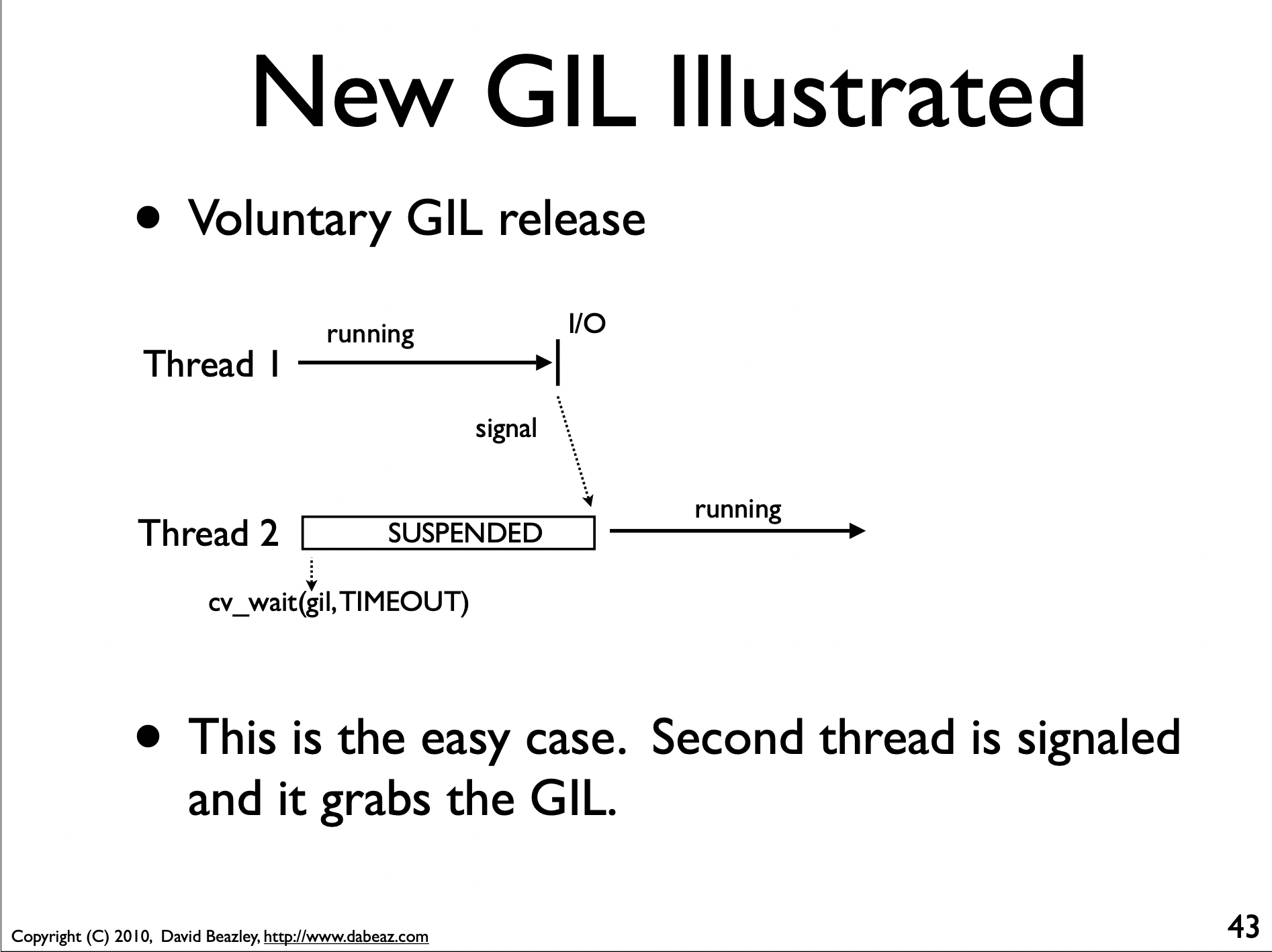

This slide shows the ordinary case (no forced release on timeout): while thread 2 is in cv_wait, thread 1 hits blocking I/O, so it releases the GIL, signals thread 2, and suspends. Thread 2 acquires the GIL and runs, and later releases it back to thread 1 in the same way.

This deck is well known in the industry and worth reading. It also covers how timeouts are handled, but that is a bit far from this article's topic, so I won't go into it; read it if you're interested. By the way, when I first read the deck, the timeout struck me as alarming: a 5 ms interval means up to 200 forced switches per second, which seemed wasteful, so you can experiment with adjusting it in your code.
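The switch interval can be inspected and changed through the sys module. A minimal sketch; the 0.001-second value is an arbitrary example, and the original value is restored afterwards because the setting applies to the whole interpreter.

```python
import sys

default = sys.getswitchinterval()
print(default)  # 0.005 on a standard CPython build: 5 ms

try:
    # Ask running threads to hand over the GIL more often (every 1 ms).
    sys.setswitchinterval(0.001)
    print(sys.getswitchinterval())
finally:
    # The setting is interpreter-wide, so put the original value back.
    sys.setswitchinterval(default)
```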

From the above, we can see that under the GIL, threads take turns executing, and a thread stuck on a blocking task does not hold everyone else up: a thread that blocks voluntarily gives up the GIL and suspends itself, giving other threads a chance to run. This improves overall throughput. The ideal multi-threaded scenario is that at every moment some thread is doing useful work, rather than threads that are ready to run sitting idle while others are blocked.

Let’s look at the multi-threaded scenario:

Two points are worth noting:

- I used a pool in both the multi-process and the multi-threaded examples. This is a good habit: too many threads bring extra overhead (creation, destruction, scheduling) and degrade the computer's overall performance. A thread pool keeps a set of threads waiting for a supervisor to assign tasks that can run concurrently. On the one hand, this avoids the cost of creating and destroying threads for each task; on the other, it avoids the over-scheduling caused by a ballooning thread count, keeping the cores fully used. In addition, the process pool and thread pool implementations in the standard library need very little extra code and have a very similar structure, which makes them particularly suitable for side-by-side examples. If processes is not specified, it again defaults to the number of CPU cores, 10 here. More threads are not always better, though: with more threads, work that could otherwise proceed is repeatedly forced to release the GIL, and the resulting extra context switches become a burden.

- In this example, the number of threads is 5, a value chosen partly from experience and partly by trial and error. This exposes a weakness of multi-threaded programming: without care it is easily poorly tuned, because there is no known optimal value, and the GIL's scheduling of threads is a black box that developers cannot control directly. This is unfriendly even to fairly experienced Python developers.

Let’s see how long it takes.

As you can see, the multi-threaded version is more than twice as fast as the original, but somewhat slower than the multi-process version (and I think the gap is much larger in real-world code). In the multi-process version, each CPU core works independently. The multi-threaded version, by contrast, cannot use as many threads without losing efficiency, and because of the GIL, threads are forced to release it even when they don't need to, so the constant switching drags performance down.
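A minimal sketch of the thread-pool pattern, assuming a hypothetical fetch_page that sleeps instead of making a real HTTP request. It uses multiprocessing.pool.ThreadPool, the thread-based counterpart of the process Pool, which is one way to keep the code structure nearly identical to the multi-process version; processes=5 mirrors the article's thread count.

```python
import threading
import time
from multiprocessing.pool import ThreadPool

SIMULATED_DELAY = 0.1  # assumption: one simulated HTTP request


def fetch_page(page: int) -> str:
    # time.sleep releases the GIL, just as a thread blocked on a socket would,
    # so the other threads in the pool can run while this one waits.
    time.sleep(SIMULATED_DELAY)
    return f"page {page} fetched by {threading.current_thread().name}"


def crawl_with_threads(page_count: int = 25, workers: int = 5) -> list[str]:
    # processes=5 here means 5 threads, not 5 processes.
    with ThreadPool(processes=workers) as pool:
        return pool.map(fetch_page, range(page_count))


if __name__ == "__main__":
    start = time.perf_counter()
    results = crawl_with_threads()
    print(f"{len(results)} pages in {time.perf_counter() - start:.2f}s")
```

With 25 simulated requests of 0.1 s each, the waits overlap across the 5 threads, so the total lands well under the 2.5 s a sequential loop would need.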

concurrent.futures version

While we're at it, let's look at concurrent.futures. It is not a fundamentally new approach; it follows a design long used in other languages (such as Java) for controlling how threads are started, run, and shut down. I think of it as an abstraction over process pools and thread pools, so developers don't need to deal with the details of the threading and multiprocessing modules. It's not hard to understand if you break it down:

Take ThreadPoolExecutor: ThreadPoolExecutor = Thread + Pool + Executor. The Executor decouples task submission from task execution: it handles both provisioning threads (how and when) and executing the tasks.
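A small sketch of what "decoupling submission from execution" looks like in practice: submit returns a concurrent.futures.Future immediately, and results are collected later with as_completed, in the order the tasks finish rather than the order they were submitted. The slow_square function and its delays are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor, as_completed


def slow_square(x: int) -> int:
    # Hypothetical task: larger inputs sleep for less time, so they tend to finish first.
    time.sleep(0.05 * (4 - x))
    return x * x


with ThreadPoolExecutor(max_workers=4) as executor:
    # Submission: each call returns a Future right away; the pool decides when it runs.
    futures = {executor.submit(slow_square, x): x for x in range(4)}
    finished_order = []
    for future in as_completed(futures):
        # Execution results arrive as tasks complete; result() returns the value.
        finished_order.append((futures[future], future.result()))

print(finished_order)
```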

If you want to know more about it, I recommend reading the comments in the header of the source code file, which explains the data flow in great detail and is more in-depth and accurate than any technical article.

Here I'll just demonstrate how to use ThreadPoolExecutor:

Look familiar? The interface is very similar to the process and thread pools used above. Note, however, that if max_workers is not specified, it defaults to the number of CPUs plus 4, capped at 32. As with plain multithreading, max_workers needs tuning (for comparison, I use the same value as in the multi-threaded example).
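A minimal sketch of the ThreadPoolExecutor pattern, assuming a hypothetical fetch_page that sleeps in place of a real HTTP request. max_workers=5 matches the thread count used earlier; leaving it out would give roughly min(32, os.cpu_count() + 4) workers on recent Python versions.

```python
import time
from concurrent.futures import ThreadPoolExecutor

SIMULATED_DELAY = 0.1  # assumption: one simulated HTTP request


def fetch_page(page: int) -> str:
    time.sleep(SIMULATED_DELAY)  # stands in for blocking network I/O
    return f"page {page}"


def crawl_with_executor(page_count: int = 25, workers: int = 5) -> list[str]:
    # Leaving the with block calls executor.shutdown(wait=True).
    with ThreadPoolExecutor(max_workers=workers) as executor:
        # executor.map returns an iterator yielding results in input order.
        return list(executor.map(fetch_page, range(page_count)))


if __name__ == "__main__":
    start = time.perf_counter()
    pages = crawl_with_executor()
    print(f"{len(pages)} pages in {time.perf_counter() - start:.2f}s")
```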

Although concurrent.futures is now the more mainstream choice, in my experience it is slightly less efficient than using process or thread pools directly. Its high level of abstraction makes things more complicated internally, for example through its use of queues (the queue module) and semaphores, which limits the performance gain. So my advice is that Python beginners can use it, but advanced developers should control their own concurrency implementation.

asyncio version

The multi-threaded solutions above require the developer to find a good number of threads (or processes) through experience or experimentation. That value varies greatly from scenario to scenario, which is unfriendly to beginners and makes it easy to end up using multithreading, but using it wrongly or poorly.

Python later introduced a new concurrency model, asyncio, and this section explains why it is a better choice. Let's start with a slide from Understanding the Python GIL.

Recall the point made there: with only a single thread, the GIL never has to be handed over, and that one thread can keep running. This is asyncio's starting point: because it runs in a single process and a single thread, it is in principle unaffected by contention for the GIL. With an event-driven mechanism, a single thread can be exploited much more fully, especially since the await keyword lets developers decide where switching happens, instead of leaving scheduling to the GIL as in multithreading.

Now imagine the best case, where every await is a point of potential I/O blocking. During execution, whenever I/O would block, the event loop switches to another coroutine that can continue, so the thread is always working instead of blocking: 100% utilization, so to speak. A multi-threaded solution can never achieve this.

With this in mind, let's step back and work through it again, starting with the basic concepts.

Coroutine

A coroutine function is a special function defined by adding the async keyword in front of def; calling it returns a coroutine object. Coroutines are built on the same machinery as generators: a coroutine object can receive values sent from outside (via its send method). Its most important feature, though, is that it can save its context (state) when needed, suspend itself, and hand control back to its caller; because the context is saved at the point of suspension, it can resume from there later.

Note that calling a coroutine function does not execute its body immediately:
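A minimal sketch of this behavior; the greet function and the calls list are illustrative, and the list simply records when the body actually runs.

```python
import asyncio
import inspect

calls: list[str] = []  # records every time the coroutine body actually runs


async def greet(name: str) -> str:
    calls.append(name)
    return f"hello, {name}"


coro = greet("world")             # only creates a coroutine object
print(inspect.iscoroutine(coro))  # True
print(calls)                      # []: the body has not run yet
print(asyncio.run(coro))          # hello, world: the event loop drives it to completion
print(calls)                      # ['world']
```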

Asynchronous and Concurrent

"Asynchronous", "non-blocking", and "concurrent" are easily confused. In the context of asyncio, my understanding is:

- Coroutines execute asynchronously: in asyncio, a coroutine can [pause] itself while waiting for a result, so that other coroutines can run in the meantime.

- Asynchronous code does not wait for blocking operations to complete before letting other code run, so it does not [block] other code; that makes it [non-blocking] code.

- When a program written with asynchronous code runs, all of its tasks appear to execute and complete at the same time (because it switches between them while they wait), so it looks [concurrent].
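A minimal sketch of this apparent concurrency: three coroutines that each wait (asyncio.sleep stands in for I/O) finish in roughly the time of the longest wait, because the event loop switches to another coroutine whenever one pauses. The names and delays are arbitrary.

```python
import asyncio
import time


async def wait_and_return(name: str, delay: float) -> str:
    # await asyncio.sleep pauses this coroutine and lets the event loop run others.
    await asyncio.sleep(delay)
    return name


async def main() -> tuple[list[str], float]:
    start = time.perf_counter()
    # The three waits overlap: total is about the longest delay (0.3 s), not the sum (0.6 s).
    results = await asyncio.gather(
        wait_and_return("a", 0.3),
        wait_and_return("b", 0.2),
        wait_and_return("c", 0.1),
    )
    return results, time.perf_counter() - start


results, elapsed = asyncio.run(main())
print(results)  # ['a', 'b', 'c']: gather keeps argument order, not completion order
print(f"{elapsed:.2f}s")
```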

EventLoop

The event loop is a concept I have known about for many years, since the Twisted days. I always thought it was mysterious and complicated, but in hindsight I was overthinking it. For beginners, it helps to take the name literally, Event + Loop: the loop is a ring, each task sits on the ring as an event, and the loop keeps cycling, triggering an event's execution once its conditions are met. It has the following features:

- An event loop runs in a single thread.

- Awaitables (coroutines, Tasks, and Futures, covered below) can be registered with the event loop.

- If a coroutine calls another coroutine via await, the caller pauses and the awaited coroutine runs until it finishes (or itself suspends), and so on down the chain.

- If a coroutine hits blocking I/O while executing, it suspends with its context saved and hands control back to the event loop.

- Because it is a loop, it keeps cycling: after running the callbacks that are ready, it waits for I/O or timers to produce more work and then starts the next pass.
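A small sketch of the loop handing control back and forth: each worker records a step and then awaits asyncio.sleep(0), which does no real waiting but yields control to the event loop so the other task can run. The worker names and step counts are arbitrary.

```python
import asyncio


async def worker(name: str, steps: int, log: list[str]) -> None:
    for i in range(steps):
        log.append(f"{name}{i}")
        # Yield control to the event loop; it resumes this task on a later pass.
        await asyncio.sleep(0)


async def main() -> list[str]:
    log: list[str] = []
    # gather wraps both coroutines in Tasks registered with the same loop.
    await asyncio.gather(worker("A", 3, log), worker("B", 3, log))
    return log


print(asyncio.run(main()))  # ['A0', 'B0', 'A1', 'B1', 'A2', 'B2']
```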

Future/Task

I think of asyncio.Future as similar to a JavaScript Promise: a placeholder object representing something that is not done yet and will be completed in the future (or may fail with an exception). It is very similar to concurrent.futures.Future from the concurrent.futures module mentioned above, but heavily adapted to asyncio's event loop. An asyncio.Future is just a container for a result.

asyncio.Task is a subclass of asyncio.Future, which is used to run coroutines in event loops.

The official documentation has a very intuitive example, which I've adapted here to run and explain in IPython.


This shows that:

- A Future object is not a task but a container for state.

- create_task makes the event loop schedule the execution of the coroutine.

- You can create tasks with either ensure_future or create_task. ensure_future is a more general wrapper, but on Python 3.7 and above you should use create_task.
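A minimal sketch in the spirit of that example (not the documentation's exact code): a bare Future created with loop.create_future() stays empty until something calls set_result on it, while create_task wraps a coroutine in a Task, itself a Future subclass, and schedules it. The compute function and the 0.01-second delays are arbitrary.

```python
import asyncio


async def compute(x: int) -> int:
    await asyncio.sleep(0.01)  # pretend to do some I/O
    return x * 2


async def main() -> dict[str, object]:
    loop = asyncio.get_running_loop()
    seen: dict[str, object] = {}

    # A Future is only a container: nothing runs it, something else must fill it.
    fut = loop.create_future()
    seen["done_before"] = fut.done()
    loop.call_later(0.01, fut.set_result, "filled")
    seen["awaited"] = await fut  # suspends until set_result runs
    seen["done_after"] = fut.done()

    # create_task wraps the coroutine in a Task and schedules it on the loop.
    task = asyncio.create_task(compute(21))
    seen["task_is_future"] = isinstance(task, asyncio.Future)
    seen["task_result"] = await task
    return seen


print(asyncio.run(main()))
# {'done_before': False, 'awaited': 'filled', 'done_after': True, 'task_is_future': True, 'task_result': 42}
```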

The next step is to understand what await does. If a coroutine awaits a Future, the Task wrapping that coroutine pauses it until the Future completes; once the Future is done, the wrapped coroutine resumes.


In this example, the entry function a calls b and then c, and b in turn calls d. Awaiting a coroutine directly hands it execution, and the caller does not continue until the awaited coroutine has finished. The order is therefore depth-first, like DFS (depth-first search): a -> b -> d -> c.
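A minimal reconstruction of that call structure, assuming each coroutine simply records its name in a shared list when it starts running:

```python
import asyncio

order: list[str] = []  # records the moment each coroutine starts running


async def d() -> None:
    order.append("d")


async def b() -> None:
    order.append("b")
    await d()  # d runs to completion before b continues


async def c() -> None:
    order.append("c")


async def a() -> None:
    order.append("a")
    await b()  # b (and therefore d) finishes first...
    await c()  # ...only then does a move on to c


asyncio.run(a())
print(order)  # ['a', 'b', 'd', 'c']
```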

So here's the conclusion:

The event loop is responsible for scheduling coroutines concurrently: it runs one task at a time, and while a task waits for an awaitable to complete, the loop runs other tasks and callbacks or performs I/O operations.

asyncio solution

With asyncio, every operation that may block on I/O must use a library from the asyncio ecosystem, so you can no longer use requests; you need aiohttp instead, and aiofiles for file operations.

The final code is as follows (both packages must be installed first).


Here is how fast it runs:

So the advantages of asyncio are as follows:

- asyncio, when used well, is the fastest of these concurrency solutions.

- It can handle thousands of active connections and performs well in scenarios such as WebSockets and MQTT, whereas multi-threaded solutions can run into serious performance problems at that number of threads.

- In the multi-threaded solution, thread switching is implicit: we cannot know when execution will switch to another thread, so race conditions are very easy to introduce. In contrast, coroutine switching in asyncio is explicit, happening only at await points, so the developer can know or specify the order of execution.
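A minimal sketch of the same crawl pattern with asyncio, under stated assumptions: it does not use aiohttp or aiofiles; a hypothetical fetch_page coroutine awaits asyncio.sleep where real code would await an aiohttp request. Note that there is no pool size to tune: each page gets its own coroutine in a single thread.

```python
import asyncio
import time

SIMULATED_DELAY = 0.1  # assumption: one simulated HTTP request


async def fetch_page(page: int) -> str:
    # In real code this would await an aiohttp request; awaiting hands control
    # back to the event loop so the other requests can make progress.
    await asyncio.sleep(SIMULATED_DELAY)
    return f"page {page}"


async def crawl_with_asyncio(page_count: int = 25) -> list[str]:
    # One coroutine per page, all scheduled concurrently on one event loop.
    return await asyncio.gather(*(fetch_page(p) for p in range(page_count)))


if __name__ == "__main__":
    start = time.perf_counter()
    pages = asyncio.run(crawl_with_asyncio())
    print(f"{len(pages)} pages in {time.perf_counter() - start:.2f}s")
```

Because a waiting coroutine is a small object rather than an OS thread, asyncio.run(crawl_with_asyncio(1000)) should still finish in roughly one simulated delay.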

Concurrency and parallelism

I found a description comparing these solutions (extended reading link 4) that illustrates concurrency and parallelism particularly vividly, so I'll use it to clarify the distinction:

- Multiprocessing: 10 kitchens, 10 cooks, 10 dishes. Each kitchen has one cook making one dish.

- Multithreading: 1 kitchen, 10 cooks, 10 dishes. The kitchen is small, so the cooks have to squeeze in together and in fact take turns: while one cook is working, the others wait for their turn.

- asyncio: 1 kitchen, 1 cook, 10 dishes. It sounds like sequential execution, but while one dish is braising or going through some other slow cooking step, the cook can make or prepare other dishes. In the best case, the cook is always busy.

For more on concurrency versus parallelism, I recommend the article at extended reading link 3. Concurrency means multiple tasks make progress during the same period and may access the same shared resources, such as the hard disk, the network, or a single CPU core. On a single core, only one task is actually executing at any given moment, and because tasks share resources, race conditions are possible. In essence, the goal is to keep tasks from blocking each other by switching between them whenever one is forced to wait for an external resource. Parallelism means multiple tasks run simultaneously on independent resources (for example, multiple CPU cores), which makes the most of the hardware.

Extended Reading

1. https://speakerdeck.com/dabeaz/understanding-the-python-gil

2. https://www.datacamp.com/tutorial/python-global-interpreter-lock

3. https://www.infoworld.com/article/3632284/python-concurrency-and-parallelism-explained.html

4. https://leimao.github.io/blog/Python-Concurrency-High-Level/

The code for this article can be found in the mp project.