When you develop on and operate Kubernetes, the concepts you run into most often are the networking concepts of Docker and Kubernetes. Kubernetes in particular involves many kinds of IPs and ports, which can be confusing. It is therefore worth learning how Docker and Kubernetes implement networking underneath. In this article, we start by analyzing the network implementation of Docker.

Docker Networking Basics

Docker’s networking implementation mainly relies on Linux networking technologies such as Network Namespaces, Veth device pairs, bridges, iptables, and routing.

Network Namespace Fundamentals

The role of a Network Namespace can be summarized in one sentence: it implements Linux network virtualization, i.e., isolation between containers at the network stack level.

Network Namespace technology gives each Docker container its own completely isolated network environment, as if each container had its own separate network card. By default, different Network Namespaces cannot communicate with each other directly.

A Network Namespace in Linux has its own routing table and its own iptables settings, which provide packet forwarding, NAT, and IP packet filtering. To isolate a separate protocol stack, the namespace must contain elements such as processes, sockets, and network devices. A socket created by a process must belong to a namespace, and socket operations must be performed in that namespace. Similarly, every network device must belong to a namespace. Because network devices are public resources, they can be moved between namespaces by modifying their properties.

The Linux network stack is very complex. Since we are not doing low-level system development here, we only try to understand the network isolation mechanism of Linux Network Namespaces at a conceptual level.

From books on the subject, we know that the Linux network stack supports Namespace isolation by turning the global variables related to the network stack into members of a Network Namespace variable. This is the core principle behind the Linux implementation of Network Namespaces: the global state of the protocol stack becomes private to each namespace, which ensures effective isolation.

A newly created Network Namespace contains only a loopback device and no other network devices; the others are created and configured when the Docker container starts. Physical devices normally stay in the root Network Namespace, while virtual network devices can be associated with a custom Network Namespace and moved between Namespaces.

A Network Namespace represents a completely independent network stack that is isolated from the outside world. It can be thought of as a “host” that is not even connected to a network cable. How to communicate with the outside world from inside a Network Namespace is therefore a problem, and the most basic solution is the Veth device pair.

Note: Network Namespace operations can be performed with the Linux iproute2 tools, which require root privileges.
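As a rough illustration of the iproute2 workflow mentioned in the note, the sketch below only builds the command lines for creating, inspecting, and deleting a namespace; it does not execute them. The helper name `netns_commands` and the namespace name `demo-ns` are made up for this example.

```python
def netns_commands(name):
    """Build (but do not run) iproute2 commands for one namespace lifecycle."""
    in_ns = ["ip", "netns", "exec", name]
    return [
        ["ip", "netns", "add", name],             # create the namespace
        in_ns + ["ip", "link", "show"],           # a new namespace lists only "lo"
        in_ns + ["ip", "link", "set", "lo", "up"],  # loopback starts in the DOWN state
        ["ip", "netns", "delete", name],          # clean up
    ]


if __name__ == "__main__":
    # "demo-ns" is a hypothetical namespace name used for illustration.
    for cmd in netns_commands("demo-ns"):
        print(" ".join(cmd))
```

To actually apply the commands, each list could be passed to `subprocess.run(cmd, check=True)` as root on a Linux host.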

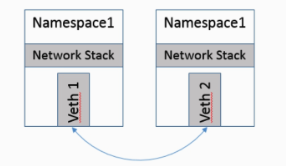

Veth device pair

A Veth is a virtual Ethernet card. When configuring Ethernet cards under Linux we usually see names like ethxxx; the added V simply means that the card is virtual. The core purpose of the Veth device pair is to enable communication between two completely isolated Network Namespaces. Put plainly, it connects two Network Namespaces directly, so Veth devices always appear in pairs and are called a Veth device pair. A Veth device pair is best described as two Ethernet cards joined by a directly connected network cable. When one end of the pair sends data, the data goes straight to the other end and triggers the receive operation there.

It is worth mentioning that in the Docker implementation, once one end of the Veth pair is placed in the container’s Network Namespace, its name is changed to eth…, so anyone unaware of this would take it for a local NIC!
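The wiring described above can be sketched with iproute2 commands. The Python helper below only assembles the command lines; it is not Docker’s actual code path. The interface names `veth-host` and `veth-ctr`, the namespace `demo-ns`, and the 10.0.0.0/24 addresses are all assumptions chosen for illustration.

```python
def veth_commands(ns="demo-ns", host_if="veth-host", ctr_if="veth-ctr",
                  host_ip="10.0.0.1/24", ctr_ip="10.0.0.2/24"):
    """Build (but do not run) commands that link the root namespace to `ns`."""
    in_ns = ["ip", "netns", "exec", ns]
    return [
        ["ip", "netns", "add", ns],
        # Create both ends of the pair at once in the root namespace.
        ["ip", "link", "add", host_if, "type", "veth", "peer", "name", ctr_if],
        # Move one end into the target namespace.
        ["ip", "link", "set", ctr_if, "netns", ns],
        # Rename it inside the namespace so it looks like an ordinary NIC.
        in_ns + ["ip", "link", "set", ctr_if, "name", "eth0"],
        in_ns + ["ip", "addr", "add", ctr_ip, "dev", "eth0"],
        in_ns + ["ip", "link", "set", "eth0", "up"],
        # Configure the end that stayed in the root namespace.
        ["ip", "addr", "add", host_ip, "dev", host_if],
        ["ip", "link", "set", host_if, "up"],
    ]


if __name__ == "__main__":
    for cmd in veth_commands():
        print(" ".join(cmd))
```

The rename happens while the interface is still down, right after the move, which is why it comes before the `up` command.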

Linux Bridge

As the name implies, a bridge plays a bridging role. The objects it bridges are network devices, and its purpose is to let hosts on the connected networks communicate with each other.

A bridge is a Layer 2 virtual network device that “connects” several network interfaces so that messages can be forwarded between them based on MAC addresses. The bridge parses the messages passing through it, reads the destination MAC address, and consults its own MAC table to decide which network interface to forward to. To build that table the bridge is self-learning: it records the source MAC address of each message together with the interface it arrived on. When forwarding, the bridge then only needs to send the message to one specific interface, which avoids unnecessary network traffic. If it encounters a destination address it has never learned, it cannot know which interface to use, so it broadcasts the message to all network interfaces except the one the message arrived on. To accommodate changes in the network topology, entries in the learned MAC table have an expiration time. If the bridge receives no packet from a given MAC for a long time, it assumes the device is no longer reachable on that port, and the next packet to this MAC is broadcast again.

The Linux kernel implements bridging through a virtual bridge device (a net device). This virtual device can bind several Ethernet interface devices and thus bridge them together. Its most obvious difference from an ordinary device is that it can also have an IP address. A Linux bridge cannot be equated with the traditional switch concept: a switch is a simple Layer 2 device that either forwards or discards messages, whereas a Linux bridge may also pass messages up the protocol stack to the network layer. A Linux bridge can therefore be considered both a Layer 2 and a Layer 3 device.
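The learning, flooding, and expiry behavior described above can be modeled in a few lines of Python. This is a toy simulation, not the kernel’s implementation: the class name `LearningBridge`, the port labels, and the explicit `now` timestamps are assumptions made for the example, and the 300-second default simply mirrors the usual Linux bridge ageing time.

```python
class LearningBridge:
    """Toy model of a MAC-learning bridge with entry expiry."""

    def __init__(self, ports, max_age=300.0):
        self.ports = set(ports)
        self.max_age = max_age
        self.table = {}  # MAC address -> (port, time last seen)

    def receive(self, in_port, src_mac, dst_mac, now):
        """Process one frame and return the list of ports it is sent out on."""
        # Learn (or refresh) where the sender lives.
        self.table[src_mac] = (in_port, now)

        # Look up the destination, discarding the entry if it has expired.
        entry = self.table.get(dst_mac)
        if entry is not None and now - entry[1] > self.max_age:
            del self.table[dst_mac]
            entry = None

        if entry is None:
            # Unknown destination: flood to every port except the ingress port.
            return sorted(self.ports - {in_port})
        out_port = entry[0]
        # Never send a frame back out of the port it arrived on.
        return [] if out_port == in_port else [out_port]


if __name__ == "__main__":
    bridge = LearningBridge(["p1", "p2", "p3"], max_age=10.0)
    print(bridge.receive("p1", "aa", "bb", now=0.0))   # bb unknown: flooded
    print(bridge.receive("p2", "bb", "aa", now=1.0))   # aa was learned on p1
    print(bridge.receive("p1", "aa", "bb", now=20.0))  # bb expired: flooded again
```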

Linux Routing

Linux systems contain a full routing function. When the IP layer processes data to be sent or forwarded, it uses the routing table to determine where to send it. If the host is directly connected to the destination host, for example over a point-to-point link or a shared network, it can send IP messages straight to the destination, which is a relatively simple process. If the host is not directly connected to the destination host, it sends the IP message to the default router, which then determines where to send it next. The routing function is performed by a routing table maintained at the IP layer, and the host consults this table to decide what to do with each data message. When a data message is received from the network, the IP layer first checks whether the destination IP address of the message is one of the host’s own addresses. If it is, the message is passed to the appropriate protocol at the transport layer. If it is not and the host is configured for routing, the message is forwarded; otherwise, the message is discarded.
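The two decisions described above, which route to use when sending and what to do with a received message, can be sketched as follows. The route table contents, the function names, and the returned strings are illustrative assumptions. The lookup uses longest-prefix matching, which is the standard rule for choosing among matching routes.

```python
import ipaddress

# Hypothetical routing table: (destination network, gateway or None, device).
# A gateway of None means the network is directly connected.
ROUTES = [
    ("0.0.0.0/0", "192.168.1.1", "eth0"),   # default route
    ("192.168.1.0/24", None, "eth0"),
    ("172.17.0.0/16", None, "docker0"),
]


def lookup(routes, dst):
    """Return the most specific (network, gateway, device) route for dst."""
    dst_ip = ipaddress.ip_address(dst)
    best = None
    for net_str, gateway, dev in routes:
        net = ipaddress.ip_network(net_str)
        if dst_ip in net and (best is None or net.prefixlen > best[0].prefixlen):
            best = (net, gateway, dev)
    return best


def handle_incoming(routes, local_addrs, dst, forwarding):
    """Decide what the IP layer does with a received message."""
    if dst in local_addrs:
        return "deliver to transport layer"
    if forwarding and lookup(routes, dst) is not None:
        return "forward"
    return "drop"


if __name__ == "__main__":
    print(lookup(ROUTES, "172.17.0.2"))  # directly connected via docker0
    print(lookup(ROUTES, "8.8.8.8"))     # falls through to the default route
    print(handle_incoming(ROUTES, {"192.168.1.10"}, "8.8.8.8", forwarding=False))
```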

Docker bridge network implementation

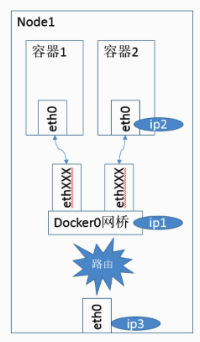

Docker supports the following four network modes: bridge mode (default), none mode, host mode, and container mode. Because our container cloud operations work centers on Kubernetes, and of these four modes only bridge mode is used in Kubernetes, we mainly introduce Docker’s bridge network mode.

Docker defaults to bridge network mode for containers. In bridge mode, the Docker Daemon creates a virtual bridge named docker0 by default when it first starts up, and then assigns the bridge a subnet from private address space (usually with IPs starting with 172). For each container it creates, Docker creates a Veth device pair. One end is attached to the bridge, and the other end is mapped to the eth0 (Veth) device inside the container using Linux’s Network Namespace technology. Docker then assigns the eth0 interface an IP address from the bridge’s address segment, and the corresponding MAC address is generated from this IP address. (Yes, you read that right, IPs can be assigned… so don’t treat it as a switch)

With the bridge in place, containers on the same host can communicate with each other. Containers on different hosts still cannot, and they may even fall in the same network address range, because docker0 on different hosts may use the same address segment.
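To make the address assignment concrete, here is a minimal sketch that hands out container IPs from a bridge subnet and derives a MAC address from each IP. It assumes the commonly seen default subnet 172.17.0.0/16, with the first host address reserved for docker0. The MAC scheme (the prefix 02:42 followed by the four IPv4 bytes) matches what Docker has historically shown on the default bridge, but treat it as an assumption that may not hold for every Docker version. The function names are invented for the example.

```python
import ipaddress


def mac_from_ipv4(ip):
    """Derive a MAC as 02:42 followed by the four bytes of the IPv4 address."""
    octets = ipaddress.IPv4Address(ip).packed
    return ":".join(f"{b:02x}" for b in b"\x02\x42" + octets)


def allocate(subnet, count):
    """Return (bridge_ip, [(container_ip, container_mac), ...])."""
    hosts = iter(ipaddress.ip_network(subnet).hosts())
    bridge_ip = next(hosts)  # first usable address goes to the bridge itself
    containers = [next(hosts) for _ in range(count)]
    return str(bridge_ip), [(str(ip), mac_from_ipv4(str(ip))) for ip in containers]


if __name__ == "__main__":
    bridge_ip, containers = allocate("172.17.0.0/16", 2)
    print("docker0:", bridge_ip)
    for ip, mac in containers:
        print("eth0:", ip, mac)
```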
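The address overlap problem is easy to check mechanically. The sketch below takes a mapping of host names to docker0 subnets and reports which pairs overlap. The host names and the 10.244.1.0/24 subnet are invented for the example.

```python
import ipaddress


def overlapping_hosts(subnets_by_host):
    """Return sorted pairs of hosts whose bridge subnets overlap."""
    names = sorted(subnets_by_host)
    pairs = []
    for i, a in enumerate(names):
        net_a = ipaddress.ip_network(subnets_by_host[a])
        for b in names[i + 1:]:
            if net_a.overlaps(ipaddress.ip_network(subnets_by_host[b])):
                pairs.append((a, b))
    return pairs


if __name__ == "__main__":
    # Two hosts left on the same default subnet, one reconfigured by hand.
    print(overlapping_hosts({
        "node-a": "172.17.0.0/16",
        "node-b": "172.17.0.0/16",
        "node-c": "10.244.1.0/24",
    }))
```

When two hosts overlap, the same container IP can exist on both, so an address alone no longer identifies a container across the cluster.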

Docker networking limitations and a brief discussion

Looking at the network models Docker supports, we can tell that Docker did not consider large-scale container clusters or cross-host container communication from the beginning; container orchestration frameworks such as Kubernetes were released later. The biggest limitation of Docker networking is therefore that it did not initially include a solution for multi-host interconnection.

Docker’s design philosophy has always been “simple is beautiful”. Its biggest contribution is taking containerization, a concept that had been talked about for decades, and bringing it to ordinary users. I think this was a very good step, and it is one reason for Docker’s rapid popularity.

In summary, for large-scale distributed container clusters, graceful cross-host communication between containers has to be solved by other frameworks, such as Kubernetes. In a later article I will describe in detail how Kubernetes solves network communication for its components, including containers.