This article focuses on learning about.

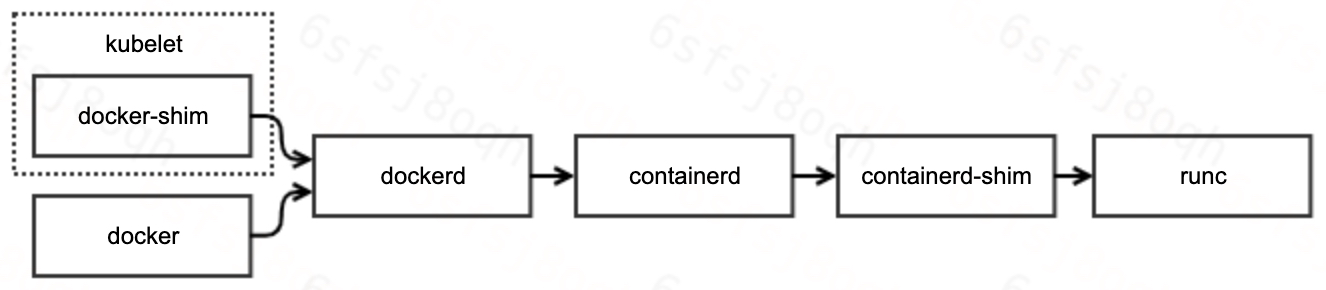

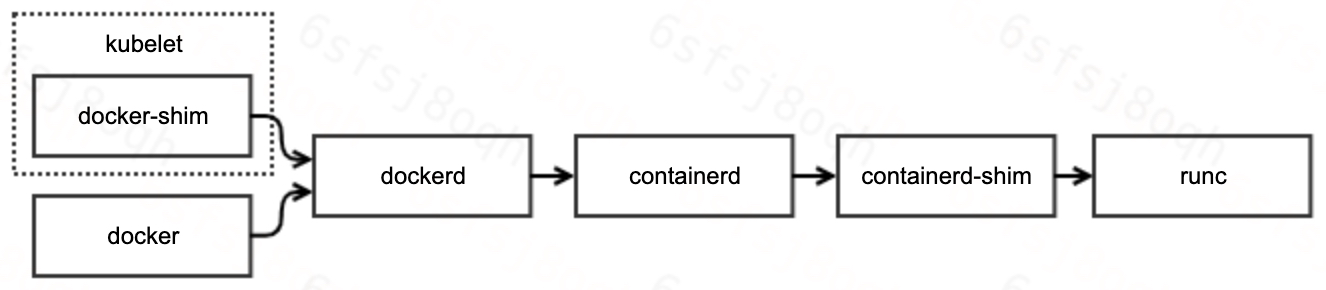

- The direct relationship between kubelet, docker-shim, dockerd, containerd, containerd-shim, and runc

- How to troubleshoot: How to connect containers using docker, containerd-ctr, docker-runc

- runc workflow

Problem Description

Today, in the process of checking the system problem, I found that the system log keeps printing the docker exception log.

1

2

3

|

May 12 09:08:40 HOSTNAME dockerd[4085]: time="2021-05-12T09:08:40.642410594+08:00" level=error msg="stream copy error: reading from a closed fifo"

May 12 09:08:40 HOSTNAME dockerd[4085]: time="2021-05-12T09:08:40.642418571+08:00" level=error msg="stream copy error: reading from a closed fifo"

May 12 09:08:40 HOSTNAME dockerd[4085]: time="2021-05-12T09:08:40.663754355+08:00" level=error msg="Error running exec 110deb1c1b2a2d2671d7368bd02bfc18a968e4712a3c771dedf0b362820e73cb in container: OCI runtime exec failed: exec failed: container_linux.go:348: starting container process caused \"read init-p: connection reset by peer\": unknown"

|

In terms of system riskiness, the reasons for the appearance of abnormal logs need to be ranked clearly and figured out whether they will have an impact on the business.

The following article briefly describes the process of problem identification and the reasons for its generation.

Troubleshooting

The only information we have now is the system logs informing us that dockerd failed to execute the exec.

Before we get into the specifics of the problem, let’s review the working of docker and the invocation chain.

As you can see, the docker call chain is very long and involves more components. Therefore, our troubleshooting path is divided into two main steps as follows.

- Determine the component that caused the failure

- Determine the cause of the component failure

Locating the component

Users familiar with docker will be able to locate the component causing the problem at a glance. But here, we’ll follow the usual troubleshooting process.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

|

// 1. Locate the problem container

# sudo docker ps | grep -v pause | grep -v NAMES | awk '{print $1}' | xargs -ti sudo docker exec {} sleep 1

sudo docker exec aa1e331ec24f sleep 1

OCI runtime exec failed: exec failed: container_linux.go:348: starting container process caused "read init-p: connection reset by peer": unknown

// 2. Exclude docker from suspicion

# docker-containerd-ctr -a /var/run/docker/containerd/docker-containerd.sock -n moby t exec --exec-id stupig1 aa1e331ec24f621ab3152ebe94f1e533734164af86c9df0f551eab2b1967ec4e sleep 1

ctr: OCI runtime exec failed: exec failed: container_linux.go:348: starting container process caused "read init-p: connection reset by peer": unknown

// 3. exclude containererd and containererd-shim suspect

# docker-runc --root /var/run/docker/runtime-runc/moby/ exec aa1e331ec24f621ab3152ebe94f1e533734164af86c9df0f551eab2b1967ec4e sleep

runtime/cgo: pthread_create failed: Resource temporarily unavailable

SIGABRT: abort

PC=0x6b657e m=0 sigcode=18446744073709551610

goroutine 0 [idle]:

runtime: unknown pc 0x6b657e

stack: frame={sp:0x7ffd30f0d218, fp:0x0} stack=[0x7ffd2ab0e738,0x7ffd30f0d760)

00007ffd30f0d118: 0000000000000002 00007ffd30f7f184

00007ffd30f0d128: 000000000069c31c 00007ffd30f0d1a8

00007ffd30f0d138: 000000000045814e <runtime.callCgoMmap+62> 00007ffd30f0d140

00007ffd30f0d148: 00007ffd30f0d190 0000000000411a88 <runtime.persistentalloc1+456>

00007ffd30f0d158: 0000000000bf6dd0 0000000000000000

00007ffd30f0d168: 0000000000010000 0000000000000008

00007ffd30f0d178: 0000000000bf6dd8 0000000000bf7ca0

00007ffd30f0d188: 00007fdcbb4b7000 00007ffd30f0d1c8

00007ffd30f0d198: 0000000000451205 <runtime.persistentalloc.func1+69> 0000000000000000

00007ffd30f0d1a8: 0000000000000000 0000000000c1c080

00007ffd30f0d1b8: 00007fdcbb4b7000 00007ffd30f0d1e0

00007ffd30f0d1c8: 00007ffd30f0d210 00007ffd30f0d220

00007ffd30f0d1d8: 0000000000000000 00000000000000f1

00007ffd30f0d1e8: 0000000000000011 0000000000000000

00007ffd30f0d1f8: 000000000069c31c 0000000000c1c080

00007ffd30f0d208: 000000000045814e <runtime.callCgoMmap+62> 00007ffd30f0d210

00007ffd30f0d218: <00007ffd30f0d268 fffffffe7fffffff

00007ffd30f0d228: ffffffffffffffff ffffffffffffffff

00007ffd30f0d238: ffffffffffffffff ffffffffffffffff

00007ffd30f0d248: ffffffffffffffff ffffffffffffffff

00007ffd30f0d258: ffffffffffffffff ffffffffffffffff

00007ffd30f0d268: ffffffffffffffff ffffffffffffffff

00007ffd30f0d278: ffffffffffffffff ffffffffffffffff

00007ffd30f0d288: ffffffffffffffff ffffffffffffffff

00007ffd30f0d298: ffffffffffffffff 0000000000000000

00007ffd30f0d2a8: 00000000006b68ba 0000000000000020

00007ffd30f0d2b8: 0000000000000000 0000000000000000

00007ffd30f0d2c8: 0000000000000000 0000000000000000

00007ffd30f0d2d8: 0000000000000000 0000000000000000

00007ffd30f0d2e8: 0000000000000000 0000000000000000

00007ffd30f0d2f8: 0000000000000000 0000000000000000

00007ffd30f0d308: 0000000000000000 0000000000000000

runtime: unknown pc 0x6b657e

stack: frame={sp:0x7ffd30f0d218, fp:0x0} stack=[0x7ffd2ab0e738,0x7ffd30f0d760)

00007ffd30f0d118: 0000000000000002 00007ffd30f7f184

00007ffd30f0d128: 000000000069c31c 00007ffd30f0d1a8

00007ffd30f0d138: 000000000045814e <runtime.callCgoMmap+62> 00007ffd30f0d140

00007ffd30f0d148: 00007ffd30f0d190 0000000000411a88 <runtime.persistentalloc1+456>

00007ffd30f0d158: 0000000000bf6dd0 0000000000000000

00007ffd30f0d168: 0000000000010000 0000000000000008

00007ffd30f0d178: 0000000000bf6dd8 0000000000bf7ca0

00007ffd30f0d188: 00007fdcbb4b7000 00007ffd30f0d1c8

00007ffd30f0d198: 0000000000451205 <runtime.persistentalloc.func1+69> 0000000000000000

00007ffd30f0d1a8: 0000000000000000 0000000000c1c080

00007ffd30f0d1b8: 00007fdcbb4b7000 00007ffd30f0d1e0

00007ffd30f0d1c8: 00007ffd30f0d210 00007ffd30f0d220

00007ffd30f0d1d8: 0000000000000000 00000000000000f1

00007ffd30f0d1e8: 0000000000000011 0000000000000000

00007ffd30f0d1f8: 000000000069c31c 0000000000c1c080

00007ffd30f0d208: 000000000045814e <runtime.callCgoMmap+62> 00007ffd30f0d210

00007ffd30f0d218: <00007ffd30f0d268 fffffffe7fffffff

00007ffd30f0d228: ffffffffffffffff ffffffffffffffff

00007ffd30f0d238: ffffffffffffffff ffffffffffffffff

00007ffd30f0d248: ffffffffffffffff ffffffffffffffff

00007ffd30f0d258: ffffffffffffffff ffffffffffffffff

00007ffd30f0d268: ffffffffffffffff ffffffffffffffff

00007ffd30f0d278: ffffffffffffffff ffffffffffffffff

00007ffd30f0d288: ffffffffffffffff ffffffffffffffff

00007ffd30f0d298: ffffffffffffffff 0000000000000000

00007ffd30f0d2a8: 00000000006b68ba 0000000000000020

00007ffd30f0d2b8: 0000000000000000 0000000000000000

00007ffd30f0d2c8: 0000000000000000 0000000000000000

00007ffd30f0d2d8: 0000000000000000 0000000000000000

00007ffd30f0d2e8: 0000000000000000 0000000000000000

00007ffd30f0d2f8: 0000000000000000 0000000000000000

00007ffd30f0d308: 0000000000000000 0000000000000000

goroutine 1 [running]:

runtime.systemstack_switch()

/usr/local/go/src/runtime/asm_amd64.s:363 fp=0xc4200fe788 sp=0xc4200fe780 pc=0x454120

runtime.main()

/usr/local/go/src/runtime/proc.go:128 +0x63 fp=0xc4200fe7e0 sp=0xc4200fe788 pc=0x42bb83

runtime.goexit()

/usr/local/go/src/runtime/asm_amd64.s:2361 +0x1 fp=0xc4200fe7e8 sp=0xc4200fe7e0 pc=0x456c91

rax 0x0

rbx 0xbe2978

rcx 0x6b657e

rdx 0x0

rdi 0x2

rsi 0x7ffd30f0d1a0

rbp 0x8347ce

rsp 0x7ffd30f0d218

r8 0x0

r9 0x6

r10 0x8

r11 0x246

r12 0x2bedc30

r13 0xf1

r14 0x11

r15 0x0

rip 0x6b657e

rflags 0x246

cs 0x33

fs 0x0

gs 0x0

exec failed: container_linux.go:348: starting container process caused "read init-p: connection reset by peer"

|

From the above, it is clear that the exception was returned by runc.

Locating the cause

While locating the exception component, runc also gives us a surprise: it provides a detailed exception log.

The exception log shows that runc exec failed because of Resource temporarily unavailable, which is a typical under-resource problem. The common types of insufficient resources include (ulimit -a)

- Thread limit reached

- File limit reached

- Memory limit is reached

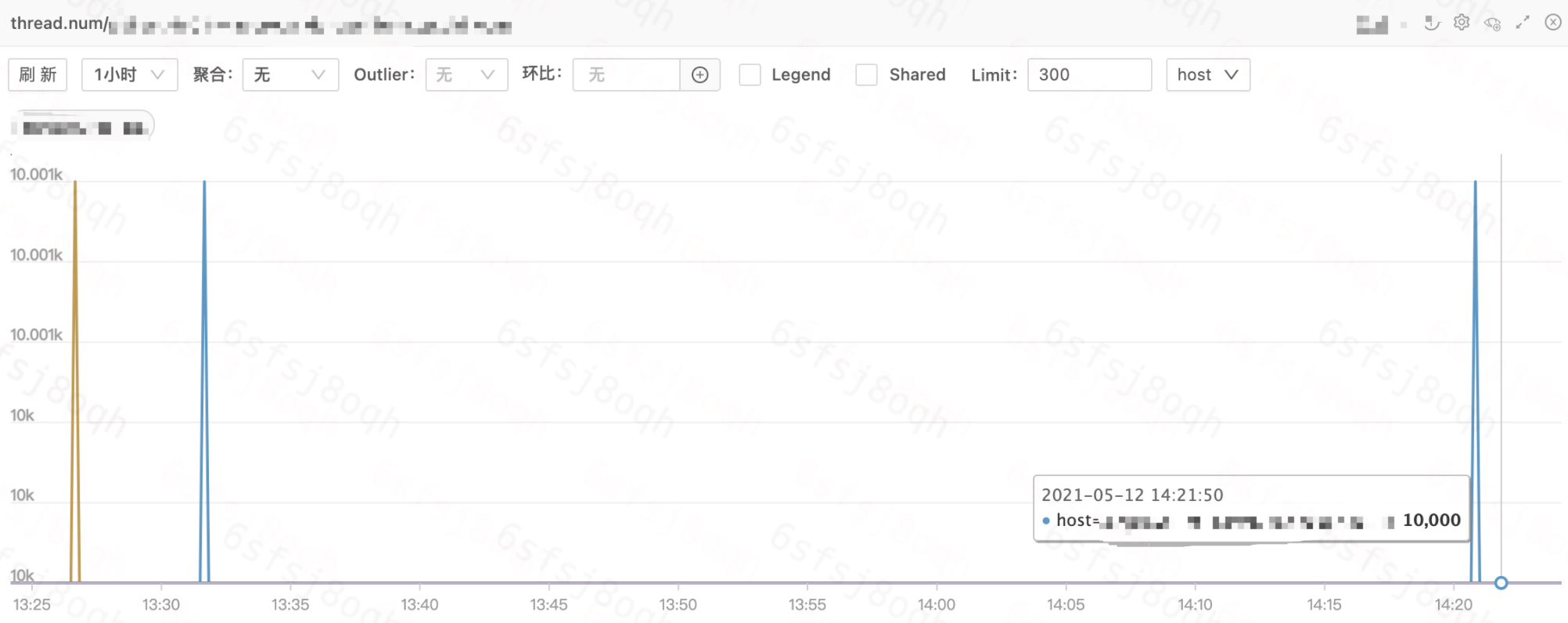

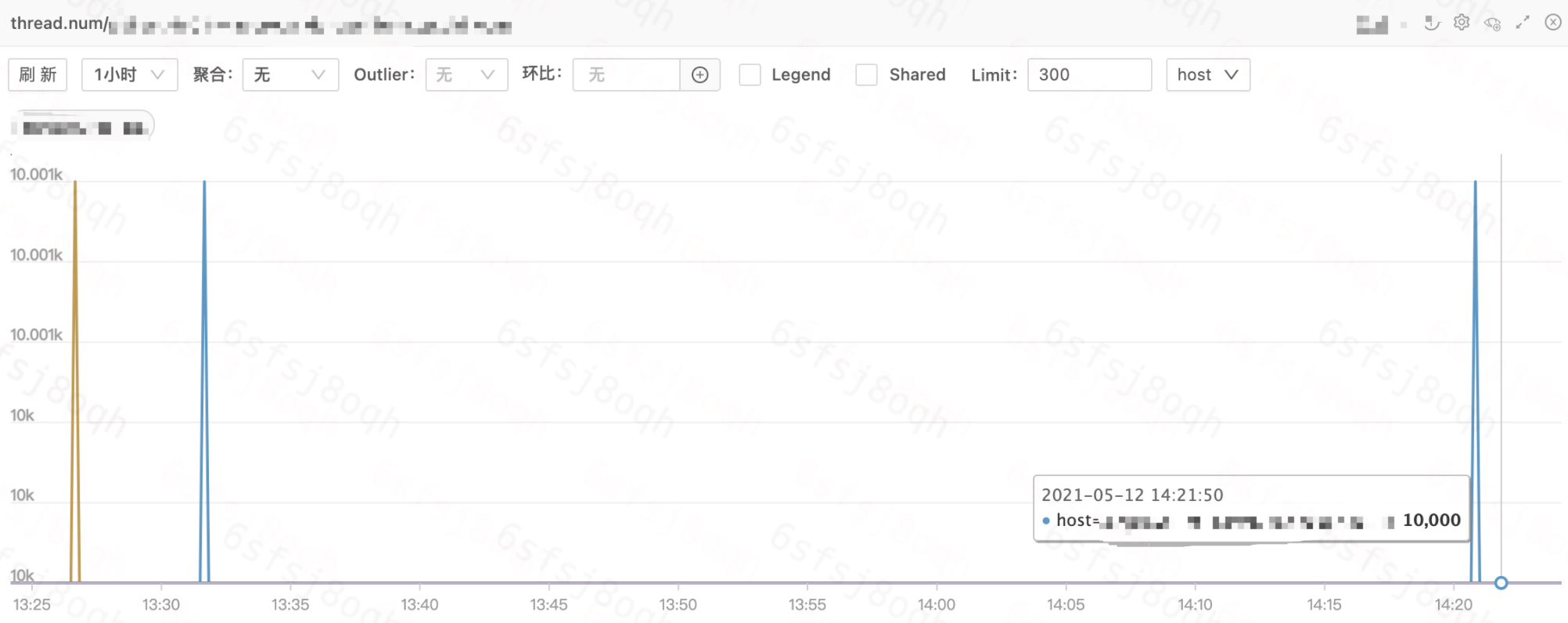

Therefore, we need to further troubleshoot the business container monitoring to locate the type of insufficient resources.

The above figure shows the thread count monitoring of the business containers. The thread count of all containers has reached 1w, and the default limit of the Elastic Cloud is 1w. The reason for setting this limit is also to avoid single container thread leakage and exhausting the thread resources of the host.

1

2

|

# cat /sys/fs/cgroup/pids/kubepods/burstable/pod64a6c0e7-830c-11eb-86d6-b8cef604db88/aa1e331ec24f621ab3152ebe94f1e533734164af86c9df0f551eab2b1967ec4e/pids.max

10000

|

At this point, the cause of the problem has been located clearly, and yes, it is that simple.

runc sorting

Although we have located the cause of the exception log, we have only a vague idea of how runc works.

While we’re at it, let’s take runc exec as an example and sort out the workflow of runc.

- runc exec first starts the child process runc init

- runc init is responsible for initializing the container namespace

- runc init uses the constructor feature of C to set the container namespace before the go code starts

- C code nsexec executes clone twice, three threads in total: parent process, child process, and grandchild process, to complete the initialization of container namespace

- the parent process and the child process exit after completing the initialization task, at this time, the grandchild process is already in the container namespace, and the grandchild process starts to execute the go code initialization and waits to receive the runc exec to send the configuration

- runc exec adds the grand process to the container cgroup

- runc exec sends configuration to the grand process, the configuration mainly contains: the specific commands and parameters of exec, etc.

- Sun process calls system.Execv to execute user commands

Notes.

- Step 2.c and step 3 are executed concurrently

- runc exec and runc init communication is based on socket pair (init-p and init-c)

The flow of interaction between the processes during runc exec and the initialization of namespace and cgroup is shown in the following figure.

Combining our combing of the runc exec execution flow and the error messages returned by runc exec, we basically locate the code where runc exec returns an error.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

|

func (p *setnsProcess) start() (err error) {

defer p.parentPipe.Close()

err = p.cmd.Start()

p.childPipe.Close()

if err != nil {

return newSystemErrorWithCause(err, "starting setns process")

}

if p.bootstrapData != nil {

if _, err := io.Copy(p.parentPipe, p.bootstrapData); err != nil { // clone标志位,ns配置

return newSystemErrorWithCause(err, "copying bootstrap data to pipe")

}

}

if err = p.execSetns(); err != nil {

return newSystemErrorWithCause(err, "executing setns process")

}

if len(p.cgroupPaths) > 0 {

if err := cgroups.EnterPid(p.cgroupPaths, p.pid()); err != nil { // 这里将runc init添加到容器cgroup中

return newSystemErrorWithCausef(err, "adding pid %d to cgroups", p.pid())

}

}

if err := utils.WriteJSON(p.parentPipe, p.config); err != nil { // 发送配置:命令、环境变量等

return newSystemErrorWithCause(err, "writing config to pipe")

}

ierr := parseSync(p.parentPipe, func(sync *syncT) error { // 这里返回 read init-p: connection reset by peer

switch sync.Type {

case procReady:

// This shouldn't happen.

panic("unexpected procReady in setns")

case procHooks:

// This shouldn't happen.

panic("unexpected procHooks in setns")

default:

return newSystemError(fmt.Errorf("invalid JSON payload from child"))

}

})

if ierr != nil {

p.wait()

return ierr

}

return nil

}

|

Now, the cause of the problem and the code analysis have all been completed.