Problem phenomenon

We have some GPU machines and need to count GPU related information, the data is taken from Prometheus, but one day we suddenly found that some labels of some GPU node metrics are empty.

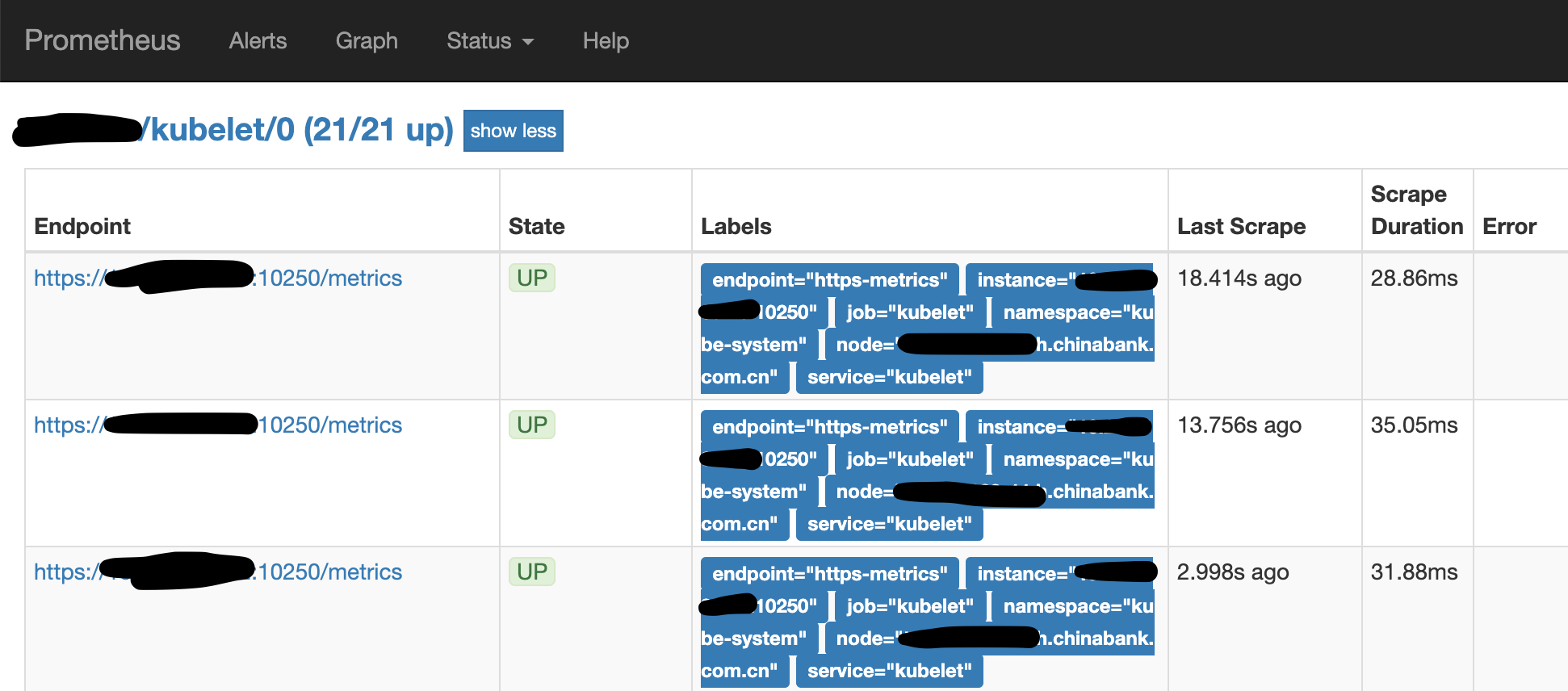

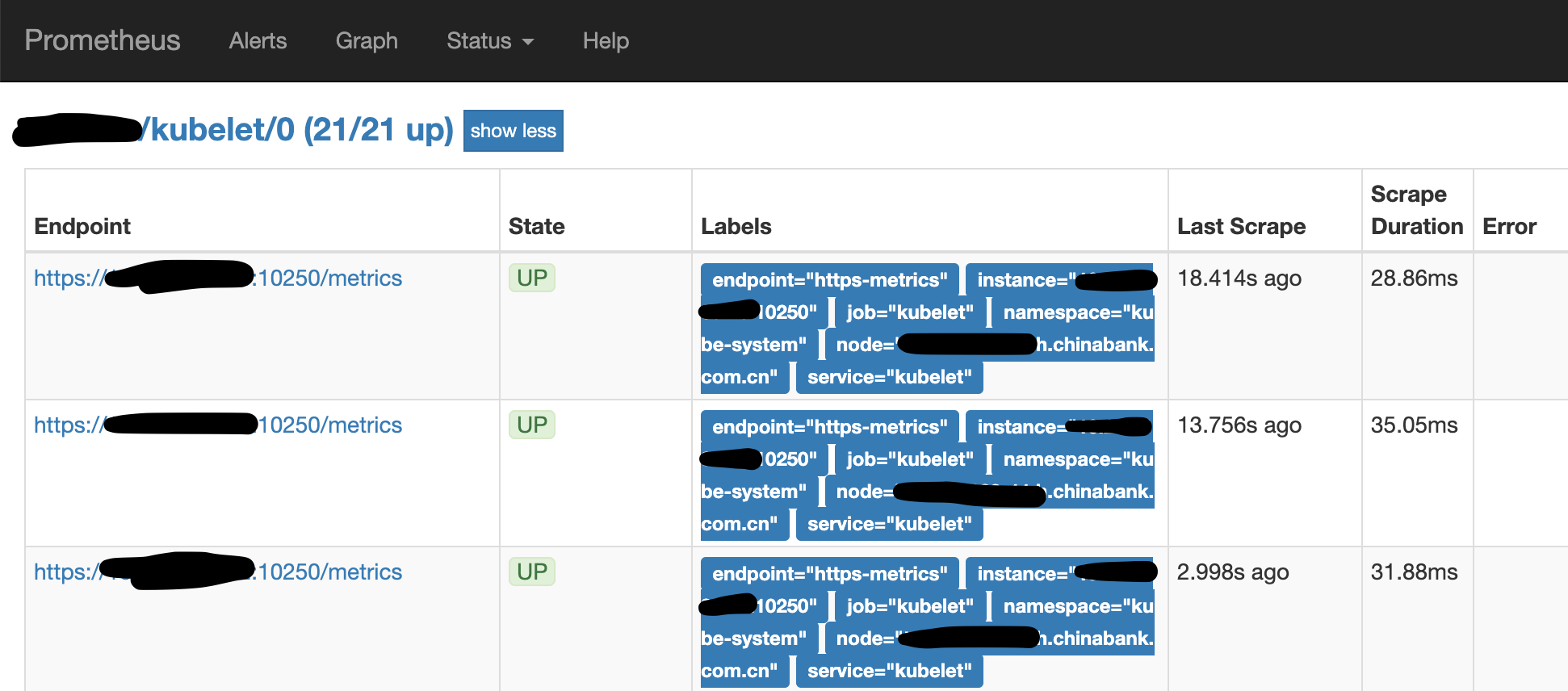

Here are the metrics of normal nodes.

1

|

container_accelerator_duty_cycle{acc_id="GPU-d8384090-81d2-eda5-ed02-8137eb037460",container_name="nvidia-device-plugin-ctr",endpoint="https-metrics",id="/kubepods/besteffort/podd917e00f-a779-11e9-b971-6805ca7f5b2a/38a5decf96e9f007fcb0059d79017ea3b3c29ff4c9a81b7fec86cf63c06baf53",image="sha256:7354b8a316796fba0463a68da79d79eb654d741241ca3c4f62a1ef24d8e11718",instance="10.0.0.1:10250",job="kubelet",make="nvidia",model="Tesla P40",name="k8s_nvidia-device-plugin-ctr_nvidia-device-plugin-daemonset-sn2cg_lambda_d917e00f-a779-11e9-b971-6805ca7f5b2a_1",namespace="ieevee",node="x1.ieevee.com",pod_name="nvidia-device-plugin-daemonset-sn2cg",service="kubelet"} 0

|

Here are the metrics of the exception node.

1

|

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",endpoint="https-metrics",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",instance="10.0.0.2:10250",job="kubelet",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""}

|

You can see that the data taken out by Prometheus, the container_name, name, namespace, and pod_name in the label are all empty.

container_accelerator_duty_cycle This metric is provided by kubelet (kubelet is a target of Prometheus) and can be viewed as metrics via https://10.0.0.2:10250/metrics.

Use the following command to view the metrics and compare the difference in the data taken from the kubelet of the normal and abnormal nodes.

1

|

curl -i -k -H "Authorization: Bearer ${token}" https://10.0.0.2:10250/metrics/cadvisor |grep container_accelerator_duty_cycle

|

Normal nodes.

1

|

container_accelerator_duty_cycle{acc_id="GPU-0e67d3a8-5c36-4232-46b1-14d4470adcb6",endpoint="https-metrics",id="/kubepods/besteffort/pod756bcd3a-e9a7-11e9-84e7-f8f21e04010c/9c6af6f329cf4d01462b0ba8c2f8bbb79269bc309434489228b4c97154f0f2d5",instance="10.0.0.1:10250",job="kubelet",make="nvidia",model="Tesla V100-PCIE-16GB",node="x1.ieevee.com",service="kubelet"}

|

Exception node.

1

|

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",image="",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""} 0

|

That is, the data taken from the kubelet already has the problem of some Label being empty, and the problem has nothing to do with Prometheus, so let’s see why the kubelet has the problem.

kubelet and cadvisor

We know that many of the kubelet metrics, are obtained through cadvisor, which runs directly in the kubelet process, and there is not a separate cadvisor process on the node. However, the cadvisor code still uses github.com/google/cadvisor, in other words, cadvisor has been turned into an SDK for kubelet calls.

Let’s briefly analyze the code (the following is based on 1.11.1).

The kubelet initializes a CAdvisorInterface when it starts.

1

2

3

4

5

6

7

8

9

|

func run(s *options.KubeletServer, kubeDeps *kubelet.Dependencies, stopCh <-chan struct{}) (err error) {

...

if kubeDeps.CAdvisorInterface == nil {

imageFsInfoProvider := cadvisor.NewImageFsInfoProvider(s.ContainerRuntime, s.RemoteRuntimeEndpoint)

kubeDeps.CAdvisorInterface, err = cadvisor.New(s.Address, uint(s.CAdvisorPort), imageFsInfoProvider, s.RootDirectory, cadvisor.UsingLegacyCadvisorStats(s.ContainerRuntime, s.RemoteRuntimeEndpoint))

if err != nil {

return err

}

}

|

The initialization process is mainly done by starting a cadvisor http server.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

// New creates a cAdvisor and exports its API on the specified port if port > 0.

func New(address string, port uint, imageFsInfoProvider ImageFsInfoProvider, rootPath string, usingLegacyStats bool) (Interface, error) {

...

cadvisorClient := &cadvisorClient{

imageFsInfoProvider: imageFsInfoProvider,

rootPath: rootPath,

Manager: m,

}

err = cadvisorClient.exportHTTP(address, port)

if err != nil {

return nil, err

}

return cadvisorClient, nil

|

The exportHTTP function starts the http server and registers a kubelet callback function containerLabels with the cadvisor http server, where the kubelet adds the label it needs to the cadvisor’s metrics.

1

2

3

4

5

6

|

func (cc *cadvisorClient) exportHTTP(address string, port uint) error {

// Register the handlers regardless as this registers the prometheus

// collector properly.

mux := http.NewServeMux()

...

cadvisorhttp.RegisterPrometheusHandler(mux, cc, "/metrics", containerLabels)

|

First look at what containerLabels has done.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

|

func containerLabels(c *cadvisorapi.ContainerInfo) map[string]string {

// Prometheus requires that all metrics in the same family have the same labels,

// so we arrange to supply blank strings for missing labels

var name, image, podName, namespace, containerName string

if len(c.Aliases) > 0 {

name = c.Aliases[0]

}

image = c.Spec.Image

if v, ok := c.Spec.Labels[types.KubernetesPodNameLabel]; ok {

podName = v

}

if v, ok := c.Spec.Labels[types.KubernetesPodNamespaceLabel]; ok {

namespace = v

}

if v, ok := c.Spec.Labels[types.KubernetesContainerNameLabel]; ok {

containerName = v

}

set := map[string]string{

metrics.LabelID: c.Name,

metrics.LabelName: name,

metrics.LabelImage: image,

"pod_name": podName,

"namespace": namespace,

"container_name": containerName,

}

return set

}

|

What do we see? pod_name, namespace, container_name, which correspond to the missing labels.

1

|

container_accelerator_duty_cycle{acc_id="GPU-a7b535d0-d6ca-022c-5b23-1bff863646a4",container_name="",id="/kubepods/besteffort/pod8bb25662-de9a-11e9-84e7-f8f21e04010c/cde3858becb05366e71f230e876204be586662f274dcb4a6e2b75ea404f2d5a9",image="",make="nvidia",model="Tesla V100-PCIE-16GB",name="",namespace="",pod_name=""} 0

|

So, when does cadvisor call containerLabels? Continue to RegisterPrometheusHandler.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

|

func RegisterPrometheusHandler(mux httpmux.Mux, containerManager manager.Manager, prometheusEndpoint string, f metrics.ContainerLabelsFunc) {

r := prometheus.NewRegistry()

r.MustRegister(

metrics.NewPrometheusCollector(containerManager, f),

...

}

func NewPrometheusCollector(i infoProvider, f ContainerLabelsFunc) *PrometheusCollector {

if f == nil {

f = DefaultContainerLabels

}

c := &PrometheusCollector{

infoProvider: i,

containerLabelsFunc: f, //here 1

...

containerMetrics: []containerMetric{

{

name: "container_last_seen",

help: "Last time a container was seen by the exporter",

}, {

...

name: "container_accelerator_duty_cycle", //here 2

help: "Percent of time over the past sample period during which the accelerator was actively processing.",

valueType: prometheus.GaugeValue,

extraLabels: []string{"make", "model", "acc_id"},

getValues: func(s *info.ContainerStats) metricValues {

values := make(metricValues, 0, len(s.Accelerators))

for _, value := range s.Accelerators {

values = append(values, metricValue{

value: float64(value.DutyCycle),

labels: []string{value.Make, value.Model, value.ID},

})

}

return values

},

}, {

|

In the NewPrometheusCollector, we see the container_accelerator_duty_cycle collected earlier, and the three labels make, model, and acc_id; and also containerLabels hooked up to the containerLabelsFunc.

Thus, when the user API requests metrics, the cadvisor can add the labels/values he needs to the metrics’ labels by calling the kubelet’s callback function containerLabels, as requested by the kubelet.

1

2

3

4

5

6

7

8

9

10

|

func (c *PrometheusCollector) collectContainersInfo(ch chan<- prometheus.Metric) {

..

for _, container := range containers {

values := make([]string, 0, len(rawLabels))

labels := make([]string, 0, len(rawLabels))

containerLabels := c.containerLabelsFunc(container)

for l := range rawLabels {

labels = append(labels, sanitizeLabelName(l))

values = append(values, containerLabels[l])

}

|

cadvisor and runtime

As you can see from the previous analysis, the missing labels were “delegated” to the cadvisor by the kubelet, but apparently the cadvisor is having some problems with them. Where is it?

Back to the “delegate function”, you can see from the following code, containerLabels actually reads the value of the map Labels from ContainerInfo, if the map does not have the corresponding key, then naturally the value is empty ( The initial value is an empty string).

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

func containerLabels(c *cadvisorapi.ContainerInfo) map[string]string {

var name, image, podName, namespace, containerName string

...

if v, ok := c.Spec.Labels[types.KubernetesPodNameLabel]; ok {

podName = v

}

if v, ok := c.Spec.Labels[types.KubernetesPodNamespaceLabel]; ok {

namespace = v

}

if v, ok := c.Spec.Labels[types.KubernetesContainerNameLabel]; ok {

containerName = v

}

const (

KubernetesPodNameLabel = "io.kubernetes.pod.name"

KubernetesPodNamespaceLabel = "io.kubernetes.pod.namespace"

KubernetesPodUIDLabel = "io.kubernetes.pod.uid"

KubernetesContainerNameLabel = "io.kubernetes.container.name"

KubernetesContainerTypeLabel = "io.kubernetes.container.type"

)

|

So the question is simplified to, c.Spec.Labels When was this processed?

cadvisor and docker

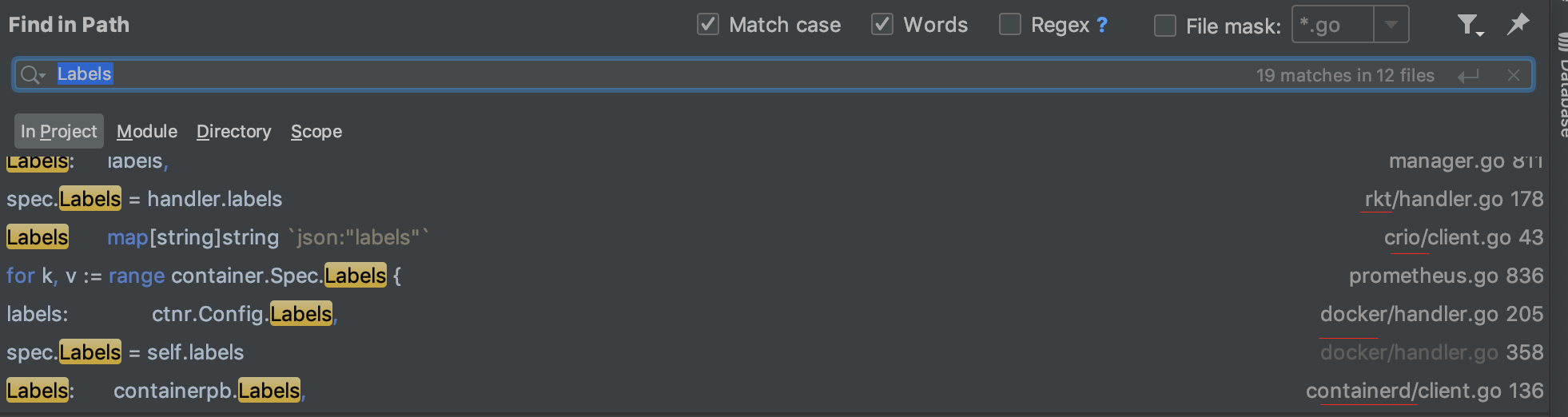

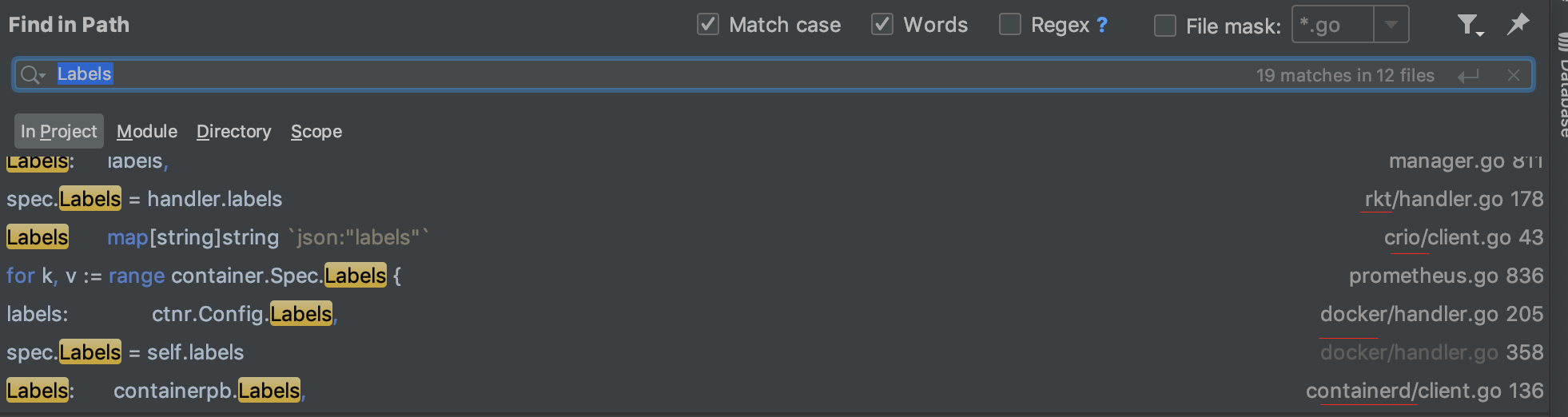

Because I hadn’t seen cadvisor’s code before that and didn’t understand cadvisor’s architecture, I went straight to see how Labels were obtained in cadvisor’s code.

As you can see, there are implementations related to docker, containerd, rkt, crio, etc. Since we are using docker on our cluster, let’s look at how docker is handled here.

1

2

3

4

5

6

7

8

9

10

11

|

func (self *dockerContainerHandler) GetSpec() (info.ContainerSpec, error) {

hasFilesystem := !self.ignoreMetrics.Has(container.DiskUsageMetrics)

spec, err := common.GetSpec(self.cgroupPaths, self.machineInfoFactory, self.needNet(), hasFilesystem)

spec.Labels = self.labels

spec.Envs = self.envs

spec.Image = self.image

spec.CreationTime = self.creationTime

return spec, err

}

|

Obviously, ContainerSpec is c.Spec. in containerLabels, and the line spec.Labels = self.labels tells us that cadvisor is getting Labels from dockerContainerHandler.

And where does dockerContainerHandler get the Labels from? Keep looking for the code.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

|

func newDockerContainerHandler(

client *docker.Client,

name string, ...)

...

// We assume that if Inspect fails then the container is not known to docker.

ctnr, err := client.ContainerInspect(context.Background(), id)

if err != nil {

return nil, fmt.Errorf("failed to inspect container %q: %v", id, err)

}

handler := &dockerContainerHandler{

...

labels: ctnr.Config.Labels,

ignoreMetrics: ignoreMetrics,

zfsParent: zfsParent,

}

|

OK, we found the real source of the data. In fact, docker inspects the container, gets the container’s ctnr.Config.Labels, and then passes it out layer by layer, so the cause of the problem is probably that ctnr.Config.Labels doesn’t have the pod_name, namespace, container_name, that is types.KubernetesPodNameLabel and other Labels.

Go to the GPU node where the exception is located and actually look at the docker information.

1

2

3

4

5

6

7

8

9

10

|

# docker inspect b8f6b265835e

[

{

"Id": "b8f6b265835ea112000aec5fd426be66f922bb241f3e65c45997e7bc63bad003",

"Config": {

"Labels": {

"io.kubernetes.container.name": "xxx",

"io.kubernetes.docker.type": "container",

"io.kubernetes.pod.name": "xxx-67d8c96565-nmcwg",

"io.kubernetes.pod.namespace": "ieevee",

|

Unfortunately, docker inspect has the corresponding Label, which is a bit puzzling and shouldn’t not be available.

The real reason

So is it possible that cadvisor has a poor path to docker and is not getting any information from docker directly?

Let’s take a look at the flow from cadvisor startup to the establishment of the path to docker.

When kubelet creates cadvisorClient, it creates the container manager, and then it calls the Start function of the container manager, and in the Start function, it registers docker, rkt, containerd, crio, etc.

So, cadvisor is actually the mover of Labels, and its data is also obtained by calling the APIs of docker, containerd, etc.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

// Start the container manager.

func (self *manager) Start() error {

err := docker.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the Docker container factory failed: %v.", err)

}

err = rkt.Register(self, self.fsInfo, self.ignoreMetrics)

...

err = containerd.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the containerd container factory failed: %v", err)

}

err = crio.Register(self, self.fsInfo, self.ignoreMetrics)

if err != nil {

glog.V(5).Infof("Registration of the crio container factory failed: %v", err)

}

|

On a wild assumption, if docker.Register fails to register, then you won’t be able to get any information from docker inspect.

Check the kubelet logs.

1

2

3

|

[root@10-0-0-2 /var/log]# grep "Registration of the Docker container factory" messages*

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784752 3217 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

messages-20191013:Oct 9 10:10:11 10-0-0-2 kubelet: I1009 10:10:11.221103 751886 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

|

Sure enough, docker registration failed. The reason for the registration failure is simple: the version of the docker was modified, and the version of the docker server is “dev”, which does not satisfy the regular expression "(\d+)\. (\d+)\. (\d+)", so the registration fails, so the desired label cannot be inspected.

Although it can’t be fetched from docker, can it be fetched from containerd?

The same, directly to the log grep “Registration of”.

1

2

3

4

5

|

[root@10-0-0-2 /var/log]# grep "Registration of" messages*

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784752 3217 manager.go:297] Registration of the Docker container factory failed: failed to validate Docker info: version string "dev" doesn't match expected regular expression: "(\d+)\.(\d+)\.(\d+)".

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784809 3217 manager.go:302] Registration of the rkt container factory failed: unable to communicate with Rkt api service: rkt: cannot tcp Dial rkt api service: dial tcp [::1]:15441: connect: connection refused

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.784937 3217 manager.go:313] Registration of the containerd container factory failed: unable to create containerd client: containerd: cannot unix dial containerd api service: dial unix /run/containerd/containerd.sock: connect: no such file or directory

messages-20191013:Oct 8 16:51:45 10-0-0-2 kubelet: I1008 16:51:45.785134 3217 manager.go:318] Registration of the crio container factory failed: Get http://%2Fvar%2Frun%2Fcrio%2Fcrio.sock/info: dial unix /var/run/crio/crio.sock: connect: no such file or directory

|

As you can see, containerd doesn’t work either, there is no file socket, and everything else fails too. In other words, cadvisor has no data source and no way to add the labels that kubelet wants.

Solution

It’s simple, just generate the version of docker server by regular expression, for example “18.06.1”.