Kubernetes (often referred to as K8s) is an open source system for automating the deployment, scaling, and management of containerized applications. It was originally designed by Google and is now maintained by the Cloud Native Computing Foundation (CNCF), which is part of the Linux Foundation.

It is designed to provide “a platform for automating the deployment, scaling, and running of application containers across host clusters”. It supports a range of container tools, including Docker.

Kubernetes (Greek for “helmsman” or “pilot”) was created by Joe Beda, Brendan Burns, and Craig McLuckie, who were joined by other Google engineers including Brian Grant and Tim Hockin, and it was first announced by Google in 2014. Its design and development were heavily influenced by Google’s Borg system, and many of its top contributors had previously worked on Borg. Inside Google, the original codename for Kubernetes was Project 7, a reference to Seven of Nine, the former Borg character from Star Trek; the seven spokes of the ship’s wheel in the Kubernetes logo are a nod to that codename.

Kubernetes has been one of the hottest technologies in the IT industry, and many people want to learn it, but the first obstacle they hit is that they need a k8s cluster. Cloud providers such as Alibaba Cloud and Baidu Cloud do offer managed k8s services, but you still have to pay for the service and the nodes. How can you build a k8s cluster on your own machine to learn with? This article shows one way: building a k8s test cluster with minikube.

Installation

minikube runs a single-node Kubernetes cluster on your local machine, inside a VM or a container, to support local development. It supports VM drivers such as VirtualBox, Hyper-V, and KVM2. Because minikube is a relatively mature project in the Kubernetes ecosystem, its list of supported features is impressive: load balancers, multiple clusters, NodePorts, persistent volumes, ingress, the dashboard, and multiple container runtimes.

With minikube, developers, operations staff, and DevOps engineers can quickly build a single-node Kubernetes environment locally. minikube does not need much in the way of hardware or software resources, which makes it easy to learn and practice with, and convenient for day-to-day development as well.

To run minikube, you need at least:

- 2 CPUs or more.

- 2GB of RAM.

- 20GB of disk space.

- Internet access.

- A container or virtual machine manager such as: Docker, Hyperkit, Hyper-V, KVM, Parallels, Podman, VirtualBox, or VMware Fusion/Workstation.

I’m using an M1 MacBook Pro with Docker installed, so all of these requirements are met. On Linux or Windows you can install minikube as well; just download the installer for your platform.

The rest of this article uses my environment as the example.

On macOS, there are two ways to install it.

The first is manual installation:

```shell
curl -LO https://storage.googleapis.com/minikube/releases/latest/minikube-darwin-amd64
sudo install minikube-darwin-amd64 /usr/local/bin/minikube
# On Apple Silicon (M1), minikube-darwin-arm64 is the native build.
```

If you can’t download the file this way for some reason, you can also download the binary from the minikube releases page on GitHub and put it at /usr/local/bin/minikube.
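The download URL follows a minikube-&lt;os&gt;-&lt;arch&gt; naming pattern, so it can be derived from the platform. The small Go sketch below builds the URL for whatever platform the program was compiled for; it assumes the release bucket keeps that naming, with an .exe suffix on Windows, so check the minikube releases page if a download fails.

```go
package main

import (
	"fmt"
	"runtime"
)

// releaseBase is the download prefix used in the curl command above.
const releaseBase = "https://storage.googleapis.com/minikube/releases/latest/"

// binaryURL builds the release URL for an OS/architecture pair, assuming the
// minikube-<os>-<arch> naming (with ".exe" on Windows).
func binaryURL(goos, goarch string) string {
	name := fmt.Sprintf("minikube-%s-%s", goos, goarch)
	if goos == "windows" {
		name += ".exe"
	}
	return releaseBase + name
}

func main() {
	// runtime.GOOS/GOARCH describe the target this program was built for,
	// e.g. darwin/arm64 on an M1 Mac when compiled natively.
	fmt.Println("curl -LO", binaryURL(runtime.GOOS, runtime.GOARCH))
}
```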

The second way is to install it with Homebrew:

```shell
brew install minikube

# See where minikube is installed
➜ ~ which minikube
/usr/local/bin/minikube
```

Start

The next step is to start minikube.

Run `minikube start` to start it.

The first start downloads the various images that k8s depends on (in China, some of these images may fail to download because of the Great Firewall).

My workaround was to pull the images from the Alibaba Cloud mirror registry and then re-tag them with the names k8s expects, for example:

```shell
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.22.3 k8s.gcr.io/kube-proxy:v1.22.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:v1.8.4 k8s.gcr.io/coredns/coredns:v1.8.4
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.22.3 k8s.gcr.io/kube-scheduler:v1.22.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner:v5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/storage-provisioner:v5 gcr.io/k8s-minikube/storage-provisioner:v5
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.22.3 k8s.gcr.io/kube-apiserver:v1.22.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.0-0
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.0-0 k8s.gcr.io/etcd:3.5.0-0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.3
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.22.3 k8s.gcr.io/kube-controller-manager:v1.22.3
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5
docker tag registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.5 k8s.gcr.io/pause:3.5
```

After pulling these images manually, I ran `minikube start` and the cluster started successfully.
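Every pull/tag pair above follows the same pattern: the image sits under the same path on the Alibaba Cloud mirror and is re-tagged with the name minikube looks for. Here is a minimal Go sketch that generates those commands from a table of pairs. It only prints a shell script (you could pipe it to sh), and the image list is copied from the commands above, so it is tied to Kubernetes v1.22.3 and would need updating for other versions.

```go
package main

import "fmt"

// mirror is the Alibaba Cloud registry prefix used in the commands above.
const mirror = "registry.cn-hangzhou.aliyuncs.com/google_containers/"

// retag pairs an image on the mirror (relative to mirror) with the name k8s expects.
type retag struct {
	src string
	dst string
}

// images lists the same pairs as the shell commands above (Kubernetes v1.22.3).
var images = []retag{
	{"kube-proxy:v1.22.3", "k8s.gcr.io/kube-proxy:v1.22.3"},
	{"coredns:v1.8.4", "k8s.gcr.io/coredns/coredns:v1.8.4"},
	{"kube-scheduler:v1.22.3", "k8s.gcr.io/kube-scheduler:v1.22.3"},
	{"storage-provisioner:v5", "gcr.io/k8s-minikube/storage-provisioner:v5"},
	{"kube-apiserver:v1.22.3", "k8s.gcr.io/kube-apiserver:v1.22.3"},
	{"etcd:3.5.0-0", "k8s.gcr.io/etcd:3.5.0-0"},
	{"kube-controller-manager:v1.22.3", "k8s.gcr.io/kube-controller-manager:v1.22.3"},
	{"pause:3.5", "k8s.gcr.io/pause:3.5"},
}

// commands returns the docker pull and docker tag commands for one pair.
func commands(r retag) []string {
	src := mirror + r.src
	return []string{
		"docker pull " + src,
		"docker tag " + src + " " + r.dst,
	}
}

func main() {
	// Print a shell script; e.g. `go run . | sh` would execute it.
	for _, r := range images {
		for _, c := range commands(r) {
			fmt.Println(c)
		}
	}
}
```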

I have also seen another way to start it online:

```shell
minikube start --image-mirror-country='cn'
```

This looks much simpler, but since my cluster was already installed I haven’t tried it; feel free to give it a try.

The `minikube start` command accepts a very large number of configuration parameters, for example:

- `--driver`: starting with version 1.5.0, minikube by default uses the system’s preferred driver to create the local Kubernetes environment; for example, if Docker is already installed, minikube uses the docker driver.

- `--cpus=2`: the number of CPU cores allocated to the minikube virtual machine.

- `--memory=4096mb`: the amount of memory allocated to the minikube virtual machine.

- `--kubernetes-version=`: the Kubernetes version the minikube virtual machine will run.

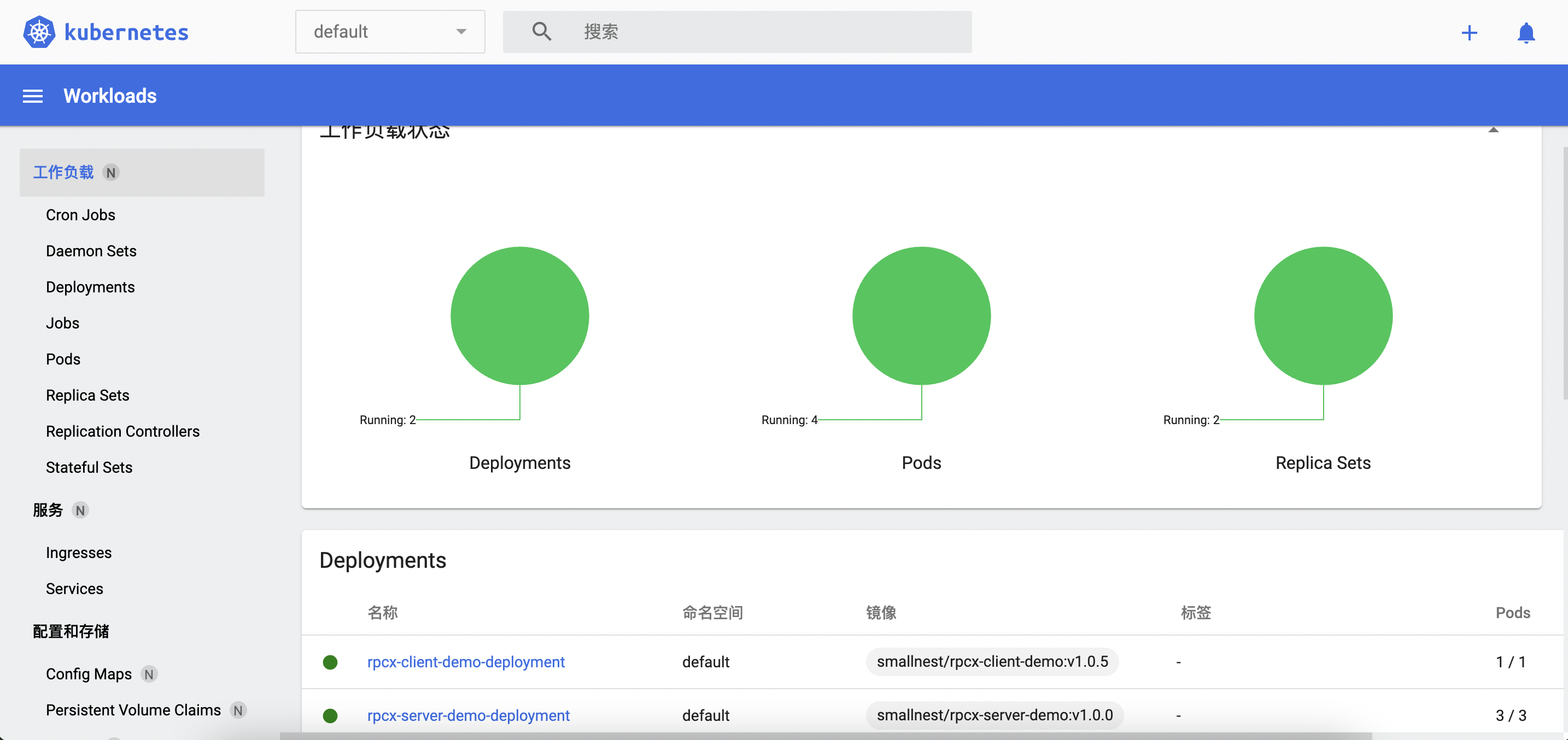

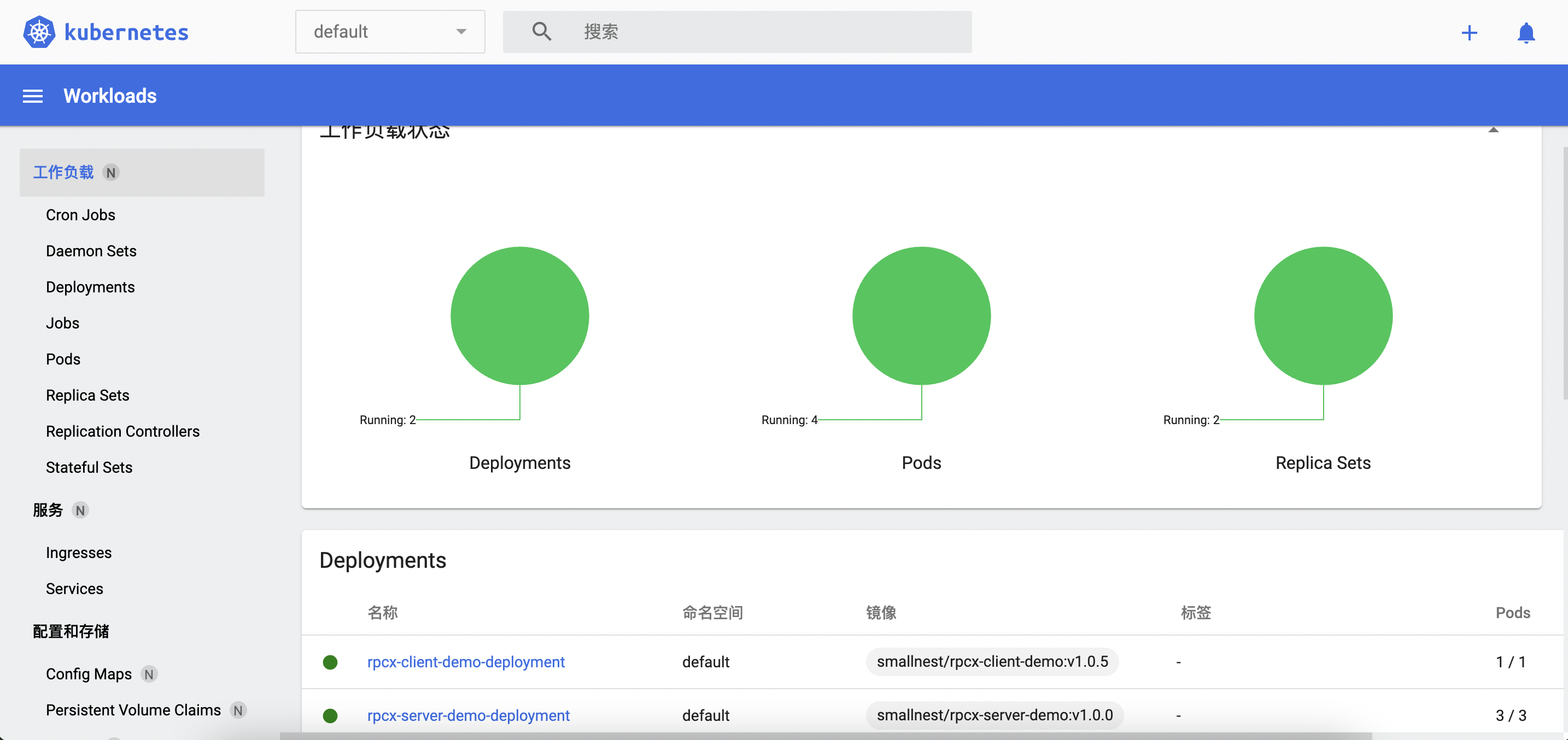

You can now open the Kubernetes dashboard with `minikube dashboard`.

With that, the local k8s test cluster is ready.

If you won’t be using it for a while, run `minikube stop` to stop it, and run `minikube start` again when you need it.

Plugins

You can now create, update, and delete Kubernetes objects directly with kubectl, and you are free to learn and experiment with k8s in your own cluster.

minikube also provides many plugins (called addons), such as istio, ingress, and helm-tiller. As you get more comfortable with k8s, you can install and try out these extensions one by one.

The list of plugins is as follows:

```
➜ ~ minikube addons list
|-----------------------------|----------|--------------|-----------------------|
| ADDON NAME                  | PROFILE  | STATUS       | MAINTAINER            |
|-----------------------------|----------|--------------|-----------------------|
| ambassador                  | minikube | disabled     | unknown (third-party) |
| auto-pause                  | minikube | disabled     | google                |
| csi-hostpath-driver         | minikube | disabled     | kubernetes            |
| dashboard                   | minikube | enabled ✅   | kubernetes            |
| default-storageclass        | minikube | enabled ✅   | kubernetes            |
| efk                         | minikube | disabled     | unknown (third-party) |
| freshpod                    | minikube | disabled     | google                |
| gcp-auth                    | minikube | disabled     | google                |
| gvisor                      | minikube | disabled     | google                |
| helm-tiller                 | minikube | disabled     | unknown (third-party) |
| ingress                     | minikube | disabled     | unknown (third-party) |
| ingress-dns                 | minikube | disabled     | unknown (third-party) |
| istio                       | minikube | disabled     | unknown (third-party) |
| istio-provisioner           | minikube | disabled     | unknown (third-party) |
| kubevirt                    | minikube | disabled     | unknown (third-party) |
| logviewer                   | minikube | disabled     | google                |
| metallb                     | minikube | disabled     | unknown (third-party) |
| metrics-server              | minikube | disabled     | kubernetes            |
| nvidia-driver-installer     | minikube | disabled     | google                |
| nvidia-gpu-device-plugin    | minikube | disabled     | unknown (third-party) |
| olm                         | minikube | disabled     | unknown (third-party) |
| pod-security-policy         | minikube | disabled     | unknown (third-party) |
| portainer                   | minikube | disabled     | portainer.io          |
| registry                    | minikube | disabled     | google                |
| registry-aliases            | minikube | disabled     | unknown (third-party) |
| registry-creds              | minikube | disabled     | unknown (third-party) |
| storage-provisioner         | minikube | enabled ✅   | kubernetes            |
| storage-provisioner-gluster | minikube | disabled     | unknown (third-party) |
| volumesnapshots             | minikube | disabled     | kubernetes            |
|-----------------------------|----------|--------------|-----------------------|
```

Enabling a plugin can be done with the following command:

```
➜ ~ minikube addons enable ingress
💡 After the addon is enabled, please run "minikube tunnel" and your ingress resources would be available at "127.0.0.1"
    ▪ Using image k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1
    ▪ Using image k8s.gcr.io/ingress-nginx/controller:v1.0.4
    ▪ Using image k8s.gcr.io/ingress-nginx/kube-webhook-certgen:v1.1.1
🔎 Verifying ingress addon...
```

k8s cluster

Now that we have a k8s cluster, let’s use it to deploy an application and a service.

As an example, we’ll deploy a hello-world application built with the rpcx microservice framework.

Deploying an rpcx server-side application

The rpcx server-side application is simple:

```go
package main

import (
	"context"
	"flag"
	"fmt"

	example "github.com/rpcxio/rpcx-examples"
	"github.com/smallnest/rpcx/server"
)

var addr = flag.String("addr", ":8972", "server address")

type Arith struct{}

// Mul multiplies args.A by args.B and stores the result in reply.C.
// Note that args (the second parameter) is passed by value, not as a pointer.
func (t *Arith) Mul(ctx context.Context, args example.Args, reply *example.Reply) error {
	reply.C = args.A * args.B
	fmt.Println("C=", reply.C)
	return nil
}

func main() {
	flag.Parse()

	s := server.NewServer()
	// s.Register(new(Arith), "")
	s.RegisterName("Arith", new(Arith), "")

	err := s.Serve("tcp", *addr)
	if err != nil {
		panic(err)
	}
}
```

Write a Dockerfile that compiles the program and builds the image:

```dockerfile
FROM golang:1.18-alpine AS builder

WORKDIR /usr/src/app

ENV GOPROXY=https://goproxy.cn

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && \
    apk add --no-cache ca-certificates tzdata

COPY ./go.mod ./
COPY ./go.sum ./
RUN go mod download

COPY . .
RUN CGO_ENABLED=0 go build -ldflags "-s -w" -o rpcx_server

FROM scratch AS runner

COPY --from=builder /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
COPY --from=builder /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/
COPY --from=builder /usr/src/app/rpcx_server /opt/app/

EXPOSE 8972

CMD ["/opt/app/rpcx_server"]
```

Build the image (I also pushed it to Docker Hub):

```shell
docker build . -t smallnest/rpcx-server-demo:0.1.0
docker push smallnest/rpcx-server-demo:0.1.0
```

Next, write the Deployment file, which uses our image and runs 3 replicas:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rpcx-server-demo-deployment
spec:
  selector:
    matchLabels:
      app: rpcx-server-demo
  replicas: 3
  template:
    metadata:
      labels:
        app: rpcx-server-demo
    spec:
      containers:
      - name: rpcx-server-demo
        image: smallnest/rpcx-server-demo:0.1.0
        ports:
        - containerPort: 8972
```

Next, define a Service that exposes our microservice as a k8s Service:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: rpcx-server-demo-service  # Name of the Service
  labels:                         # Labels on the Service itself
    app: rpcx-server-demo         # Label with key app and value rpcx-server-demo
spec:                             # How the Service selects Pods and how they are accessed
  selector:                       # Label selector
    app: rpcx-server-demo         # Select Pods labeled app: rpcx-server-demo
  ports:
  - name: rpcx-server-demo-port   # Name of the port
    protocol: TCP                 # Protocol: TCP or UDP
    port: 9981                    # Other Pods in the cluster reach the Service on port 9981
    targetPort: 8972              # Requests are forwarded to port 8972 of the matching Pods
```

Finally, save the two manifests as rpcx-server-demo.yaml and rpcx-server-demo-service.yaml and run the following commands to deploy the application and the Service:

```shell
kubectl apply -f rpcx-server-demo.yaml
kubectl apply -f rpcx-server-demo-service.yaml
```

You can then check the deployed Pods and Services:

```
➜ ~ kubectl get pods
NAME                                           READY   STATUS    RESTARTS       AGE
rpcx-server-demo-deployment-7f9d85c5dc-42wbm   1/1     Running   1 (102d ago)   103d
rpcx-server-demo-deployment-7f9d85c5dc-499gm   1/1     Running   1 (102d ago)   103d
rpcx-server-demo-deployment-7f9d85c5dc-s6gh9   1/1     Running   1 (25h ago)    103d
```

```
➜ ~ kubectl get svc
NAME                       TYPE        CLUSTER-IP    EXTERNAL-IP   PORT(S)    AGE
kubernetes                 ClusterIP   10.96.0.1     <none>        443/TCP    114d
rpcx-server-demo-service   ClusterIP   10.98.134.3   <none>        9981/TCP   103d
```

Deploying the rpcx client application

Similarly, we deploy the rpcx client application, which will call the rpcx service we just deployed.

```go
package main

import (
	"context"
	"flag"
	"fmt"
	"log"
	"os"
	"strings"
	"time"

	example "github.com/rpcxio/rpcx-examples"
	"github.com/smallnest/rpcx/client"
	"github.com/smallnest/rpcx/protocol"
)

func main() {
	flag.Parse()

	// Kubernetes injects RPCX_SERVER_DEMO_SERVICE_PORT=tcp://<cluster-ip>:<port>
	// for the rpcx-server-demo-service Service; strip the scheme to get host:port.
	port := os.Getenv("RPCX_SERVER_DEMO_SERVICE_PORT")
	if port == "" {
		log.Fatal("RPCX_SERVER_DEMO_SERVICE_PORT is not set")
	}
	addr := strings.TrimPrefix(port, "tcp://")
	fmt.Println("dial ", addr)

	d, err := client.NewPeer2PeerDiscovery("tcp@"+addr, "")
	if err != nil {
		log.Fatalf("failed to create discovery: %v", err)
	}
	opt := client.DefaultOption
	opt.SerializeType = protocol.JSON

	xclient := client.NewXClient("Arith", client.Failtry, client.RandomSelect, d, opt)
	defer xclient.Close()

	args := example.Args{
		A: 10,
		B: 20,
	}

	// Call Arith.Mul once per second until an error occurs.
	for {
		reply := &example.Reply{}
		err := xclient.Call(context.Background(), "Mul", args, reply)
		if err != nil {
			log.Fatalf("failed to call: %v", err)
		}
		log.Printf("%d * %d = %d", args.A, args.B, reply.C)
		time.Sleep(time.Second)
	}
}
```
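The client relies on environment variables that Kubernetes injects into Pods: for each Service it sets Docker-links style variables named after the Service, upper-cased with dashes turned into underscores. For rpcx-server-demo-service, RPCX_SERVER_DEMO_SERVICE_PORT holds a value like tcp://10.98.134.3:9981, which is why the code strips tcp://. These variables are only set for Pods created after the Service, so the Go sketch below falls back to the Service’s cluster DNS name (rpcx-server-demo-service:9981, resolvable from Pods in the same namespace). The helper names linkEnvName and serverAddr are hypothetical.

```go
package main

import (
	"fmt"
	"os"
	"strings"
)

// linkEnvName turns a Service name into the Docker-links style variable that
// Kubernetes injects into Pods: upper-case, '-' replaced by '_', plus "_PORT".
func linkEnvName(service string) string {
	return strings.ToUpper(strings.ReplaceAll(service, "-", "_")) + "_PORT"
}

// serverAddr prefers the injected variable (value like "tcp://10.98.134.3:9981")
// and falls back to the Service's cluster DNS name, which also works for Pods
// started before the Service existed.
func serverAddr(service string, port int, getenv func(string) string) string {
	if v := getenv(linkEnvName(service)); v != "" {
		return strings.TrimPrefix(v, "tcp://")
	}
	return fmt.Sprintf("%s:%d", service, port)
}

func main() {
	fmt.Println("dial", serverAddr("rpcx-server-demo-service", 9981, os.Getenv))
}
```

Passing os.Getenv as a function keeps the lookup testable: a test can supply a map-backed getenv instead of touching the real environment.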

Write a Dockerfile to build the client image:

```dockerfile
FROM golang:1.18-alpine AS builder

WORKDIR /usr/src/app

ENV GOPROXY=https://goproxy.cn

RUN sed -i 's/dl-cdn.alpinelinux.org/mirrors.aliyun.com/g' /etc/apk/repositories && \
    apk add --no-cache ca-certificates tzdata

COPY ./go.mod ./
COPY ./go.sum ./
RUN go mod download

COPY . .
RUN CGO_ENABLED=0 go build -ldflags "-s -w" -o rpcx_client

FROM busybox AS runner

COPY --from=builder /usr/share/zoneinfo/Asia/Shanghai /etc/localtime
COPY --from=builder /etc/ssl/certs/ca-certificates.crt /etc/ssl/certs/
COPY --from=builder /usr/src/app/rpcx_client /opt/app/

CMD ["/opt/app/rpcx_client"]
```

Build and push the image:

```shell
docker build . -t smallnest/rpcx-client-demo:0.1.0
docker push smallnest/rpcx-client-demo:0.1.0
```

Write the Deployment YAML file for the client:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rpcx-client-demo-deployment
spec:
  selector:
    matchLabels:
      app: rpcx-client-demo
  replicas: 1
  template:
    metadata:
      labels:
        app: rpcx-client-demo
    spec:
      containers:
      - name: rpcx-client-demo
        image: smallnest/rpcx-client-demo:0.1.0
```

Finally, deploy it:

```shell
kubectl apply -f rpcx-client-demo.yaml
```

Check whether the client has been deployed successfully:

```
➜ ~ kubectl get pods
NAME                                           READY   STATUS    RESTARTS       AGE
rpcx-client-demo-deployment-699bfb8799-wdsww   1/1     Running   1 (25h ago)    103d
rpcx-server-demo-deployment-7f9d85c5dc-42wbm   1/1     Running   1 (102d ago)   103d
rpcx-server-demo-deployment-7f9d85c5dc-499gm   1/1     Running   1 (102d ago)   103d
rpcx-server-demo-deployment-7f9d85c5dc-s6gh9   1/1     Running   1 (25h ago)    103d
```

Since the client Deployment has replicas: 1, only one client Pod is running.

Checking the client’s log, you can see that it has called the service successfully:

```
➜ ~ kubectl logs rpcx-client-demo-deployment-699bfb8799-wdsww | more
dial 10.98.134.3:9981
2022/06/02 15:45:17 10 * 20 = 200
2022/06/02 15:45:18 10 * 20 = 200
2022/06/02 15:45:19 10 * 20 = 200
2022/06/02 15:45:20 10 * 20 = 200
2022/06/02 15:45:21 10 * 20 = 200
2022/06/02 15:45:22 10 * 20 = 200
2022/06/02 15:45:23 10 * 20 = 200
2022/06/02 15:45:24 10 * 20 = 200
```