Memory ballast and the auto GC tuner are both optimizations for the same problem: Go triggers GC frequently even when memory is far from fully utilized, and those GC cycles burn a lot of CPU.

Memory ballast tricks GOGC by allocating one huge object (typically several GB) on the heap. With the live heap inflated, the heap goal rises with it, so Go lets the heap grow much further before triggering the next GC, which reduces GC frequency.

The auto GC tuner that Uber shared later is a bit smarter. It sets a memory usage threshold for the program and registers a user finalizer function that is called back on every GC; each time it fires, the tuner adjusts GOGC dynamically, so that the memory used by the application gradually converges to the target.

At a company I used to work for, the monitoring team next door once implemented a dynamic GOGC scheme (with values below 100) to keep memory within 8GB and avoid OOM, which is similar to Uber’s approach.

In the end, both approaches are hacks. Users cannot modify the GC logic directly, so they can only change the application’s behavior to trick the GC pacer, and thereby avoid OOM or reduce GC overhead.

The new debug.SetMemoryLimit in Go 1.19 solves this problem at its root, so memory ballast and the GC tuner can be thrown straight into the trash.

- OOM scenario: use SetMemoryLimit to set a memory limit.

- High GC frequency scenario: use SetMemoryLimit to set a memory limit, and set GOGC=off.

How was this achieved?

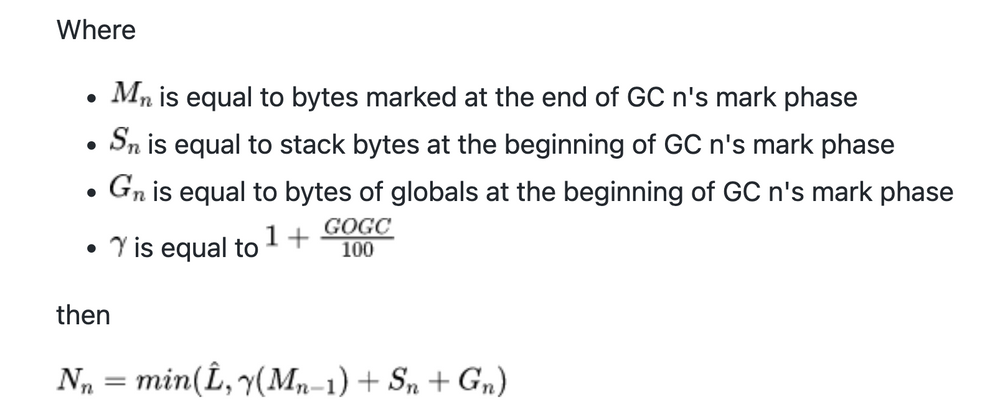

In short, the heap goal is now the smaller of two values:

- the memory limit minus the space occupied by stacks and other non-heap memory

- the GOGC-based goal: live heap + (live heap + GC roots) × GOGC/100, where the GC roots are the goroutine stacks and global variables

Because the smaller of the two wins, once the user sets a memory limit the heap can no longer grow unexpectedly until OOM, as it could before when the heap goal was computed from GOGC alone.

According to the official documentation, the memory limit is still respected even when GOGC=off, so the earlier GC tuner optimization reduces to setting GOGC to off and setting the memory limit to 70% of available memory (the value recommended in Uber’s article).

Besides the GC update, another interesting change in 1.19 determines the initial goroutine stack size from stack statistics: the runtime averages the stack sizes it scans during GC, and the next time newproc creates a goroutine, it uses this average as the initial stack size instead of the previous fixed 2KB.

Statistics-based initial goroutine stacks should be a boon for API gateway systems that hold huge numbers of connections, and you should no longer need optimizations that estimate stack size function by function.

Reducing the depth of nested function calls just to shrink the stack’s memory footprint is too tiring.