I was recently working in an intranet environment where I needed to build a K8s cluster from scratch and migrate a set of microservices onto it.

The following table summarizes the options for deploying K8s offline that I researched beforehand.

| Tool | Language | Stars | Forks | Offline deployment support |
|---|---|---|---|---|
| kops | Golang | 13.2k | 4.1k | Not supported |
| kubespray | Ansible | 11.1k | 4.7k | Supported, but you need to build the installation package yourself |
| kubeasz | Ansible | 7.2k | 2.7k | Supported, but you need to build the installation package yourself |
| sealos | Golang | 4.1k | 790 | Supported, payment required |
| RKE | Golang | 2.5k | 480 | Not supported; Docker must be installed separately |
| sealer | Golang | 503 | 112 | Supported, derived from sealos |
| kubekey | Golang | 471 | 155 | Partially supported; only the images are available offline |

I tried sealos and kubekey from the table above, but the CNI never came up properly because the network environment of the machines I was initially given was not clean. These tools simplify deployment, but they also hide many details, which makes problems harder to locate. In the end I built the cluster myself with kubeadm.

Preparing the deployment materials

Server

- Operating system: Ubuntu 18.04

- Machines: 3

Docker

```shell
wget -P /home/deploy/deb/docker/ https://download.docker.com/linux/ubuntu/dists/bionic/pool/stable/amd64/docker-ce_19.03.13~3-0~ubuntu-bionic_amd64.deb
wget -P /home/deploy/deb/docker/ https://download.docker.com/linux/ubuntu/dists/bionic/pool/stable/amd64/containerd.io_1.3.7-1_amd64.deb
wget -P /home/deploy/deb/docker/ https://download.docker.com/linux/ubuntu/dists/bionic/pool/stable/amd64/docker-ce-cli_19.03.13~3-0~ubuntu-bionic_amd64.deb
```

K8s

```shell
apt-get update && apt-get install -y apt-transport-https
curl https://mirrors.aliyun.com/kubernetes/apt/doc/apt-key.gpg | apt-key add -
cat > /etc/apt/sources.list.d/kubernetes.list << ERIC
deb https://mirrors.aliyun.com/kubernetes/apt/ kubernetes-xenial main
ERIC
apt-get update
# List the kubeadm versions available in the repository
apt-cache madison kubeadm
VERSION=1.19.16-00
# Download the packages locally without installing them;
# apt expects a partial/ subdirectory inside the target archive directory
mkdir -p /home/deploy/deb/k8s/partial
apt-get install -y --download-only -o dir::cache::archives=/home/deploy/deb/k8s kubelet=$VERSION kubeadm=$VERSION kubectl=$VERSION
```
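`apt-get install --download-only` only fetches packages that are not already installed on the download machine, so dependencies such as `kubernetes-cni` or `cri-tools` can be missing from `/home/deploy/deb/k8s` if that machine already had them. A minimal sketch of a check to run before copying the directory into the intranet; `check_debs` is a hypothetical helper, and the package names in the usage line are an assumption to compare against what `apt-cache depends kubeadm kubelet` reports on your machine.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which expected packages have no .deb file in a directory.
# Usage: check_debs DIR PACKAGE...
check_debs() {
  local dir="$1"
  shift
  local pkg missing=0
  for pkg in "$@"; do
    # Debian archive files are named NAME_VERSION_ARCH.deb.
    if ! compgen -G "${dir}/${pkg}_*.deb" > /dev/null; then
      echo "missing: ${pkg}" >&2
      missing=1
    fi
  done
  # Non-zero exit status if at least one package is missing.
  return "$missing"
}
```

For example: `check_debs /home/deploy/deb/k8s kubelet kubeadm kubectl kubernetes-cni cri-tools && echo "all present"`.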

Images

For users in mainland China, the following domestic mirror registry can be used:

```
registry.cn-hangzhou.aliyuncs.com/google_containers
```

Prepare the following images on a machine with Internet access. The list can be printed with `kubeadm config images list`:

```shell
$ kubeadm config images list --kubernetes-version=v1.19.16 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.16
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.16
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.16
registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.16
registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
```

Pull

```shell
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.16
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.16
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.16
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.16
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0
docker pull registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
```

Save

```shell
# Save all seven images into a single archive
docker save -o k8s.tar \
  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-apiserver:v1.19.16 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-controller-manager:v1.19.16 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-scheduler:v1.19.16 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/kube-proxy:v1.19.16 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.2 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.4.13-0 \
  registry.cn-hangzhou.aliyuncs.com/google_containers/coredns:1.7.0
```
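The pull and save steps can also be driven directly by the output of `kubeadm config images list`, so the image list cannot drift from the Kubernetes version. A sketch, assuming `docker` and `kubeadm` are available on the networked machine; `pull_and_save` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: pull each image read from stdin (one reference per line),
# then bundle all of them into a single archive with `docker save`.
# Usage: <image list> | pull_and_save OUTPUT.tar
pull_and_save() (
  # Subshell body: the strict-mode options below stay local to this function.
  set -euo pipefail
  out="$1"
  images=()
  while IFS= read -r img; do
    [ -n "$img" ] || continue          # skip blank lines
    docker pull "$img" < /dev/null     # keep docker from reading the image list
    images+=("$img")
  done
  if [ "${#images[@]}" -eq 0 ]; then
    echo "no images given on stdin" >&2
    exit 1                             # leaves only the subshell
  fi
  # docker save accepts several images and writes them into one tar file.
  docker save -o "$out" "${images[@]}"
)
```

For example, pipe `kubeadm config images list --kubernetes-version=v1.19.16 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers` into `pull_and_save k8s.tar`.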

Flannel

Download: https://raw.githubusercontent.com/coreos/flannel/master/Documentation/kube-flannel.yml
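The Flannel manifest references its own container images, which the offline nodes cannot pull either. A sketch that lists them so they can be pulled and saved the same way as the Kubernetes images; `flannel_images` is a hypothetical helper, and it assumes each image appears on a line of the form `image: <reference>` (optionally `- image:`) in kube-flannel.yml.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the unique image references used in a manifest.
# Usage: flannel_images kube-flannel.yml
flannel_images() {
  # Keep only "image:" lines (optionally written as "- image:"),
  # drop the key and any double quotes, then de-duplicate.
  sed -nE 's/^[[:space:]]*(-[[:space:]]+)?image:[[:space:]]*//p' "$1" \
    | tr -d '"' \
    | sort -u
}
```

For example: `flannel_images kube-flannel.yml | xargs -n1 docker pull`, followed by a `docker save` of the listed images.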

Deployment

Disable swap
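By default the kubelet refuses to run while swap is enabled (and `kubeadm init` reports this in its preflight checks), so swap has to be turned off now and kept off after a reboot. A minimal sketch, to be run as root on every node; `disable_swap` is a hypothetical helper, and it assumes swap entries in /etc/fstab have `swap` as a separate whitespace-delimited field.

```shell
#!/usr/bin/env bash
# Hypothetical helper: turn swap off now and comment out swap entries in fstab.
# Usage (as root): disable_swap [FSTAB]   # FSTAB defaults to /etc/fstab
disable_swap() {
  local fstab="${1:-/etc/fstab}"
  swapoff -a || return 1
  # Prefix every uncommented line containing a "swap" field with '#',
  # keeping the previous file as FSTAB.bak.
  sed -i.bak -E '/^[^#].*[[:space:]]swap[[:space:]]/ s/^/#/' "$fstab"
}
```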

Turn off the firewall

```shell
systemctl disable ufw && systemctl stop ufw
```

Install Docker

```shell
dpkg -i /home/deploy/deb/docker/*.deb
```

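After installation, Docker uses the `cgroupfs` cgroup driver by default. The kubelet and the container runtime should use the same driver, and `systemd` is the recommended one on systemd-based hosts such as Ubuntu, so edit the Docker configuration file:

```shell
sudo vi /etc/docker/daemon.json
```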

Add the following:

```json
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
```

Restart Docker:

```shell
sudo systemctl daemon-reload && sudo systemctl restart docker
```
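The edit and restart steps can also be scripted, which is handy when repeating them on all three machines. A sketch; `write_daemon_json` is a hypothetical helper that overwrites the file (keeping a `.bak` copy), so it assumes no other settings in daemon.json need to be preserved.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write a daemon.json that switches Docker to the systemd cgroup driver.
# Usage (as root): write_daemon_json [PATH]   # PATH defaults to /etc/docker/daemon.json
write_daemon_json() {
  local path="${1:-/etc/docker/daemon.json}"
  mkdir -p "$(dirname "$path")" || return 1
  # Keep a backup if a configuration file already exists.
  if [ -f "$path" ]; then
    cp "$path" "$path.bak" || return 1
  fi
  cat > "$path" <<'EOF'
{
  "exec-opts": ["native.cgroupdriver=systemd"]
}
EOF
}
```

After restarting Docker as above, `docker info --format '{{.CgroupDriver}}'` should print `systemd`.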

Install kubeadm, kubelet and kubectl

```shell
# Offline installation of the K8s packages
dpkg -i /home/deploy/deb/k8s/*.deb
```

Import the images
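On every node, load the archive created with `docker save` and confirm that the expected images are present locally. A sketch, assuming `k8s.tar` has been copied to the node; `load_images` is a hypothetical helper that reads the expected image references from stdin.

```shell
#!/usr/bin/env bash
# Hypothetical helper: load an image archive, then check that every image
# listed on stdin (one reference per line) exists in the local Docker store.
# Usage: <image list> | load_images ARCHIVE.tar
load_images() (
  # Subshell body: strict mode applies only inside this function.
  set -euo pipefail
  archive="$1"
  docker load -i "$archive" < /dev/null
  status=0
  while IFS= read -r img; do
    [ -n "$img" ] || continue
    if ! docker image inspect "$img" > /dev/null 2>&1; then
      echo "not found after load: $img" >&2
      status=1
    fi
  done
  exit "$status"
)
```

For example, pipe the output of `kubeadm config images list --kubernetes-version=v1.19.16 --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers` into `load_images k8s.tar`.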

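Initialize the cluster

Run this on the master node. `--pod-network-cidr 10.244.0.0/16` matches Flannel's default network, and passing `--kubernetes-version` explicitly stops kubeadm from trying to look up the latest stable version online, so it uses the v1.19.16 images imported above.

```shell
sudo kubeadm init --pod-network-cidr 10.244.0.0/16 \
  --kubernetes-version v1.19.16 \
  --image-repository registry.cn-hangzhou.aliyuncs.com/google_containers
```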

When `kubeadm init` succeeds, record the `kubeadm join` command at the end of its output; the worker nodes need it to join the cluster:

```shell
kubeadm join 192.168.20.104:6443 --token 0mj488.h6v5r010bfhlq9b1 \
  --discovery-token-ca-cert-hash sha256:3ea2cc19ceb0f109834f82bde13f5d29c534aba115cd41f8d3719db6b8ec074b
```
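The bootstrap token in this command expires after 24 hours by default. If a worker joins later, a fresh join command can be generated on the master with `kubeadm token create --print-join-command`. The sketch below just stores it in a file readable only by its owner, since the command contains a credential; `save_join_command` and the default file name `join-command.sh` are hypothetical.

```shell
#!/usr/bin/env bash
# Hypothetical helper: generate a new join command on the master and save it to a file.
# Usage (on the master, as root): save_join_command [FILE]   # default: join-command.sh
save_join_command() {
  local out="${1:-join-command.sh}"
  # umask 077 inside a subshell: a newly created file is readable only by its owner,
  # and the caller's umask is left unchanged.
  ( umask 077 && kubeadm token create --print-join-command > "$out" )
}
```

Copy the file to the worker and run it there with `sudo sh join-command.sh`.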

Finally, run the following commands in order so that `kubectl` can use the cluster's admin credentials:

```shell
mkdir -p $HOME/.kube
sudo cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
sudo chown $(id -u):$(id -g) $HOME/.kube/config
```

Enable the Flannel network

Run:

```shell
kubectl apply -f ./kube-flannel.yml
```
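If the Flannel pods keep failing after this step, one thing worth checking is that the `Network` value in the manifest's `net-conf.json` section matches the `--pod-network-cidr` passed to `kubeadm init` (10.244.0.0/16 here). A sketch of that check; `flannel_network` is a hypothetical helper, and it assumes the `"Network"` key sits on its own line as in the upstream manifest.

```shell
#!/usr/bin/env bash
# Hypothetical helper: print the "Network" value from net-conf.json in a Flannel manifest.
# Usage: flannel_network kube-flannel.yml
flannel_network() {
  sed -nE 's/^[[:space:]]*"Network":[[:space:]]*"([^"]+)".*$/\1/p' "$1"
}
```

For example: `[ "$(flannel_network kube-flannel.yml)" = "10.244.0.0/16" ] && echo "matches --pod-network-cidr"`.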

After the manifest is applied, wait three to five minutes and run `kubectl get nodes` and `kubectl get pods --all-namespaces` again. The nodes should be `Ready` and the pods `Running`:

```
yance@yance-ub:~$ kubectl get pod -n kube-system
NAME                               READY   STATUS    RESTARTS   AGE
coredns-6c76c8bb89-vjghr           1/1     Running   0          46m
coredns-6c76c8bb89-zswv9           1/1     Running   0          46m
etcd-yance-ub                      1/1     Running   0          46m
kube-apiserver-yance-ub            1/1     Running   0          46m
kube-controller-manager-yance-ub   1/1     Running   0          46m
kube-flannel-ds-dlxgv              1/1     Running   0          23m
kube-proxy-nhdwj                   1/1     Running   0          46m
kube-scheduler-yance-ub            1/1     Running   0          46m
```
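Instead of waiting a fixed number of minutes, `kubectl wait` can block until the nodes and the system pods report Ready. A sketch; `wait_for_cluster` is a hypothetical helper and the 300-second default timeout is an arbitrary assumption.

```shell
#!/usr/bin/env bash
# Hypothetical helper: block until every node and every kube-system pod is Ready.
# Usage: wait_for_cluster [TIMEOUT]   # TIMEOUT defaults to 300s
wait_for_cluster() {
  local timeout="${1:-300s}"
  kubectl wait --for=condition=Ready node --all --timeout="$timeout" || return 1
  kubectl wait --for=condition=Ready pod --all -n kube-system --timeout="$timeout"
}
```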

Add worker nodes

On each worker node, execute:

```shell
kubeadm join 192.168.20.104:6443 --token 0mj488.h6v5r010bfhlq9b1 \
  --discovery-token-ca-cert-hash sha256:3ea2cc19ceb0f109834f82bde13f5d29c534aba115cd41f8d3719db6b8ec074b
```

Then, on the master node, label each worker (replace `node_name` with the node's actual name):

```shell
kubectl label node node_name node-role.kubernetes.io/worker=worker
```
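With several workers, the label can be applied to every node that is not the control plane. A sketch; `label_workers` is a hypothetical helper, and it assumes the control-plane node carries the `node-role.kubernetes.io/master` label that kubeadm 1.19 applies (newer releases use `node-role.kubernetes.io/control-plane`).

```shell
#!/usr/bin/env bash
# Hypothetical helper: add the worker role label to every node without the master label.
# Usage (on the master): label_workers
label_workers() {
  # "-o name" prints entries such as "node/worker01".
  kubectl get nodes -l '!node-role.kubernetes.io/master' -o name |
    while IFS= read -r node; do
      # Stop at the first node that cannot be labeled.
      kubectl label --overwrite "$node" node-role.kubernetes.io/worker=worker < /dev/null || exit 1
    done
}
```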

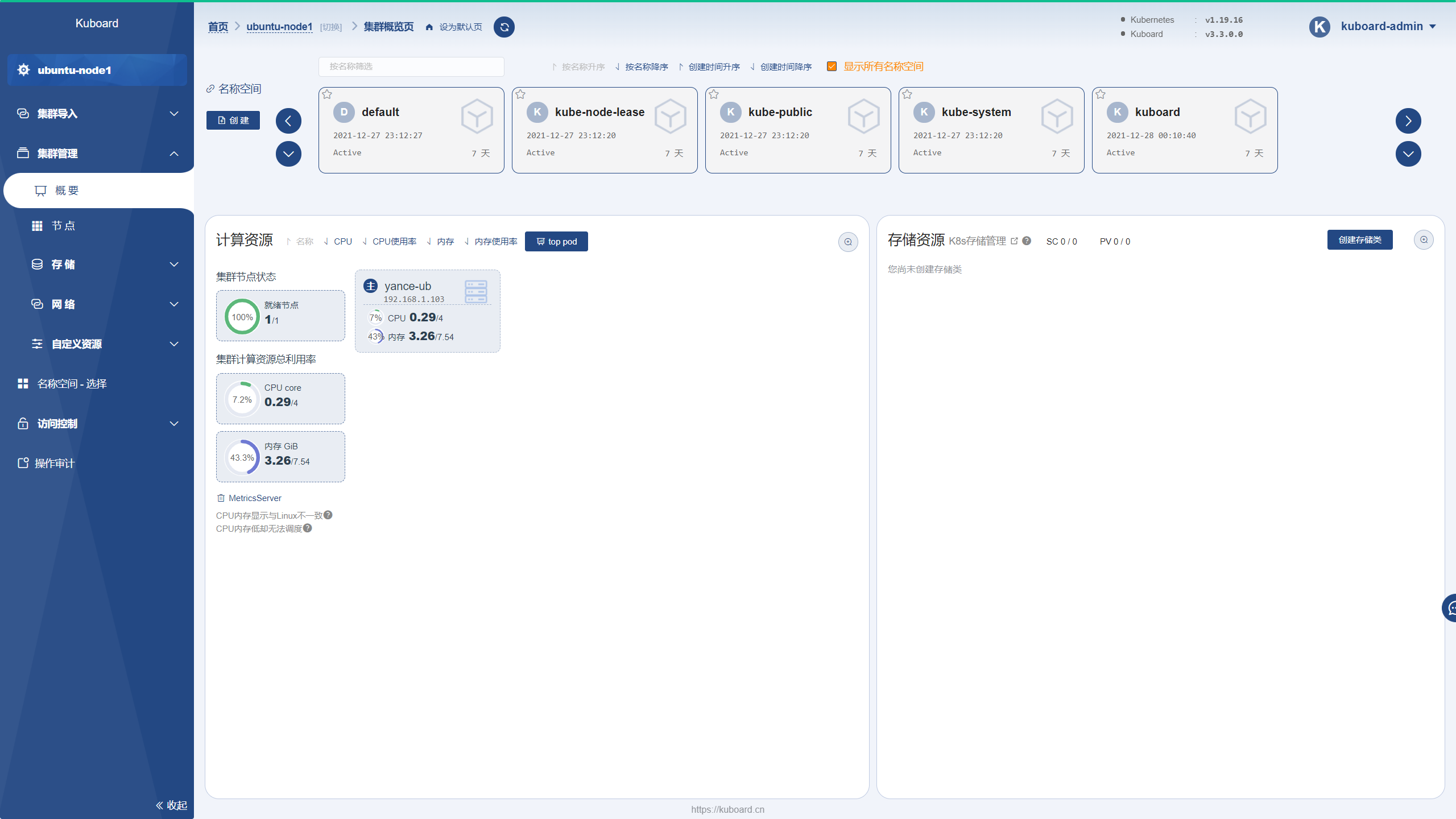

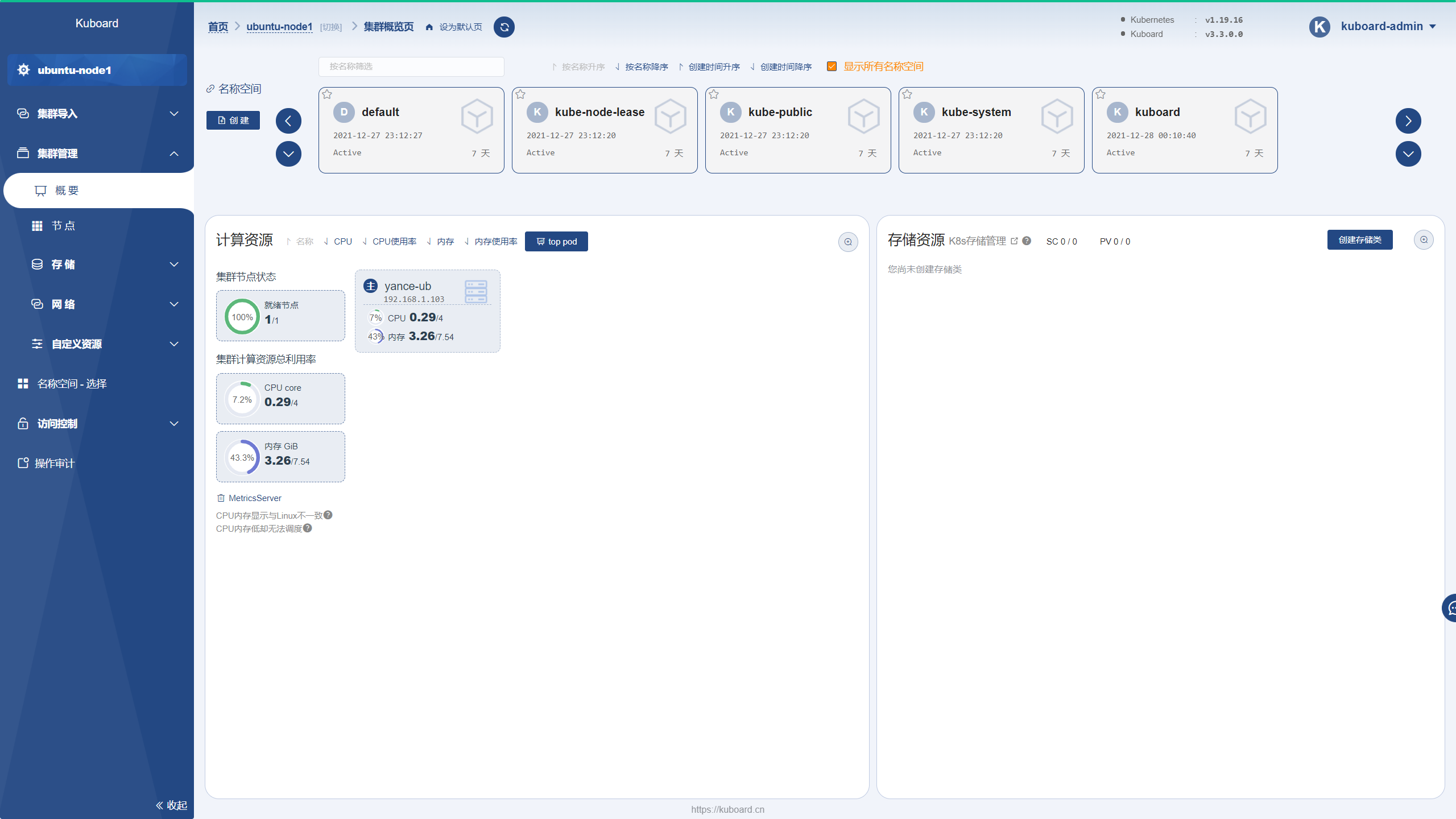

Install Kuboard v3.x

```shell
# Replace <intranet-ip> with this host's intranet IP address
sudo docker run -d \
  --restart=unless-stopped \
  --name=kuboard \
  -p 80:80/tcp \
  -p 10081:10081/tcp \
  -e KUBOARD_ENDPOINT="http://<intranet-ip>:80" \
  -e KUBOARD_AGENT_SERVER_TCP_PORT="10081" \
  -v /root/kuboard-data:/data \
  eipwork/kuboard:v3
```

Open http://your-host-ip:80 in a browser to reach the Kuboard v3.x interface, and log in with:

- Username: `admin`

- Password: `Kuboard123`
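The `docker run` command for Kuboard needs the host's intranet IP in `KUBOARD_ENDPOINT`. A sketch that derives it from `hostname -I`; `kuboard_endpoint` is a hypothetical helper, and taking the first address is an assumption: on hosts with several interfaces (for example a Docker bridge) the first address may not be the intranet one, so check the result.

```shell
#!/usr/bin/env bash
# Hypothetical helper: build a KUBOARD_ENDPOINT value from the host's first IP address.
# Usage: kuboard_endpoint [PORT]   # PORT defaults to 80
kuboard_endpoint() {
  local port="${1:-80}" ip
  # hostname -I prints all addresses of the host, separated by spaces.
  ip="$(hostname -I | awk '{print $1}')"
  if [ -z "$ip" ]; then
    echo "could not determine an IP address" >&2
    return 1
  fi
  echo "http://${ip}:${port}"
}
```

For example, pass `-e KUBOARD_ENDPOINT="$(kuboard_endpoint)"` to `docker run`.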