Python has become incredibly popular in recent years. The Python language is cheap to learn, writes like pseudo-code (even like English), is highly readable, and has many other obvious benefits. It is favored by DevOps, Data Science, and Web Development scenarios. But these reputations never include Python’s speed. Compared to other languages, whether JIT or AOT, Python is almost always the slowest. There are many aspects that contribute to Python’s performance problems, and this article tries to talk about them.

TL;DR

- Python has GIL

- Python is an “interpreted” language

- Python is a dynamically typed language

GIL

Modern computer processors generally have multiple cores, and some servers even have multiple processors. Therefore, the operating system abstracts Threads to spawn multiple Threads in a process, so that these Threads can run on multiple cores at the same time to maximize the efficiency of the processor. (You can see the number of threads in the system in the top command)

So it’s clear that programming in Threads to run in parallel can increase speed.

But Python (sometimes) doesn’t.

Python doesn’t require you to manually manage memory (C requires manual malloc/free), it comes with its own garbage collector. This means that you can request and set variables as you like, and the Python interpreter will automatically determine when the variable will no longer be used (e.g., if the function exits, the function’s internal variables will not be used), and then automatically release that memory. There are many ways to implement a garbage collection mechanism, and Python chooses reference counting + generational collection. Reference counting is dominant. The principle is that each object remembers how many other objects refer to itself, and when no one refers to itself, it is garbage.

But in the multi-threaded case, we all run together, reference counting multiple threads operating together, how to ensure that no thread insecurity will occur? Obviously multiple threads operating on the same object need to add locks.

This is GIL, except that the lock is so granular that only one Thread can run globally in the entire Python interpreter. See the dabeaz blog for more details.

Because other languages do not have GIL, many people have misconceptions about GIL. For example.

“Python can only run one thread at a time, so I can write multi-threaded programs without locks.” This is not true. “Only one thread at a time” means that the Python interpreter can only run one thread of bytecode at a time (Python code is compiled into bytecode for the Python virtual machine), at the opcode level. A line of Python code, such as a += 1, will actually compile multiple opcodes: first load parameter a, then a + 1, then save back to parameter a. If the load is done and not yet computed, then the thread switches and the other thread modifies the value of a, then switches back to continue performing the computation and storing a, then it will be thread-unsafe. So when multiple threads operate on a variable at the same time, you still need to add a lock.

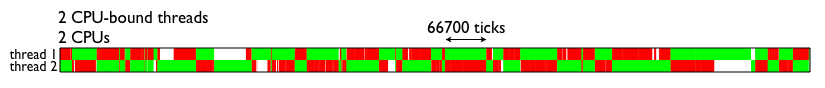

“Python can only run one thread at a time, so Python’s multithreading is pointless.” That’s not entirely true. If you want to use multithreading to take advantage of the performance of multiple cores, then Python really can’t. But if the CPU is not the bottleneck, and the network is, multithreading is still useful. The usual programming model is that one thread handles one network connection, and while only one thread is running, the other threads are waiting for the network connection and are not “idle”. In short, Python’s multithreading is really useless for CPU-intensive tasks (even slower than single-threaded because of the overhead of switching between multiple threads), but it can still speed up IO-intensive tasks.

It’s probably better to say that no matter how many cores your computer’s CPU has, Python uses only 1 core.

Pypy has GIL, but it can be 3x faster than CPython. Jython is based on the JVM, which doesn’t have GIL.

What about other languages? Java also uses reference counting, but its GC is multithread-aware, which is more complicated to implement (a friend told me that Java is no longer reference counting, so please pay attention to this place and attach a reference). JavaScript is a single-threaded asynchronous programming model, so it does not have this problem.

It is an interpreted language

Languages like C/C++/Rust are compiled directly into machine code and run as compiled languages; Python runs by a virtual machine reading in Python code (text), lexical analysis, compiling it into opcode that the virtual machine recognizes, and then the virtual machine interprets the opcode and executes it. But that’s not really the main reason; Python import caches the compiled opcode afterwards, (either the pyc file or the __pycache__ folder). So reading, lexical analysis and compiling doesn’t take much time.

So which step is really slow? It’s the later part where the virtual machine interprets the opcode execution. The front-end compilation is compiling Python code into intermediate code that the interpreter can understand, and the interpreter then translates the intermediate code into instructions that the CPU can understand. Compared to AOT (advance compiled languages, like C) compiling directly into machine code is definitely slower.

But why isn’t Java slower?

Because Java has JIT, a just-in-time compilation technique to split the code into frames, the AOT compiler is responsible for translating the intermediate code into CPU-understandable code at runtime. This part is not much different from Python’s interpreter, which still translates the intermediate code and executes it. The really fast part is that JIT can do optimizations at runtime. For example, if the VM finds a piece of code that is executing frequently (most of the time our programs are executing a piece of code over and over again), it will start optimizing and replace that piece of code with a changed version. This is an advantage that only virtual machine languages have, because of the runtime information that has to be collected. AOT compilers like gcc can only do some analysis based on static analysis.

Why doesn’t Python have a JIT?

The first is that JIT is expensive and complex to develop, C# also has a good JIT because Microsoft has money.

The second is that JIT is slow, Java and C# VMs start a lot, CPython is slow, Pypy has JIT, it’s 2x - 3x slower than CPython, and for long running programs, it’s okay to start slower, after all, the code gets faster and more profitable after a long run time. But CPython is a general-purpose virtual machine, like command-line programs, and the slow start-up experience is much worse.

The third is that Java and C# are statically typed virtual machines, and the compiler can make some assumptions. (I don’t know if this is right, because Lua also has a good JIT)

Dynamic typing

Statically typed languages like C, Java, and Go require that you declare variables with their types. Python doesn’t. Python decides for you what type a variable is, and you can change it at will.

Why is dynamic typing slow? The overhead of checking the type and changing it every time is too high; it’s hard to optimize with such dynamic types.

The benefits of dynamic typing are that it’s very simple and intuitive to write (maintenance is another matter); you can modify the behavior of objects at runtime, and Monkey Patch is very simple.

Recent languages are statically typed, such as Go and Rust, and static types are not only more friendly to compilers, but also better for programmers to maintain. Personally, I think the future belongs to static types.