I first learned about the slab algorithm when I was learning the Linux kernel. The kernel uses the Buddy System algorithm to manage memory pages. But for small objects, it would be wasteful to use a page allocator, so slab was born. The kernel’s kmalloc() is managed using slab. nginx’s shared memory management uses the same idea, but it is not as complex as slab in the Linux kernel.

slab principle

It basically works as follows.

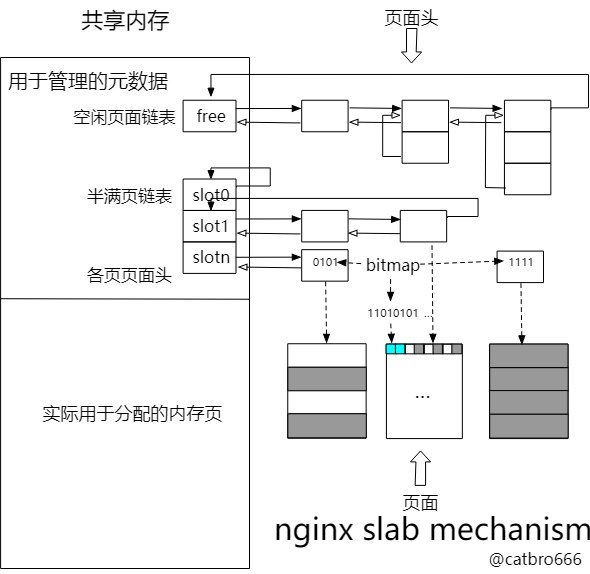

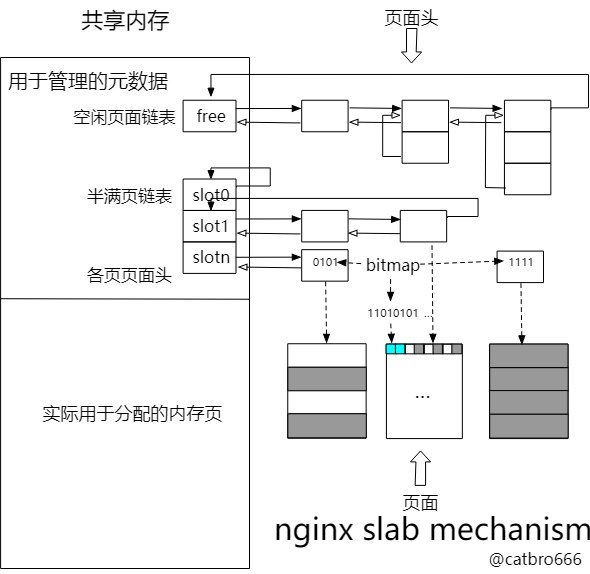

- The shared memory is first initialized with the top part being the metadata used for management and the remaining part divided by page. Each page has a corresponding page header in the preceding metadata, which is used to manage the page

- There is a free page block linkedlist in the metadata that manages all free page blocks (initially a complete contiguous page block)

- Objects are allocated with their length aligned to the power of 2, which is called a different slot in nginx

- A page that is assigned to a certain slot is used only for the allocation of objects of that length, e.g. a 4KB page can be sliced into 64 objects of 64B, or 32 objects of 128B.

- Each object of a slot has an unfilled page linkedlist that manages all the unfilled and not empty pages of that slot.

- slot objects are managed by bitmap, an object is allocated to the corresponding location 1, released set to 0, thus determining which memory blocks in the page is free

- If a page is full, it is removed from the unfull page chain; if a page becomes unfull (i.e., a full page releases an object), it is relisted to the unfull page chain

- If a slot object is allocated and the unfulfilled page table is found empty, then a free page is allocated from the free page block table and inserted into the unfulfilled page table of the slot after the object is allocated; if the page is empty after the object is released, it is returned to the free page block table

- When allocating and releasing pages, the free page block linkedlist has cut and merge operations

- large objects (more than half a page) are allocated directly from the free page block linkedlist, without going to slot

It is worth mentioning that slot is divided into 3 categories according to the size of the object. For large size objects, because the number of memory blocks that a page can be divided into is small, it is enough to use the slab field in the page header directly as a bitmap. For small size objects, because of the large number of slice blocks, it is necessary to use the first few blocks in the actual memory page as bitmap.

Main data structures

Note: The code that follows is based on Nginx-1.19.3

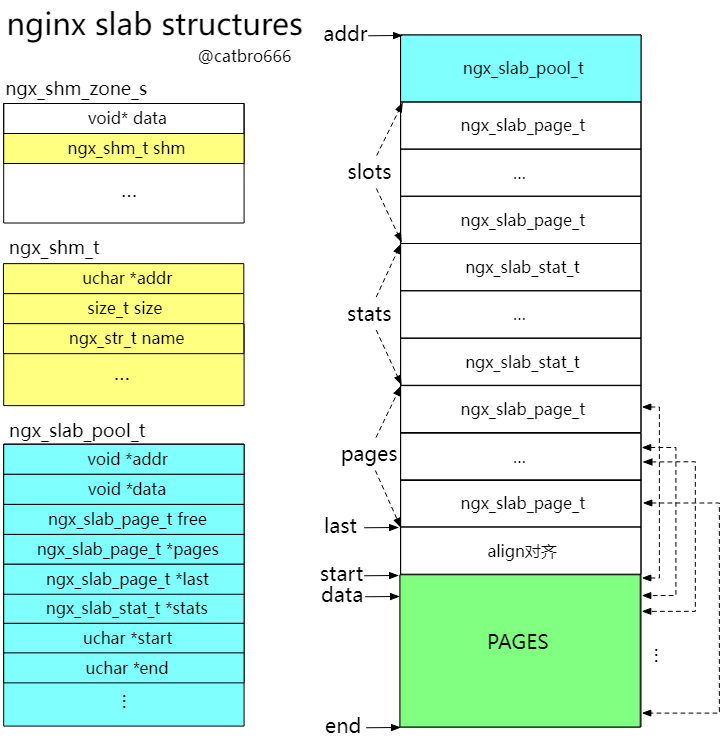

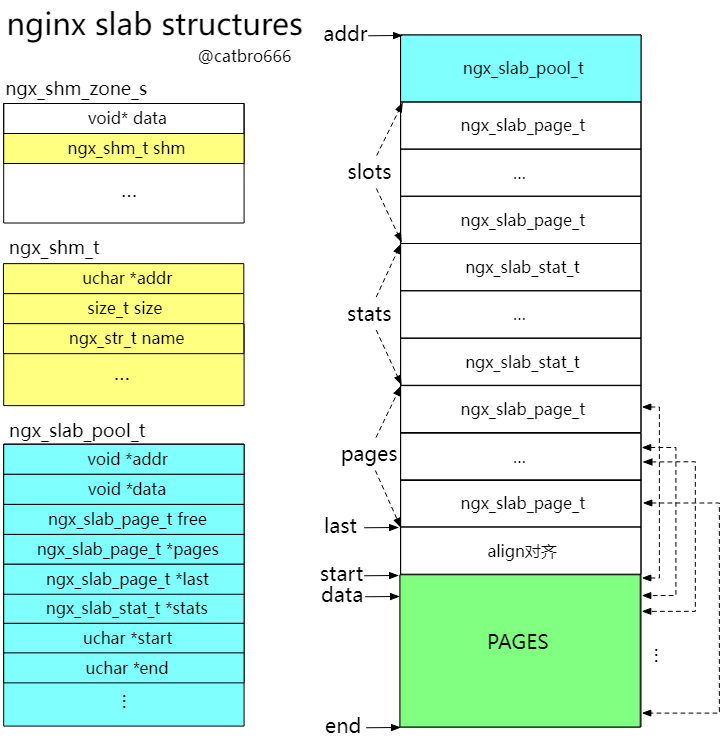

The following diagram gives the relationships between several major structures and the locations of the shared memory pointed to by several major pointers. From addr to last is the metadata used for management, and from start to end is the actual memory allocated. slots and stats are the half-full linkedlist headers and statistics for each slot, respectively. pages is an array of page headers that corresponds to the actual memory pages allocated later. The page header can be found by the page header, and its page header can also be found by the page address, and the page header is also continuous for consecutive page blocks.

ngx_shm_zone_t is the structure used to describe the shared memory, data is the private data pointer, init is the private initialization function, and tag is used to distinguish between different shared memories.

1

2

3

4

5

6

7

8

9

|

// ngx_shm_zone_t是共享内存的结构体,里面包含了shm,data是私有数据

struct ngx_shm_zone_s {

void *data;

ngx_shm_t shm;

ngx_shm_zone_init_pt init;

void *tag;

void *sync;

ngx_uint_t noreuse; /* unsigned noreuse:1; */

};

|

Where shm records specific information about the shared memory, addr is the shared memory start address, size is the size, and name name.

1

2

3

4

5

6

7

8

|

// shm里的addr指向实际mmap的共享内存

typedef struct {

u_char *addr;

size_t size;

ngx_str_t name;

ngx_log_t *log;

ngx_uint_t exists; /* unsigned exists:1; */

} ngx_shm_t;

|

ngx_slab_pool_t is used to manage the slab, which is located at the very beginning of the shared memory. min_size/min_shift records the size of the smallest slot and the corresponding bit offset, and free is the head of the free page block linkedlist already mentioned. pages/last/stats/start/end/data/ addr are pointers to different locations in the shared memory. addr are pointers to different locations in shared memory, see the following figure. lock and mutex are used to protect shared memory.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

|

// 共享内存最开头是ngx_slab_pool_t,它用于管理slab,后面会详细介绍它的字段

typedef struct {

ngx_shmtx_sh_t lock;

size_t min_size; // 分配内存最小尺寸,8

size_t min_shift;

ngx_slab_page_t *pages; // 指向页面头数组

ngx_slab_page_t *last; // 指向页面头数组末尾

ngx_slab_page_t free; // 空闲页面链表头

ngx_slab_stat_t *stats; // 指向统计数组

ngx_uint_t pfree; // 总空闲页面数

u_char *start; // 实际数据页面起始位置

u_char *end; // 共享内存结束位置

ngx_shmtx_t mutex;

u_char *log_ctx;

u_char zero;

unsigned log_nomem:1;

void *data; // 私有数据

void *addr;

} ngx_slab_pool_t;

|

ngx_slab_page_s is used to manage pages, which is what we called page header earlier, it acts as both a linkedlist node and records some information about the page. slab field has different uses in different cases, prev is used to indicate the page type in addition to being a pointer to the linkedlist, and the low bit is also used to indicate the page type.

1

2

3

4

5

6

|

// 页面头,用于管理页面

struct ngx_slab_page_s {

uintptr_t slab; // 对于空闲页面头节点,表示当前节点页块大小;空闲页面非头节点,为FREE;对于已分配页块,第一个页是pages | NGX_SLAB_PAGE_START,其余是NGX_SLAB_PAGE_BUSY;对于slab页面,小对象slab记录shift值,中对象slab用作bitmap,大对象slab高位用作bitmap,低位记录shift值

ngx_slab_page_t *next; // 不在链表中时,为NULL

uintptr_t prev; // 对于已经分配的页,低位还兼职表示页类型

};

|

ngx_slab_stat_t is used to count the information of slots.

1

2

3

4

5

6

7

8

|

// 用于统计slot信息

typedef struct {

ngx_uint_t total; // 总共有多少个对象

ngx_uint_t used; // 目前使用了多少个

ngx_uint_t reqs; // 请求该slot次数,累加计数器

ngx_uint_t fails; // 请求失败的次数,累加计数器

} ngx_slab_stat_t;

|

Initialization process

First start-up

The main function call relationships for shared initialization are as follows.

1

2

3

4

5

6

|

ngx_shared_memory_add() // 初始化ngx_shm_zone_t,并放到全局链表cf->cycle->shared_memory中

ngx_init_cycle()

ngx_shm_alloc(&shm_zone[i].shm) // 分配内存

ngx_init_zone_pool(cycle, &shm_zone[i]) // slab初始化

ngx_slab_init()

shm_zone[i].init(&shm_zone[i], oshm_zone[n].data) // 各共享内存私有的初始化函数,例如ngx_ssl_session_cache_init,主要负责初始化ngx_ssl_session_cache_t

|

- Add shm_zone to the linkedlist during the configuration processing phase

- Then allocate shared memory in init_cycle to initialize shm_zone

- ngx_slab_init() mainly initializes the ngx_slab_pool_t structure

- After completing the general-purpose initialization, the initialization of each shared memory private operation is performed

ngx_slab_init() is a common initialization operation for all shared memory, mainly initializing the fields in the ngx_slab_pool_t structure.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

|

void

ngx_slab_init(ngx_slab_pool_t *pool)

{

u_char *p;

size_t size;

ngx_int_t m;

ngx_uint_t i, n, pages;

ngx_slab_page_t *slots, *page;

pool->min_size = (size_t) 1 << pool->min_shift;

slots = ngx_slab_slots(pool);

p = (u_char *) slots;

size = pool->end - p;

ngx_slab_junk(p, size);

n = ngx_pagesize_shift - pool->min_shift; // slot的种类

for (i = 0; i < n; i++) {

/* only "next" is used in list head */

slots[i].slab = 0;

slots[i].next = &slots[i];

slots[i].prev = 0;

}

p += n * sizeof(ngx_slab_page_t);

pool->stats = (ngx_slab_stat_t *) p;

ngx_memzero(pool->stats, n * sizeof(ngx_slab_stat_t));

p += n * sizeof(ngx_slab_stat_t);

size -= n * (sizeof(ngx_slab_page_t) + sizeof(ngx_slab_stat_t));

pages = (ngx_uint_t) (size / (ngx_pagesize + sizeof(ngx_slab_page_t))); // 计算页数

pool->pages = (ngx_slab_page_t *) p; // 指向页面头数组

ngx_memzero(pool->pages, pages * sizeof(ngx_slab_page_t));

page = pool->pages;

/* only "next" is used in list head */

pool->free.slab = 0;

pool->free.next = page;

pool->free.prev = 0;

// 初始只有一个大的块

page->slab = pages; // 页块的页数

page->next = &pool->free; // 指向空闲链表的哨兵

page->prev = (uintptr_t) &pool->free;// 指向空闲链表的哨兵

pool->start = ngx_align_ptr(p + pages * sizeof(ngx_slab_page_t),

ngx_pagesize); // 实际数据页面开始位置

m = pages - (pool->end - pool->start) / ngx_pagesize;

if (m > 0) {

pages -= m;

page->slab = pages;

}

pool->last = pool->pages + pages; // 页面头数组的末尾

pool->pfree = pages; // 总空闲页面数

pool->log_nomem = 1;

pool->log_ctx = &pool->zero;

pool->zero = '\0';

}

|

Next shm_zone[i].init() is the initialization of each shared memory private, which generally initializes the private data and then assigns the pointer to the private data data field of ngx_shm_zone_t and ngx_slab_pool_t.

reload

By default, reloads reuse the previous shared memory unless noreuse in ngx_shm_zone_s is set to 1, but this field is currently written dead in code and not open as configuration. So reloads generally just execute the following function.

1

2

|

ngx_shared_memory_add()

shm_zone[i].init(&shm_zone[i], oshm_zone[n].data)

|

But there is one case where reload will also regenerate shared memory, and that is when the size has changed.

Allocation Logic

The allocation logic and the release logic are the core of slab, you need to read the next 4 functions carefully to fully understand them.

Allocating pages

The page allocation logic is relatively simple, the remaining free pages are a linkedlist, pool->free is the header node and is used as a sentry. Iterate through the chain to find a large enough free page block, if the page block is exactly equal to the requested size, then remove it from the chain, if the page block is still remaining, insert the first page header of the remaining part into the chain, if the remaining block is larger than 1 page, then point the prev of the page header of the last page to the position of its first page header (needed when merging page blocks).

For the allocated page block, the first page header records the page block header flag and records the page block size, and the subsequent page headers record the BUSY flag. the last two digits of prev indicate the page type, and NGX_SLAB_PAGE indicates that it is a whole page allocation.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

|

static ngx_slab_page_t *

ngx_slab_alloc_pages(ngx_slab_pool_t *pool, ngx_uint_t pages)

{

ngx_slab_page_t *page, *p;

for (page = pool->free.next; page != &pool->free; page = page->next) {

if (page->slab >= pages) {

if (page->slab > pages) { // 有剩余的情况

page[page->slab - 1].prev = (uintptr_t) &page[pages]; // 这一步是合并时候用的,找到它的块头

// 剩余部分仍然插入链表中

page[pages].slab = page->slab - pages;

page[pages].next = page->next;

page[pages].prev = page->prev;

p = (ngx_slab_page_t *) page->prev;

p->next = &page[pages];

page->next->prev = (uintptr_t) &page[pages];

} else { // 正好的情况

p = (ngx_slab_page_t *) page->prev; // 将节点从链表移除

p->next = page->next;

page->next->prev = page->prev;

}

// 第一个页记录页数

page->slab = pages | NGX_SLAB_PAGE_START;

page->next = NULL;

page->prev = NGX_SLAB_PAGE;

pool->pfree -= pages; // 更新剩余空闲页数

if (--pages == 0) {

return page;

}

// 其余页设为BUSY

for (p = page + 1; pages; pages--) {

p->slab = NGX_SLAB_PAGE_BUSY;

p->next = NULL;

p->prev = NGX_SLAB_PAGE;

p++;

}

return page;

}

}

if (pool->log_nomem) {

ngx_slab_error(pool, NGX_LOG_CRIT,

"ngx_slab_alloc() failed: no memory");

}

return NULL;

}

|

Assigning objects

Small objects are divided into 3 categories based on size, namely NGX_SLAB_BIG , NGX_SLAB_EXACT and NGX_SLAB_SMALL in the code. The reason for splitting into three categories is to save the memory overhead of management. For EXACT, the slab field in the page header can be used as the bitmap of a page. SMALL uses the top memory block of the page as the bitamp, and the slab is used to record the shift value of the page (i.e., which slot it belongs to). For big ones, because the number of memory blocks is small, the high bit of the slab field is used as a bitmap, and the low bit is used to record the shift value.

For very large objects directly allocate the page, otherwise traverse the corresponding slot half full linkedlist to get a free memory block, find the corresponding bit position bit, if the linkedlist is empty then allocate a new page first, and then insert it into the slot half full linkedlist. If the page is full after allocating an object, it is removed from the half-full linkedlist.

ngx_slab_alloc_locked is one of the most core functions, if you are interested, you can see how it operates the bitmap and the link table. The code is basically commented out, so I won’t go over it again here.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

|

void *

ngx_slab_alloc_locked(ngx_slab_pool_t *pool, size_t size)

{

size_t s;

uintptr_t p, m, mask, *bitmap;

ngx_uint_t i, n, slot, shift, map;

ngx_slab_page_t *page, *prev, *slots;

// 超大对象直接分配页

if (size > ngx_slab_max_size) {

ngx_log_debug1(NGX_LOG_DEBUG_ALLOC, ngx_cycle->log, 0,

"slab alloc: %uz", size);

page = ngx_slab_alloc_pages(pool, (size >> ngx_pagesize_shift)

+ ((size % ngx_pagesize) ? 1 : 0));

if (page) {

p = ngx_slab_page_addr(pool, page);

} else {

p = 0;

}

goto done;

}

// 小对象选择对应slot

if (size > pool->min_size) {

shift = 1;

for (s = size - 1; s >>= 1; shift++) { /* void */ }

slot = shift - pool->min_shift;

} else {

shift = pool->min_shift;

slot = 0;

}

// 对应slot请求+1

pool->stats[slot].reqs++;

ngx_log_debug2(NGX_LOG_DEBUG_ALLOC, ngx_cycle->log, 0,

"slab alloc: %uz slot: %ui", size, slot);

slots = ngx_slab_slots(pool);

page = slots[slot].next; // slot的链表

if (page->next != page) { // 链表为空,跳到后面分配新的1页

if (shift < ngx_slab_exact_shift) { // 需要使用页面开头部分空间作为bitmap

bitmap = (uintptr_t *) ngx_slab_page_addr(pool, page); // 页面开头是bitmap

map = (ngx_pagesize >> shift) / (8 * sizeof(uintptr_t)); // 几个uintptr_t大小的map

for (n = 0; n < map; n++) { // 遍历寻找一个空闲的对象

if (bitmap[n] != NGX_SLAB_BUSY) { // 当前bitmap块没有全置位

for (m = 1, i = 0; m; m <<= 1, i++) {

if (bitmap[n] & m) {

continue;

}

// 第n个map的第i位(从右往左数)

bitmap[n] |= m;

// 总共第几位 * 对象大小 = 对象地址关于页地址的偏移

i = (n * 8 * sizeof(uintptr_t) + i) << shift;

p = (uintptr_t) bitmap + i; // p为对象地址

pool->stats[slot].used++; // 正在使用的+1

// 如果当前bitmap满了,检查后面的是否都满了

if (bitmap[n] == NGX_SLAB_BUSY) {

for (n = n + 1; n < map; n++) {

if (bitmap[n] != NGX_SLAB_BUSY) {

goto done;

}

}

// 如果整个页都用完了,将其从slot链表中移除

prev = ngx_slab_page_prev(page);

prev->next = page->next;

page->next->prev = page->prev;

page->next = NULL;

page->prev = NGX_SLAB_SMALL;

}

goto done;

}

}

}

} else if (shift == ngx_slab_exact_shift) { // 页面头中的slab正好作为bimap

for (m = 1, i = 0; m; m <<= 1, i++) {

if (page->slab & m) {

continue;

}

page->slab |= m; // 置位

// 该页如果满了,移出slot链表

if (page->slab == NGX_SLAB_BUSY) {

prev = ngx_slab_page_prev(page);

prev->next = page->next;

page->next->prev = page->prev;

page->next = NULL;

page->prev = NGX_SLAB_EXACT;

}

// 获取对象地址

p = ngx_slab_page_addr(pool, page) + (i << shift);

pool->stats[slot].used++;

goto done;

}

} else { /* shift > ngx_slab_exact_shift */

// 111...11,1页对象个数个1

mask = ((uintptr_t) 1 << (ngx_pagesize >> shift)) - 1;

mask <<= NGX_SLAB_MAP_SHIFT; // 移到高位上,为啥? 因为低位还要记录shift。为啥需要记录shift,因为在释放对象的时候需要知道大小

for (m = (uintptr_t) 1 << NGX_SLAB_MAP_SHIFT, i = 0;

m & mask;

m <<= 1, i++)

{

if (page->slab & m) {

continue;

}

page->slab |= m; // 置位

// 该页如果满了,移出slot链表

if ((page->slab & NGX_SLAB_MAP_MASK) == mask) {

prev = ngx_slab_page_prev(page);

prev->next = page->next;

page->next->prev = page->prev;

page->next = NULL;

page->prev = NGX_SLAB_BIG;

}

p = ngx_slab_page_addr(pool, page) + (i << shift);

pool->stats[slot].used++;

goto done;

}

}

ngx_slab_error(pool, NGX_LOG_ALERT, "ngx_slab_alloc(): page is busy");

ngx_debug_point();

}

// 分配新页

page = ngx_slab_alloc_pages(pool, 1);

if (page) {

if (shift < ngx_slab_exact_shift) {

bitmap = (uintptr_t *) ngx_slab_page_addr(pool, page);

// 计算需要开头几个对象作为bitmap: n = 1页对象个数 / 1个对象位数

n = (ngx_pagesize >> shift) / ((1 << shift) * 8);

// 不满1个对象,使用1对象

if (n == 0) {

n = 1;

}

// 接下来初始化bitmap,将bitmap本身占用的对象置1,将申请的目标对象置1

/* "n" elements for bitmap, plus one requested */

for (i = 0; i < (n + 1) / (8 * sizeof(uintptr_t)); i++) {

bitmap[i] = NGX_SLAB_BUSY;

}

// 不满一个bitmap的部分置1

m = ((uintptr_t) 1 << ((n + 1) % (8 * sizeof(uintptr_t)))) - 1;

bitmap[i] = m;

// 剩余bitmap置0

map = (ngx_pagesize >> shift) / (8 * sizeof(uintptr_t));

for (i = i + 1; i < map; i++) {

bitmap[i] = 0;

}

page->slab = shift; // slab记录shift

page->next = &slots[slot]; // 插入slot链表头

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_SMALL;

slots[slot].next = page;

// 增加该slot总对象数(不包括bitmap本身占用的对象)

pool->stats[slot].total += (ngx_pagesize >> shift) - n;

p = ngx_slab_page_addr(pool, page) + (n << shift);

pool->stats[slot].used++;

goto done;

} else if (shift == ngx_slab_exact_shift) {

page->slab = 1; // 申请的对象置位

// 插入slot链表头

page->next = &slots[slot];

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_EXACT;

slots[slot].next = page;

// 增加该slot总对象数

pool->stats[slot].total += 8 * sizeof(uintptr_t);

p = ngx_slab_page_addr(pool, page);

pool->stats[slot].used++;

goto done;

} else { /* shift > ngx_slab_exact_shift */

// 高位申请的对象置位 + 低位记录shift

page->slab = ((uintptr_t) 1 << NGX_SLAB_MAP_SHIFT) | shift;

page->next = &slots[slot];

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_BIG;

slots[slot].next = page;

pool->stats[slot].total += ngx_pagesize >> shift;

p = ngx_slab_page_addr(pool, page);

pool->stats[slot].used++;

goto done;

}

}

p = 0;

pool->stats[slot].fails++; // 分配对象失败次数

done:

ngx_log_debug1(NGX_LOG_DEBUG_ALLOC, ngx_cycle->log, 0,

"slab alloc: %p", (void *) p);

return (void *) p;

}

|

Logic for releasing

Release pages

If it is releasing multiple pages, zero out all subsequent page headers. If it is a slot page, remove it from the slot linkedlist. Then try to merge the pages before and after, thus forming a larger contiguous page. After the merge is complete, insert it into the free page block linkedlist.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

|

static void

ngx_slab_free_pages(ngx_slab_pool_t *pool, ngx_slab_page_t *page,

ngx_uint_t pages)

{

ngx_slab_page_t *prev, *join;

pool->pfree += pages;

page->slab = pages--;

// 第二页开始的页面头都清零

if (pages) {

ngx_memzero(&page[1], pages * sizeof(ngx_slab_page_t));

}

// next不都等于NULL么? 不等于NULL表示是slot页,将其从slot链表中移除

if (page->next) {

prev = ngx_slab_page_prev(page);

prev->next = page->next;

page->next->prev = page->prev;

}

// 尝试合并后面的页面

join = page + page->slab;

if (join < pool->last) { // 检查后面是否还有页

if (ngx_slab_page_type(join) == NGX_SLAB_PAGE) { // 是整页

if (join->next != NULL) { // 在空闲页链表中

pages += join->slab; // 加上合并块的页数

page->slab += join->slab; // 增加页块大小

// 将join块从空闲链表中移除

prev = ngx_slab_page_prev(join);

prev->next = join->next;

join->next->prev = join->prev;

// 清理页块头,恢复指针和标志

join->slab = NGX_SLAB_PAGE_FREE;

join->next = NULL;

join->prev = NGX_SLAB_PAGE;

}

}

}

// 尝试合并前面的页

if (page > pool->pages) { // 前面还有页

join = page - 1; // join指向被释放页的前一页

if (ngx_slab_page_type(join) == NGX_SLAB_PAGE) { // 是整页

if (join->slab == NGX_SLAB_PAGE_FREE) { // 不是页块头

join = ngx_slab_page_prev(join); // join指向页块头

}

if (join->next != NULL) { // 在空闲链表中

pages += join->slab; // 加上合并块的页数

join->slab += page->slab; // 增加页块大小

// 将join块从空闲链表中移除

prev = ngx_slab_page_prev(join);

prev->next = join->next;

join->next->prev = join->prev;

// 清理页块头,恢复指针和标志

page->slab = NGX_SLAB_PAGE_FREE;

page->next = NULL;

page->prev = NGX_SLAB_PAGE;

page = join; // 更新页块头

}

}

}

// 如果页块大于1页,将最后一页的prev指向页块头,合并时用

if (pages) {

page[pages].prev = (uintptr_t) page;

}

// 将页块插入空闲页链表头

page->prev = (uintptr_t) &pool->free;

page->next = pool->free.next;

page->next->prev = (uintptr_t) page;

pool->free.next = page;

}

|

Release the object

First get the corresponding page header and the corresponding page information according to the object pointer, and then do different processing according to the page type. For the slot object, the corresponding bit bit is reset. If the page is full before releasing the object, the page is reinserted into the slot half-full chain. If the page is empty after releasing the object, the page is released.

If you already understand the allocation logic, then the release logic should be well understood.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

|

void

ngx_slab_free_locked(ngx_slab_pool_t *pool, void *p)

{

size_t size;

uintptr_t slab, m, *bitmap;

ngx_uint_t i, n, type, slot, shift, map;

ngx_slab_page_t *slots, *page;

ngx_log_debug1(NGX_LOG_DEBUG_ALLOC, ngx_cycle->log, 0, "slab free: %p", p);

if ((u_char *) p < pool->start || (u_char *) p > pool->end) {

ngx_slab_error(pool, NGX_LOG_ALERT, "ngx_slab_free(): outside of pool");

goto fail;

}

// 计算释放的指针在第几页

n = ((u_char *) p - pool->start) >> ngx_pagesize_shift;

page = &pool->pages[n]; // 获取对应页面头

slab = page->slab;

type = ngx_slab_page_type(page);

switch (type) {

case NGX_SLAB_SMALL:

shift = slab & NGX_SLAB_SHIFT_MASK;

size = (size_t) 1 << shift;

// 检查指针是否对象对齐

if ((uintptr_t) p & (size - 1)) {

goto wrong_chunk;

}

// 第几个对象: n = 页中的偏移 / 对象大小

n = ((uintptr_t) p & (ngx_pagesize - 1)) >> shift;

m = (uintptr_t) 1 << (n % (8 * sizeof(uintptr_t)));

n /= 8 * sizeof(uintptr_t);

bitmap = (uintptr_t *)

((uintptr_t) p & ~((uintptr_t) ngx_pagesize - 1)); // 页起始地址,获取bitmap

// 第n个bitmap的m位

if (bitmap[n] & m) {

slot = shift - pool->min_shift;

// 如果当前页不在slot链表中(即释放前是满的),重新加回链表

if (page->next == NULL) {

slots = ngx_slab_slots(pool);

page->next = slots[slot].next;

slots[slot].next = page;

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_SMALL;

page->next->prev = (uintptr_t) page | NGX_SLAB_SMALL;

}

// 清bit位

bitmap[n] &= ~m;

// 计算需要开头几个对象作为bitmap: n = 1页对象个数 / 1个对象位数

n = (ngx_pagesize >> shift) / ((1 << shift) * 8);

if (n == 0) {

n = 1;

}

// 接下来检查除了bitmap本身占用的对象外,是否还有其他对象

// 已经被bitmap占满的uintptr_t不用检查,对于bitmap对象和实际数据对象混用的uintptr_t需要unmask掉bitmap的部分

i = n / (8 * sizeof(uintptr_t));

m = ((uintptr_t) 1 << (n % (8 * sizeof(uintptr_t)))) - 1;

if (bitmap[i] & ~m) {

goto done;

}

// map = 多少个uintptr_t大小的map

map = (ngx_pagesize >> shift) / (8 * sizeof(uintptr_t));

for (i = i + 1; i < map; i++) {

if (bitmap[i]) {

goto done;

}

}

// 如果空了,就释放该页

ngx_slab_free_pages(pool, page, 1);

// 减去slot的对象个数

pool->stats[slot].total -= (ngx_pagesize >> shift) - n;

goto done;

}

goto chunk_already_free;

case NGX_SLAB_EXACT:

m = (uintptr_t) 1 <<

(((uintptr_t) p & (ngx_pagesize - 1)) >> ngx_slab_exact_shift); // 目标对象对应的位

size = ngx_slab_exact_size;

// 检查对齐

if ((uintptr_t) p & (size - 1)) {

goto wrong_chunk;

}

if (slab & m) {

slot = ngx_slab_exact_shift - pool->min_shift;

if (slab == NGX_SLAB_BUSY) { // bitmap是满的,重新加入链表

slots = ngx_slab_slots(pool);

page->next = slots[slot].next;

slots[slot].next = page;

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_EXACT;

page->next->prev = (uintptr_t) page | NGX_SLAB_EXACT;

}

page->slab &= ~m; // 清bit位

// 如果空了,就释放该页

if (page->slab) {

goto done;

}

ngx_slab_free_pages(pool, page, 1);

pool->stats[slot].total -= 8 * sizeof(uintptr_t);

goto done;

}

goto chunk_already_free;

case NGX_SLAB_BIG:

shift = slab & NGX_SLAB_SHIFT_MASK;

size = (size_t) 1 << shift;

if ((uintptr_t) p & (size - 1)) {

goto wrong_chunk;

}

// 目标对象对应的位

m = (uintptr_t) 1 << ((((uintptr_t) p & (ngx_pagesize - 1)) >> shift)

+ NGX_SLAB_MAP_SHIFT);

if (slab & m) {

slot = shift - pool->min_shift;

// 如果当前页不在slot链表中(即释放前是满的),重新加回链表

if (page->next == NULL) {

slots = ngx_slab_slots(pool);

page->next = slots[slot].next;

slots[slot].next = page;

page->prev = (uintptr_t) &slots[slot] | NGX_SLAB_BIG;

page->next->prev = (uintptr_t) page | NGX_SLAB_BIG;

}

page->slab &= ~m;

// 如果空了,就释放该页

if (page->slab & NGX_SLAB_MAP_MASK) {

goto done;

}

ngx_slab_free_pages(pool, page, 1);

pool->stats[slot].total -= ngx_pagesize >> shift;

goto done;

}

goto chunk_already_free;

case NGX_SLAB_PAGE:

if ((uintptr_t) p & (ngx_pagesize - 1)) {

goto wrong_chunk;

}

if (!(slab & NGX_SLAB_PAGE_START)) {

ngx_slab_error(pool, NGX_LOG_ALERT,

"ngx_slab_free(): page is already free");

goto fail;

}

if (slab == NGX_SLAB_PAGE_BUSY) {

ngx_slab_error(pool, NGX_LOG_ALERT,

"ngx_slab_free(): pointer to wrong page");

goto fail;

}

size = slab & ~NGX_SLAB_PAGE_START;

ngx_slab_free_pages(pool, page, size);

ngx_slab_junk(p, size << ngx_pagesize_shift);

return;

}

/* not reached */

return;

done:

pool->stats[slot].used--; // 对象使用数减1

ngx_slab_junk(p, size);

return;

wrong_chunk:

ngx_slab_error(pool, NGX_LOG_ALERT,

"ngx_slab_free(): pointer to wrong chunk");

goto fail;

chunk_already_free:

ngx_slab_error(pool, NGX_LOG_ALERT,

"ngx_slab_free(): chunk is already free");

fail:

return;

}

|

Shared Memory Destruction

We talked about initialization, allocation logic, and release logic earlier, so we’ll talk about destruction logic here in order not to lose completeness.

I searched the whole code and found no place to release the shared memory, only when cycle_init() fails, munmap() will release the newly created shared memory, or reload will release the replaced shared memory.

So I guess the release is done automatically when the process exits. So how do we verify this? Let’s write a simple test program.

1

2

3

4

5

6

7

8

9

10

11

12

|

#include <stdio.h>

#include <sys/mman.h>

int main()

{

char *addr = (char *)0x7f1000000000;

size_t size = 8192;

char *p = mmap(addr, size, PROT_READ|PROT_WRITE,

MAP_SHARED|MAP_ANONYMOUS, -1, 0);

printf("addr p = %p, size = %zu\n", p, size);

return 0;

}

|

For testing convenience, a suggested address is specified in the parameters. The output of running the program is as follows.

1

2

|

$ ./a.out

addr p = 0x7f1000000000, size = 8192

|

Then, we write a systemtap script that prints parameter information and call stack information.

1

2

3

4

5

6

7

8

|

probe kernel.function("unmap_page_range") {

if ($addr != 0x7f1000000000)

next;

printf("--------\n");

printf("addr: 0x%lx, size: %lu\n", $addr, $end-$addr);

print_backtrace();

printf("--------\n");

}

|

We run the stap script, and then execute . /a.out The result is as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

--------

addr: 0x7f1000000000, size: 8192

0xffffffff8bc168e0 : unmap_page_range+0x0/0xd00 [kernel]

0xffffffff8bc1765d : unmap_single_vma+0x7d/0xf0 [kernel]

0xffffffff8bc179a1 : unmap_vmas+0x51/0xb0 [kernel]

0xffffffff8bc21535 : exit_mmap+0xb5/0x1d0 [kernel]

0xffffffff8ba8b5e7 : mmput+0x57/0x140 [kernel]

0xffffffff8ba93b22 : do_exit+0x352/0xb90 [kernel]

0xffffffff8ba943e3 : do_group_exit+0x43/0xb0 [kernel]

0xffffffff8ba94464 : SyS_exit_group+0x14/0x20 [kernel]

0xffffffff8ba03a43 : do_syscall_64+0x73/0x130 [kernel]

0xffffffff8c400085 : entry_SYSCALL_64_after_hwframe+0x41/0xa6 [kernel]

0xffffffff8c400085 : entry_SYSCALL_64_after_hwframe+0x41/0xa6 [kernel]

--------

|

You can see that the memory we allocated with mmap is automatically unmaped when the process exits.

Statistics and monitoring

The ngx_slab_stat module can be used to view slab statistics, and it looks like this.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

^_^$ curl http://127.0.0.1:50090/slab

* shared memory: SSL

total: 64(KB) free: 20(KB) size: 4(KB)

pages: 20(KB) start:00007FA1F9D8C000 end:00007FA1F9D9B000

slot: 8(Bytes) total: 504 used: 1 reqs: 1 fails: 0

slot: 16(Bytes) total: 254 used: 1 reqs: 1 fails: 0

slot: 32(Bytes) total: 127 used: 1 reqs: 1 fails: 0

slot: 64(Bytes) total: 64 used: 2 reqs: 2 fails: 0

slot: 128(Bytes) total: 32 used: 12 reqs: 15 fails: 0

slot: 256(Bytes) total: 16 used: 2 reqs: 2 fails: 0

slot: 512(Bytes) total: 8 used: 1 reqs: 1 fails: 0

slot: 1024(Bytes) total: 4 used: 1 reqs: 1 fails: 0

slot: 2048(Bytes) total: 2 used: 2 reqs: 5 fails: 0

|

The main thing is to read those statistics from the ngx_slab_stat_t structure. Of course it needs to be added to the module at compile time, and then the appropriate configuration items added to the access interface to get it. If this is not acceptable, it is possible to implement similar functionality non-invasively using systemtap.

ssl session cache

A final mention of ssl session cache, which is an example of actual use of shared memory and why I am writing this blog. ngx_ssl_session_cache_init is its private initialization function, which mainly initializes the ngx_ssl_session_cache_t structure, including the initialization of the red-black tree and the queue. The red-black tree is used for session cache lookup, and the queue is used for expiry elimination. Then the pointers are assigned to the private data data fields of ngx_shm_zone_t and ngx_slab_pool_t.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

|

ngx_int_t

ngx_ssl_session_cache_init(ngx_shm_zone_t *shm_zone, void *data)

{

size_t len;

ngx_slab_pool_t *shpool;

ngx_ssl_session_cache_t *cache;

if (data) {

shm_zone->data = data;

return NGX_OK;

}

shpool = (ngx_slab_pool_t *) shm_zone->shm.addr;

if (shm_zone->shm.exists) {

shm_zone->data = shpool->data;

return NGX_OK;

}

cache = ngx_slab_alloc(shpool, sizeof(ngx_ssl_session_cache_t));

if (cache == NULL) {

return NGX_ERROR;

}

shpool->data = cache;

shm_zone->data = cache;

ngx_rbtree_init(&cache->session_rbtree, &cache->sentinel,

ngx_ssl_session_rbtree_insert_value);

ngx_queue_init(&cache->expire_queue);

len = sizeof(" in SSL session shared cache \"\"") + shm_zone->shm.name.len;

shpool->log_ctx = ngx_slab_alloc(shpool, len);

if (shpool->log_ctx == NULL) {

return NGX_ERROR;

}

ngx_sprintf(shpool->log_ctx, " in SSL session shared cache \"%V\"%Z",

&shm_zone->shm.name);

shpool->log_nomem = 0;

return NGX_OK;

}

|

ngx_ssl_new_session() is used to create a new session cache, each creation will first try to eliminate up to 2 expired sessions, so as to ensure that the cache will not pile up, but also inert elimination will not let the event take too long. Each time the application fails, there is a chance to force the elimination. Because of the underlying slab mechanism, no matter how many expired sessions are eliminated, there is no guarantee that the new session will be applied successfully. Unless the eliminated object and the newly allocated belong to the same slot, or the eliminated object happens to be empty after a page.

Because ssl session cache originally belongs to a kind of optimization, so optimization failure is also normal. If you are more concerned about this, then when forced elimination occurs, it already means that the shared memory space is not enough and you need to increase the cache capacity or reduce the timeout accordingly. The cache capacity and timeout should satisfy the following relationship.

1

|

Cache capacity > ∑ [( TPS / timeout time ) * individual size]

|