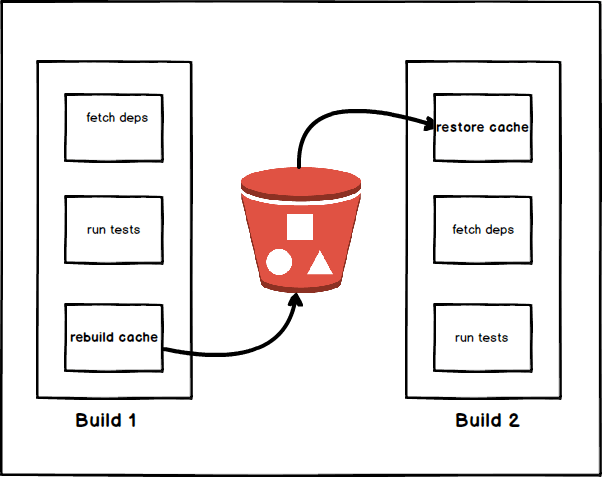

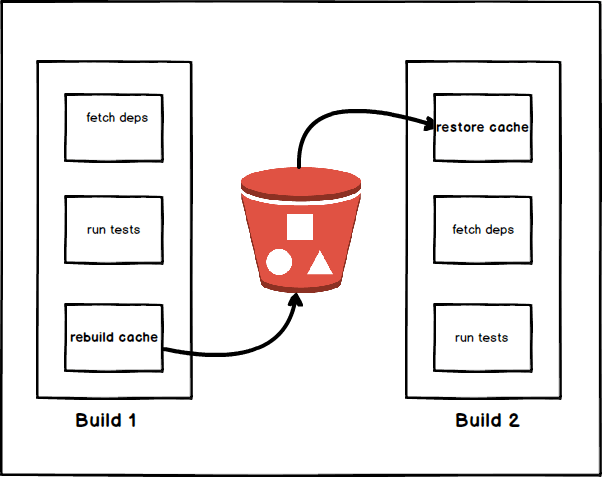

In build scenarios that are not multi-stage, when containers are used for builds, we can mount a cache directory from the build host into the build container to run the build task, then copy the build output into the runtime image to make the application image. In a multi-stage build, however, the build stage and the runtime stage are in the same Dockerfile, which makes it difficult to optimize the cache for third-party dependencies.
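For the case that is not multi-stage, a minimal sketch of mounting a host cache directory into the build container might look like the following. The host path $HOME/.npm, the /app working directory, the function name, and the DRY_RUN switch are assumptions made for illustration; they do not come from the original setup.

```shell
#!/bin/sh
# Sketch: run the build in a container with the host's npm cache mounted.
# Assumptions (not from the article): the project directory and cache
# directory are passed as arguments, and DRY_RUN=1 only prints the command.

build_with_host_cache() {
  project_dir="${1:-$PWD}"
  cache_dir="${2:-$HOME/.npm}"
  # The first -v mounts the host cache at npm's default cache path in the
  # container, so packages downloaded by earlier builds are reused.
  set -- docker run --rm \
    -v "$cache_dir:/root/.npm" \
    -v "$project_dir:/app" \
    -w /app \
    node:lts-alpine \
    sh -c "npm install && npm run build"
  if [ "${DRY_RUN:-0}" = "1" ]; then
    echo "$@"
  else
    "$@"
  fi
}
```

Calling it with DRY_RUN=1 prints the docker command without running it, which is a convenient way to check the mounts first.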

1. Create a Vue sample project

Using the default configuration, create the sample project: hello-world

At this point, the project already contains all the dependencies and you can run the project directly:

Dependency packages are usually not committed to the code repository, so to better simulate the build scenario, here we delete the installed dependencies before building.
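The commands for these steps are not shown above. A plausible sequence, assuming Vue CLI (@vue/cli) is installed globally and that the removed dependencies are the node_modules directory, is sketched below. The function names are hypothetical.

```shell
#!/bin/sh
# Sketch of the project setup, assuming Vue CLI is available as `vue`.
# The function names are illustrative, not from the article.

create_sample_project() {
  # --default skips the prompts and uses Vue CLI's default preset.
  vue create --default hello-world
}

run_sample_project() {
  # `serve` is the development-server script in Vue CLI's default template.
  (cd hello-world && npm run serve)
}

remove_dependencies() {
  # Delete installed packages so the image build has to install them again.
  rm -rf "${1:-hello-world}/node_modules"
}
```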

- Add the Dockerfile file to the project

Go to the project directory:

Edit and save the Dockerfile file.

```shell
vim Dockerfile
```

```dockerfile
FROM node:lts-alpine as builder
WORKDIR /
COPY package.json /
RUN npm install
COPY . .
RUN npm run build

FROM nginx:alpine
COPY --from=builder /dist/ /usr/share/nginx/html/
EXPOSE 80
```

Execute the command.

```shell
docker build --no-cache -t shaowenchen/hello-world:v1 -f Dockerfile .

[+] Building 139.2s (13/13) FINISHED
=> [internal] load build definition from Dockerfile 2.6s
=> => transferring dockerfile: 228B 0.2s
=> [internal] load .dockerignore 3.4s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/nginx:alpine 4.2s
=> [internal] load metadata for docker.io/library/node:lts-alpine 4.3s
=> CACHED [builder 1/6] FROM docker.io/library/node:lts-alpine@sha256:2c6c59cf4d34d4f937ddfcf33bab9d8bbad8658d1b9de7b97622566a52167f2b 0.0s
=> [internal] load build context 1.8s
=> => transferring context: 5.03kB 0.4s
=> CACHED [stage-1 1/2] FROM docker.io/library/nginx:alpine@sha256:da9c94bec1da829ebd52431a84502ec471c8e548ffb2cedbf36260fd9bd1d4d3 0.0s
=> [builder 2/6] COPY package.json / 5.3s
=> [builder 3/6] RUN npm install 93.1s
=> [builder 4/6] COPY . . 5.9s
=> [builder 5/6] RUN npm run build 13.6s
=> [stage-1 2/2] COPY --from=builder /dist/ /usr/share/nginx/html/ 4.0s
=> exporting to image 4.0s
=> => exporting layers 2.3s
=> => writing image sha256:dc0f72b655eb95235b51d8fb30c430c3c1803c2d538d9948941f3e7afd23ab56 0.2s
=> => naming to docker.io/shaowenchen/hello-world:v1
```

Execute the command to create a container.

```shell
docker run --rm -it -p 80:80 shaowenchen/hello-world:v1
```

Open http://localhost in a local browser and you can see the page.

2. Optimize with BuildKit Mounted Cache

The idea of this approach is to store third-party packages in a separate cache image and mount the files from the cache image into the build environment when building the application image.

2.1 Turning on BuildKit

In Docker Engine versions before 23.0, BuildKit is turned off by default. There are two ways to turn it on:

- The first is to add the configuration { "features": { "buildkit": true }} to /etc/docker/daemon.json, which turns on BuildKit by default.

- The second is to set the environment variable DOCKER_BUILDKIT=1 each time you execute the docker build command.
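The two options can be sketched in shell as follows. This is an illustration only: the function name is made up, and the function overwrites the target file, so an existing daemon.json would need to be merged by hand instead.

```shell
#!/bin/sh
# Sketch: the two ways of enabling BuildKit described above.
# Assumption: the target file does not exist yet; this function overwrites it.

write_buildkit_daemon_json() {
  target="${1:-/etc/docker/daemon.json}"
  cat > "$target" <<'EOF'
{
  "features": {
    "buildkit": true
  }
}
EOF
}

# Option 1 (needs root, and the Docker daemon must be restarted afterwards):
#   write_buildkit_daemon_json /etc/docker/daemon.json
# Option 2 (per command):
#   DOCKER_BUILDKIT=1 docker build -t shaowenchen/hello-world:v1 -f Dockerfile .
```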

2.2 Mounting the cache using Bind

- Prepare the Dockerfile for the cache image

Create the Dockerfile-Cache file.

```shell
vim Dockerfile-Cache
```

```dockerfile
FROM node:lts-alpine as builder
WORKDIR /
COPY . .
RUN npm install
RUN npm run build
```

One small detail here: the cache image must also run npm run build so that the third-party packages are compiled ahead of time. Caching only the downloaded packages does not give good acceleration, and pre-compiling also reduces CPU and memory consumption in later builds.

- Build a cache image that contains the third-party packages.

```shell
docker build --no-cache -t shaowenchen/hello-world:cache -f Dockerfile-Cache .

[+] Building 111.9s (9/9) FINISHED
=> [internal] load build definition from Dockerfile-Cache 1.8s
=> => transferring dockerfile: 132B 0.0s
=> [internal] load .dockerignore 2.9s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/node:lts-alpine 4.2s
=> [internal] load build context 1.7s
=> => transferring context: 4.57kB 0.2s
=> CACHED [1/5] FROM docker.io/library/node:lts-alpine@sha256:2c6c59cf4d34d4f937ddfcf33bab9d8bbad8658d1b9de7b97622566a52167f2b 0.0s
=> [2/5] COPY . . 3.6s
=> [3/5] RUN npm install 69.2s
=> [4/5] RUN npm run build 14.5s
=> exporting to image 13.9s
=> => exporting layers 13.0s
=> => writing image sha256:e6ba7406f5d0c33d446ecc9a3c8e35fa593176ec9dedd899d39a1c00a14a5179 0.2s
=> => naming to docker.io/shaowenchen/hello-world:cache
```

- Prepare the Dockerfile for building the application image

```shell
vim Dockerfile-Bind
```

```dockerfile
FROM node:lts-alpine as builder
WORKDIR /
COPY . .
RUN --mount=type=bind,from=shaowenchen/hello-world:cache,source=/node_modules,target=/node_modules \
    --mount=type=bind,from=shaowenchen/hello-world:cache,source=/root/.npm,target=/root/.npm npm install
RUN --mount=type=bind,from=shaowenchen/hello-world:cache,source=/node_modules,target=/node_modules \
    --mount=type=bind,from=shaowenchen/hello-world:cache,source=/root/.npm,target=/root/.npm npm run build

FROM nginx:alpine
COPY --from=builder /dist/ /usr/share/nginx/html/
EXPOSE 80
```

Each RUN command that uses the cache needs its own --mount options.

- Build the application image

```shell
docker build --no-cache -t shaowenchen/hello-world:v1-bind -f Dockerfile-Bind .

[+] Building 55.3s (13/13) FINISHED
=> [internal] load build definition from Dockerfile-Bind 2.5s
=> => transferring dockerfile: 42B 0.0s
=> [internal] load .dockerignore 3.4s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/nginx:alpine 4.0s
=> [internal] load metadata for docker.io/library/node:lts-alpine 3.8s
=> [internal] load build context 2.4s
=> => transferring context: 4.47kB 0.2s
=> CACHED FROM docker.io/shaowenchen/hello-world:cache 0.3s
=> CACHED [stage-1 1/2] FROM docker.io/library/nginx:alpine@sha256:da9c94bec1da829ebd52431a84502ec471c8e548ffb2cedbf36260fd9bd1d4d3 0.0s
=> CACHED [builder 1/5] FROM docker.io/library/node:lts-alpine@sha256:2c6c59cf4d34d4f937ddfcf33bab9d8bbad8658d1b9de7b97622566a52167f2b 0.0s
=> [builder 2/5] COPY . . 4.2s
=> [builder 3/5] RUN --mount=type=bind,from=shaowenchen/hello-world:cache,source=/node_modules,target=/node_modules --mount=type=bind,from=shaowenchen/hello-world:cache 16.8s
=> [builder 4/5] RUN --mount=type=bind,from=shaowenchen/hello-world:cache,source=/node_modules,target=/node_modules --mount=type=bind,from=shaowenchen/hello-world:cache 13.2s
=> [stage-1 2/2] COPY --from=builder /dist/ /usr/share/nginx/html/ 3.7s
=> exporting to image 4.8s
=> => exporting layers 3.1s
=> => writing image sha256:de18663c5752a41cd61c23fb2cbbc1ac9c4c79cf5fdbe15ca16e806d0ce18d9d 0.2s
=> => naming to docker.io/shaowenchen/hello-world:v1-bind
```

As you can see, after adding the cache, the total time for npm install and npm run build drops from over 100 seconds (93.1 s + 13.6 s) to about 30 seconds (16.8 s + 13.2 s).
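The comparison can be checked by adding up the npm install and npm run build step times from the two build logs above. The sketch below only does that arithmetic with awk; the helper name is made up for illustration.

```shell
#!/bin/sh
# Sum step durations (in seconds) taken from the build logs above.
sum_seconds() {
  # Each argument is one step duration; prints the total with one decimal.
  printf '%s\n' "$@" | awk '{ total += $1 } END { printf "%.1f\n", total }'
}

without_cache=$(sum_seconds 93.1 13.6)    # plain Dockerfile: install + build
with_bind_cache=$(sum_seconds 16.8 13.2)  # Dockerfile-Bind: install + build

echo "without cache:   ${without_cache}s"
echo "with bind cache: ${with_bind_cache}s"
```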

3. Optimization with S3 storage cache

3.1 Quickly deploying MinIO
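The deployment commands are not included here. One possible way to start a single-node MinIO for testing, assuming Docker is available and reusing the access key and secret key that appear in the .s3cfg file in section 3.2, is sketched below. The container name, the /data path, the console port, and the DOCKER override are assumptions.

```shell
#!/bin/sh
# Sketch: start a throwaway single-node MinIO in Docker (testing only).
# DOCKER can be set to `echo` to print the arguments instead of running them.
DOCKER="${DOCKER:-docker}"

start_minio() {
  # 9000 is the S3 API port used in .s3cfg; 9001 serves the web console.
  $DOCKER run -d --name minio \
    -p 9000:9000 -p 9001:9001 \
    -e MINIO_ROOT_USER=minio \
    -e MINIO_ROOT_PASSWORD=minio123 \
    minio/minio server /data --console-address ":9001"
}
```

Data stored under /data is lost when the container is removed, so a real deployment would mount a volume there.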

3.2 Configuring the secret key

Create the credentials file .s3cfg in the hello-world directory.

```ini
host_base = 1.1.1.1:9000
host_bucket = 1.1.1.1:9000
use_https = False
access_key = minio
secret_key = minio123
signature_v2 = False
```
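Because .s3cfg holds a secret and sits in the project directory, it may be preferable to generate it at build time rather than keep it in the repository. The sketch below writes the same six settings from environment variables. The variable names S3_HOST, S3_ACCESS_KEY, and S3_SECRET_KEY and the function name are hypothetical.

```shell
#!/bin/sh
# Sketch: generate .s3cfg from environment variables instead of hard-coding keys.
# S3_HOST, S3_ACCESS_KEY and S3_SECRET_KEY are hypothetical variable names.

write_s3cfg() {
  target="${1:-.s3cfg}"
  # Abort with a message if any required variable is missing.
  : "${S3_HOST:?set S3_HOST, e.g. 1.1.1.1:9000}"
  : "${S3_ACCESS_KEY:?set S3_ACCESS_KEY}"
  : "${S3_SECRET_KEY:?set S3_SECRET_KEY}"
  cat > "$target" <<EOF
host_base = $S3_HOST
host_bucket = $S3_HOST
use_https = False
access_key = $S3_ACCESS_KEY
secret_key = $S3_SECRET_KEY
signature_v2 = False
EOF
  # The file contains a secret, so restrict it to the current user.
  chmod 600 "$target"
}
```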

3.3 Adapting Dockerfile to S3 cache

The main steps here are:

- install s3cmd

- get and unpack the cache, ignoring errors (first time is empty)

- … install dependencies and build

- compress and upload the cache

```shell
vim Dockerfile-S3
```

```dockerfile
FROM node:lts-alpine as builder
ARG BUCKETNAME
ENV BUCKETNAME=$BUCKETNAME

# Install Python and pip, which s3cmd needs
RUN apk add python3 && ln -sf python3 /usr/bin/python && apk add py3-pip

# Install s3cmd
RUN wget https://sourceforge.net/projects/s3tools/files/s3cmd/2.2.0/s3cmd-2.2.0.tar.gz \
    && mkdir -p /usr/local/s3cmd && tar -zxf s3cmd-2.2.0.tar.gz -C /usr/local/s3cmd \
    && ln -s /usr/local/s3cmd/s3cmd-2.2.0/s3cmd /usr/bin/s3cmd && pip3 install python-dateutil

WORKDIR /

# Get Cache (errors are ignored because the cache is empty on the first build)
COPY .s3cfg /root/
RUN s3cmd get s3://$BUCKETNAME/node_modules.tar.gz && tar xf node_modules.tar.gz || exit 0
RUN s3cmd get s3://$BUCKETNAME/npm.tar.gz && tar xf npm.tar.gz || exit 0

COPY . .
RUN npm install
RUN npm run build

# Upload Cache
RUN s3cmd del s3://$BUCKETNAME/node_modules.tar.gz || exit 0
RUN s3cmd del s3://$BUCKETNAME/npm.tar.gz || exit 0
RUN tar cvfz node_modules.tar.gz node_modules
RUN tar cvfz npm.tar.gz ~/.npm
RUN s3cmd put node_modules.tar.gz s3://$BUCKETNAME/
RUN s3cmd put npm.tar.gz s3://$BUCKETNAME/

FROM nginx:alpine
COPY --from=builder /dist/ /usr/share/nginx/html/
EXPOSE 80
```

- First time building an application image using S3 cache

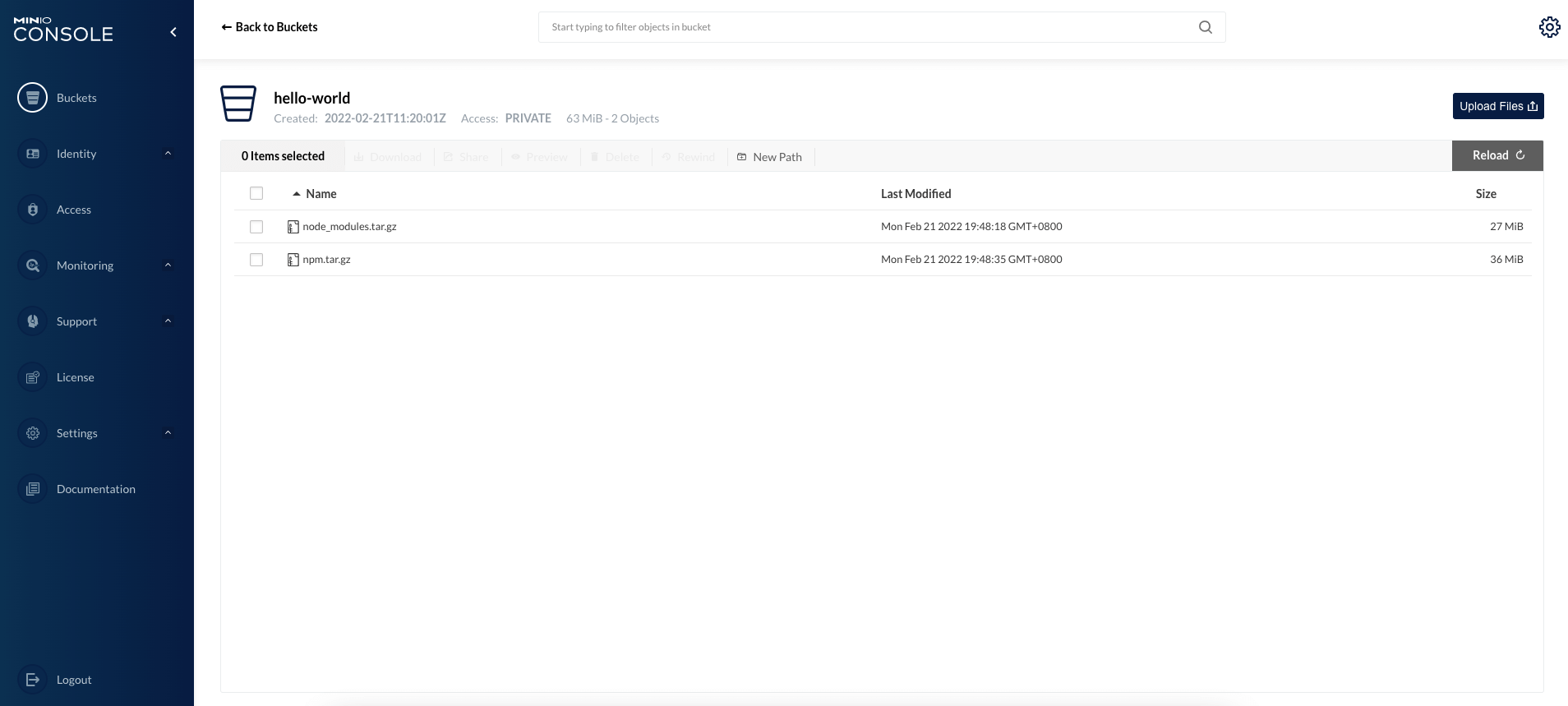

Before you build, you need to create a Bucket named hello-world in advance.
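If you prefer the command line to the MinIO UI, the bucket can probably also be created with s3cmd from a machine that can reach the S3 service. This is a sketch that assumes s3cmd is installed there and that the .s3cfg file from section 3.2 is in the current directory; the function name and the S3CMD override are illustrative.

```shell
#!/bin/sh
# Sketch: create the cache bucket with s3cmd before the first build.
# S3CMD can be set to `echo` for a dry run; --config points at the
# credentials file created earlier.
S3CMD="${S3CMD:-s3cmd}"

create_cache_bucket() {
  bucket="${1:-hello-world}"
  # `mb` is s3cmd's "make bucket" command.
  $S3CMD --config=.s3cfg mb "s3://$bucket"
}
```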

```shell
docker build --no-cache --build-arg BUCKETNAME="hello-world" -t shaowenchen/hello-world:v1-s3 -f Dockerfile-S3 .

[+] Building 244.7s (23/23) FINISHED
=> [internal] load build definition from Dockerfile-S3 1.7s
=> => transferring dockerfile: 40B 0.1s
=> [internal] load .dockerignore 2.6s
=> => transferring context: 2B 0.1s
=> [internal] load metadata for docker.io/library/nginx:alpine 2.6s
=> [internal] load metadata for docker.io/library/node:lts-alpine 0.0s
=> CACHED [builder 1/16] FROM docker.io/library/node:lts-alpine 0.0s
=> [internal] load build context 2.2s
=> => transferring context: 4.53kB 0.1s
=> CACHED [stage-1 1/2] FROM docker.io/library/nginx:alpine@sha256:da9c94bec1da829ebd52431a84502ec471c8e548ffb2cedbf36260fd9bd1d4d3 0.0s
=> [builder 2/16] RUN apk add python3 && ln -sf python3 /usr/bin/python && apk add py3-pip 32.3s
=> [builder 3/16] RUN wget https://sourceforge.net/projects/s3tools/files/s3cmd/2.2.0/s3cmd-2.2.0.tar.gz && mkdir -p /usr/local/s3cmd && tar -zxf s3cmd-2.2.0.tar.g 12.8s
=> [builder 4/16] COPY .s3cfg /root/ 5.8s
=> [builder 5/16] RUN s3cmd get s3://hello-world/node_modules.tar.gz && tar xf node_modules.tar.gz || exit 0 6.7s
=> [builder 6/16] RUN s3cmd get s3://hello-world/npm.tar.gz && tar xf npm.tar.gz || exit 0 7.3s
=> [builder 7/16] COPY . . 5.7s
=> [builder 8/16] RUN npm install 71.3s
=> [builder 9/16] RUN npm run build 14.4s
=> [builder 10/16] RUN s3cmd del s3://hello-world/node_modules.tar.gz || exit 0 7.5s
=> [builder 11/16] RUN s3cmd del s3://hello-world/npm.tar.gz || exit 0 6.9s
=> [builder 12/16] RUN tar cvfz node_modules.tar.gz node_modules 11.3s
=> [builder 13/16] RUN tar cvfz npm.tar.gz ~/.npm 9.4s
=> [builder 14/16] RUN s3cmd put node_modules.tar.gz s3://hello-world/ 14.8s
=> [builder 15/16] RUN s3cmd put npm.tar.gz s3://hello-world/ 15.9s
=> [stage-1 2/2] COPY --from=builder /dist/ /usr/share/nginx/html/ 4.5s
=> exporting to image 3.9s
=> => exporting layers 2.5s
=> => writing image sha256:dceead698b2c5f3980bf17f246078fe967dda2d9b009c30d9fdb0c60263146e5 0.1s
=> => naming to docker.io/shaowenchen/hello-world:v1-s3
```

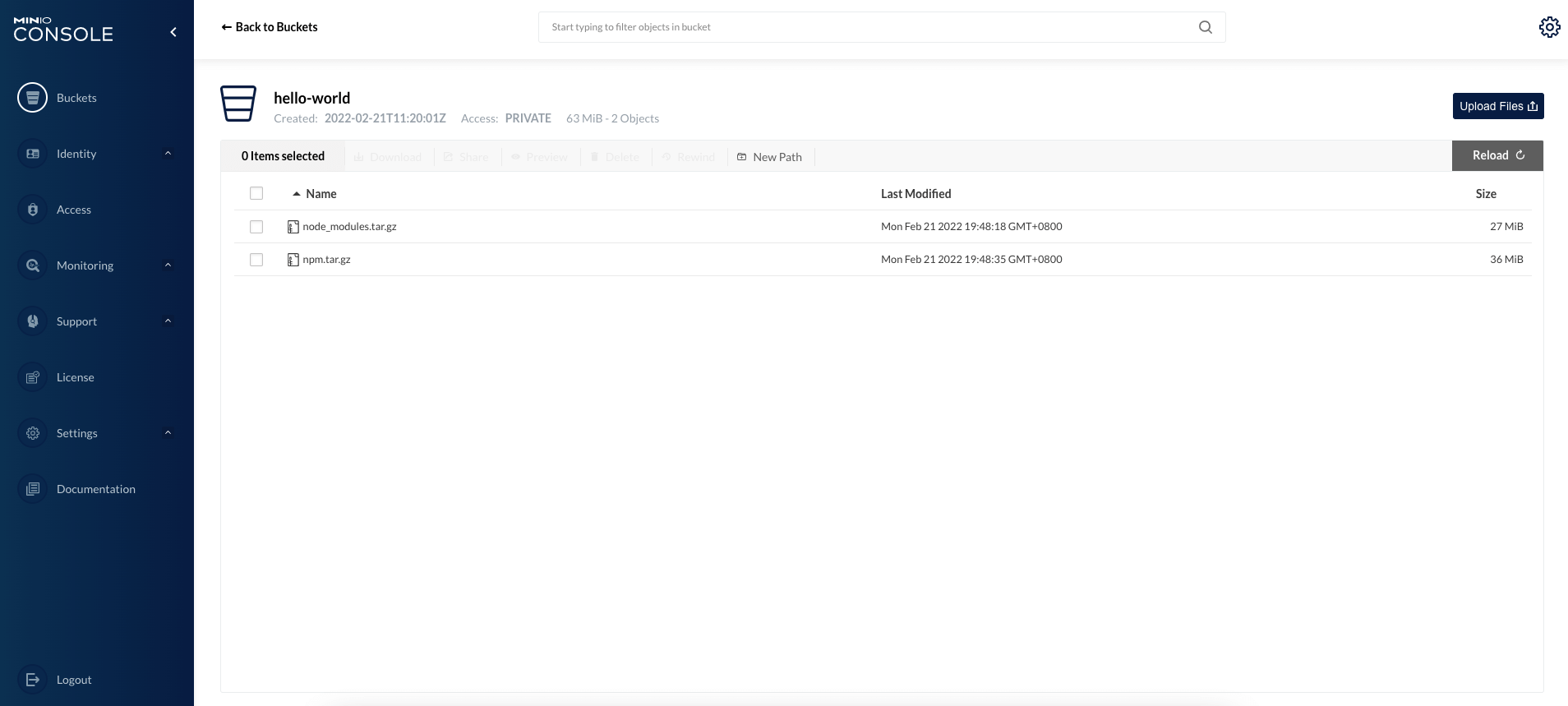

You can see the relevant cache files in the MinIO UI.

- Build the application image again using the S3 cache

```shell
docker build --no-cache --build-arg BUCKETNAME="hello-world" -t shaowenchen/hello-world:v1-s3 -f Dockerfile-S3 .

[+] Building 213.8s (23/23) FINISHED
=> [internal] load build definition from Dockerfile-S3 2.0s
=> => transferring dockerfile: 40B 0.0s
=> [internal] load .dockerignore 2.7s
=> => transferring context: 2B 0.0s
=> [internal] load metadata for docker.io/library/nginx:alpine 4.6s
=> [internal] load metadata for docker.io/library/node:lts-alpine 0.0s
=> CACHED [builder 1/16] FROM docker.io/library/node:lts-alpine 0.0s
=> CACHED [stage-1 1/2] FROM docker.io/library/nginx:alpine@sha256:da9c94bec1da829ebd52431a84502ec471c8e548ffb2cedbf36260fd9bd1d4d3 0.0s
=> [internal] load build context 1.9s
=> => transferring context: 4.53kB 0.1s
=> [builder 2/16] RUN apk add python3 && ln -sf python3 /usr/bin/python && apk add py3-pip 30.9s
=> [builder 3/16] RUN wget https://sourceforge.net/projects/s3tools/files/s3cmd/2.2.0/s3cmd-2.2.0.tar.gz && mkdir -p /usr/local/s3cmd && tar -zxf s3cmd-2.2.0.tar.g 13.2s
=> [builder 4/16] COPY .s3cfg /root/ 5.5s
=> [builder 5/16] RUN s3cmd get s3://hello-world/node_modules.tar.gz && tar xf node_modules.tar.gz || exit 0 16.7s
=> [builder 6/16] RUN s3cmd get s3://hello-world/npm.tar.gz && tar xf npm.tar.gz || exit 0 15.3s
=> [builder 7/16] COPY . . 4.7s
=> [builder 8/16] RUN npm install 18.4s
=> [builder 9/16] RUN npm run build 13.6s
=> [builder 10/16] RUN s3cmd del s3://hello-world/node_modules.tar.gz || exit 0 7.4s
=> [builder 11/16] RUN s3cmd del s3://hello-world/npm.tar.gz || exit 0 7.9s
=> [builder 12/16] RUN tar cvfz node_modules.tar.gz node_modules 10.8s
=> [builder 13/16] RUN tar cvfz npm.tar.gz ~/.npm 10.0s
=> [builder 14/16] RUN s3cmd put node_modules.tar.gz s3://hello-world/ 17.9s
=> [builder 15/16] RUN s3cmd put npm.tar.gz s3://hello-world/ 16.3s
=> [stage-1 2/2] COPY --from=builder /dist/ /usr/share/nginx/html/ 5.0s
=> exporting to image 3.8s
=> => exporting layers 2.5s
=> => writing image sha256:a9c46eef6073b3ef8e6c4cd33cc1ed11c94dcebdb0883c89283883d9434de331 0.2s
=> => naming to docker.io/shaowenchen/hello-world:v1-s3 0.2s
```

As you can see, with the cache in place npm install and npm run build together take about 32 seconds (down from about 86 seconds in the first build), but the S3 transfer steps (get, del, and put) take about 80 seconds.

Those 80 seconds can be optimized: the network speed between the build environment and the S3 service is limited to 1.2 MB/s, which makes the pull and push times too long. I think it would be more reasonable for them to stay within 30 seconds.
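Besides a faster network, one way to cut the S3 time is to upload the cache only when the dependency manifest has changed, and to skip the separate delete step, because uploading an object under an existing key replaces it. The sketch below illustrates the idea under stated assumptions: the function names, the .cache-checksum stamp file, the S3CMD override, and the use of package.json as the manifest are all made up for illustration, and this is not the author's method.

```shell
#!/bin/sh
# Sketch: upload the dependency cache only when package.json has changed.
# Assumptions: S3CMD, BUCKETNAME and the stamp file name are illustrative;
# the stamp is stored inside node_modules so it travels with the archive.
S3CMD="${S3CMD:-s3cmd}"

manifest_checksum() {
  cksum < "${1:-package.json}"
}

save_cache_if_changed() {
  manifest="${1:-package.json}"
  stamp="node_modules/.cache-checksum"
  current=$(manifest_checksum "$manifest")
  if [ -f "$stamp" ] && [ "$(cat "$stamp")" = "$current" ]; then
    echo "cache unchanged, skipping upload"
    return 0
  fi
  printf '%s\n' "$current" > "$stamp"
  tar czf node_modules.tar.gz node_modules
  # put replaces an existing object with the same key, so no del is needed.
  $S3CMD put node_modules.tar.gz "s3://$BUCKETNAME/"
}
```

In Dockerfile-S3 this would replace the del, tar, and put steps for node_modules with a single RUN step. A restored cache already contains the stamp file, so a build with unchanged dependencies skips the upload.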

4. Summary

Cache acceleration is a difficult issue for CI products. Users work in different ways, and all we can do is provide solutions for their scenarios without forcing them to change their habits. In my previous CI product, the cache was mounted from the host into the build environment, which does not work for multi-stage builds. There are two main options here:

The first is to enable the BuildKit feature and store third-party dependencies in a cache image. The cache image can be updated regularly according to a policy, and the third-party packages in it are mounted when building the application image.

The second way is to use S3 to store third-party dependencies and use the s3cmd command to manage the cache at build time.

Neither of these two approaches is ideal. The main reason is that both require changes to the Dockerfile, which is fairly intrusive for the users' projects.
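For the first option, the policy for updating the cache image could be as simple as rebuilding it whenever package.json changes. The sketch below shows one such policy. The function names, the stamp file, and the DOCKER override are assumptions for illustration; a real CI system might use a schedule or a lockfile instead.

```shell
#!/bin/sh
# Sketch: rebuild the cache image only when package.json changed since the
# last refresh. DOCKER can be set to `echo` for a dry run.
DOCKER="${DOCKER:-docker}"

needs_refresh() {
  # $1: path to package.json, $2: file holding the checksum of the last refresh
  manifest="$1"
  stamp="$2"
  current=$(cksum < "$manifest")
  [ ! -f "$stamp" ] || [ "$current" != "$(cat "$stamp")" ]
}

refresh_cache_image() {
  manifest="$1"
  stamp="$2"
  if needs_refresh "$manifest" "$stamp"; then
    # Record the checksum only after the image was built successfully.
    DOCKER_BUILDKIT=1 $DOCKER build -t shaowenchen/hello-world:cache -f Dockerfile-Cache . \
      && cksum < "$manifest" > "$stamp"
  else
    echo "cache image is up to date"
  fi
}
```

Running refresh_cache_image package.json .cache-stamp before each application build keeps the cache image in step with the dependencies without rebuilding it every time.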