1. Global network planning

Many globally oriented, multi-region infrastructures are designed without much thought given to network planning at the start. Once the business reaches a certain level of complexity, teams are forced to adjust and optimize the network, and any major network change has a huge impact on the business. In the end you are caught in a dilemma: you have to invest more manpower, carry the historical burden, and walk a tightrope again and again.

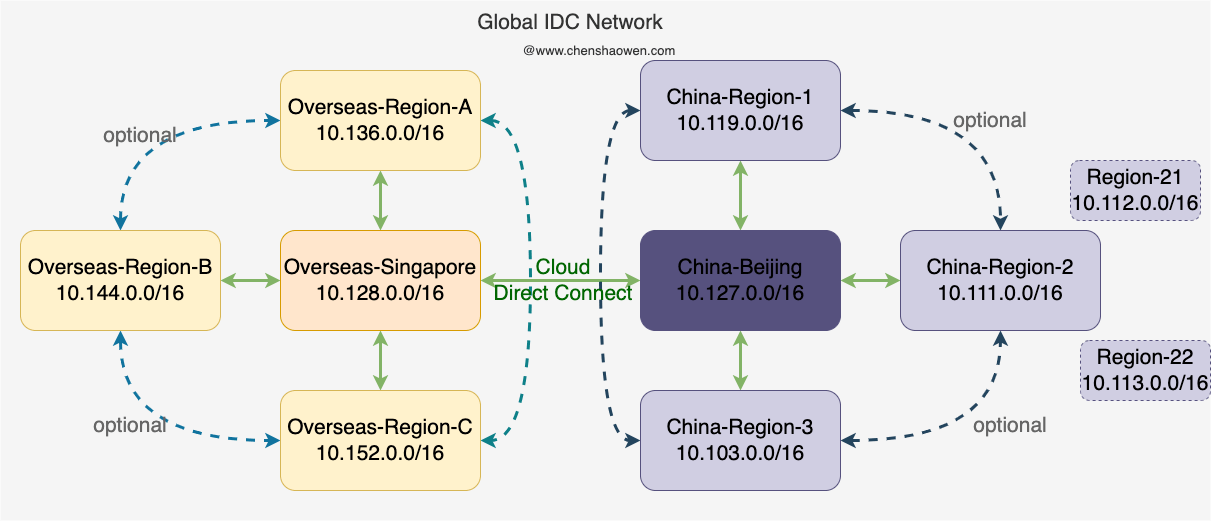

The following diagram is a network topology that I think is ideal:

The main points of network planning are as follows:

- Network segmentation

Under the global business model, the network is divided into two parts: overseas and mainland China. I prefer to set up two centers, with the core node in Beijing for domestic business and the core node in Singapore for overseas business.

Accordingly, segments from 10.128.0.0/16 upward are assigned to overseas regions, and segments from 10.127.0.0/16 downward are assigned to domestic regions. Within each side, the segments of adjacent regions are spaced 8 apart, which leaves room for expansion.
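
The split can be sketched with Python's standard ipaddress module. This is a minimal sketch that makes three assumptions not stated above: each region gets one /16, regions are allocated upward from the bottom of their half (10.0.0.0/16 for domestic, 10.128.0.0/16 for overseas), and "spaced 8 apart" means a step of 8 in the second octet.

```python
from __future__ import annotations

import ipaddress

# The 10.128.0.0 boundary from the text: 10.0.0.0/9 covers 10.0.x.x-10.127.x.x
# (domestic) and 10.128.0.0/9 covers 10.128.x.x-10.255.x.x (overseas).
DOMESTIC = ipaddress.ip_network("10.0.0.0/9")
OVERSEAS = ipaddress.ip_network("10.128.0.0/9")

# Assumed reading of "spaced 8 apart": adjacent regions differ by 8 in the
# second octet, so each region keeps 7 unused /16s for later expansion.
STEP = 8


def region_cidrs(side: ipaddress.IPv4Network, count: int) -> list[ipaddress.IPv4Network]:
    """Return one /16 per region, allocated upward from the bottom of `side` (assumed order)."""
    slots = list(side.subnets(new_prefix=16))[::STEP]  # every 8th /16 in this half
    if count > len(slots):
        raise ValueError(f"only {len(slots)} region slots available in {side}")
    return slots[:count]


def side_of(ip: str) -> str:
    """Classify an address by which half of 10.0.0.0/8 it falls in."""
    addr = ipaddress.ip_address(ip)
    if addr in OVERSEAS:
        return "overseas"
    if addr in DOMESTIC:
        return "domestic"
    return "outside 10.0.0.0/8"


for net in region_cidrs(OVERSEAS, 3):
    print(net)  # prints 10.128.0.0/16, 10.136.0.0/16, 10.144.0.0/16 on separate lines
print(side_of("10.127.3.4"), side_of("10.200.0.1"))  # domestic overseas
```

With a step of 8, each half has room for 16 regions. Choosing a smaller step would allow more regions but leave each one less space to grow.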

- Enabling connectivity

Within the same VPC, hosts can already reach each other over the intranet. Between different VPCs, vendors, or regions, connectivity is usually achieved in one of two ways: over the public network or over a private line.

The public network is the more general option, because we can build a VPN-based intranet on top of it. However, the quality of public network connections is not guaranteed, which is why private lines are the alternative.

Private lines provide cross-region connectivity, but cloud private lines are usually limited to a single cloud vendor. For example, a Huawei Cloud private line from Beijing can connect to Huawei Cloud Singapore, but not to AWS Singapore.

- Configuring routing

Establishing connectivity is like plugging in a network cable. When forwarding packets, a host still does not know the next hop for an IP packet, so routes must be configured as well.

Since there are two network cores, the overseas regions need to interconnect with the overseas core node, and the domestic regions need to interconnect with the domestic core node. Whether other regions interconnect directly depends on need. For example, if we distribute image data over the intranet via P2P, the regions also need to interconnect.
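
A route table is essentially a longest-prefix-match lookup from destination to next hop. The sketch below models what one overseas region's table might contain. It assumes the region's own segment is 10.136.0.0/16 and that the two core nodes are interconnected, so domestic ranges are reached through the Singapore core. It also includes one optional direct route of the kind P2P distribution would need. The next-hop names are hypothetical placeholders, not real gateway IDs.

```python
from __future__ import annotations

import ipaddress

# Hypothetical route table for one overseas region whose own segment is
# 10.136.0.0/16. Next-hop names are illustrative placeholders.
ROUTES = [
    ("10.136.0.0/16", "local"),        # the region's own VPC
    ("10.0.0.0/8", "singapore-core"),  # all other 10/8 traffic goes via the overseas core
    ("10.144.0.0/16", "peer-10.144"),  # optional direct route, e.g. for intranet P2P
]

TABLE = [(ipaddress.ip_network(cidr), hop) for cidr, hop in ROUTES]


def next_hop(dst: str) -> str | None:
    """Pick the next hop by longest-prefix match; None means no route (packet dropped)."""
    addr = ipaddress.ip_address(dst)
    matches = [(net, hop) for net, hop in TABLE if addr in net]
    if not matches:
        return None
    # The most specific prefix wins, regardless of its position in the table.
    return max(matches, key=lambda m: m[0].prefixlen)[1]


print(next_hop("10.136.1.5"))  # local
print(next_hop("10.8.0.20"))   # singapore-core (a domestic address, reached via the cores)
print(next_hop("10.144.2.2"))  # peer-10.144
print(next_hop("172.16.0.1"))  # None
```

Because the broad 10.0.0.0/8 route points at the core, adding a more specific peer route later changes only the traffic it covers and leaves everything else on the hub path.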

2. Build global image distribution capabilities

Global image distribution is built on the premise that the intranets of all global IDCs are interconnected. The infrastructure must not be exposed to the public network, so all image data travels over intranet traffic.

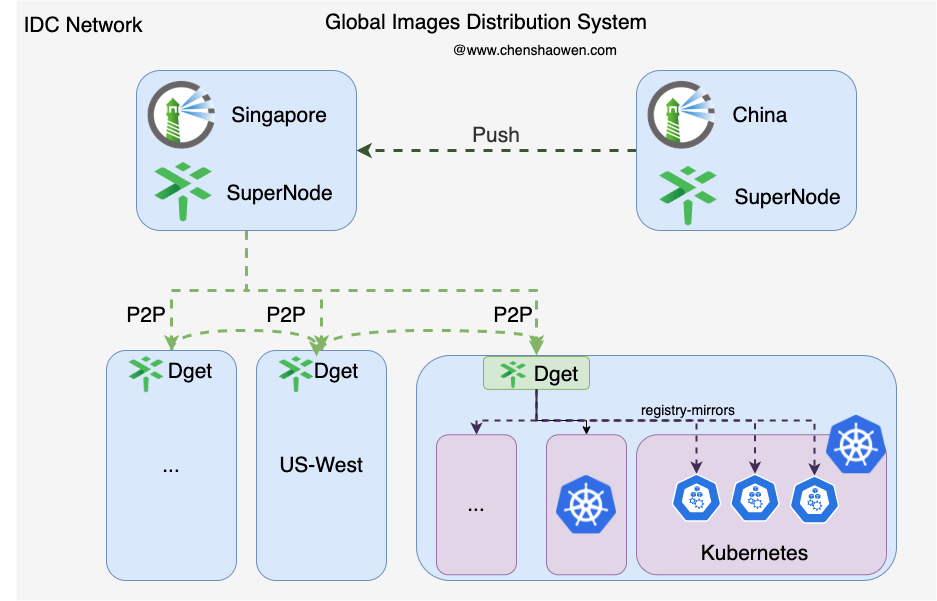

The following figure shows a global image distribution system:

Our R&D department is based in China, while our services are deployed worldwide. Image data flows through the following steps:

- The image is built in China and pushed to the domestic Harbor.

- The domestic Harbor replicates the image to the overseas Harbor.

- An overseas application is deployed in a region and pulls the image.

- The application image is cached in Dget, because each Docker daemon has the Dget address configured in registry-mirrors.

- Other replicas of the deployment in the same region pull the image directly from Dget.
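
On each Docker host, the mirror setting lives in the daemon configuration file, daemon.json. The sketch below uses the standard json module to merge a regional Dget address into an existing configuration. The URLs are hypothetical placeholders, and writing the file and restarting dockerd are not shown. Docker documents registry-mirrors as a mirror for Docker Hub pulls, so it is worth confirming how your Dget/Dragonfly setup intercepts pulls of images hosted on a private Harbor.

```python
import json


def with_regional_mirror(config: dict, mirror_url: str) -> dict:
    """Return a copy of a daemon.json dict with mirror_url first in registry-mirrors.

    Other keys are preserved, and an existing entry for the same URL is not duplicated.
    """
    updated = dict(config)  # shallow copy; the mirror list below is rebuilt, not mutated
    others = [m for m in config.get("registry-mirrors", []) if m != mirror_url]
    updated["registry-mirrors"] = [mirror_url, *others]
    return updated


# Hypothetical existing config and regional Dget endpoint.
current = {"log-driver": "json-file", "registry-mirrors": ["https://mirror.example.com"]}
new = with_regional_mirror(current, "http://dget.sg-region.internal")
print(json.dumps(new, indent=2))
```

Putting the regional endpoint first makes it the preferred mirror. Keeping the function idempotent means it is safe to run repeatedly from provisioning scripts.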

3. Harbor deployment and high availability

3.1 Deploying Harbor

There are two main ways to deploy Harbor: the Helm chart and Docker Compose. Docker Compose is recommended here. Harbor is a service that changes infrequently and needs high stability, so a VM is a more suitable foundation for it than Kubernetes.

3.2 Highly Available Harbor

There are two main approaches to high availability in Harbor:

- Shared storage. Strong consistency, but the storage backend must be deployed as active-active or primary/standby.

- Replication between multiple Harbor instances. Weaker consistency, since synchronizing images takes time.

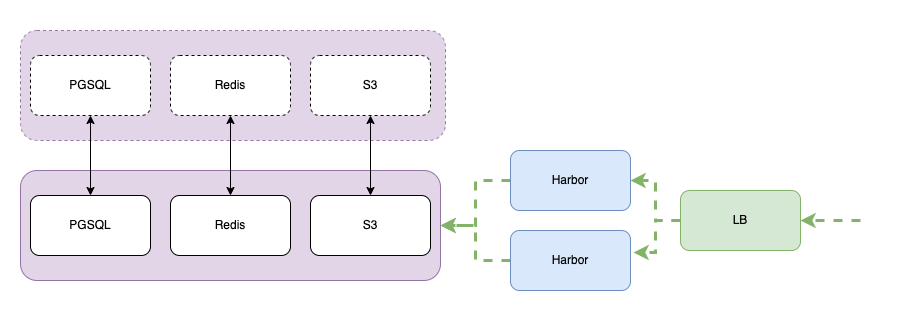

The solution I recommend is shared storage, so you don’t have to wait for the Harbor to finish synchronizing, and you can use the image after pushing. In the figure below, the shared storage solution requires the storage components to be deployed as a dual-active/primary backup:

One small detail concerns the load balancer (LB) configuration.

If you use a layer-7 LB to offload the TLS certificate, the backend hosts serve port 80, so you need to forward port 80 to 443 at the LB layer. Otherwise docker login will fail. With a layer-4 LB, this problem does not arise. Another issue can come up while debugging. VPNs and LBs modify IP packets (a VPN, for example, wraps each packet in extra headers), and this can cause odd problems such as connections that fail or are unstable. When that happens, check the MTU value.
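
When checking the MTU, a quick calculation shows how much room each encapsulation leaves. The sketch below assumes a 1500-byte underlay MTU and uses the header sizes of a few common tunnel types as examples. The VPN and LB on your actual path may add different overhead, so treat the table as illustrative rather than exhaustive.

```python
from __future__ import annotations

# Per-packet bytes added by some common tunnels over an IPv4 underlay (illustrative;
# vendor VPN and LB overheads vary and are not listed here).
TUNNEL_OVERHEAD = {
    "gre": 24,        # outer IPv4 (20) + basic GRE header (4)
    "vxlan": 50,      # outer IPv4 (20) + UDP (8) + VXLAN (8) + inner Ethernet (14)
    "wireguard": 60,  # outer IPv4 (20) + UDP (8) + WireGuard data header and auth tag (32)
}

IPV4_MIN_MTU = 68  # smallest MTU every IPv4 link must support (RFC 791)


def inner_mtu(underlay_mtu: int, tunnels: list[str]) -> int:
    """MTU left for the inner packet after each tunnel on the path adds its headers."""
    mtu = underlay_mtu - sum(TUNNEL_OVERHEAD[t] for t in tunnels)
    if mtu < IPV4_MIN_MTU:
        raise ValueError(f"tunnel overhead leaves an unusable MTU of {mtu}")
    return mtu


def tcp_mss(mtu: int) -> int:
    """Largest TCP payload per segment: MTU minus 20-byte IPv4 and 20-byte TCP headers (no options)."""
    return mtu - 20 - 20


mtu = inner_mtu(1500, ["wireguard"])
print(mtu, tcp_mss(mtu))  # 1440 1400
```

Suppose hosts keep sending 1500-byte packets through such a tunnel and the ICMP messages needed for path-MTU discovery are lost. Small packets then get through while large ones (TLS handshakes, image layer transfers) are dropped, which often looks like an unstable connection. Lowering the interface MTU or clamping the TCP MSS to the computed value is the usual remedy.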

The components that need to be shared here are:

- Shared PostgreSQL

You can buy a managed PostgreSQL service directly from the cloud vendor and then run the initial table creation.

Then add the external database configuration to the harbor.yml file.
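
A minimal sketch of that section follows. It renders an external_database block in the shape used by the harbor.yml template in Harbor 2.x: a harbor sub-section with host, port, db_name, username, password and ssl_mode. Check the key names against the template shipped with your Harbor version. The host, database name and credentials here are hypothetical placeholders. String values are emitted as JSON strings, which YAML also accepts as double-quoted scalars, so passwords with special characters stay intact.

```python
import json

SSL_MODES = {"disable", "require", "verify-ca", "verify-full"}  # common PostgreSQL sslmode values


def render_external_database(host: str, port: int, db_name: str,
                             username: str, password: str, ssl_mode: str = "require") -> str:
    """Render a harbor.yml external_database block pointing Harbor at a managed PostgreSQL."""
    if ssl_mode not in SSL_MODES:
        raise ValueError(f"unsupported ssl_mode: {ssl_mode}")
    fields = [
        ("host", json.dumps(host)),
        ("port", str(port)),
        ("db_name", json.dumps(db_name)),
        ("username", json.dumps(username)),
        ("password", json.dumps(password)),  # quoted so ':' or '#' survive YAML parsing
        ("ssl_mode", ssl_mode),
    ]
    lines = ["external_database:", "  harbor:"]
    lines += [f"    {key}: {value}" for key, value in fields]
    return "\n".join(lines) + "\n"


# Hypothetical managed-database endpoint and credentials.
print(render_external_database("pg.example.internal", 5432, "registry", "harbor", "s3cr3t:#pw"))
```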

- Shared Redis

Harbor's Redis mainly stores session information, which affects logins to the Harbor UI. If you don't have high-availability requirements here, a self-hosted Redis instance is enough: even if the data in Redis is lost, Harbor's data integrity is not affected.

- Shared S3 Object Storage

I use Huawei Cloud OBS object storage, and the AK/SK (access key and secret key) used by Harbor needs full permissions.

If you are concerned about S3 being a single point of failure, you can purchase two buckets and replicate image data between them. That way, when one bucket fails, you can quickly switch to the other to restore service.
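
Harbor is pointed at a bucket through the storage_service section of harbor.yml. That section passes s3 settings through to the registry's S3 storage driver (accesskey, secretkey, region, regionendpoint, bucket). The sketch below renders that section for whichever of two buckets is currently active, so a bucket switch becomes a small configuration change. It assumes OBS is accessed through its S3-compatible interface. The endpoint, region and bucket names are hypothetical, so check the exact endpoint format in Huawei Cloud's documentation.

```python
import json


def render_s3_storage(endpoint: str, region: str, bucket: str,
                      access_key: str, secret_key: str) -> str:
    """Render a harbor.yml storage_service block for an S3-compatible bucket."""
    fields = [
        ("accesskey", access_key),
        ("secretkey", secret_key),
        ("region", region),
        ("regionendpoint", endpoint),
        ("bucket", bucket),
    ]
    lines = ["storage_service:", "  s3:"]
    lines += [f"    {key}: {json.dumps(value)}" for key, value in fields]
    return "\n".join(lines) + "\n"


def active_bucket(primary: str, standby: str, primary_healthy: bool) -> str:
    """Fail over to the replicated standby bucket when the primary is unhealthy."""
    return primary if primary_healthy else standby


# Hypothetical names; the two buckets are assumed to replicate image data to each other.
bucket = active_bucket("harbor-images-a", "harbor-images-b", primary_healthy=False)
print(render_s3_storage("https://obs-endpoint.example.com", "example-region",
                        bucket, "AK_PLACEHOLDER", "SK_PLACEHOLDER"))
```

After switching, Harbor still has to pick up the regenerated configuration. With the Docker Compose installer, that means re-running its prepare step and restarting the containers.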

4. Save bandwidth with Dragonfly

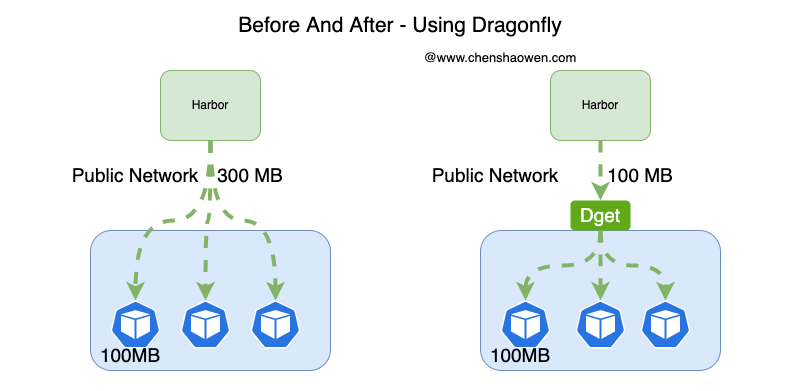

Why do you need Dragonfly to distribute images? One of the big reasons is to save bandwidth, and to avoid overloading Habor.

If you didn’t use Dragonfly to distribute images, you would be requesting data from Habor every time you pulled a image. This is shown in the figure below:

With Dragonfly, the same region only needs to request Harbor once, and all other requests can be completed through intra-regional traffic. This approach greatly speeds up the image pulling process, saves bandwidth across regions, and reduces the load on Habor.
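
The saving is easy to estimate. The sketch below counts how many times Harbor has to serve an image under a deliberately idealized model. In this model every node pulls the same image once, nothing is cached beforehand, and with P2P each region fetches from Harbor exactly once. The region names, node counts and image size are made-up numbers.

```python
from __future__ import annotations


def harbor_fetches(nodes_per_region: dict[str, int], use_p2p: bool) -> int:
    """Count image downloads served by Harbor when every node pulls the same image once."""
    if use_p2p:
        # Idealized P2P: one fetch per region that has at least one node; peers share the rest.
        return sum(1 for n in nodes_per_region.values() if n > 0)
    return sum(nodes_per_region.values())


# Hypothetical rollout: a 500 MB image deployed to three overseas regions.
IMAGE_MB = 500
regions = {"region-a": 20, "region-b": 50, "region-c": 10}
without = harbor_fetches(regions, use_p2p=False) * IMAGE_MB
with_p2p = harbor_fetches(regions, use_p2p=True) * IMAGE_MB
print(without, with_p2p)  # 40000 1500
```

Without P2P, Harbor's load and the cross-region traffic grow with the number of nodes. With P2P, they grow with the number of regions. Real figures also depend on which layers are already cached on each node.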

5. Summary

I recently re-planned the deployment of an image management system for our business, and this article summarizes some of the related thinking and practice.

In this article, we started with network planning and moved on to global image distribution. Network planning consists of three parts: network segment planning, connectivity, and routing configuration. Image distribution mainly uses a Harbor + Dragonfly scheme, and I recommend deploying a highly available Harbor on shared storage.

After deploying Harbor, I also tested image pull speeds from each region. Beyond that, the dependencies that affect the Harbor service and their configuration need to be monitored and continuously improved in order to build a good image repository and distribution system.