When using Linux, a network or disk I/O problem can leave a process stuck. Even kill -9 cannot kill it, and common debugging tools such as strace and pstack stop working.

If we then list the processes with ps, we see that the state of the stuck process is shown as D.

The D state is described in man ps as Uninterruptible Sleep.

Linux processes have two sleep states.

- Interruptible sleep, shown by ps as S. A process in this state can be woken up by sending it a signal.

- Uninterruptible sleep, shown by ps as D. A process in this state cannot immediately handle any signal sent to it, which is why kill cannot kill it.
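The state letter that ps prints comes from the third field of /proc/<pid>/stat (see proc(5)). Here is a minimal sketch that reads the field directly. It assumes a Linux host with procfs mounted, and `parse_state` and `process_state` are hypothetical names used only for illustration.

```python
# Human-readable names for the state letters discussed above (see proc(5)).
STATE_NAMES = {
    "R": "running",
    "S": "interruptible sleep",
    "D": "uninterruptible sleep",
    "T": "stopped",
    "Z": "zombie",
}


def parse_state(stat_line: str) -> str:
    """Return the state letter from the contents of /proc/<pid>/stat.

    The second field (the command name) is wrapped in parentheses and may
    itself contain spaces or ')', so split after the *last* ')' instead of
    naively splitting the whole line on whitespace.
    """
    rest = stat_line[stat_line.rindex(")") + 1:]
    return rest.split()[0]


def process_state(pid: int) -> str:
    """Read the state letter of a live process (Linux only)."""
    with open(f"/proc/{pid}/stat") as f:
        return parse_state(f.read())
```

`process_state(pid)` should return "S" for a process in interruptible sleep and "D" for one in uninterruptible sleep. `STATE_NAMES.get(...)` turns the letter into a readable label.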

An answer on Stack Overflow explains it as follows:

kill -9 just sends a SIGKILL signal to the process. When a process is in a special state (signal handling, or in a system call), it cannot handle any signals, including SIGKILL, so it cannot be killed immediately. This is often referred to as the D state (uninterruptible sleep). Common debugging tools such as strace and pstack, which also rely on particular signals, cannot be used in this state either.

As you can see, a process in the D state is usually inside a system call in kernel mode. So how do you find out which system call it is and what it is waiting for? Fortunately, Linux provides procfs (the /proc directory), which lets you see the current kernel call stack of any process. Let’s simulate this with a process accessing JuiceFS. Because the JuiceFS client is based on FUSE (Filesystem in Userspace), I/O failures are easy to simulate.
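Reading a kernel call stack only requires reading a file. The sketch below extracts the function names from /proc/<pid>/stack. It assumes the usual one-frame-per-line format, and on recent kernels reading the file usually requires root. `parse_stack` and `kernel_stack` are hypothetical helper names.

```python
from __future__ import annotations

import re

# Each line of /proc/<pid>/stack looks like
#   [<0>] request_wait_answer+0x12b/0x210 [fuse]
# Recent kernels print the address as 0; older ones print the real address.
FRAME_RE = re.compile(r"^\[<[0-9a-fx]+>\]\s+([^+\s]+)")


def parse_stack(text: str) -> list[str]:
    """Return the function names in a kernel stack dump, innermost first."""
    frames = []
    for line in text.splitlines():
        m = FRAME_RE.match(line.strip())
        # Skip bare addresses such as the 0xffffffffffffffff end marker
        # printed by some older kernels.
        if m and not m.group(1).startswith("0x"):
            frames.append(m.group(1))
    return frames


def kernel_stack(pid: int) -> list[str]:
    """Read and parse the kernel stack of `pid` (usually needs root)."""
    with open(f"/proc/{pid}/stack") as f:
        return parse_stack(f.read())
```

The innermost frame tells you where the task is sleeping. Frames further down show which system call led there.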

First mount JuiceFS in the foreground (add the -f argument to the ./juicefs mount command), then stop the client process with Ctrl+Z. If you then access the mount point with ls /jfs, you will see that ls is stuck.
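Ctrl+Z sends SIGTSTP to the foreground job. If the JuiceFS client runs elsewhere, for example in another terminal, SIGSTOP has the same freezing effect and cannot be caught or ignored. SIGCONT resumes the process. Here is a minimal sketch of that; `freeze`, `thaw` and `wait_for_state` are hypothetical helper names, and the code is Linux-only because it polls /proc.

```python
import os
import signal
import time


def wait_for_state(pid: int, wanted: str, timeout: float = 2.0) -> bool:
    """Poll /proc/<pid>/stat until the state letter equals `wanted`."""
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        with open(f"/proc/{pid}/stat") as f:
            data = f.read()
        # The state letter is the first field after the ')' closing comm.
        if data[data.rindex(")") + 1:].split()[0] == wanted:
            return True
        time.sleep(0.01)
    return False


def freeze(pid: int) -> None:
    """Stop a process, like Ctrl+Z does for a foreground job."""
    os.kill(pid, signal.SIGSTOP)


def thaw(pid: int) -> None:
    """Resume a stopped process."""
    os.kill(pid, signal.SIGCONT)
```

For example, with `client_pid` standing in for the PID of the JuiceFS client, call `freeze(client_pid)` before running ls /jfs. Then call `thaw(client_pid)` so the pending request can be answered.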

With the following command, you can see that ls is stuck in vfs_fstatat, which has sent a getattr request to the FUSE device and is waiting for a response. Because we stopped the JuiceFS client process, the response never arrives, so ls stays stuck.

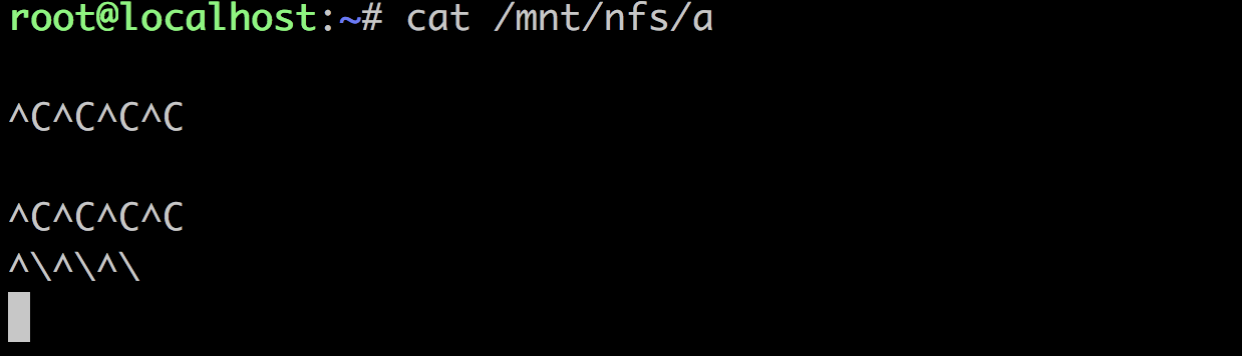
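One consequence is that any check of a FUSE mount point can hang too. A common workaround is to run os.stat() in a daemon thread and give up after a timeout. The sketch below assumes that abandoning a stuck thread is acceptable, and `probe_path` is a hypothetical name.

```python
import os
import threading


def probe_path(path: str, timeout: float = 3.0) -> bool:
    """Return True only if os.stat(path) succeeds within `timeout` seconds.

    The stat runs in a daemon thread, so the caller never blocks on a hung
    FUSE mount. A stat that times out or raises OSError counts as unhealthy.
    """
    result = []

    def worker() -> None:
        try:
            os.stat(path)
            result.append(True)
        except OSError:
            result.append(False)

    t = threading.Thread(target=worker, daemon=True)
    t.start()
    t.join(timeout)
    # An empty result means the thread is still blocked in the kernel.
    return bool(result) and result[0]
```

The stuck thread is abandoned, not cancelled. If it is in uninterruptible sleep, it may also delay the exit of the probing process until the kernel wait completes.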

Pressing Ctrl+C at this point does not make ls exit either.

But once strace attaches to it, ls wakes up, handles the pending interrupt signal from Ctrl+C, and exits.

It can also be killed with kill -9 at this point.

Because a simple call such as vfs_fstatat() does not block signals like SIGKILL, SIGQUIT and SIGABRT, these signals can be handled in the usual way.
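You can see the same behaviour with any system call that sleeps interruptibly. The Python sketch below assumes Linux or another Unix and must be called from the main thread. In it, read() on an empty pipe blocks in the S state, and a SIGALRM handler that raises an exception breaks it out right away. Python retries read() on EINTR by itself (PEP 475), so the handler has to raise for the interruption to reach the caller. `blocking_read_with_timeout` and `Interrupted` are hypothetical names.

```python
import os
import signal


class Interrupted(Exception):
    """Raised from the signal handler to break out of a blocking call."""


def _on_alarm(signum, frame):
    raise Interrupted


def blocking_read_with_timeout(seconds: float) -> bool:
    """Block in read() on an empty pipe and let SIGALRM interrupt it.

    Returns True if the read was interrupted by the signal.
    """
    r, w = os.pipe()
    old = signal.signal(signal.SIGALRM, _on_alarm)
    signal.setitimer(signal.ITIMER_REAL, seconds)
    try:
        os.read(r, 1)  # nothing is ever written to w, so this sleeps
        return False
    except Interrupted:
        return True
    finally:
        signal.setitimer(signal.ITIMER_REAL, 0)  # cancel any pending timer
        signal.signal(signal.SIGALRM, old)
        os.close(r)
        os.close(w)
```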

Let’s simulate a more complex I/O error. Configure JuiceFS with a storage backend that cannot be written to, mount it, and try to write to it with cp. cp gets stuck as well.

Why is cp stuck in close_fd()? Because writes to JuiceFS are asynchronous. When cp calls write(), the data is first cached in the JuiceFS client process and written to the backend storage asynchronously. When cp has finished writing, it calls close() to make sure the data has been written, and this corresponds to the FUSE flush operation. On flush, the client must ensure that all written data is persisted to the backend storage. Since writes to the backend storage fail, the client keeps retrying. The flush operation therefore hangs without replying to cp, and cp hangs too.
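A toy model makes this write path easier to picture. It is a hypothetical illustration, not JuiceFS code. write() only buffers, and flush(), which stands for what happens when the application calls close(), retries the upload with exponential backoff. The retry count is bounded here so the example terminates; a real client may retry for much longer, which is what keeps cp waiting.

```python
import errno
import time
from typing import Callable, List


class WriteBackFile:
    """Hypothetical model of an asynchronous write path."""

    def __init__(self, upload: Callable[[bytes], None], retries: int = 3,
                 backoff: float = 0.01) -> None:
        self.upload = upload      # stands in for the backend storage
        self.retries = retries
        self.backoff = backoff
        self.buffer: List[bytes] = []

    def write(self, data: bytes) -> int:
        # Returns immediately; nothing has been persisted yet.
        self.buffer.append(data)
        return len(data)

    def flush(self) -> None:
        # Everything buffered must reach the backend before close() returns.
        data = b"".join(self.buffer)
        for attempt in range(self.retries):
            try:
                self.upload(data)
            except OSError:
                if attempt + 1 < self.retries:
                    time.sleep(self.backoff * 2 ** attempt)  # back off, retry
                continue
            self.buffer.clear()
            return
        raise OSError(errno.EIO, "backend write failed after retries")
```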

At this point cp can still be interrupted with Ctrl+C or kill, because JuiceFS implements interrupt handling for its file system operations. It aborts the current operation (e.g. flush) and returns EINTR, so applications accessing JuiceFS can be interrupted when network failures occur.

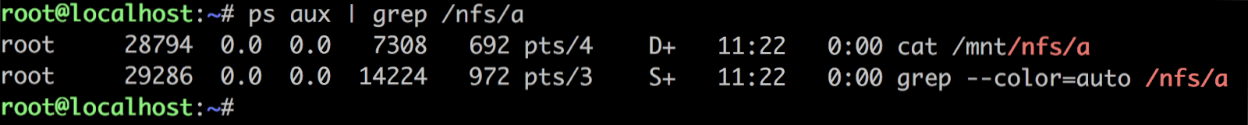
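The same kind of retry loop can also handle interrupts. The hypothetical sketch below checks an `interrupted` flag between retries and returns -EINTR, following the libfuse convention of returning negative errno values from handlers. It does not show how the JuiceFS client actually receives FUSE interrupt requests.

```python
import errno
import threading
from typing import Callable


def interruptible_flush(try_upload: Callable[[], bool],
                        interrupted: threading.Event,
                        retry_interval: float = 0.05) -> int:
    """Retry `try_upload` until it succeeds or an interrupt arrives.

    Returns 0 on success, or -EINTR if `interrupted` is set first, so the
    application's close() fails with EINTR instead of hanging.
    """
    while not try_upload():
        # Event.wait doubles as the retry delay and returns True early
        # once the interrupt flag has been set.
        if interrupted.wait(retry_interval):
            return -errno.EINTR
    return 0
```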

If we now stop the JuiceFS client process so that it can no longer handle any FUSE requests (including interrupt requests), cp can no longer be killed, not even with kill -9, and ps shows that it is in the D state.
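To check whether kill -9 actually took effect, you can send SIGKILL and then watch the process state in /proc. In the sketch below (Linux only; `state_of` and `kill_and_check` are hypothetical helper names), a process that disappears or becomes a zombie counts as dead. A process that still reports D after the timeout is stuck in uninterruptible sleep with the signal still pending.

```python
import os
import signal
import time


def state_of(pid: int) -> str:
    """State letter from /proc/<pid>/stat, or '' if the process is gone."""
    try:
        with open(f"/proc/{pid}/stat") as f:
            data = f.read()
    except (FileNotFoundError, ProcessLookupError):
        return ""
    return data[data.rindex(")") + 1:].split()[0]


def kill_and_check(pid: int, timeout: float = 2.0) -> str:
    """Send SIGKILL, then return "dead" or the state the process is stuck in."""
    os.kill(pid, signal.SIGKILL)
    deadline = time.monotonic() + timeout
    while True:
        state = state_of(pid)
        if state in ("", "Z", "X"):  # gone, zombie, or dead
            return "dead"
        if time.monotonic() >= deadline:
            return state  # e.g. "D" for uninterruptible sleep
        time.sleep(0.02)
```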

However, we can still view its kernel call stack with cat /proc/1592/stack.

The kernel call stack shows that cp is stuck in a FUSE flush call. As soon as the JuiceFS client process is resumed, the flush can be interrupted and cp exits.

Operations such as close, which matter for data safety, are not restartable. They therefore cannot be interrupted at will (for example, by SIGKILL) until the FUSE implementation responds to the interrupt.

Therefore, as long as the JuiceFS client process is healthy and can respond to interrupts, you don’t have to worry about applications accessing JuiceFS getting stuck. Alternatively, killing the JuiceFS client process ends the current mount point and interrupts all applications that are accessing it.