Introduction

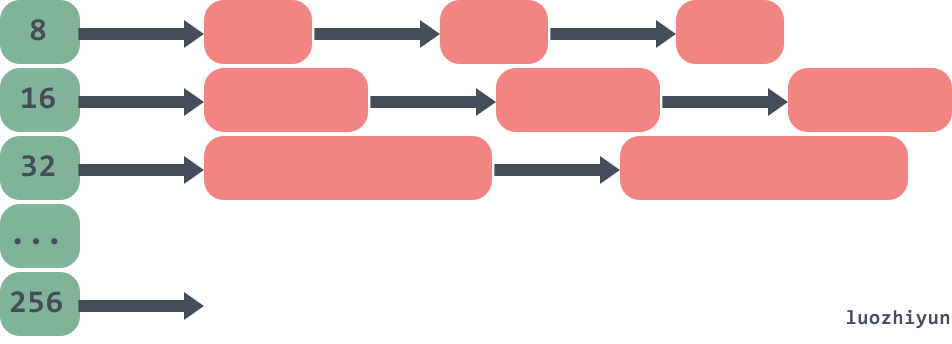

The Go language’s memory allocator takes its cue from TCMalloc’s design for high-speed memory allocation. The core idea is to use a multi-level cache to sort objects by size and implement different allocation strategies by class.

Information about TCMalloc can be found here: http://goog-perftools.sourceforge.net/doc/tcmalloc.html.

If the object to be allocated is a small object (<= 32k), there is a lock-free cache of small objects in each thread that can be allocated in a straightforward and efficient lock-free manner.

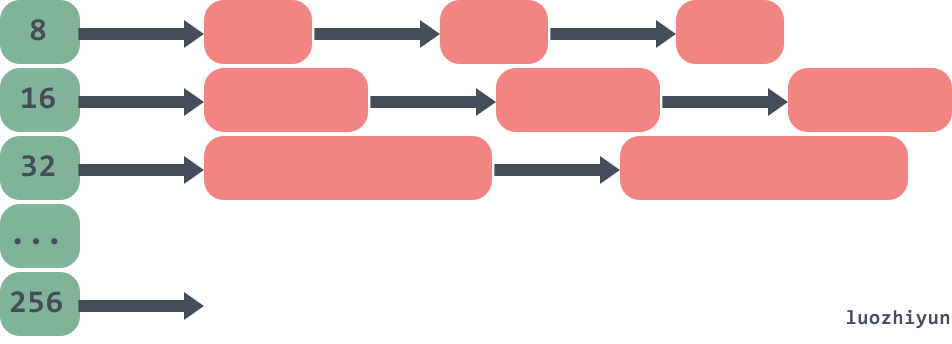

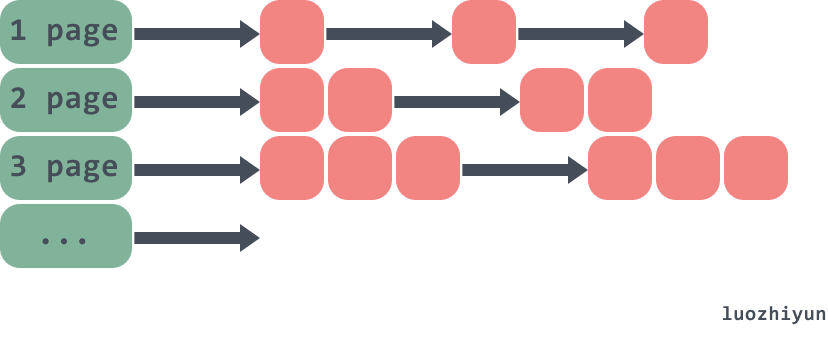

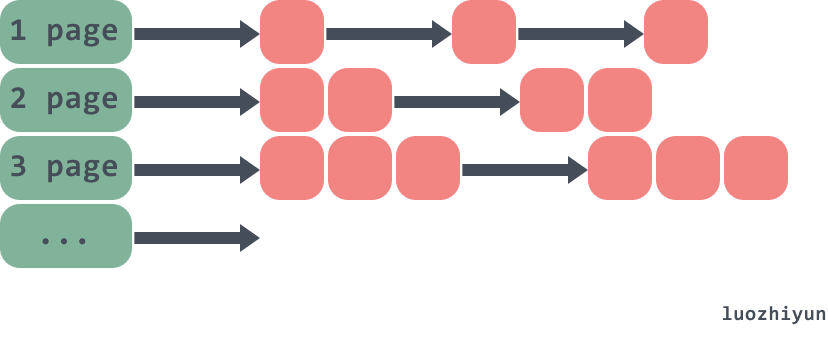

As follows: objects are divided into chained tables in different memory size groups.

If it is a large object (>32k), then the page heap is allocated. as follows.

Although the go memory allocator was originally based on tcmalloc, it is now very different. So some of the structure above will have changed slightly, and I’ll ramble on about it below.

Because the source code for memory allocation is quite complex, it is important to see how breakpoint assembly is used for debugging before proceeding with the source code analysis.

Breakpoint debugging assembly

The Go language currently supports several debuggers, GDB, LLDB and Delve. Only Delve is a debugging tool designed and developed specifically for the Go language. Moreover, Delve itself is developed in Go and provides the same support for the Windows platform. In this section we briefly explain how to debug Go assembler based on Delve. Project address: https://github.com/go-delve/delve

Installation.

1

|

go get github.com/go-delve/delve/cmd/dlv

|

First write an example - test.go.

1

2

3

4

5

6

7

8

9

10

11

|

package main

import "fmt"

type A struct {

test string

}

func main() {

a := new(A)

fmt.Println(a)

}

|

Then enter the package directory on the command line and type dlv debug to debug.

1

2

|

PS C:\document\code\test_go\src> dlv debug

Type 'help' for list of commands.

|

You can then use the break command to set a breakpoint on the main method of the main package.

1

2

|

(dlv) break main.main

Breakpoint 1 set at 0x4bd30a for main.main() c:/document/code/test_go/src/test.go:8

|

See all the breakpoints that have been set via breakpoints.

1

2

3

4

5

|

(dlv) breakpoints

Breakpoint runtime-fatal-throw at 0x4377e0 for runtime.fatalthrow() c:/software/go/src/runtime/panic.go:1162 (0)

Breakpoint unrecovered-panic at 0x437860 for runtime.fatalpanic() c:/software/go/src/runtime/panic.go:1189 (0)

print runtime.curg._panic.arg

Breakpoint 1 at 0x4bd30a for main.main() c:/document/code/test_go/src/test.go:8 (0)

|

The continue command allows the program to run to the next breakpoint.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

(dlv) continue

> main.main() c:/document/code/test_go/src/test.go:8 (hits goroutine(1):1 total:1) (PC: 0x4bd30a)

3: import "fmt"

4:

5: type A struct {

6: test string

7: }

=> 8: func main() {

9: a := new(A)

10: fmt.Println(a)

11: }

12:

13:

|

View the assembly code corresponding to the main function with the disassemble command.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

|

(dlv) disassemble

TEXT main.main(SB) C:/document/code/test_go/src/test.go

test.go:8 0x4bd2f0 65488b0c2528000000 mov rcx, qword ptr gs:[0x28]

test.go:8 0x4bd2f9 488b8900000000 mov rcx, qword ptr [rcx]

test.go:8 0x4bd300 483b6110 cmp rsp, qword ptr [rcx+0x10]

test.go:8 0x4bd304 0f8697000000 jbe 0x4bd3a1

=> test.go:8 0x4bd30a* 4883ec78 sub rsp, 0x78

test.go:8 0x4bd30e 48896c2470 mov qword ptr [rsp+0x70], rbp

test.go:8 0x4bd313 488d6c2470 lea rbp, ptr [rsp+0x70]

test.go:9 0x4bd318 488d0581860100 lea rax, ptr [__image_base__+874912]

test.go:9 0x4bd31f 48890424 mov qword ptr [rsp], rax

test.go:9 0x4bd323 e8e800f5ff call $runtime.newobject

test.go:9 0x4bd328 488b442408 mov rax, qword ptr [rsp+0x8]

test.go:9 0x4bd32d 4889442430 mov qword ptr [rsp+0x30], rax

test.go:10 0x4bd332 4889442440 mov qword ptr [rsp+0x40], rax

test.go:10 0x4bd337 0f57c0 xorps xmm0, xmm0

test.go:10 0x4bd33a 0f11442448 movups xmmword ptr [rsp+0x48], xmm0

test.go:10 0x4bd33f 488d442448 lea rax, ptr [rsp+0x48]

test.go:10 0x4bd344 4889442438 mov qword ptr [rsp+0x38], rax

test.go:10 0x4bd349 8400 test byte ptr [rax], al

test.go:10 0x4bd34b 488b4c2440 mov rcx, qword ptr [rsp+0x40]

test.go:10 0x4bd350 488d15099f0000 lea rdx, ptr [__image_base__+815712]

test.go:10 0x4bd357 4889542448 mov qword ptr [rsp+0x48], rdx

test.go:10 0x4bd35c 48894c2450 mov qword ptr [rsp+0x50], rcx

test.go:10 0x4bd361 8400 test byte ptr [rax], al

test.go:10 0x4bd363 eb00 jmp 0x4bd365

test.go:10 0x4bd365 4889442458 mov qword ptr [rsp+0x58], rax

test.go:10 0x4bd36a 48c744246001000000 mov qword ptr [rsp+0x60], 0x1

test.go:10 0x4bd373 48c744246801000000 mov qword ptr [rsp+0x68], 0x1

test.go:10 0x4bd37c 48890424 mov qword ptr [rsp], rax

test.go:10 0x4bd380 48c744240801000000 mov qword ptr [rsp+0x8], 0x1

test.go:10 0x4bd389 48c744241001000000 mov qword ptr [rsp+0x10], 0x1

test.go:10 0x4bd392 e869a0ffff call $fmt.Println

test.go:11 0x4bd397 488b6c2470 mov rbp, qword ptr [rsp+0x70]

test.go:11 0x4bd39c 4883c478 add rsp, 0x78

test.go:11 0x4bd3a0 c3 ret

test.go:8 0x4bd3a1 e82a50faff call $runtime.morestack_noctxt

.:0 0x4bd3a6 e945ffffff jmp $main.main

|

We can now use break breakpoints to calls to the runtime.newobject function.

1

2

|

(dlv) break runtime.newobject

Breakpoint 2 set at 0x40d426 for runtime.newobject() c:/software/go/src/runtime/malloc.go:1164

|

Type continue to jump to the location of the breakpoint.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

(dlv) continue

> runtime.newobject() c:/software/go/src/runtime/malloc.go:1164 (hits goroutine(1):1 total:1) (PC: 0x40d426)

Warning: debugging optimized function

1159: }

1160:

1161: // implementation of new builtin

1162: // compiler (both frontend and SSA backend) knows the signature

1163: // of this function

=>1164: func newobject(typ *_type) unsafe.Pointer {

1165: return mallocgc(typ.size, typ, true)

1166: }

1167:

1168: //go:linkname reflect_unsafe_New reflect.unsafe_New

1169: func reflect_unsafe_New(typ *_type) unsafe.Pointer {

|

The print command is used to view the data of the typ.

1

2

|

(dlv) print typ

*runtime._type {size: 16, ptrdata: 8, hash: 875453117, tflag: tflagUncommon|tflagExtraStar|tflagNamed (7), align: 8, fieldAlign: 8, kind: 25, equal: runtime.strequal, gcdata: *1, str: 5418, ptrToThis: 37472}

|

You can see that the size printed here is 16bytes, because we have just one string type field inside the A structure.

Once you get to the mallocgc method, look at the arguments and local variables of the function with the args and locals commands.

1

2

3

4

5

6

7

|

(dlv) args

size = (unreadable could not find loclist entry at 0x8b40 for address 0x40ca73)

typ = (*runtime._type)(0x4d59a0)

needzero = true

~r3 = (unreadable empty OP stack)

(dlv) locals

(no locals)

|

Entrance to each object

As we can tell from the assembly, all the function entries are runtime.mallocgc, but the following two objects need some attention.

int64 object

runtime.convT64

1

2

3

4

5

6

7

8

9

|

func convT64(val uint64) (x unsafe.Pointer) {

if val < uint64(len(staticuint64s)) {

x = unsafe.Pointer(&staticuint64s[val])

} else {

x = mallocgc(8, uint64Type, false)

*(*uint64)(x) = val

}

return

}

|

This code indicates that if a value of type int64 is less than 256 and is fetched directly as a cached value, no memory allocation will be made for this value.

string object

runtime.convTstring

1

2

3

4

5

6

7

8

9

|

func convTstring(val string) (x unsafe.Pointer) {

if val == "" {

x = unsafe.Pointer(&zeroVal[0])

} else {

x = mallocgc(unsafe.Sizeof(val), stringType, true)

*(*string)(x) = val

}

return

}

|

As shown by this code, if a string object is created as “”, then a fixed address value will be returned directly and no memory allocation will be done.

Debugging examples

You can also use the following example to debug when debugging, because the object allocation inside go is divided into large objects, small objects and micro-objects, so the following three methods are prepared to debug when creating each of the three kinds of objects.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

|

type smallobj struct {

arr [1 << 10]byte

}

type largeobj struct {

arr [1 << 26]byte

}

func tiny() {

y := 100000

fmt.Println(y)

}

func large() {

large := largeobj{}

println(&large)

}

func small() {

small := smallobj{}

print(&small)

}

func main() {

//tiny()

//small()

//large()

}

|

Analysis

Components of the allocator

Memory allocation is done by the memory allocator, which consists of 3 components: runtime.mspan, runtime.mcache, runtime.mcentral, runtime.mheap.

runtime.mspan

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

|

type mspan struct {

// 上一个节点

next *mspan

// 下一个节点

prev *mspan

// span集合

list *mSpanList

// span开始的地址值

startAddr uintptr

// span管理的页数

npages uintptr

// Object n starts at address n*elemsize + (start << pageShift).

// 空闲节点的索引

freeindex uintptr

// span中存放的对象数量

nelems uintptr

// 用于快速查找内存中未被使用的内存

allocCache uint64

// 用于计算mspan管理了多少内存

elemsize uintptr

// span的结束地址值

limit uintptr

...

}

|

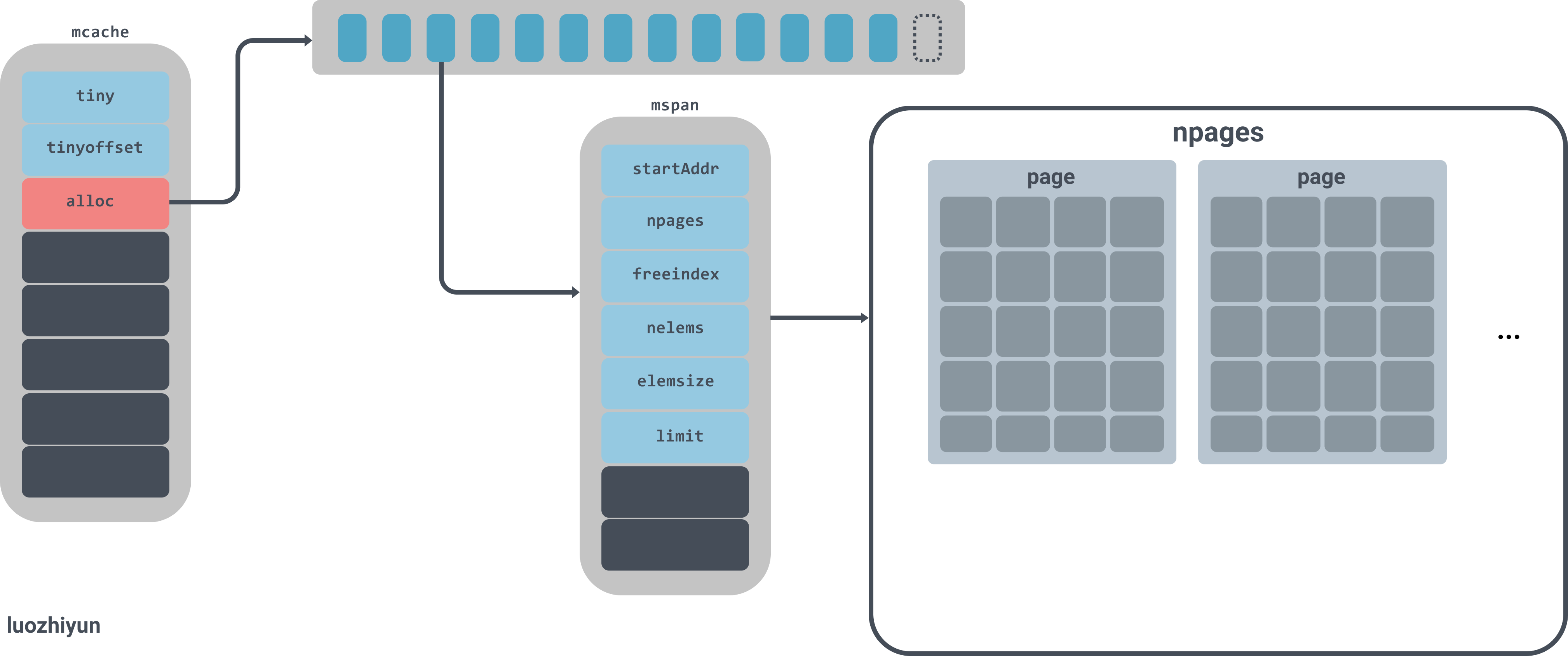

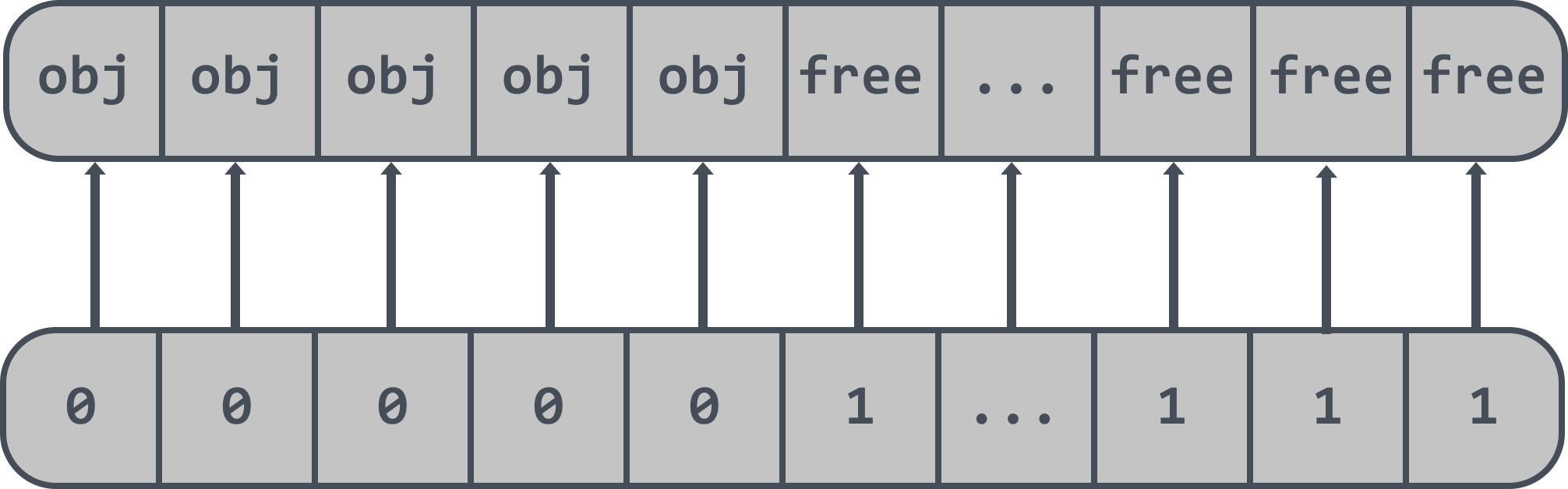

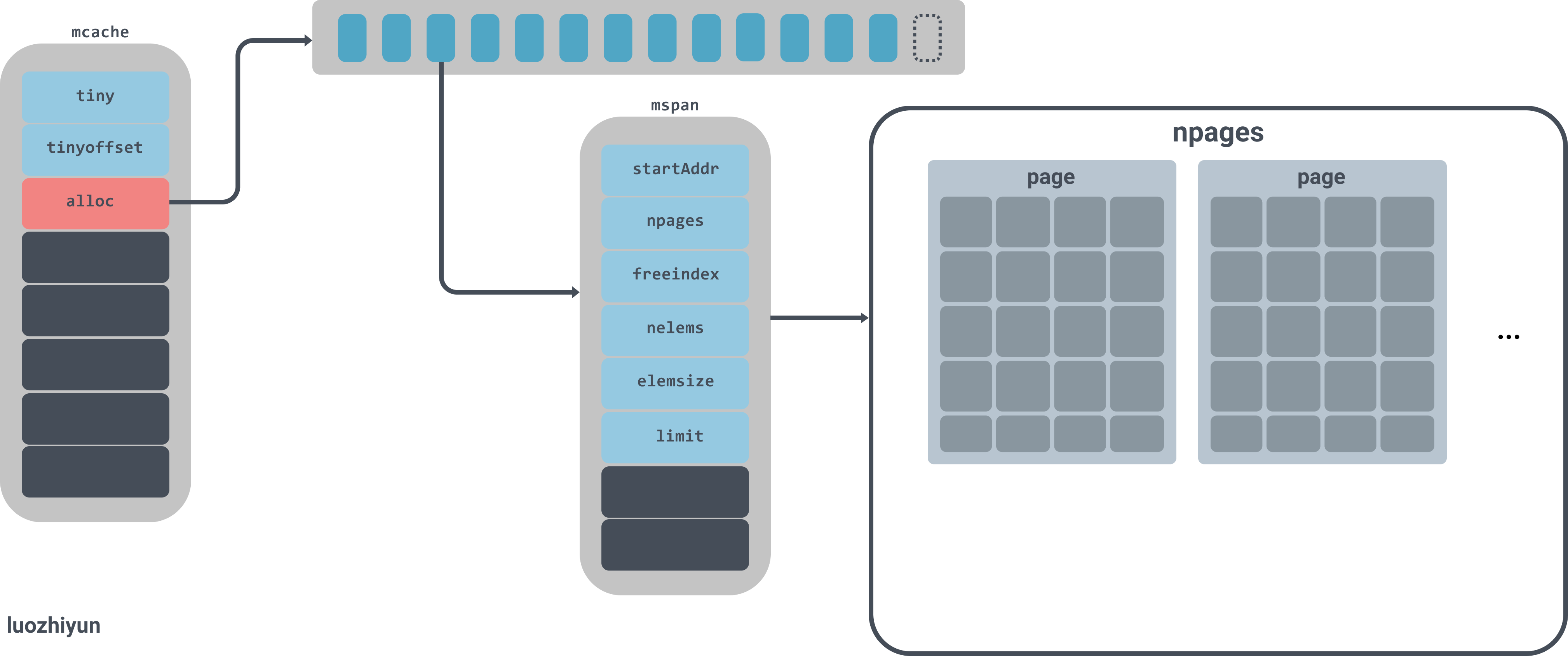

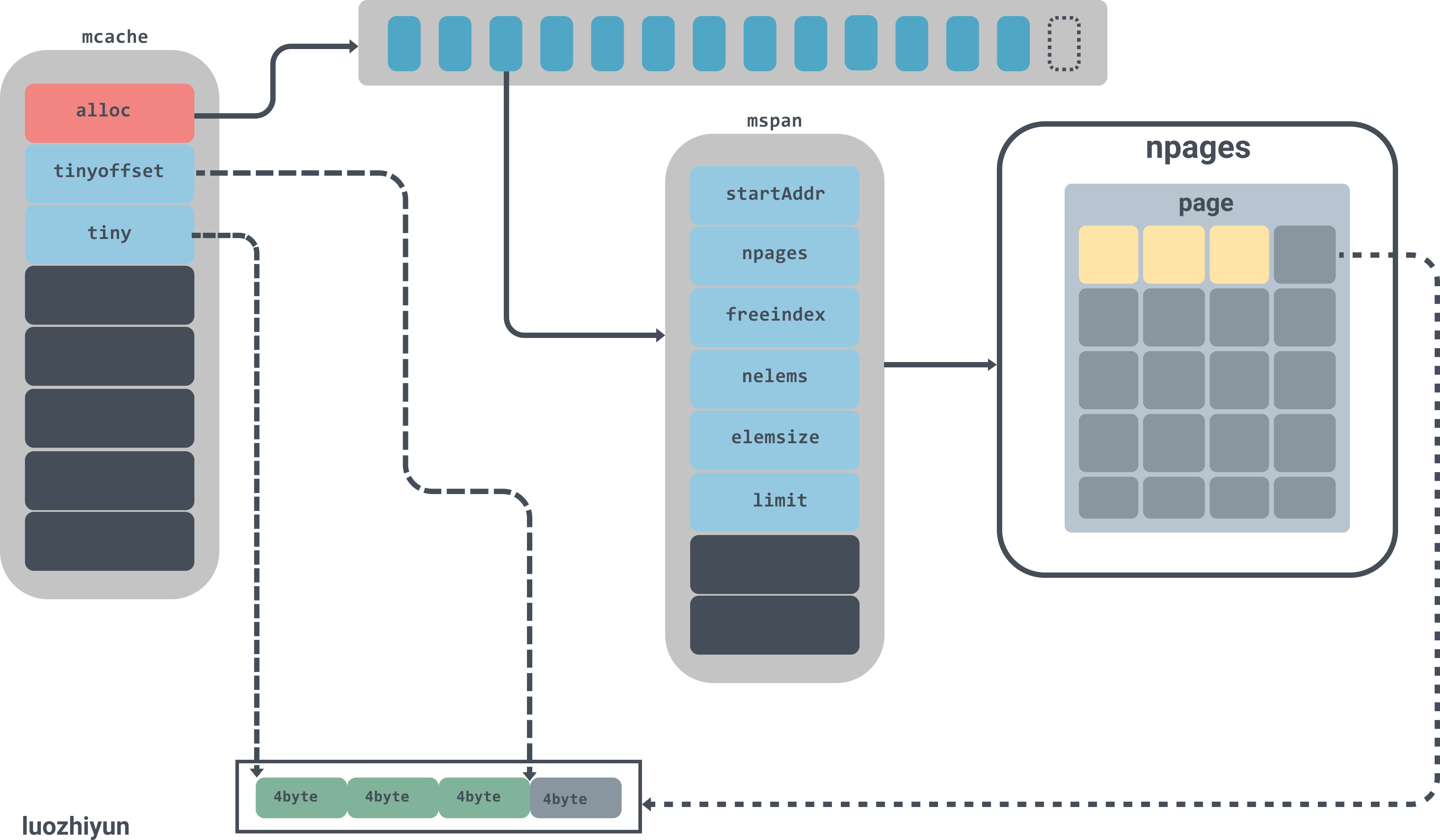

runtime.mspan is the smallest granular unit inside the memory manager, and all objects are managed under mspan.

mspan is a linked table with upper and lower pointers.

npages represents the number of heap pages managed by mspan.

freeindex is the index of a free object.

nelems represents how many objects can be stored in this mspan and is equal to (npages * pageSize)/elemsize.

allocCache is used to quickly find unused memory addresses.

elemsize indicates how many bytes an object will take up, equal to class_to_size[sizeclass], note that sizeclass will sizeclass method each time it is fetched, will sizeclass>>1.

limit indicates the address value of the end of span, equal to startAddr+ npages*pageSize.

The example diagram is as follows.

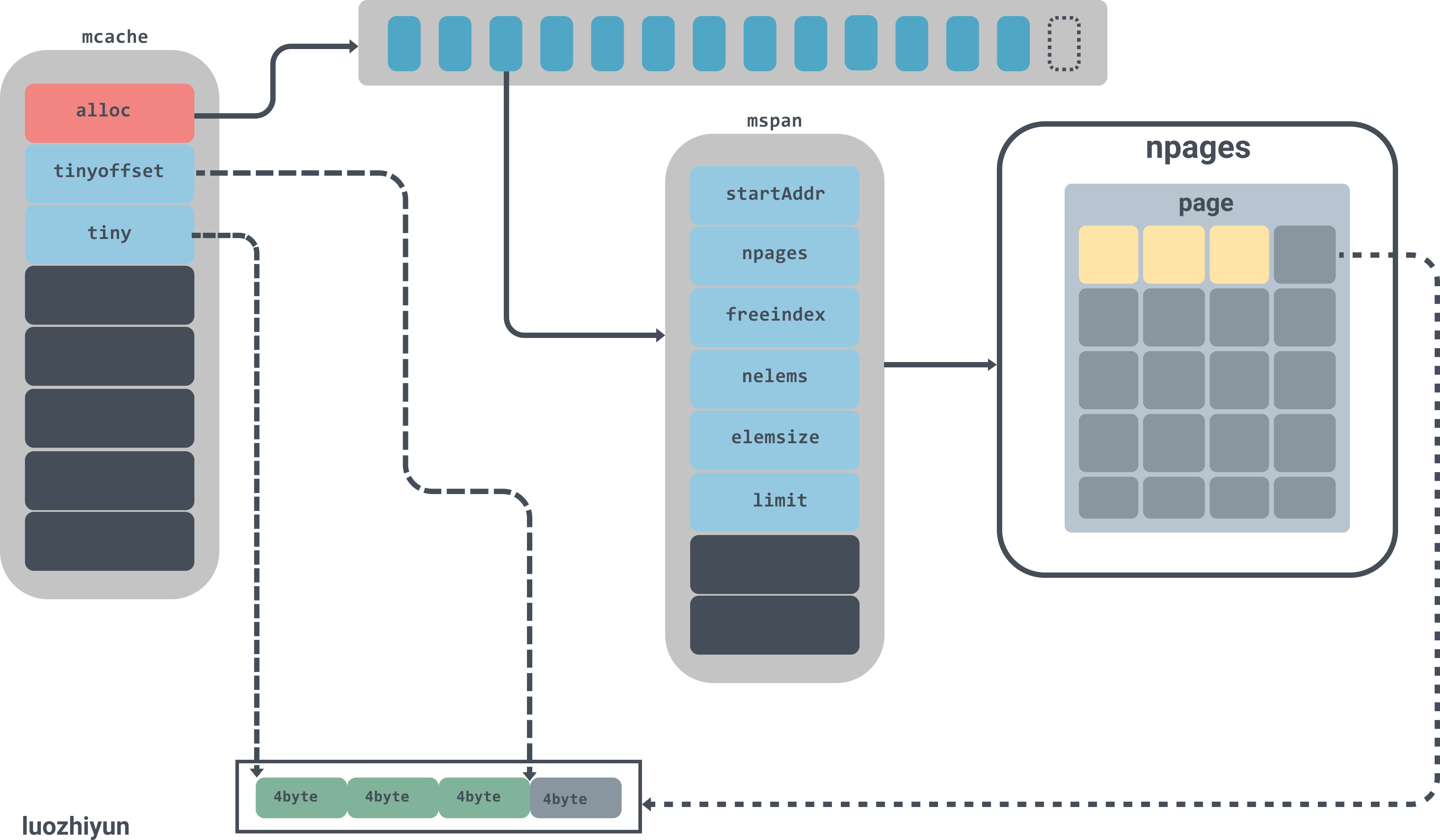

The figure alloc is an mspan array with 137 elements. The mspan array manages several pages of memory, each page is 8k, and the number of pages is determined by the spanclass specification.

runtime.mcache

1

2

3

4

5

6

7

8

9

10

11

12

|

type mcache struct {

...

// 申请小对象的起始地址

tiny uintptr

// 从起始地址tiny开始的偏移量

tinyoffset uintptr

// tiny对象分配的数量

local_tinyallocs uintptr // number of tiny allocs not counted in other stats

// mspan对象集合,numSpanClasses=134

alloc [numSpanClasses]*mspan // spans to allocate from, indexed by spanClass

...

}

|

runtime.mcache is tied to the concurrency model GPM’s P. When allocating micro- and small objects it goes to runtime.mcache first, and each processor is allocated a thread cache runtime.mcache, so allocations from runtime.mcache are done without locking.

There is an alloc array in runtime.mcache, a collection of runtime.mspan, which is the basic unit of memory management in Go. For objects of [16B,32KB] this part of the span is used for memory allocation, so all objects of this size in this interval are looked at from the alloc array, as will be analysed below.

runtime.mcentral

1

2

3

4

5

6

7

8

9

10

11

|

type mcentral struct {

//spanClass Id

spanclass spanClass

// 空闲的span列表

partial [2]spanSet // list of spans with a free object

// 已经被使用的span列表

full [2]spanSet // list of spans with no free objects

//分配mspan的累积计数

nmalloc uint64

}

|

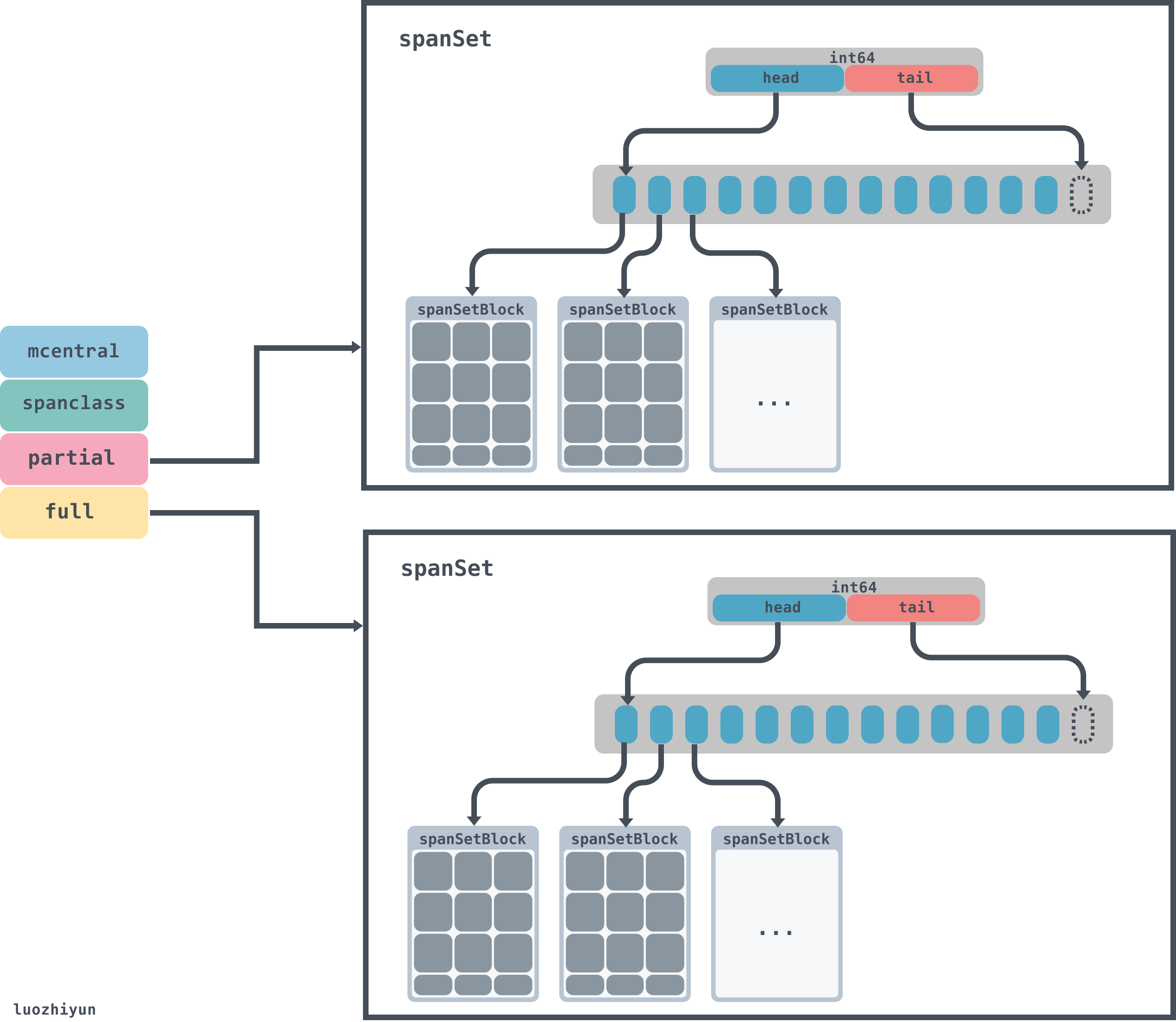

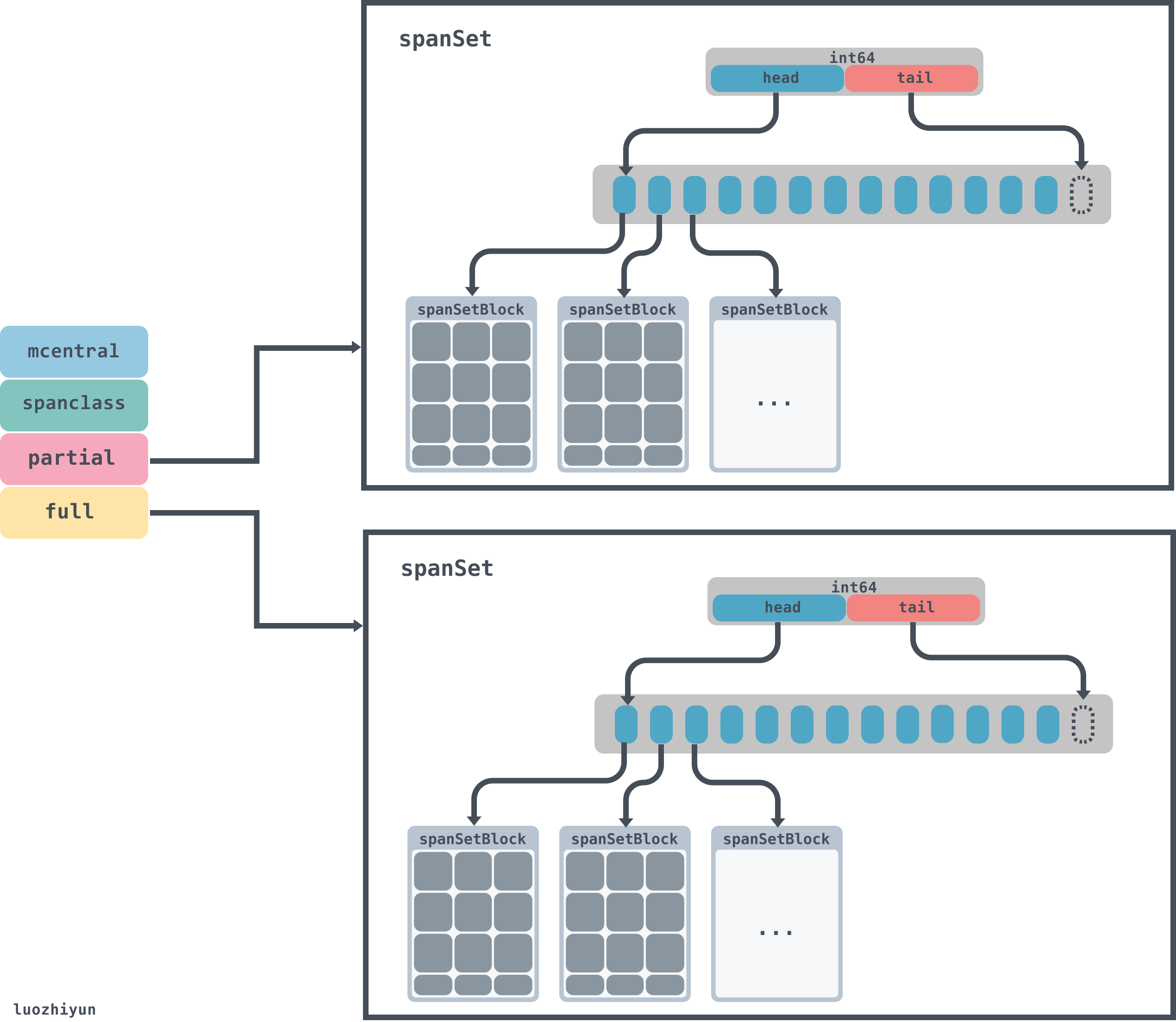

When there is not enough space in runtime.mcache, it will go to runtime.mcentral and request the mspan of the corresponding size. mspan will be fetched from the partial list and the full list, and will be fetched in a lock-free way.

In runtime.mcentral, there is the spanclass identifier, and the spanclass indicates the type of this mcentral, as we will see below, when allocating objects of size [16B,32KB], the size of the object is divided into 67 groups.

1

|

var class_to_size = [_NumSizeClasses]uint16{0, 8, 16, 32, 48, 64, 80, 96, 112, 128, 144, 160, 176, 192, 208, 224, 240, 256, 288, 320, 352, 384, 416, 448, 480, 512, 576, 640, 704, 768, 896, 1024, 1152, 1280, 1408, 1536, 1792, 2048, 2304, 2688, 3072, 3200, 3456, 4096, 4864, 5376, 6144, 6528, 6784, 6912, 8192, 9472, 9728, 10240, 10880, 12288, 13568, 14336, 16384, 18432, 19072, 20480, 21760, 24576, 27264, 28672, 32768}

|

So runtime.mcentral is responsible for only one spanclass specification type.

The data in runtime.mcentral will be hosted by two spanSet, partial for the free list and full for the used list.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

|

type headTailIndex uint64

type spanSet struct {

// lock

spineLock mutex

// 数据块的指针

spine unsafe.Pointer // *[N]*spanSetBlock, accessed atomically

// len

spineLen uintptr // Spine array length, accessed atomically

// cap

spineCap uintptr // Spine array cap, accessed under lock

// 头尾的指针,前32位是头指针,后32位是尾指针

index headTailIndex

}

|

The spanSet data structure has a head and tail pointer consisting of an index, which is fetched from the head when popping data and put in from the tail when pushing data. spine is equivalent to a pointer to a data block, and the exact position of each data block can be calculated by the position of the head and tail, which is represented by the spanSetBlock.

1

2

3

4

5

|

const spanSetBlockEntries = 512

type spanSetBlock struct {

...

spans [spanSetBlockEntries]*mspan

}

|

The spanSetBlock is a data block that holds the mspan and will contain a data pointer to the 512 mspan stored inside. So the overall data structure of mcentral is as follows.

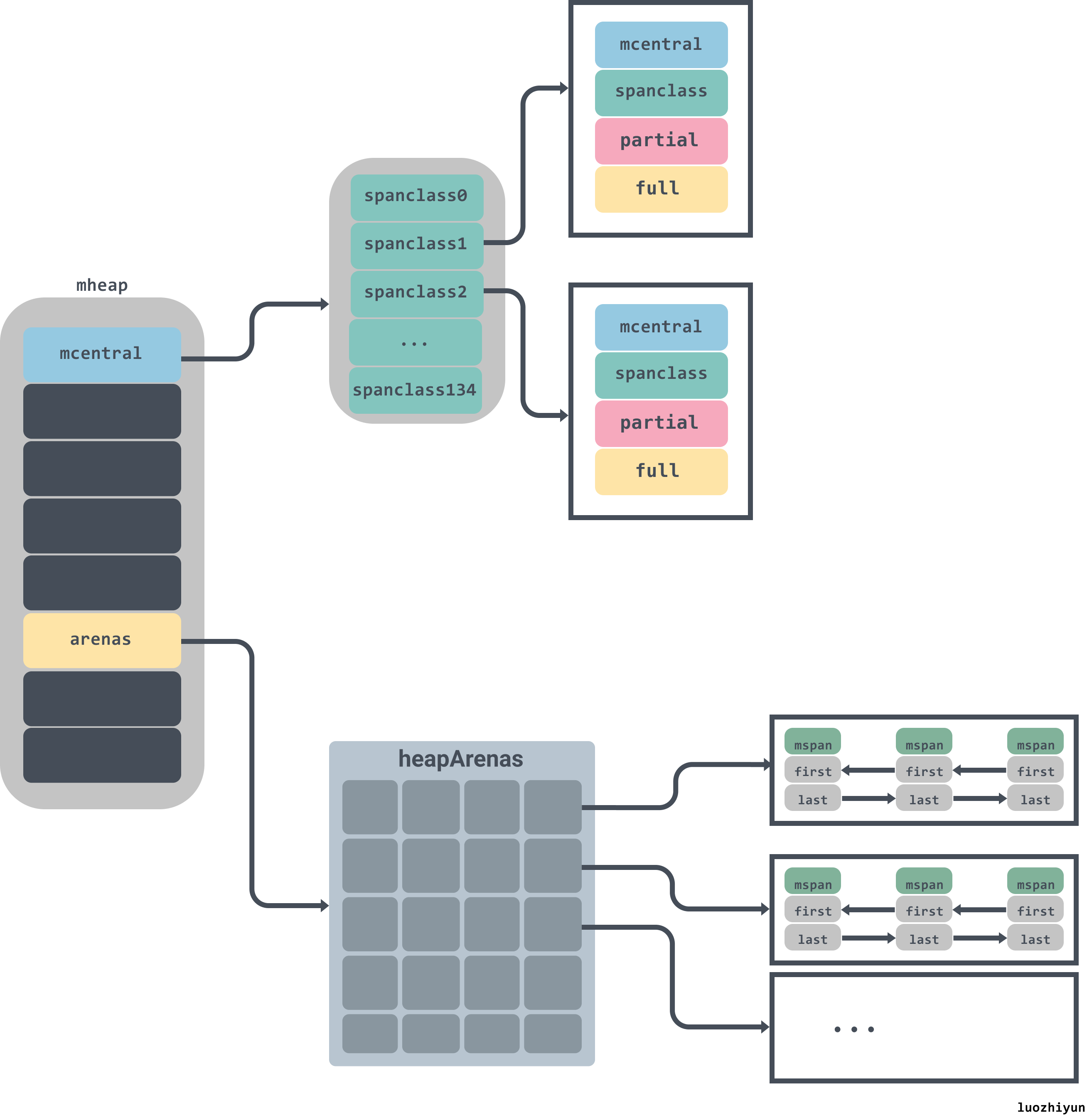

runtime.mheap

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

type mheap struct {

lock mutex

pages pageAlloc // page allocation data structure

//arenas数组集合,一个二维数组

arenas [1 << arenaL1Bits]*[1 << arenaL2Bits]*heapArena

//各个规格的mcentral集合

central [numSpanClasses]struct {

mcentral mcentral

pad [cpu.CacheLinePadSize - unsafe.Sizeof(mcentral{})%cpu.CacheLinePadSize]byte

}

...

}

|

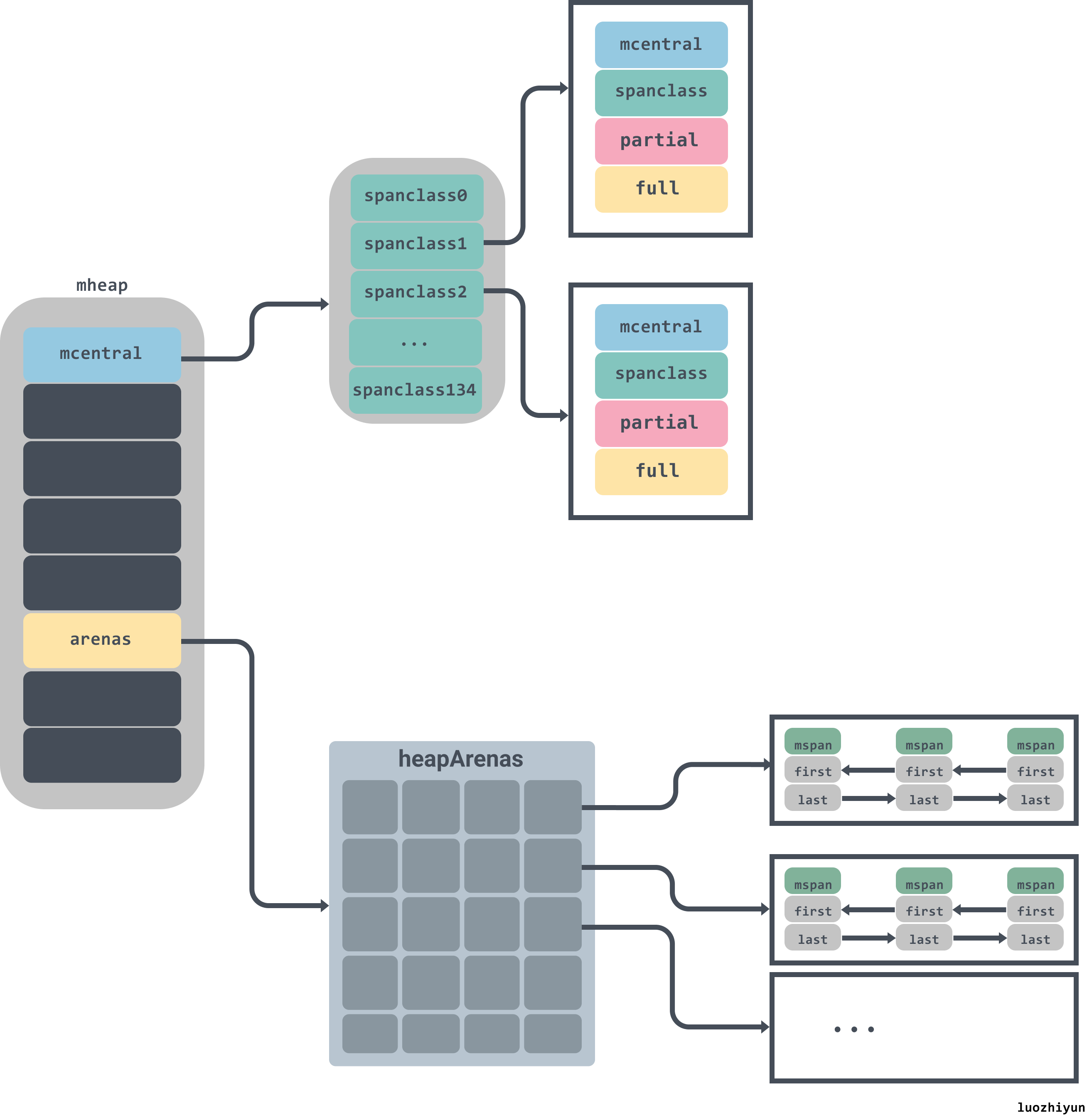

For runtime.mheap attention needs to be paid to central and arenas. central is a collection of mcentral for each specification, which is created by traversing class_to_size during initialization; arenas is a two-dimensional array that manages memory space. arenas consists of multiple runtime.heapArena, each of which manages 64MB of memory space.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

const (

pageSize = 8192 // 8KB

heapArenaBytes = 67108864 // 64MB

pagesPerArena = heapArenaBytes / pageSize // 8192

)

type heapArena struct {

bitmap [heapArenaBitmapBytes]byte

spans [pagesPerArena]*mspan

pageInUse [pagesPerArena / 8]uint8

pageMarks [pagesPerArena / 8]uint8

zeroedBase uintptr

}

|

Note that the 64M heapArenaBytes above is only displayed on 64-bit machines other than windows, where it is 4MB. see the official notes below for details.

1

2

3

4

5

6

|

// Platform Addr bits Arena size L1 entries L2 entries

// -------------- --------- ---------- ---------- -----------

// */64-bit 48 64MB 1 4M (32MB)

// windows/64-bit 48 4MB 64 1M (8MB)

// */32-bit 32 4MB 1 1024 (4KB)

// */mips(le) 31 4MB 1 512 (2KB)

|

L1 entries and L2 entries represent the one-dimensional and two-dimensional values of arenas in runtime.mheap respectively.

Allocating memory to objects

We know from decompiling the source code that all objects on the heap are allocated memory by calling the runtime.newobject function, which calls runtime.mallocgc.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

|

//创建一个新的对象

func newobject(typ *_type) unsafe.Pointer {

//size表示该对象的大小

return mallocgc(typ.size, typ, true)

}

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

dataSize := size

// 获取mcache,用于处理微对象和小对象的分配

c := gomcache()

var x unsafe.Pointer

// 表示对象是否包含指针,true表示对象里没有指针

noscan := typ == nil || typ.ptrdata == 0

// maxSmallSize=32768 32k

if size <= maxSmallSize {

// maxTinySize= 16 bytes

if noscan && size < maxTinySize {

...

} else {

...

}

// 大于 32 Kb 的内存分配,通过 mheap 分配

} else {

...

}

...

return x

}

|

As you can tell from mallocgc’s code, mallocgc allocates memory in 3 classes according to the size of the object.

- small objects smaller than 16bytes.

- micro-objects between 16bytes and 32k.

- large objects larger than 32 Kbytes.

Allocation of large objects

1

2

3

4

5

6

7

8

9

10

11

12

13

14

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

var s *mspan

shouldhelpgc = true

systemstack(func() {

s = largeAlloc(size, needzero, noscan)

})

s.freeindex = 1

s.allocCount = 1

x = unsafe.Pointer(s.base())

size = s.elemsize

...

return x

}

|

From the above we can see that when allocating space larger than 32KB, a largeAlloc is used directly to allocate an mspan.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

|

func largeAlloc(size uintptr, needzero bool, noscan bool) *mspan {

// _PageSize=8k,也就是表明对象太大,溢出

if size+_PageSize < size {

throw("out of memory")

}

// _PageShift==13,计算需要分配的页数

npages := size >> _PageShift

// 如果不是整数,多出来一些,需要加1

if size&_PageMask != 0 {

npages++

}

...

// 从堆上分配

s := mheap_.alloc(npages, makeSpanClass(0, noscan), needzero)

if s == nil {

throw("out of memory")

}

...

return s

}

|

The allocation of memory is done on a per-page basis, with each page having a size of _PageSize (8K), and then it is necessary to determine how many pages need to be divided according to the size passed in, and finally call alloc to allocate from the heap.

1

2

3

4

5

6

7

8

9

10

11

12

13

|

func (h *mheap) alloc(npages uintptr, spanclass spanClass, needzero bool) *mspan {

var s *mspan

systemstack(func() {

if h.sweepdone == 0 {

// 回收一部分内存

h.reclaim(npages)

}

// 进行内存分配

s = h.allocSpan(npages, false, spanclass, &memstats.heap_inuse)

})

...

return s

}

|

Continue with the implementation of allocSpan.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

|

const pageCachePages = 8 * unsafe.Sizeof(pageCache{}.cache)

func (h *mheap) allocSpan(npages uintptr, manual bool, spanclass spanClass, sysStat *uint64) (s *mspan) {

// Function-global state.

gp := getg()

base, scav := uintptr(0), uintptr(0)

pp := gp.m.p.ptr()

// 申请的内存比较小,尝试从pcache申请内存

if pp != nil && npages < pageCachePages/4 {

c := &pp.pcache

if c.empty() {

lock(&h.lock)

*c = h.pages.allocToCache()

unlock(&h.lock)

}

base, scav = c.alloc(npages)

if base != 0 {

s = h.tryAllocMSpan()

if s != nil && gcBlackenEnabled == 0 && (manual || spanclass.sizeclass() != 0) {

goto HaveSpan

}

}

}

lock(&h.lock)

// 内存比较大或者线程的页缓存中内存不足,从mheap的pages上获取内存

if base == 0 {

base, scav = h.pages.alloc(npages)

// 内存也不够,那么进行扩容

if base == 0 {

if !h.grow(npages) {

unlock(&h.lock)

return nil

}

// 重新申请内存

base, scav = h.pages.alloc(npages)

// 内存不足,抛出异常

if base == 0 {

throw("grew heap, but no adequate free space found")

}

}

}

if s == nil {

// 分配一个mspan对象

s = h.allocMSpanLocked()

}

unlock(&h.lock)

HaveSpan:

// 设置参数初始化

s.init(base, npages)

...

// 建立mheap与mspan之间的联系

h.setSpans(s.base(), npages, s)

...

return s

}

|

Here another determination is made based on the size of the memory to be allocated.

- If the number of pages to be allocated is less than

pageCachePages/4=64/4=16 pages, then an attempt is made to request memory from the pcache.

- If the memory requested is large or the thread is running low on memory in the page cache, memory is allocated from the page heap via

runtime.pageAlloc.alloc.

- if there is not enough memory on the page heap, then the grow method of mheap requests memory from the system and then calls pageAlloc’s alloc to allocate memory.

Here’s a look at grow’s request for memory from the operating system.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

|

func (h *mheap) grow(npage uintptr) bool {

// We must grow the heap in whole palloc chunks.

ask := alignUp(npage, pallocChunkPages) * pageSize

totalGrowth := uintptr(0)

nBase := alignUp(h.curArena.base+ask, physPageSize)

// 内存不够则调用sysAlloc申请内存

if nBase > h.curArena.end {

av, asize := h.sysAlloc(ask)

if av == nil {

print("runtime: out of memory: cannot allocate ", ask, "-byte block (", memstats.heap_sys, " in use)\n")

return false

}

// 重新设置curArena的值

if uintptr(av) == h.curArena.end {

h.curArena.end = uintptr(av) + asize

} else {

if size := h.curArena.end - h.curArena.base; size != 0 {

h.pages.grow(h.curArena.base, size)

totalGrowth += size

}

h.curArena.base = uintptr(av)

h.curArena.end = uintptr(av) + asize

}

nBase = alignUp(h.curArena.base+ask, physPageSize)

}

...

return true

}

|

grow will use the end value of curArena to determine if it needs to request memory from the system; if end is less than nBase then the runtime.mheap.sysAlloc method will be called to request more memory from the OS.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

|

func (h *mheap) sysAlloc(n uintptr) (v unsafe.Pointer, size uintptr) {

n = alignUp(n, heapArenaBytes)

// 在预先保留的内存中申请一块可以使用的空间

v = h.arena.alloc(n, heapArenaBytes, &memstats.heap_sys)

if v != nil {

size = n

goto mapped

}

// 根据页堆的arenaHints在目标地址上尝试扩容

for h.arenaHints != nil {

hint := h.arenaHints

p := hint.addr

if hint.down {

p -= n

}

if p+n < p {

// We can't use this, so don't ask.

v = nil

} else if arenaIndex(p+n-1) >= 1<<arenaBits {

// Outside addressable heap. Can't use.

v = nil

} else {

// 从操作系统中申请内存

v = sysReserve(unsafe.Pointer(p), n)

}

if p == uintptr(v) {

// Success. Update the hint.

if !hint.down {

p += n

}

hint.addr = p

size = n

break

}

if v != nil {

sysFree(v, n, nil)

}

h.arenaHints = hint.next

h.arenaHintAlloc.free(unsafe.Pointer(hint))

}

...

// 将内存由Reserved转为Prepared

sysMap(v, size, &memstats.heap_sys)

mapped:

// Create arena metadata.

// 初始化一个heapArena来管理刚刚申请的内存

for ri := arenaIndex(uintptr(v)); ri <= arenaIndex(uintptr(v)+size-1); ri++ {

l2 := h.arenas[ri.l1()]

if l2 == nil {

l2 = (*[1 << arenaL2Bits]*heapArena)(persistentalloc(unsafe.Sizeof(*l2), sys.PtrSize, nil))

if l2 == nil {

throw("out of memory allocating heap arena map")

}

atomic.StorepNoWB(unsafe.Pointer(&h.arenas[ri.l1()]), unsafe.Pointer(l2))

}

var r *heapArena

r = (*heapArena)(h.heapArenaAlloc.alloc(unsafe.Sizeof(*r), sys.PtrSize, &memstats.gc_sys))

...

// 将创建heapArena放入到arenas列表中

h.allArenas = h.allArenas[:len(h.allArenas)+1]

h.allArenas[len(h.allArenas)-1] = ri

atomic.StorepNoWB(unsafe.Pointer(&l2[ri.l2()]), unsafe.Pointer(r))

}

return

}

|

The sysAlloc method calls runtime.linearAlloc.alloc to request a piece of memory from the pre-reserved memory; if not, the sysReserve method is called to request memory from the operating system; finally, a heapArena is initialised to manage the memory just requested, and the created The heapArena is then placed in the list of arenas.

This concludes the allocation process for large objects.

Allocation of small objects

For objects between 16bytes and 32K the allocation is as follows.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

dataSize := size

// 获取mcache,用于处理微对象和小对象的分配

c := gomcache()

var x unsafe.Pointer

// 表示对象是否包含指针,true表示对象里没有指针

noscan := typ == nil || typ.ptrdata == 0

// maxSmallSize=32768 32k

if size <= maxSmallSize {

// maxTinySize= 16 bytes

if noscan && size < maxTinySize {

...

} else {

var sizeclass uint8

//计算 sizeclass

// smallSizeMax=1024

if size <= smallSizeMax-8 {

// smallSizeDiv=8

sizeclass = size_to_class8[(size+smallSizeDiv-1)/smallSizeDiv]

} else {

// largeSizeDiv=128,smallSizeMax = 1024

sizeclass = size_to_class128[(size-smallSizeMax+largeSizeDiv-1)/largeSizeDiv]

}

size = uintptr(class_to_size[sizeclass])

spc := makeSpanClass(sizeclass, noscan)

span := c.alloc[spc]

//从对应的 span 里面分配一个 object

v := nextFreeFast(span)

if v == 0 {

// mcache不够用了,则从 mcentral 申请内存到 mcache

v, span, shouldhelpgc = c.nextFree(spc)

}

x = unsafe.Pointer(v)

if needzero && span.needzero != 0 {

memclrNoHeapPointers(unsafe.Pointer(v), size)

}

}

...

}

...

return x

}

|

The sizeclass size is first calculated by pre-defining two arrays: size_to_class8 and size_to_class128. less than 1024 - 8 = 1016 (smallSizeMax=1024), use size_to_class8, otherwise use the array size_to_class128. class8, otherwise the array size_to_class128 is used.

For example, if you want to allocate 20 byte of memory, then sizeclass = size_to_calss8[(20+7)/8] = size_to_class8[3] = 3. Then the corresponding value of 32 is obtained from class_to_size[3], indicating that 32bytes of memory value should be allocated.

Then it will get a pointer to span from the alloc array, try to get memory from the mcache by calling nextFreeFast, and if the mcache is running low, try to call nextFree to request memory from mcentral to the mcache.

See nextFreeFast below.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

func nextFreeFast(s *mspan) gclinkptr {

// 获取allocCache二进制中0的个数

theBit := sys.Ctz64(s.allocCache) // Is there a free object in the allocCache?

if theBit < 64 {

result := s.freeindex + uintptr(theBit)

if result < s.nelems {

freeidx := result + 1

if freeidx%64 == 0 && freeidx != s.nelems {

return 0

}

s.allocCache >>= uint(theBit + 1)

s.freeindex = freeidx

s.allocCount++

return gclinkptr(result*s.elemsize + s.base())

}

}

return 0

}

|

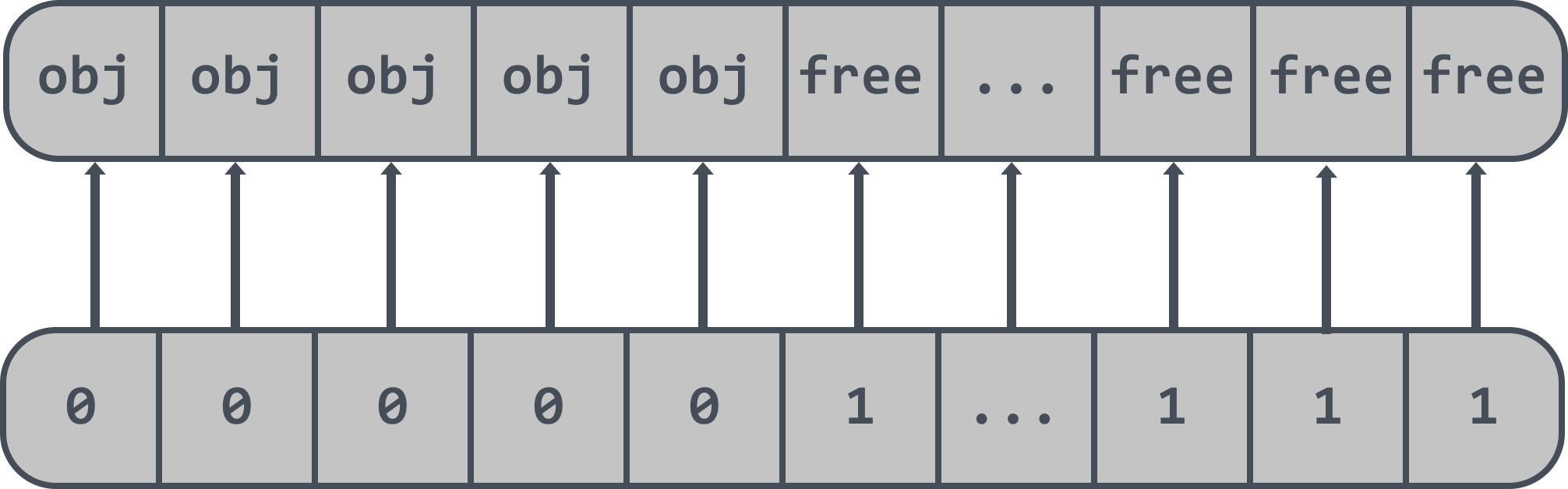

allocCache is initialised to ^uint64(0) during initialisation, converted to binary, if it is 0 then it is occupied, the allocCache allows you to quickly locate the space to be allocated.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

|

func (c *mcache) nextFree(spc spanClass) (v gclinkptr, s *mspan, shouldhelpgc bool) {

s = c.alloc[spc]

shouldhelpgc = false

// 当前span中找到合适的index索引

freeIndex := s.nextFreeIndex()

// 当前span已经满了

if freeIndex == s.nelems {

if uintptr(s.allocCount) != s.nelems {

println("runtime: s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount != s.nelems && freeIndex == s.nelems")

}

// 从 mcentral 中获取可用的span,并替换掉当前 mcache里面的span

c.refill(spc)

shouldhelpgc = true

s = c.alloc[spc]

// 再次到新的span里面查找合适的index

freeIndex = s.nextFreeIndex()

}

if freeIndex >= s.nelems {

throw("freeIndex is not valid")

}

// 计算出来内存地址,并更新span的属性

v = gclinkptr(freeIndex*s.elemsize + s.base())

s.allocCount++

if uintptr(s.allocCount) > s.nelems {

println("s.allocCount=", s.allocCount, "s.nelems=", s.nelems)

throw("s.allocCount > s.nelems")

}

return

}

|

NextFree will determine if the current span is full, and if so, call the refill method to retrieve the available span from the mcentral and replace the span in the current mcache.

1

2

3

4

5

6

7

8

9

10

|

func (c *mcache) refill(spc spanClass) {

s := c.alloc[spc]

...

s = mheap_.central[spc].mcentral.cacheSpan()

if s == nil {

throw("out of memory")

}

...

c.alloc[spc] = s

}

|

Refill gets the corresponding span according to the specified sizeclass and uses it as the span corresponding to the new sizeclass of the mcache.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

|

func (c *mcentral) cacheSpan() *mspan {

...

sg := mheap_.sweepgen

spanBudget := 100

var s *mspan

// 从清理过的、包含空闲空间的spanSet结构中查找可以使用的内存管理单元

if s = c.partialSwept(sg).pop(); s != nil {

goto havespan

}

for ; spanBudget >= 0; spanBudget-- {

// 从未被清理过的、有空闲对象的spanSet查找可用的span

s = c.partialUnswept(sg).pop()

if s == nil {

break

}

if atomic.Load(&s.sweepgen) == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) {

// 找到要回收的span,触发sweep进行清理

s.sweep(true)

goto havespan

}

}

for ; spanBudget >= 0; spanBudget-- {

// 获取未被清理的、不包含空闲空间的spanSet查找可用的span

s = c.fullUnswept(sg).pop()

if s == nil {

break

}

if atomic.Load(&s.sweepgen) == sg-2 && atomic.Cas(&s.sweepgen, sg-2, sg-1) {

s.sweep(true)

freeIndex := s.nextFreeIndex()

if freeIndex != s.nelems {

s.freeindex = freeIndex

goto havespan

}

c.fullSwept(sg).push(s)

}

}

// 从堆中申请新的内存管理单元

s = c.grow()

if s == nil {

return nil

}

havespan:

n := int(s.nelems) - int(s.allocCount)

if n == 0 || s.freeindex == s.nelems || uintptr(s.allocCount) == s.nelems {

throw("span has no free objects")

}

//更新 nmalloc

atomic.Xadd64(&c.nmalloc, int64(n))

usedBytes := uintptr(s.allocCount) * s.elemsize

atomic.Xadd64(&memstats.heap_live, int64(spanBytes)-int64(usedBytes))

if trace.enabled {

// heap_live changed.

traceHeapAlloc()

}

if gcBlackenEnabled != 0 {

// heap_live changed.

gcController.revise()

}

freeByteBase := s.freeindex &^ (64 - 1)

whichByte := freeByteBase / 8

// 更新allocCache

s.refillAllocCache(whichByte)

// s.allocCache.

s.allocCache >>= s.freeindex % 64

return s

}

|

cacheSpan mainly looks for available spans from mcentral’s spanset, if not found then the grow method is called to request a new memory management unit from the heap.

Once obtained, the fields nmalloc, allocCache, etc. are updated.

runtime.mcentral.grow triggers an expand operation to request new memory from the heap.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

|

func (c *mcentral) grow() *mspan {

// 获取待分配的页数

npages := uintptr(class_to_allocnpages[c.spanclass.sizeclass()])

size := uintptr(class_to_size[c.spanclass.sizeclass()])

// 获取新的span

s := mheap_.alloc(npages, c.spanclass, true)

if s == nil {

return nil

}

// Use division by multiplication and shifts to quickly compute:

// n := (npages << _PageShift) / size

n := (npages << _PageShift) >> s.divShift * uintptr(s.divMul) >> s.divShift2

// 初始化limit

s.limit = s.base() + size*n

heapBitsForAddr(s.base()).initSpan(s)

return s

}

|

The method runtime.mheap.alloc will be called inside grow to get the span, this method has already been covered above, if you don’t remember it you can look up the article.

This is the end of the allocation of micro-objects.

Micro-object allocation

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

|

func mallocgc(size uintptr, typ *_type, needzero bool) unsafe.Pointer {

...

dataSize := size

// 获取mcache,用于处理微对象和小对象的分配

c := gomcache()

var x unsafe.Pointer

// 表示对象是否包含指针,true表示对象里没有指针

noscan := typ == nil || typ.ptrdata == 0

// maxSmallSize=32768 32k

if size <= maxSmallSize {

// maxTinySize= 16 bytes

if noscan && size < maxTinySize {

off := c.tinyoffset

// 指针内存对齐

if size&7 == 0 {

off = alignUp(off, 8)

} else if size&3 == 0 {

off = alignUp(off, 4)

} else if size&1 == 0 {

off = alignUp(off, 2)

}

// 判断指针大小相加是否超过16

if off+size <= maxTinySize && c.tiny != 0 {

// 获取tiny空闲内存的起始位置

x = unsafe.Pointer(c.tiny + off)

// 重设偏移量

c.tinyoffset = off + size

// 统计数量

c.local_tinyallocs++

mp.mallocing = 0

releasem(mp)

return x

}

// 重新分配一个内存块

span := c.alloc[tinySpanClass]

v := nextFreeFast(span)

if v == 0 {

v, _, shouldhelpgc = c.nextFree(tinySpanClass)

}

x = unsafe.Pointer(v)

//将申请的内存块全置为 0

(*[2]uint64)(x)[0] = 0

(*[2]uint64)(x)[1] = 0

// 如果申请的内存块用不完,则将剩下的给 tiny,用 tinyoffset 记录分配了多少。

if size < c.tinyoffset || c.tiny == 0 {

c.tiny = uintptr(x)

c.tinyoffset = size

}

size = maxTinySize

}

...

}

...

return x

}

|

If the object is less than 16bytes in size and does not contain a pointer, then it can be considered a micro-object.

When allocating a micro-object, a judgement is made as to whether the memory block pointed to by tiny is sufficient, and if the space left in tiny exceeds the size, then memory is allocated directly on tiny and returned.

Here again I will use my diagram above to explain. First it will go to the mcache array and find the corresponding span. The properties of the span corresponding to the tinySpanClass are as follows.

1

2

3

4

5

6

7

8

9

10

|

startAddr: 824635752448,

npages: 1,

manualFreeList: 0,

freeindex: 128,

nelems: 512,

elemsize: 16,

limit: 824635760640,

allocCount: 128,

spanclass: tinySpanClass (5),

...

|

The mspan corresponding to tinySpanClass has only one page, which can contain 512 elements (nelems); the size of each object in the page is 16bytes (elemsize), and 128 objects have been allocated so far (allocCount), but of course I can’t draw so many pages above, so I symbolically draw them.

The diagram above also shows that one of the objects in the page has been used by 12bytes, leaving 4bytes unused, so the values of tinyoffset and tiny will be updated.

Summary

This article started by describing how to debug the go assembly, and then went into three levels to explain how memory allocation works in go. For objects less than 32k, go can get the corresponding memory directly from mcache in a lock-free way. If there is not enough mcache memory, it will first go to mcentral to get memory, and then finally to mheap to request memory. For large objects (>32k) you can apply directly to mheap, but for large objects there are certain optimisations, when a large object requires less than 16 pages to be allocated it will be allocated directly from pageCache, otherwise it will be fetched from the heap pages.