If you have an app, what if you could increase its access speed by more than 15% without modifying it, and by more than 20% on weak networks? What if your app could switch frequently between 4G and Wi-Fi without disconnecting or reconnecting, and without the user noticing? What if your app needs the security of TLS but also wants the power of HTTP/2 multiplexing? Perhaps you have just heard that HTTP/2 is the next generation of Internet protocols, or just noticed that TLS 1.3 is a landmark protocol, yet both are constantly being influenced and challenged by a newer, emerging protocol. That protocol is named QUIC (pronounced "quick"), and it is being standardized as the next generation of Internet transport protocols. Are you willing to spend a little time understanding it? Are you willing to invest the effort to study it? Are you willing to push your business to adopt it?

What is QUIC?

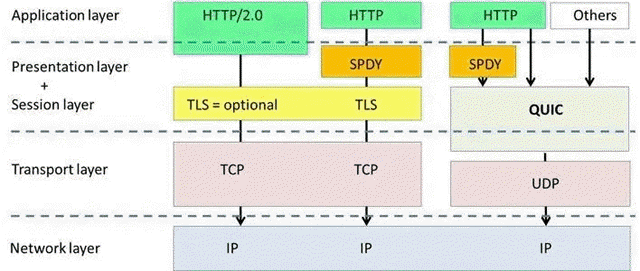

QUIC (Quick UDP Internet Connections) is a UDP-based transport protocol from Google that provides the equivalent of TCP + TLS + HTTP/2, with the goal of ensuring reliability while reducing network latency. Because UDP is a simple transport protocol, building on it lets QUIC avoid TCP's handshake and replace TCP's acknowledgment, retransmission, and slow-start behavior with its own implementations. QUIC can establish a secure connection in one round trip, and it also implements HTTP/2 features such as multiplexing and header compression.

The QUIC protocol is a collection of protocols, mainly including:

- Transport protocol (Transport)

- Packet Loss Detection and Congestion Control (Recovery)

- Secure Transport Protocol (TLS)

- HTTP3 protocol

- HTTP Header Compression Protocol (QPACK)

- Load Balancing Protocol (Load Balance)

UDP is generally regarded as faster than TCP: TCP is reliable, but that reliability comes at the cost of the overhead of both sides acknowledging data. In addition, TCP is implemented in the operating system kernel, so upgrading TCP means getting users to upgrade their systems, which is a high barrier. QUIC, built on UDP, can be implemented freely by the client and works as long as there is a server that speaks it.

QUIC has the following advantages over the widely used HTTP/2 + TCP + TLS stack:

- Less time spent on the TCP three-way handshake and the TLS handshake

- Improved congestion control

- Multiplexing that avoids head-of-line blocking

- Connection migration

- Forward error correction

HTTP protocol development

HTTP History Process

- HTTP 0.9 (1991): supports only the GET method and has no request headers.

- HTTP 1.0 (1996): the protocol takes basic shape, with request headers, rich content types, status codes, and caching; connections cannot be reused.

- HTTP 1.1 (1999): supports connection reuse (persistent connections), chunked transfer, and resumable downloads (range requests).

- HTTP 2.0 (2015): binary framing, multiplexing, header compression, server push, etc.

- HTTP 3.0 (2018): QUIC was first implemented in 2013; in October 2018, the IETF's HTTP Working Group and QUIC Working Group jointly decided to name the HTTP mapping over QUIC "HTTP/3" ahead of making it a global standard.

HTTP1.0 and HTTP1.1

- Head-of-line blocking: the next request can only be sent after the previous response returns, so bandwidth is not fully utilized and subsequent requests are blocked. HTTP 1.1 attempted to address this with pipelining, but its inherent FIFO (first in, first out) ordering means each response still depends on the completion of the previous one, so head-of-line blocking remains and the problem is not fundamentally solved.

- High protocol overhead: headers carry a lot of content and cannot be compressed, which increases transmission cost.

- One-way requests: only the client can initiate a request, and the server returns only what the client asks for.

Differences between HTTP 1.0 and HTTP 1.1:

- HTTP 1.0: supports only short-lived TCP connections (connections cannot be reused); does not support resumable downloads; the next request can only be sent after the previous response arrives, so head-of-line blocking occurs.

- HTTP 1.1: supports persistent connections by default (requests can reuse a TCP connection); supports resumable downloads (through range parameters in the header); improves the cache control policy; supports pipelining, so multiple requests can be sent at once, but responses are still returned in order, so head-of-line blocking is still not solved; adds new error status codes; requests carry the Host header field.

HTTP2

HTTP2 solves some of the problems of HTTP1, but does not solve head-of-line blocking at the level of the underlying TCP protocol.

- Binary transfer: transferring data in binary format is more efficient than parsing text.

- Multiplexing: redefines the underlying mapping of HTTP semantics so that requests and responses flow as bidirectional streams over the same connection. A single domain needs only one TCP connection, and streams use frames as the basic protocol unit. This avoids the latency of frequently creating connections, reduces memory consumption, and improves performance: requests run in parallel, and a slow request or an earlier request does not block the responses to other requests.

- Header compression: reduces redundant data in requests and reduces overhead.

- Server push: the server can proactively push necessary resources to the client in advance, which reduces latency somewhat.

- Stream prioritization: data transfer priority can be controlled, giving the site more flexible and powerful control over page loading.

- Resettable: the ability to stop the sending of data without breaking the TCP connection.

Disadvantages: when multiple requests share one TCP connection and packets are lost, HTTP 2 performs worse than HTTP 1.1, because TCP uses loss retransmission to guarantee reliable delivery. When an HTTP 2 connection loses a packet, the whole TCP connection has to wait for the retransmission, and every request on that connection is blocked. HTTP 1.1 can open multiple TCP connections, so a loss affects only one of them and the remaining connections keep transmitting data normally.

HTTP3 – HTTP Over QUIC

HTTP is built on top of TCP, so the bottlenecks of HTTP and its optimization techniques all stem from the characteristics of TCP itself. HTTP2 implements multiplexing, but the problems at the underlying TCP level remain unsolved: HTTP 2.0 needs only one TCP connection per domain, but if that connection loses a packet, the whole connection waits for the retransmission and all the data behind it is blocked. QUIC, the transport under HTTP3, was created to solve this TCP problem of HTTP2.

QUIC/HTTP3 features:

- Ordered transfer: the concept of a stream is used to guarantee that data within a stream is ordered. Ordering across different streams or packets is not guaranteed.

- Message compression to improve the payload ratio: for example, QUIC introduces variable-length integer encoding, and QPACK is introduced for header compression.

- Reliable transmission: support for packet loss detection and retransmission.

- Secure transmission: the TLS 1.3 security protocol.
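To make the variable-length integer encoding mentioned above concrete, here is a minimal sketch of the scheme as specified in RFC 9000: the top two bits of the first byte select a 1-, 2-, 4-, or 8-byte encoding, and the remaining bits hold the value. The function names `encode_varint` and `decode_varint` are illustrative, not taken from any particular QUIC library.

```python
def encode_varint(value: int) -> bytes:
    """Encode an integer using QUIC's variable-length integer format."""
    if value < 0 or value >= 1 << 62:
        raise ValueError("QUIC varints hold values in [0, 2**62 - 1]")
    # (length in bytes, 2-bit prefix), tried from shortest to longest
    for length, prefix in ((1, 0b00), (2, 0b01), (4, 0b10), (8, 0b11)):
        usable_bits = 8 * length - 2
        if value < 1 << usable_bits:
            encoded = value | (prefix << usable_bits)
            return encoded.to_bytes(length, "big")
    raise AssertionError("unreachable: range was checked above")


def decode_varint(data: bytes) -> tuple[int, int]:
    """Return (value, bytes_consumed) for the varint at the start of data."""
    length = 1 << (data[0] >> 6)  # prefix 0..3 maps to 1, 2, 4, 8 bytes
    raw = int.from_bytes(data[:length], "big")
    mask = (1 << (8 * length - 2)) - 1  # strip the 2-bit length prefix
    return raw & mask, length
```

Small values such as stream IDs or short lengths therefore cost a single byte, while the same field can still grow to 62 bits when needed.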

Why do we need QUIC?

With the rapid development of the mobile Internet and the gradual rise of the Internet of Things, network interaction scenarios are getting richer, the content being transmitted is getting larger, and users expect ever higher transmission efficiency and web response speed.

On the one hand, TCP is an old protocol with a long history of widespread use; on the other hand, users' scenarios demand ever higher transmission performance. The following long-standing problems and conflicts are becoming more and more prominent.

Rigidity of intermediate devices

Perhaps because TCP has been in use for so long and is so reliable, many intermediate devices, including firewalls, NAT gateways, and traffic shapers, have come to depend on certain implicit conventions.

For example, some firewalls only allow traffic on ports 80 and 443 and block all other ports. NAT gateways rewrite transport-layer headers when translating network addresses, which can prevent both sides from using a new transport format. Traffic shapers and intermediate proxies sometimes strip option fields they do not recognize, for security reasons.

TCP was originally designed to allow ports, options, and features to be added and modified. But because TCP and its well-known ports and options have been in use for so long, intermediate devices have come to rely on these implicit rules, so changes to them are vulnerable to interference along the path and may fail.

This interference has made many TCP optimizations cautious and difficult to deploy.

Dependence on OS implementation leads to protocol rigidity

TCP is implemented by the operating system in the kernel network stack; applications can only use it, not modify it. Applications can iterate quickly and easily, but TCP iterates very slowly because operating systems are painful to upgrade.

Mobile devices are now widespread, yet some mobile users may still be years behind on OS upgrades. PC systems lag even more severely: Windows XP is still used by a large number of people, even though it has been around for almost 20 years.

Server-side systems do not depend on users upgrading, but they are also conservative and slow to change, because an OS upgrade involves updating the underlying software and runtime libraries.

This means that even when TCP has better feature updates, it is difficult to roll them out quickly. For example, TCP Fast Open, which was introduced in 2013, is still not supported on many versions of Windows.

Key features of QUIC

Connection Migration

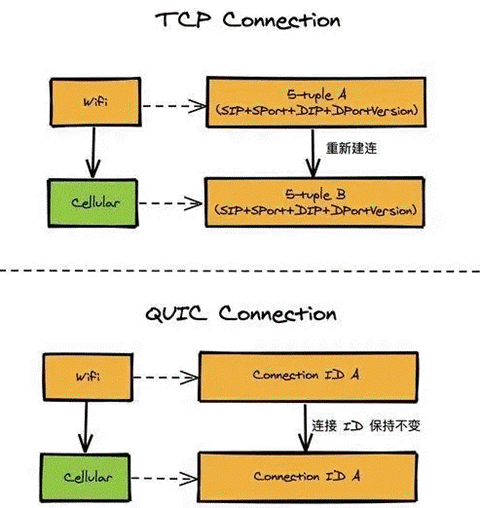

TCP's reconnection pain points

A TCP connection is identified by a four-tuple (source IP, source port, destination IP, destination port). What does connection migration mean? It means that when any one of these elements changes, the connection is still maintained and the business logic continues uninterrupted. The main concern here is changes on the client side, because the client is not under our control and its network environment changes often, while the server IP and port are generally fixed.

For example, when a phone switches between Wi-Fi and a 4G mobile network, the client's IP address changes and the TCP connection to the server has to be re-established. Another example is a shared NAT egress: contention may force some connections to be rebound to a new port, which changes the client's port and also requires re-establishing the TCP connection. From the perspective of a TCP connection, this problem is unsolvable.

UDP-based QUIC connection migration implementation

So how does QUIC do connection migration? QUIC is based on UDP, and a QUIC connection is no longer identified by the IP and port four-tuple but by a 64-bit random number used as a connection ID. Even if the IP or port changes, as long as the ID stays the same the connection is maintained, the upper-layer business logic does not notice the change, and nothing needs to reconnect. Because the ID is randomly generated by the client and is 64 bits long, the probability of a collision is very low.
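The idea can be sketched as a server that looks up connections by connection ID instead of by source address. This is a simplified illustration, not a real QUIC stack: the `Server` and `Connection` classes are hypothetical, the 8-byte ID follows the 64-bit description above (IETF QUIC allows connection IDs of up to 20 bytes), and the path validation that a real implementation performs before trusting a new address is omitted.

```python
import secrets
from dataclasses import dataclass, field


@dataclass
class Connection:
    cid: bytes
    peer_addr: tuple[str, int]
    received: list[bytes] = field(default_factory=list)


class Server:
    def __init__(self) -> None:
        # Connections are keyed by connection ID, not by (IP, port).
        self.by_cid: dict[bytes, Connection] = {}

    def on_datagram(self, cid: bytes, src_addr: tuple[str, int],
                    payload: bytes) -> Connection:
        conn = self.by_cid.get(cid)
        if conn is None:
            conn = Connection(cid, src_addr)
            self.by_cid[cid] = conn
        elif conn.peer_addr != src_addr:
            # Same ID from a new address: the client migrated
            # (for example Wi-Fi -> 4G); keep the connection state.
            conn.peer_addr = src_addr
        conn.received.append(payload)
        return conn


server = Server()
cid = secrets.token_bytes(8)  # 64-bit random connection ID
first = server.on_datagram(cid, ("10.0.0.2", 5000), b"over wifi")
second = server.on_datagram(cid, ("172.16.0.9", 6000), b"over 4g")
```

Both datagrams land on the same `Connection` object even though the source address changed, which is exactly what a four-tuple lookup cannot do.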

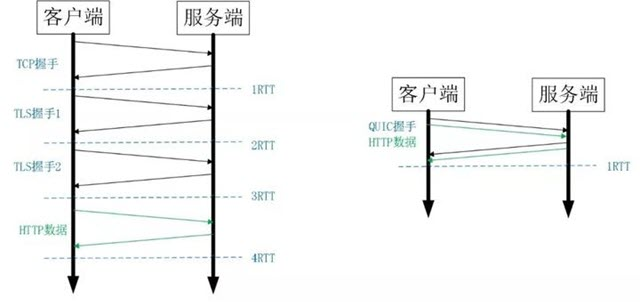

Low connection latency

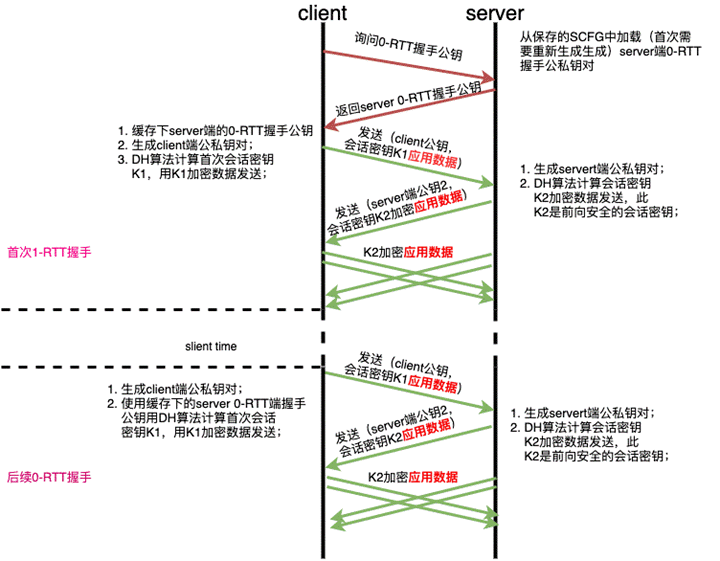

0-RTT connection establishment is arguably QUIC's biggest performance advantage over HTTP2. So what is 0-RTT connection establishment? It has two layers of meaning:

- At the transport layer, a connection can be established in 0 RTT.

- At the encryption layer, an encrypted connection can be established in 0 RTT.

For example, the left side of the diagram above shows a full HTTPS handshake, which requires 3 RTTs to establish a connection. Even with Session Resumption, at least 2 RTTs are required.

What about QUIC? Because it is based on UDP and implements a 0-RTT secure handshake, in most cases data can be sent after 0 RTTs, with forward-secure encryption, and the 0-RTT success rate is much higher than that of TLS Session Tickets.

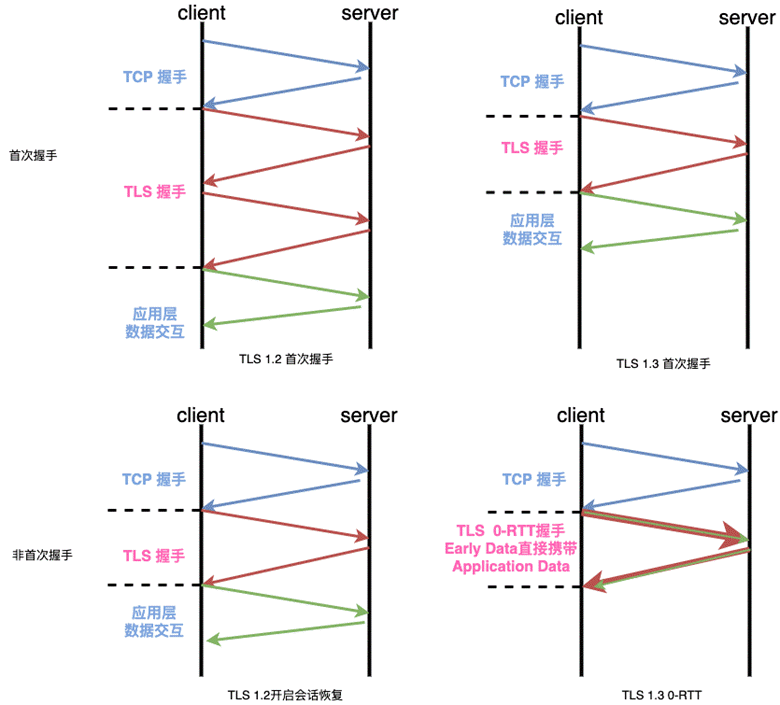

TLS connection latency problem

As an example, a simple browser visit, typing https://www.abc.com in the address bar, actually triggers the following actions:

- DNS recursive lookup of abc.com to resolve it to the corresponding IP address.

- TCP handshake: the familiar TCP three-way handshake requires 1 RTT.

- TLS handshake: TLS 1.2, currently the most widely used version, requires 2 RTTs; for connections that are not the first, session reuse can be enabled, which reduces the handshake to 1 RTT.

- HTTP business data exchange: assuming the abc.com data is retrieved in a single exchange, this requires 1 RTT. From the analysis above, completing one short HTTPS data exchange takes 4 RTTs + DNS for a new connection, or 3 RTTs + DNS with session reuse.
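The round-trip count above is simple addition, and a few lines make the bookkeeping explicit. The constant and function names here are illustrative; DNS time is left out because it is not measured in RTTs to the server.

```python
TCP_HANDSHAKE_RTTS = 1      # three-way handshake
TLS12_FULL_RTTS = 2         # full TLS 1.2 handshake
TLS12_RESUMED_RTTS = 1      # TLS 1.2 with session reuse
REQUEST_RTTS = 1            # one HTTP request/response exchange


def https_rtts(tls_rtts: int) -> int:
    """Round trips to complete one short HTTPS exchange over TCP."""
    return TCP_HANDSHAKE_RTTS + tls_rtts + REQUEST_RTTS


new_connection = https_rtts(TLS12_FULL_RTTS)        # 4 RTTs (+ DNS)
resumed_connection = https_rtts(TLS12_RESUMED_RTTS)  # 3 RTTs (+ DNS)
```

On a mobile link with a 200 ms round trip, that is 800 ms before the first response byte for a new connection, of which only 200 ms carries business data.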

Therefore, for requests that carry little data, the handshake takes up a large share of a single request's time, which has a great impact on user experience. When the user's network is poor, RTT grows and the impact on user experience is even greater.

The following figure compares the latency of each version of TLS with the scenario.

The comparison shows that even TLS 1.3, which streamlines the handshake to a best case of 0-RTT (1-RTT for a first connection), still leaves the user with the 1-RTT overhead of the TCP handshake. Google proposed TCP Fast Open to let TCP carry user data in its first handshake packet, but because of the rigidity of TCP implementations it cannot be upgraded along with the application, and the related RFC is still experimental. Is there any way to further reduce the latency caused by this layered design? QUIC solves the problem by merging encryption and connection management. Let's see how it achieves a true 0-RTT handshake, in which the first packet sent to the server already carries user data.

True-0-RTT QUIC handshake

Because QUIC is UDP-based and needs no TCP connection, in the best case a short-lived QUIC connection can start transferring data at 0 RTT. TCP-based HTTPS, even with the best case of TLS 1.3 early data, still needs 1 RTT before data transfer can begin, and the TLS 1.2 full handshake that is common online today needs 3 RTTs. For RTT-sensitive services, QUIC can effectively reduce connection establishment latency.

TCP-based HTTPS is slow to set up for two reasons. One is the layered design of TCP and TLS, which requires each logical layer to establish its own connection state separately. The other is the complex key negotiation in the TLS handshake phase. Reducing connection establishment time means working on both.

The specific QUIC handshake process is as follows:

- (1) The client checks whether it already holds all of the server's configuration parameters (certificate configuration information) locally. If so, it jumps directly to (5); otherwise it continues.

- (2) The client sends an inchoate client hello (CHLO) message to the server, requesting the server's configuration parameters.

- (3) The server receives the CHLO and replies with a rejection (REJ) message, which contains some of the server's configuration parameters.

- (4) The client receives the REJ, extracts and stores the server configuration parameters, and jumps back to (1).

- (5) The client sends a full client hello message to the server to start the formal handshake. The message includes a public value chosen by the client. At this point, the client can compute the initial key K1 from the stored server configuration parameters and the public value it has chosen.

- (6) The server receives the full client hello. If it does not agree to connect, it replies with REJ, as in (3). If it agrees, it computes the initial key K1 from the client's public value and replies with a server hello (SHLO) message, which is encrypted with K1 and contains a temporary public value chosen by the server.

- (7) The client receives the server's reply. If it is a REJ, the situation is the same as in (4). If it is an SHLO, the client decrypts it with the initial key K1 and extracts the temporary public value.

- (8) Client and server each derive the session key K2 using SHA-256 from the temporary public value and the initial key K1.

- (9) The initial key K1 is no longer needed and the QUIC handshake is complete. Later updates of the session key K2 follow a similar process, though some fields in the packets differ slightly.
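The key flow in the steps above can be illustrated with a toy Diffie-Hellman exchange. This is only a sketch under loud assumptions: the group parameters `P` and `G` are far too weak for real use, the exact way K1 and K2 are hashed together is invented for illustration, and the encryption of the SHLO under K1 is not shown. It demonstrates only that both sides arrive at the same K1 before the server has replied, and at the same K2 after the server's temporary public value is known.

```python
import hashlib
import secrets

# Toy group parameters (assumption for illustration; NOT secure).
P = 2**127 - 1
G = 3


def dh_keypair() -> tuple[int, int]:
    private = secrets.randbelow(P - 3) + 2
    return private, pow(G, private, P)


def shared_secret(my_private: int, their_public: int) -> bytes:
    return pow(their_public, my_private, P).to_bytes(16, "big")


def sha256(*chunks: bytes) -> bytes:
    return hashlib.sha256(b"".join(chunks)).digest()


def handshake() -> tuple[bytes, bytes, bytes, bytes]:
    # Server config: a long-lived public value the client cached from a REJ.
    server_cfg_priv, server_cfg_pub = dh_keypair()

    # Full client hello: client picks a public value and computes K1
    # immediately, so it can encrypt data without waiting for a reply.
    client_priv, client_pub = dh_keypair()
    client_k1 = sha256(shared_secret(client_priv, server_cfg_pub))

    # Server computes the same K1, then picks a temporary public value
    # that it would send back inside the SHLO.
    server_k1 = sha256(shared_secret(server_cfg_priv, client_pub))
    server_tmp_priv, server_tmp_pub = dh_keypair()

    # Both sides derive session key K2 from K1 and the temporary exchange.
    client_k2 = sha256(client_k1, shared_secret(client_priv, server_tmp_pub))
    server_k2 = sha256(server_k1, shared_secret(server_tmp_priv, client_pub))
    return client_k1, server_k1, client_k2, server_k2
```

Because K1 depends only on the cached server configuration, a returning client can send encrypted data in its very first flight; K2 then replaces K1 once the server's temporary value arrives.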

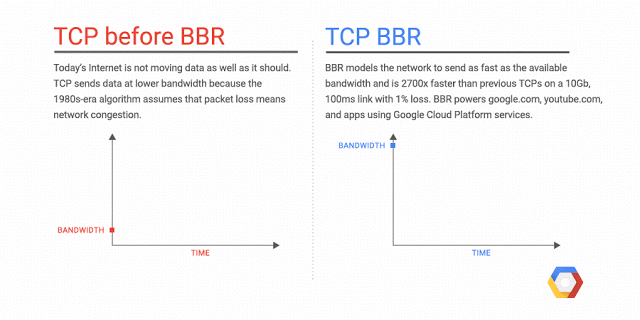

Customizable congestion control

QUIC uses pluggable congestion control and exposes richer congestion control information than TCP. For example, every packet, original or retransmitted, gets a new sequence number (seq), which lets QUIC tell ACKs for retransmissions apart from ACKs for original packets and thus avoids TCP's retransmission ambiguity problem. In addition, QUIC's ACK Frame supports 256 NACK ranges, which is more flexible than TCP's SACK (Selective Acknowledgment) and gives the client and server more information about which packets the other side has received.

QUIC's transmission control no longer relies on the kernel's congestion control algorithm but is implemented at the application layer. This means we can implement and configure different congestion control algorithms and parameters for different business scenarios, and get better performance. With QUIC, the congestion control algorithm and parameters can be chosen per service, and even different connections of the same service can use different congestion control algorithms.

TCP congestion control actually consists of four algorithms: slow start, congestion avoidance, fast retransmission, and fast recovery.

The QUIC protocol currently uses TCP’s Cubic congestion control algorithm by default, and also supports the CubicBytes, Reno, RenoBytes, BBR, PCC, and other congestion control algorithms.

From the point of view of the congestion algorithms themselves, QUIC merely reimplements what TCP already has, so what exactly has QUIC improved? The main points are as follows.

Pluggable

What do we mean by pluggable? It is the ability to enable, change, and disable an algorithm very flexibly. This shows in the following aspects:

- Different congestion control algorithms can be implemented at the application level, without operating system or kernel support. This is a leap forward, because traditional TCP congestion control must be supported by the network stack at both ends to take effect. Kernels and operating systems are expensive to deploy and have long upgrade cycles, which is clearly too slow for today's rapidly iterating products and explosive network growth.

- Even within a single application, different connections can use different congestion control configurations. Even for a single server, the network environments of its connected users differ widely. Combined with big data and artificial intelligence, we can provide different but more accurate and effective congestion control for each user. For example, BBR may suit some users while Cubic suits others.

- Switching congestion control only requires changing the configuration and reloading on the server side, without stopping the service at all.
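A minimal sketch of what "pluggable" can look like in user space follows. The class names, the registry `ALGORITHMS`, and the simplified Reno-style window arithmetic are all illustrative assumptions; real implementations track bytes in flight, timers, and much more.

```python
from abc import ABC, abstractmethod


class CongestionController(ABC):
    def __init__(self, initial_window: float = 10) -> None:
        self.cwnd = initial_window  # congestion window, in packets

    @abstractmethod
    def on_ack(self) -> None: ...

    @abstractmethod
    def on_loss(self) -> None: ...


class RenoLike(CongestionController):
    def __init__(self, initial_window: float = 10, ssthresh: float = 64) -> None:
        super().__init__(initial_window)
        self.ssthresh = ssthresh

    def on_ack(self) -> None:
        if self.cwnd < self.ssthresh:
            self.cwnd += 1              # slow start
        else:
            self.cwnd += 1 / self.cwnd  # congestion avoidance

    def on_loss(self) -> None:
        self.ssthresh = max(self.cwnd / 2, 2)
        self.cwnd = self.ssthresh       # multiplicative decrease


class FixedWindow(CongestionController):
    def on_ack(self) -> None:
        pass

    def on_loss(self) -> None:
        pass


ALGORITHMS = {"reno": RenoLike, "fixed": FixedWindow}


class Connection:
    def __init__(self, algorithm: str) -> None:
        self.cc = ALGORITHMS[algorithm]()

    def set_algorithm(self, algorithm: str) -> None:
        # Swap the controller at runtime; no kernel change, no restart.
        self.cc = ALGORITHMS[algorithm]()
```

Two connections in the same process can run different algorithms, for example `Connection("reno")` next to `Connection("fixed")`, and either can be switched later with `set_algorithm`.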

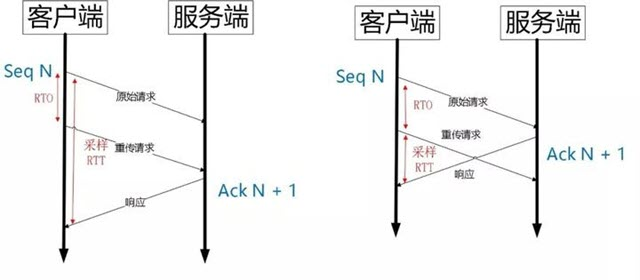

Monotonically Increasing Packet Number

TCP uses Sequence Number and Ack, which are based on byte sequence numbers, to confirm the orderly arrival of messages for reliability.

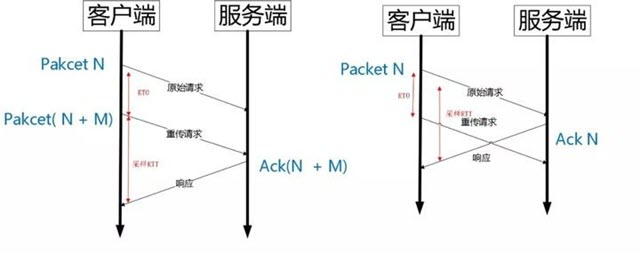

QUIC, also a reliable protocol, uses a Packet Number in place of TCP's sequence number. Packet Numbers are strictly increasing: even if Packet N is lost, the retransmission of Packet N no longer carries number N but a value larger than N. In TCP, by contrast, a retransmitted segment keeps the same Sequence Number as the original segment, and it is this property that creates TCP's retransmission ambiguity problem.

As shown above, after a retransmission timeout (RTO) the client retransmits and then receives an Ack. Because the sequence number is the same, is this Ack a response to the original request or to the retransmission? It is hard to tell.

If the Ack is attributed to the original request but actually answers the retransmission (above left), the sampled RTT will be too large. If it is attributed to the retransmission but actually answers the original request, the sampled RTT will easily be too small.

This problem is easily solved in QUIC, because the retransmitted Packet and the original Packet have different, strictly increasing Packet Numbers.

As shown above, after the RTO occurs, the RTT is calculated from the Packet Number that was acknowledged. If the Ack's Packet Number is N+M, the sample RTT is measured from the retransmission; if the Ack's Packet Number is N, the sample RTT is measured from the original transmission, with no ambiguity.
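The bookkeeping this enables is small. In the sketch below (the `SentPacketTracker` class is a hypothetical name), every transmission, including a retransmission, gets a fresh packet number and its own send timestamp, so each ACK maps to exactly one send time.

```python
class SentPacketTracker:
    def __init__(self) -> None:
        self.next_pn = 0
        self.sent_at: dict[int, float] = {}

    def send(self, now: float) -> int:
        """Record a transmission and return its (never reused) packet number."""
        pn = self.next_pn
        self.next_pn += 1
        self.sent_at[pn] = now
        return pn

    def on_ack(self, pn: int, now: float) -> float:
        """Return the RTT sample for the acknowledged packet number."""
        return now - self.sent_at.pop(pn)


tracker = SentPacketTracker()
original = tracker.send(now=0.0)    # Packet N, later lost
retransmit = tracker.send(now=1.0)  # same data, sent as Packet N+1
sample = tracker.on_ack(retransmit, now=1.25)
```

Here the ACK names the retransmission's packet number, so the sample is measured from `1.0`, not from `0.0`; with a shared sequence number the sender could not have known which send time to use.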

QUIC also introduces a concept of Stream Offset.

That is, a Stream can be carried by multiple Packets whose Packet Numbers are strictly increasing and have no dependencies on each other. However, when the payload of a Packet is Stream data, the Stream Offset is needed to guarantee the order of the application data. For example, suppose the sender sends Packet N and Packet N+1, carrying Stream data at Offsets x and x+y respectively.

Suppose Packet N is lost and retransmitted, and the retransmission has Packet Number N+2 while its Stream Offset is still x. Then even though Packet N+2 arrives later, the Stream data at x and at x+y can still be put in order and handed to the application for processing.
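A receiver-side sketch of this reassembly is shown below. `StreamReassembler` is an illustrative name, and the sketch assumes frames never partially overlap, which a real implementation must handle.

```python
class StreamReassembler:
    def __init__(self) -> None:
        self.pending: dict[int, bytes] = {}  # offset -> data not yet delivered
        self.next_offset = 0                 # next byte the application expects

    def on_frame(self, offset: int, data: bytes) -> bytes:
        """Buffer one stream frame; return any bytes that are now in order."""
        if offset < self.next_offset:
            return b""  # duplicate of data already delivered
        self.pending.setdefault(offset, data)
        out = bytearray()
        while self.next_offset in self.pending:
            chunk = self.pending.pop(self.next_offset)
            out += chunk
            self.next_offset += len(chunk)
        return bytes(out)


stream = StreamReassembler()
early = stream.on_frame(5, b"world")  # offset x+y arrives first (Packet N+1)
late = stream.on_frame(0, b"hello")   # offset x arrives in retransmitted Packet N+2
```

The packet numbers never appear in the reassembly logic: only the offsets decide the order in which bytes reach the application.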

Reneging is not allowed

What does reneging mean? It means the receiver discards data that it has already acknowledged with SACK. TCP does not encourage this behavior but allows it at the protocol level, mainly to cope with limited server resources such as buffer overflow or lack of memory.

Reneging can be very disruptive to data retransmission, because the SACK has already indicated that the data was received while the receiver has in fact discarded it.

QUIC removes this interference by forbidding reneging at the protocol level: once a Packet is Acked, it must have been received correctly.

More Ack Blocks

TCP's SACK option tells the sender which ranges of consecutive segments have been received, which lets the sender retransmit selectively.

Because the TCP header is at most 60 bytes and the standard header takes 20 bytes, TCP options can occupy at most 40 bytes. The TCP Timestamp option takes 10 bytes [25], leaving only 30 bytes for the SACK option.

Each SACK Block is 8 bytes long, and the SACK option header takes 2 bytes, so the TCP SACK option can carry at most 3 blocks.
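The arithmetic behind the 3-block limit, using the byte counts given above (the constant and function names are illustrative):

```python
TCP_MAX_HEADER = 60      # bytes
TCP_BASE_HEADER = 20     # bytes
TIMESTAMP_OPTION = 10    # bytes, as stated above
SACK_OPTION_HEADER = 2   # bytes (kind + length)
SACK_BLOCK = 8           # bytes (two 32-bit sequence numbers)


def max_sack_blocks(with_timestamps: bool = True) -> int:
    option_space = TCP_MAX_HEADER - TCP_BASE_HEADER
    if with_timestamps:
        option_space -= TIMESTAMP_OPTION
    return (option_space - SACK_OPTION_HEADER) // SACK_BLOCK


blocks_with_timestamps = max_sack_blocks()          # (30 - 2) // 8
blocks_without_timestamps = max_sack_blocks(False)  # (40 - 2) // 8
```

With timestamps enabled the result is 3 blocks; even without them, the 40-byte option space caps the count at 4.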

A QUIC Ack Frame, however, can carry 256 Ack Blocks at once. In networks with a high packet loss rate, more Ack Blocks speed up recovery and reduce retransmissions.

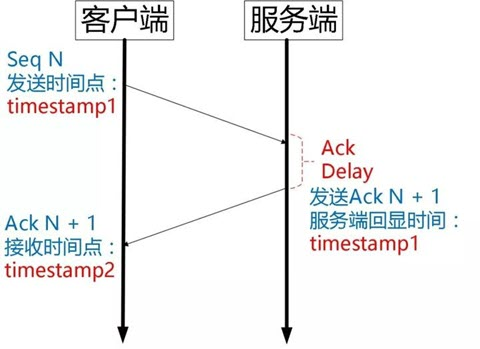

Ack Delay time

The TCP Timestamp option [25] has a problem: it only echoes the sender's timestamp and does not account for the time between the receiver getting the segment and sending the Ack for it. This time is called the Ack Delay.

This leads to errors in the RTT calculation, as the following figure shows.

TCP's RTT can be considered to be calculated as: RTT = timestamp2 - timestamp1

QUIC's RTT is calculated as: RTT = timestamp2 - timestamp1 - AckDelay

Of course, the actual RTT calculation is not this simple: samples need to be smoothed with reference to historical values; refer to the following formula.
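As a hedged illustration of such smoothing, the sketch below follows the exponentially weighted moving average described in RFC 9002 (weights of 1/8 for the smoothed RTT and 1/4 for the variance), with the Ack Delay subtracted only when doing so does not push the sample below the minimum RTT seen so far. The class name `RttEstimator` is illustrative, and this may differ from the formula the original figure showed.

```python
from typing import Optional


class RttEstimator:
    def __init__(self) -> None:
        self.smoothed: Optional[float] = None
        self.rttvar: Optional[float] = None
        self.min_rtt: Optional[float] = None

    def on_sample(self, send_time: float, ack_time: float,
                  ack_delay: float) -> float:
        """Fold one RTT sample into the estimate and return smoothed RTT."""
        latest = ack_time - send_time
        if self.smoothed is None:
            # First sample initializes the estimator.
            self.min_rtt = latest
            self.smoothed = latest
            self.rttvar = latest / 2
            return self.smoothed
        self.min_rtt = min(self.min_rtt, latest)
        adjusted = latest
        if latest >= self.min_rtt + ack_delay:
            adjusted = latest - ack_delay  # remove the receiver's ack delay
        self.rttvar = 0.75 * self.rttvar + 0.25 * abs(self.smoothed - adjusted)
        self.smoothed = 0.875 * self.smoothed + 0.125 * adjusted
        return self.smoothed


estimator = RttEstimator()
estimator.on_sample(send_time=0.0, ack_time=100.0, ack_delay=0.0)    # ms
estimator.on_sample(send_time=200.0, ack_time=340.0, ack_delay=24.0)
```

In the second sample the raw measurement is 140 ms, but 24 ms of that was the receiver holding the ACK, so only 116 ms is folded into the average.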

No head-of-line blocking

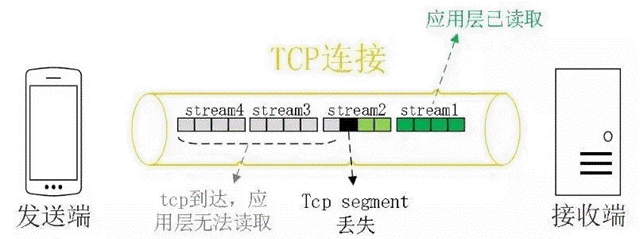

Head-of-line blocking in TCP

Although HTTP2 implements multiplexing, it runs over byte-stream-oriented TCP, so packet loss affects every request stream being multiplexed. QUIC is based on UDP and is designed to solve the head-of-line blocking problem.

The main cause of TCP head-of-line blocking is that a timed-out or lost packet stops the current window from sliding to the right. The most direct way to solve it is to keep timed-out or lost packets from pinning the window in place.

To ensure reliability, TCP uses Sequence Number and Ack based on the byte sequence number to confirm the orderly arrival of messages.

As shown above, the application layer can read the contents of stream1 without any problem, but because the third segment in stream2 has lost packets, TCP needs the sender to retransmit the third segment in order to ensure the reliability of the data before notifying the application layer to read the next data. Therefore, even if the contents of stream3 and stream4 have arrived successfully, the application layer still cannot read them and has to wait for the lost packets in stream2 to be retransmitted.

In a weak network environment, HTTP2's head-of-line blocking problem makes for an extremely poor user experience.

QUIC's solution to head-of-line blocking

QUIC is also a reliable protocol. It uses a Packet Number in place of TCP's Sequence Number, and Packet Numbers are strictly increasing: even if Packet N is lost, the retransmission of Packet N no longer carries number N but a larger value, such as N+M.

Unlike TCP, QUIC's monotonically increasing Packet Number design allows packets to be acknowledged out of order, so when Packet N is lost, the current window keeps sliding to the right as long as newly received packets are acknowledged. When the sender learns that Packet N was lost, it puts the data to be retransmitted into the send queue, renumbers it as Packet N+M, and sends it again. A retransmission is handled much like sending a new packet, so the window is not pinned in place by loss and retransmission, which solves the head-of-line blocking problem. But since the retransmitted Packet N+M has a different number from the lost Packet N, how can we be sure the two packets carry the same content?

QUIC uses the Stream ID to identify which resource request the current data belongs to, which is also the basis for reassembling multiplexed packets correctly at the receiver. The Stream ID alone, however, cannot establish that retransmitted Packet N+M and lost Packet N carry the same content, so an additional field, the Stream Offset, identifies the byte offset of the current packet's data within its stream.

With the Stream Offset, packets belonging to the same Stream ID can be transmitted out of order (HTTP/2 relies on the Stream ID alone and requires data frames of the same Stream ID to be transmitted in order). If two packets have the same Stream ID and Stream Offset, their contents are the same.
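The difference in blocking behavior can be shown with a small model. Each list entry below is one packet labeled with the stream it carries, and `lost` holds the indexes of packets that did not arrive. The function names are illustrative, and the model deliberately ignores retransmission timing: it only answers "what can the application read right now?".

```python
def tcp_deliverable(packets: list[str], lost: set[int]) -> list[str]:
    """One ordered byte stream: nothing after the first hole is readable."""
    delivered = []
    for index, stream in enumerate(packets):
        if index in lost:
            break  # every later segment waits for the retransmission
        delivered.append(stream)
    return delivered


def quic_deliverable(packets: list[str], lost: set[int]) -> list[str]:
    """Independent streams: a hole only stalls the stream it belongs to."""
    blocked: set[str] = set()
    delivered = []
    for index, stream in enumerate(packets):
        if index in lost:
            blocked.add(stream)
        elif stream not in blocked:
            delivered.append(stream)
    return delivered


packets = ["s1", "s2", "s2", "s3", "s4", "s2"]
lost = {1}  # the first packet of stream s2 is lost
over_tcp = tcp_deliverable(packets, lost)
over_quic = quic_deliverable(packets, lost)
```

Over the single TCP byte stream only `s1` is readable, while with per-stream ordering `s1`, `s3`, and `s4` are all delivered and only `s2` waits for its retransmission.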

QUIC Protocol Components

QUIC is a reliable, TCP-like transport protocol built on top of UDP, and HTTP3 carries out HTTP transactions on top of QUIC. Networks are always discussed in layers, so here we walk through the QUIC protocol layer by layer, from low to high:

- UDP layer: UDP datagrams are transmitted at this layer. The concerns here are what the UDP payload is and how to send UDP datagrams efficiently.

- Connection layer: a Connection is uniquely identified by its CID and provides reliable, secure transmission of packets.

- Stream layer: a Stream lives inside its Connection, is uniquely identified by its StreamID, and manages the transmission of stream frames.

- HTTP3 layer: HTTP3 is built on QUIC Streams and provides more efficient HTTP transactions than HTTP 1.x and HTTP2.0. HTTP3 uses the QPACK protocol for header compression.

UDP layer

This section discusses issues related to the UDP portion of QUIC's outgoing packets.

UDP payload size

The payload size is limited by three factors: the QUIC protocol's own rules, the path MTU, and what the endpoint is able to accept.

- QUIC cannot run over a network path that does not support UDP payloads of 1200 bytes. QUIC requires that an initial packet be no smaller than 1200 bytes; if the data itself is smaller (for example, an initial ack), it must be padded with PADDING to at least 1200 bytes.

- QUIC does not want IP-layer fragmentation. This means UDP must not hand more than one MTU of data to the IP layer. Assuming an MTU of 1500, the upper limit of the UDP payload is 1472 bytes (1500 - 20 - 8) for IPv4 and 1452 bytes (1500 - 40 - 8) for IPv6. QUIC recommends PMTUD and DPLPMTUD for MTU probing. In practice, we recommend setting the MTU for IPv6 to 1280; with larger values, some networks drop packets.

- The endpoint's receiving capability: the transport parameter max_udp_payload_size (0x03) states the largest single UDP payload the endpoint is willing to accept, and the sender should respect it.
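Putting the three limits together is a matter of subtraction and taking a minimum. The sketch below uses the header sizes from the text (20-byte IPv4, 40-byte IPv6, 8-byte UDP); the function name is illustrative, and the default of 65527 for the peer's `max_udp_payload_size` is the default value given in RFC 9000.

```python
IPV4_HEADER = 20
IPV6_HEADER = 40
UDP_HEADER = 8
QUIC_MIN_INITIAL = 1200  # smallest payload size every QUIC path must carry


def max_udp_payload(mtu: int, ipv6: bool = False,
                    peer_max_udp_payload_size: int = 65527) -> int:
    """Largest UDP payload that avoids IP fragmentation and peer rejection."""
    ip_header = IPV6_HEADER if ipv6 else IPV4_HEADER
    path_limit = mtu - ip_header - UDP_HEADER
    payload = min(path_limit, peer_max_udp_payload_size)
    if payload < QUIC_MIN_INITIAL:
        raise ValueError("path cannot carry QUIC's 1200-byte minimum")
    return payload


ipv4_limit = max_udp_payload(1500)                   # 1500 - 20 - 8
ipv6_limit = max_udp_payload(1500, ipv6=True)        # 1500 - 40 - 8
ipv6_conservative = max_udp_payload(1280, ipv6=True)  # 1280 - 40 - 8
```

Note that the conservative IPv6 MTU of 1280 still leaves 1232 bytes of payload, just above the 1200-byte floor.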

UDP payload content

- The UDP payload consists of packets as defined by the QUIC protocol, which allows multiple packets to be sent in a single UDP datagram as long as the payload size limit is not exceeded. In a QUIC implementation, if each UDP datagram carries only one QUIC packet, reordering problems become more likely.
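Packing several QUIC packets into one datagram is a simple greedy fill, sketched below with an illustrative `coalesce` function that treats packets as opaque byte strings. In IETF QUIC only packets that carry an explicit length (long-header packets) can be followed by another packet in the same datagram, which this sketch does not model.

```python
def coalesce(packets: list[bytes], max_payload: int) -> list[bytes]:
    """Greedily pack QUIC packets, in order, into UDP datagram payloads."""
    datagrams: list[bytes] = []
    current = bytearray()
    for packet in packets:
        if len(packet) > max_payload:
            raise ValueError("packet larger than the UDP payload limit")
        if current and len(current) + len(packet) > max_payload:
            datagrams.append(bytes(current))  # flush the full datagram
            current = bytearray()
        current += packet
    if current:
        datagrams.append(bytes(current))
    return datagrams


datagrams = coalesce([b"a" * 500, b"b" * 600, b"c" * 300], max_payload=1200)
```

The first two packets share one 1100-byte datagram and the third goes out alone, so three packets cost two datagrams instead of three.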

Efficient sending of UDP packets

Unlike TCP, QUIC has to assemble UDP data at the application layer, and each UDP packet is no larger than one MTU. Without optimization, for example sending every packet directly with sendto/sendmsg, this inevitably causes a large number of system calls and hurts throughput.

- Optimize with the sendmmsg interface. sendmmsg passes multiple UDP QUIC packets from user space to the kernel in a single system call, and the kernel then sends each of them out as an independent UDP packet.

- sendmmsg solves the problem of the number of system calls. Enabling GSO goes further by delaying the split into individual packets until just before the data is handed to the NIC driver, which further improves throughput and reduces CPU consumption.

- On top of that, mainstream NICs now support hardware GSO offload, which improves throughput and reduces CPU consumption even more.

The sending methods described above are effectively UDP burst sending, which raises a problem: congestion control needs pacing capability!
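Pacing means spreading a burst over time so that packets leave at the rate congestion control allows, rather than all at once. A minimal sketch, assuming a single fixed pacing rate in bytes per second (the function name and parameters are illustrative; real pacers recompute the rate from the congestion window and RTT):

```python
def paced_send_times(packet_sizes: list[int], pacing_rate: float,
                     start: float = 0.0) -> list[float]:
    """Return the earliest send time (in seconds) for each packet."""
    times = []
    next_time = start
    for size in packet_sizes:
        times.append(next_time)
        next_time += size / pacing_rate  # time this packet "occupies" the link
    return times


# Four 1024-byte packets paced at 1 MiB/s leave 1/1024 s apart.
schedule = paced_send_times([1024] * 4, pacing_rate=1024 * 1024)
```

A batching interface such as sendmmsg or GSO then has to be fed in small paced batches, trading a little of the system-call saving for smoother traffic.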

Connection layer

In our discussion, we know that what is transmitted in a udp message is actually one or more packets defined by the quic protocol, so at the Connection level, it is actually managed in packets. When a packet arrives, the terminal needs to resolve the target ConnectionID (DCID) field and give the packet to the corresponding quic connection.

connection id

Unlike TCP, which uniquely identifies a connection by a 4-tuple, QUIC defines a Connection ID that is independent of network routing to identify a connection. The advantage is that the connection survives a change of the 4-tuple (e.g. NAT rebinding or a client switching networks from Wi-Fi to 4G). Of course, although the connection state is preserved, congestion control needs to adjust promptly because the path has changed.
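The idea of looking up a connection by Connection ID instead of by 4-tuple can be sketched as follows. The classes `Connection` and `ConnectionTable` are hypothetical names for this example. A real implementation would also validate the new path before trusting it, which is left out here.

```python
from dataclasses import dataclass
from typing import Dict, Optional, Tuple

Address = Tuple[str, int]  # (ip, port)


@dataclass
class Connection:
    peer_addr: Address
    path_changed: bool = False  # hint for congestion control to re-adapt


class ConnectionTable:
    """Maps Destination Connection IDs to connections."""

    def __init__(self) -> None:
        self._by_cid: Dict[bytes, Connection] = {}

    def register(self, cid: bytes, conn: Connection) -> None:
        self._by_cid[cid] = conn

    def dispatch(self, dcid: bytes, src_addr: Address) -> Optional[Connection]:
        conn = self._by_cid.get(dcid)
        if conn is None:
            return None
        if conn.peer_addr != src_addr:
            # Same connection, new 4-tuple (NAT rebinding, Wi-Fi -> 4G).
            conn.peer_addr = src_addr
            conn.path_changed = True
        return conn


table = ConnectionTable()
conn = Connection(peer_addr=("10.0.0.1", 4433))
table.register(b"\x01\x02\x03\x04", conn)
same = table.dispatch(b"\x01\x02\x03\x04", ("192.168.1.5", 50000))
print(same is conn, conn.path_changed)
```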

packet header

The IETF QUIC header comes in two forms: long header and short header. The long header is further divided into four types: Initial, 0-RTT, Handshake, and Retry. The type definitions can be found directly in the RFC, so I will not repeat them here.

QUIC stipulates that the packet number always increases. Even if a packet carries retransmitted frame data, it must use a new, larger packet number. Compared with TCP, this has the advantage that an ACK identifies unambiguously which transmission it acknowledges, so the RTT of the path can be measured more accurately.

Packet number encoding and decoding: a packet number is an integer in the range 0 to 2^62 - 1. To save space, QUIC encodes the packet number relative to the packets that are still unacked and truncates it (keeping only the least significant bits), so only 1-4 bytes are used on the wire. An appendix of the RFC explains the encoding and decoding process in detail.
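The following sketch ports the sample encoding and decoding pseudocode from the appendix of the IETF QUIC transport RFC to Python. The function names are chosen for this example, and `bit_length()` is used in place of the RFC's logarithm, which gives the same or a slightly more conservative length.

```python
from typing import Optional, Tuple


def encode_packet_number(full_pn: int,
                         largest_acked: Optional[int]) -> Tuple[int, int]:
    """Return (truncated_pn, num_bytes) for sending full_pn."""
    if largest_acked is None:
        num_unacked = full_pn + 1
    else:
        num_unacked = full_pn - largest_acked
    # The encoded range must be more than twice the unacked range.
    min_bits = num_unacked.bit_length() + 1
    num_bytes = (min_bits + 7) // 8
    if num_bytes > 4:
        raise ValueError("too many unacknowledged packets to encode")
    return full_pn & ((1 << (8 * num_bytes)) - 1), num_bytes


def decode_packet_number(largest_pn: int, truncated_pn: int,
                         pn_nbits: int) -> int:
    """Recover the full packet number closest to largest_pn + 1."""
    expected_pn = largest_pn + 1
    pn_win = 1 << pn_nbits
    pn_hwin = pn_win // 2
    pn_mask = pn_win - 1
    candidate = (expected_pn & ~pn_mask) | truncated_pn
    if candidate <= expected_pn - pn_hwin and candidate < (1 << 62) - pn_win:
        return candidate + pn_win
    if candidate > expected_pn + pn_hwin and candidate >= pn_win:
        return candidate - pn_win
    return candidate


truncated, size = encode_packet_number(0xAC5C02, 0xABE8B3)
print(hex(truncated), size)
print(hex(decode_packet_number(0xABE8B3, truncated, 8 * size)))
```

The values used here are the worked examples from the RFC appendix: packet number 0xac5c02 is sent in two bytes as 0x5c02 and decoded back to 0xac5c02.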

The packet header is protected in secure transmission, which means that analyzing packet timing with Wireshark is not possible without the TLS key information. Intermediate network devices are also unable to read packet numbers and reorder packets, as they can with TCP.

packet payload

After the packet is decrypted and the packet header removed, the payload consists entirely of frames (at least one).

If the packet payload contains any frame other than ACK, PADDING, and CONNECTION_CLOSE, the packet is defined as ack-eliciting, which means the other end must generate an ACK for this packet so that the sender can confirm that no data was lost.
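The rule can be written as a one-line check over the frame types found in a packet. This is a sketch: the frame type codes are the ones assigned in the IETF QUIC transport RFC, while the function name and the representation of a packet as a list of type codes are assumptions for this example.

```python
from typing import Iterable

# Frame types that do not make a packet ack-eliciting.
PADDING = 0x00
ACK = (0x02, 0x03)               # without / with ECN counts
CONNECTION_CLOSE = (0x1C, 0x1D)  # transport / application error
NON_ACK_ELICITING = {PADDING, *ACK, *CONNECTION_CLOSE}


def is_ack_eliciting(frame_types: Iterable[int]) -> bool:
    """True if the packet carries any frame other than
    ACK, PADDING, or CONNECTION_CLOSE."""
    return any(t not in NON_ACK_ELICITING for t in frame_types)


print(is_ack_eliciting([0x02, 0x00]))  # ACK + PADDING only
print(is_ack_eliciting([0x02, 0x01]))  # ACK + PING
```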

There are up to 30 frame types in the packet payload, each with its own application scenario: the ACK frame for reliable transmission (Recovery), the CRYPTO frame for secure transmission (TLS handshake), the STREAM frame for business data delivery, MAX_DATA/DATA_BLOCKED for flow control, and the PING frame, which can be used for MTU probing. Refer to the RFC for the specific descriptions.

Secure Transport

QUIC’s secure transport relies on TLS 1.3, and BoringSSL is a dependency of many QUIC implementations. The protocol protects the packet header (including the packet number) as well as the payload. With TLS 1.3 0-RTT, the server can send the response header to the client at the first opportunity (when it receives the first request message) while still protecting the data. This greatly reduces the time to first packet in HTTP services. To support 0-RTT, the client needs to save the PSK information as well as part of the transport parameters.

Secure transmission also often involves performance issues. On current mainstream servers, AES-GCM performs best thanks to the hardware acceleration provided by the CPU, while ChaCha20 requires more CPU resources. In short-video services, to meet first-frame latency requirements, plaintext transmission is often used directly.

Transport Parameter (TP) negotiation is done in the handshake phase of secure transmission. In addition to the TPs specified in the protocol, users can also define private TPs, a feature that brings great convenience. For example, a client can use a TP to tell the server to use plaintext transmission.

Reliable Transmission

The QUIC protocol is required to transmit as reliably as TCP, so QUIC has a separate RFC that covers packet loss detection and congestion control.

Packet loss detection: the protocol uses two ways to determine whether a packet has been lost. The first is ACK-based detection, where a time threshold and a packet threshold are used to infer, from a packet that has been acknowledged, whether packets sent before it are lost. The second applies when there is no such reference packet: loss can then only be inferred through the PTO (probe timeout). Generally speaking, most losses should be detected by ACK-based detection. If the PTO fires frequently, sending efficiency suffers.
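The two mechanisms can be sketched as below. The constants (packet threshold 3, time threshold 9/8, 1 ms granularity) and the PTO formula are the values recommended in the QUIC recovery RFC. The `SentPacket` type and the function names are hypothetical, and the sketch ignores packet number spaces and PTO backoff.

```python
from dataclasses import dataclass
from typing import List

K_PACKET_THRESHOLD = 3    # reordering tolerance in packets
K_TIME_THRESHOLD = 9 / 8  # reordering tolerance as an RTT multiplier
K_GRANULARITY = 0.001     # timer granularity in seconds


@dataclass
class SentPacket:
    packet_number: int
    time_sent: float  # seconds


def detect_lost(unacked: List[SentPacket], largest_acked: int, now: float,
                latest_rtt: float, smoothed_rtt: float) -> List[int]:
    """ACK-based detection: packets sent before an acknowledged packet are
    declared lost once they trail it by enough packets or enough time."""
    loss_delay = max(K_TIME_THRESHOLD * max(latest_rtt, smoothed_rtt),
                     K_GRANULARITY)
    lost = []
    for p in unacked:
        if p.packet_number > largest_acked:
            continue  # no acknowledged reference packet after this one yet
        by_count = largest_acked - p.packet_number >= K_PACKET_THRESHOLD
        by_time = p.time_sent <= now - loss_delay
        if by_count or by_time:
            lost.append(p.packet_number)
    return lost


def probe_timeout(smoothed_rtt: float, rttvar: float,
                  max_ack_delay: float) -> float:
    """PTO: how long to wait for an ACK before sending a probe."""
    return smoothed_rtt + max(4 * rttvar, K_GRANULARITY) + max_ack_delay


outstanding = [SentPacket(1, 0.0), SentPacket(7, 0.5),
               SentPacket(8, 0.99), SentPacket(11, 1.0)]
print(detect_lost(outstanding, largest_acked=9, now=1.0,
                  latest_rtt=0.1, smoothed_rtt=0.1))
```

In this example packet 1 is lost by the packet threshold, packet 7 by the time threshold, packet 8 is still within both tolerances, and packet 11 has no acknowledged packet after it, so only a PTO could uncover its loss.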

Congestion control: QUIC improves on some defects in the TCP protocol, for example with always-increasing packet numbers, rich ACK ranges, and host delay calculation. At the same time, TCP congestion control has to be implemented in the kernel, while QUIC implements it in user space, which greatly lowers the threshold for studying efficient and reliable transport protocols. A NewReno implementation is described in the Recovery document. Google Chrome implements CUBIC, BBR, and BBRv2, while the mvfst project is much richer and also includes CCP and Copa.

Stream layer

A stream is an abstraction that represents an ordered sequence of transmitted bytes, which is actually made up of STREAM frames lined up together. Multiple streams can be transferred at the same time on one QUIC connection.

Stream header

In the QUIC protocol, streams are classified as unidirectional or bidirectional, and as client-initiated or server-initiated. HTTP3 makes full use of these different stream types.
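In IETF QUIC the two classifications are carried in the two least significant bits of the stream ID: bit 0x01 identifies the initiator and bit 0x02 the direction. A minimal sketch (the function name is made up for this example):

```python
from typing import Tuple


def classify_stream(stream_id: int) -> Tuple[str, str]:
    """Return (initiator, direction) encoded in the low two bits."""
    initiator = "server" if stream_id & 0x01 else "client"
    direction = "unidirectional" if stream_id & 0x02 else "bidirectional"
    return initiator, direction


for sid in (0, 1, 2, 3, 4):
    print(sid, classify_stream(sid))
```

Client-initiated bidirectional streams therefore have IDs 0, 4, 8, and so on, which are exactly the IDs with stream ID mod 4 = 0.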

Stream payload

The payload of a stream is a series of STREAM frames, and a single stream is identified by the Stream ID in the header of the STREAM frame.

In TCP, if a segment is lost, subsequent segments that arrive out of order cannot be used by the application layer until the lost segment has been successfully retransmitted, so HTTP2 over TCP is constrained in its ability to multiplex. In the QUIC protocol, ordering is maintained only within a single stream; neither different streams nor packets are required to be ordered relative to each other. If a packet is lost, only the streams carried in that packet are affected, and the other streams can still extract their data from subsequent packets, even if those arrive out of order, and deliver it to the application layer.
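The per-stream ordering can be illustrated with a toy reassembler that buffers STREAM frame data by offset and releases only contiguous bytes. The class is hypothetical and deliberately simplified: it assumes frames do not partially overlap and that retransmitted frames start at the same offsets as the originals.

```python
from typing import Dict


class StreamReassembler:
    """Delivers the bytes of one stream in order, whatever the arrival order."""

    def __init__(self) -> None:
        self._pending: Dict[int, bytes] = {}  # offset -> data not yet delivered
        self._next_offset = 0

    def on_frame(self, offset: int, data: bytes) -> bytes:
        """Accept one STREAM frame; return bytes that became deliverable."""
        if offset < self._next_offset:
            return b""  # duplicate of data that was already delivered
        self._pending[offset] = data
        out = bytearray()
        while self._next_offset in self._pending:
            chunk = self._pending.pop(self._next_offset)
            out += chunk
            self._next_offset += len(chunk)
        return bytes(out)


streams = {0: StreamReassembler(), 4: StreamReassembler()}
# The packet carrying stream 0, offset 0 is lost; later packets still arrive.
print(streams[0].on_frame(5, b"world"))  # held back, gap at offset 0
print(streams[4].on_frame(0, b"abc"))    # stream 4 is not blocked
print(streams[0].on_frame(0, b"hello"))  # retransmission fills the gap
```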

HTTP3 layer

stream classification

With the introduction of HTTP3, unidirectional streams were further divided into the control stream, push streams, and other reserved types. HTTP3 SETTINGS are transmitted on the control stream, while HTTP data is transmitted on client-initiated bidirectional streams, so the reader will find that the stream IDs used for HTTP data all satisfy stream ID mod 4 = 0.

With the introduction of QPACK, two more unidirectional stream types are added, the encoder stream and the decoder stream, which the dynamic table updates in QPACK depend on.

QPACK

The role of QPACK is header compression. Similar to HPACK, QPACK defines a static table and a dynamic table for header indexing. The static table is predefined by the protocol for common headers. The dynamic table is built incrementally as the QUIC connection serves HTTP, and the encoder and decoder streams created by QPACK live for the whole lifecycle of the QUIC connection that carries the HTTP transactions.

Dynamic tables are not required for HTTP3 to work, so some open source QUIC projects do not implement the complex dynamic table functionality.

Three kinds of objects take part in QPACK’s dynamic table handling: the data stream, the encoder stream, and the decoder stream. The encoder and decoder streams are responsible for maintaining changes to the dynamic table, while the index numbers of headers parsed from the data stream are looked up in the dynamic table to obtain the final header definitions.

Other

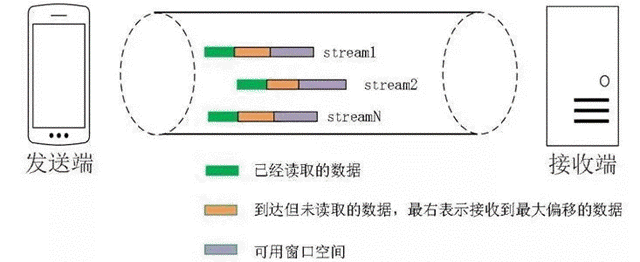

Flow Control

The QUIC protocol introduces flow control, which expresses how much data the receiving end is able to accept. There are two levels of flow control: connection level and stream level. The data offset sent by the sender cannot exceed the flow control limit, and if the limit is reached, the sender should notify the receiver via DATA_BLOCKED/STREAM_DATA_BLOCKED. For the sake of transmission performance, the receiver should try to keep the limit large enough, for example by sending an updated max_data to the sender as soon as half of the current window has been consumed. If the receiver does not want to accept data too quickly, it can also use flow control to constrain the sender.
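The receiver-side policy of raising the limit once half of the window is used up can be sketched as follows. `ReceiveWindow` and `sendable` are hypothetical names, and the half-window trigger is just the example policy from the paragraph above, not something the protocol mandates.

```python
from typing import Optional, Tuple


class ReceiveWindow:
    """Connection-level flow control as seen by the receiver."""

    def __init__(self, window: int) -> None:
        self.window = window
        self.max_data = window  # limit currently advertised to the sender
        self.consumed = 0       # bytes already read by the application

    def on_consumed(self, n: int) -> Optional[int]:
        """Record n consumed bytes; return a new max_data value if a
        MAX_DATA frame should be sent, otherwise None."""
        self.consumed += n
        if self.max_data - self.consumed <= self.window // 2:
            self.max_data = self.consumed + self.window
            return self.max_data
        return None


def sendable(max_data: int, sent: int, wanted: int) -> Tuple[int, bool]:
    """Sender side: bytes allowed now, and whether to send DATA_BLOCKED."""
    allowed = max(0, min(wanted, max_data - sent))
    return allowed, allowed < wanted


rw = ReceiveWindow(1000)
print(rw.on_consumed(400))        # more than half the window is still free
print(rw.on_consumed(200))        # half used up, the limit is raised
print(sendable(1000, 900, 300))   # sender hits the limit and is blocked
```

The same logic applies per stream with MAX_STREAM_DATA and STREAM_DATA_BLOCKED.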

QUIC version

QUIC was originally designed and developed by Google. In the Chromium project, you can see that Google QUIC (GQUIC) version numbers are defined as Q039, Q043, Q046, Q050, and so on.

With the launch of the IETF version of QUIC, IETF QUIC (IQUIC) also has many versions, such as 29, 30, and 34 (the latest version). Different versions may not be interoperable; for example, the salt used in secure transmission is defined differently in different versions. IQUIC therefore introduces version negotiation so that clients and servers can agree on a version both support.

In practice, a service is also often required to serve different versions of GQUIC and different versions of IQUIC at the same time. After receiving a packet, the service has to inspect it, determine whether it belongs to IQUIC or GQUIC, and then dispatch it to the corresponding logic.
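One possible way to do this triage is to look at the 32-bit version field. The sketch below is a simplification under stated assumptions: it only handles packets in the long-header form (first bit set, version in bytes 1-4), which is assumed here to cover IQUIC and the later GQUIC versions such as Q046 and Q050; older GQUIC versions use a different public header and would need their own check. It also assumes GQUIC versions are the ASCII tags mentioned above (e.g. "Q050") and IETF drafts use the 0xff0000NN pattern. The function name is made up.

```python
def classify_version(packet: bytes) -> str:
    """Rough triage of a received datagram by its version field."""
    if len(packet) < 5 or not packet[0] & 0x80:
        return "short-or-unknown"  # no version field in this header form
    version = int.from_bytes(packet[1:5], "big")
    if version == 0:
        return "version-negotiation"
    if packet[1:2] == b"Q":
        return "gquic"             # ASCII tag such as Q046 or Q050
    if version == 1 or (version & 0xFFFFFF00) == 0xFF000000:
        return "iquic"             # version 1 or an IETF draft version
    return "unknown"


print(classify_version(bytes([0xC0]) + b"Q050" + b"\x00"))
print(classify_version(bytes([0xC3, 0xFF, 0x00, 0x00, 29]) + b"\x00"))
```

Short-header packets carry no version, so a real server would resolve them through the connection that owns the Destination Connection ID instead.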

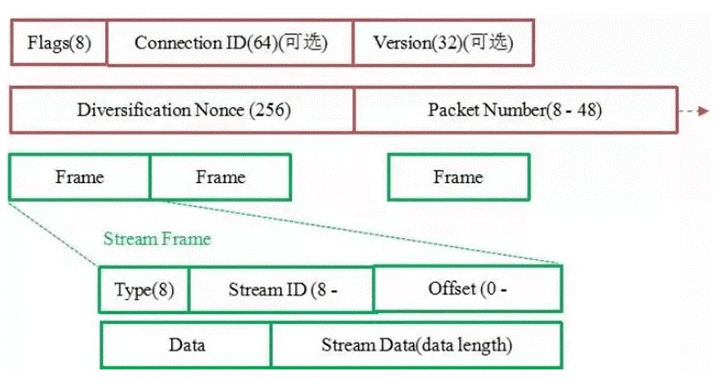

QUIC packets are authenticated in the header and encrypted in the body, except for individual messages such as PUBLIC_RESET and CHLO. This way, any modification to the QUIC packet can be detected by the receiver in time, effectively reducing the security risk.

As shown in the figure, the red part is the header of the Stream Frame message, with authentication. The green part is the content of the message, which is all encrypted.

- Flags: used to indicate information such as Connection ID length, Packet Number length, etc.

- Connection ID: an unsigned integer chosen randomly by the client, with a maximum length of 64 bits. The length is negotiable.

- QUIC Version: the version number of the QUIC protocol, a 32-bit optional field. This field is required if Public Flag & FLAG_VERSION != 0. The client sets Bit0 in the Public Flag to 1 and fills in the desired version number. If the client’s desired version number is not supported by the server, the server sets Bit0 in the Public Flag to 1 and lists the protocol versions (0 or more) supported by the server in this field, and no messages can follow this field.

- Packet Number: The length depends on the value of the Bit4 and Bit5 bits in the Public Flag, and the maximum length is 6 bytes. The first packet sent by the sender has a sequence number of 1, and the sequence number of the subsequent packets is greater than the sequence number of the previous packet.

- Stream ID: Identifies the resource request to which the current data stream belongs.

- Offset: identifies the byte offset of the current packet in the current Stream ID.
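As a rough illustration of how the Flags byte drives parsing, the sketch below decodes Bit0 (version present) and Bit4/Bit5 (packet number length). The mapping of the two length bits to 1, 2, 4, or 6 bytes follows my reading of the legacy GQUIC wire layout and should be treated as an assumption; the names are invented for this example.

```python
from typing import Dict, Union

FLAG_VERSION = 0x01        # Bit0: version field present
PACKET_NUMBER_MASK = 0x30  # Bit4 and Bit5: packet number length
PACKET_NUMBER_LENGTHS = {0x00: 1, 0x10: 2, 0x20: 4, 0x30: 6}  # bytes


def parse_public_flags(flags: int) -> Dict[str, Union[bool, int]]:
    """Extract the fields of the Flags byte that affect header layout."""
    return {
        "has_version": bool(flags & FLAG_VERSION),
        "packet_number_len": PACKET_NUMBER_LENGTHS[flags & PACKET_NUMBER_MASK],
    }


print(parse_public_flags(0x31))  # version present, 6-byte packet number
print(parse_public_flags(0x00))  # no version, 1-byte packet number
```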

The size of a QUIC message must fit within the path MTU to avoid fragmentation. The current maximum message length for QUIC is 1350 bytes over IPv6 and 1370 bytes over IPv4.

QUIC application scenarios

- Small files such as images: significantly reduces total file download time and improves efficiency

- Video on demand: improves the rate at which the first frame appears within one second, reduces the stall rate, and improves the viewing experience

- Dynamic requests: improves access speed and the interactive experience for dynamic requests such as web page login and transactions

- Weak network environments: keeps the service available despite severe packet loss and network latency, optimizes transmission metrics such as stall rate, request failure rate, and the rate of opening within one second, and improves the connection success rate

- Large numbers of concurrent connections: strong connection reliability, with improved access speed for pages with many resources and many concurrent connections

- Encrypted connections: secure and reliable transmission