The data and variables in a program are allocated to the virtual memory where the program resides, which contains two important areas: the Stack and the Heap. Most of the arguments, return values, and local variables of function calls are allocated to the stack, which is managed by the compiler; different programming languages use different methods to manage the memory in the heap area. The objects in the heap are allocated by the memory allocator and collected by the garbage collector.

Different programming languages choose different ways to manage memory. This section introduces the Go language memory allocator, analyzing in detail the process of memory allocation and the design and implementation principles behind it.

Design Principles

Memory management generally consists of three different components, the user program (Mutator), the allocator (Allocator) and the collector (Collector). When the user program requests memory, it requests new memory through the memory allocator, which is responsible for initializing the corresponding memory area from the heap.

The memory allocator implementation of Go language is very complex. Before analyzing the memory allocator implementation, we need to understand the design principles of memory allocation and master the memory allocation process. The allocation methods of the memory allocator and the hierarchical allocation, virtual memory layout and address space of the Go language memory allocator will be described in detail here.

Allocation Methods

The programming language memory allocator generally contains two allocation methods, one is the linear allocator (Sequential Allocator, Bump Allocator), and the other is the free-list allocator (Free-List Allocator).

Linear Allocator

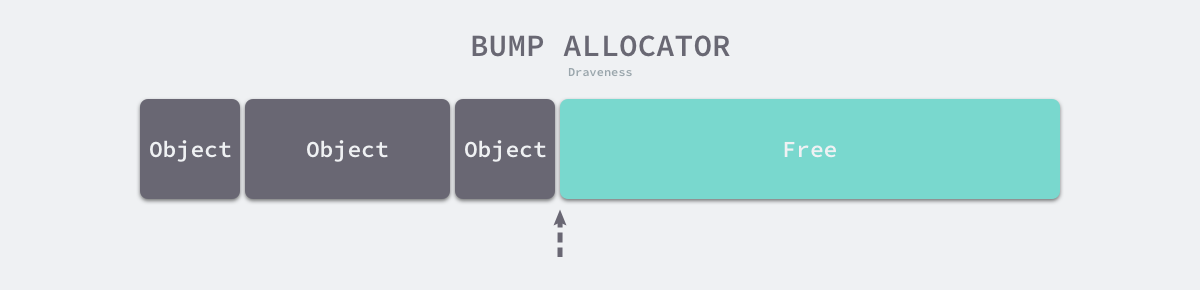

Linear allocation (Bump Allocator) is an efficient method of memory allocation, but has major limitations. When we use a linear allocator, we only need to maintain a pointer to a specific location in memory. If a user program requests memory from the allocator, the allocator only needs to check the remaining free memory, return the allocated memory area and modify the location of the pointer in memory, i.e., move the pointer in the following figure.

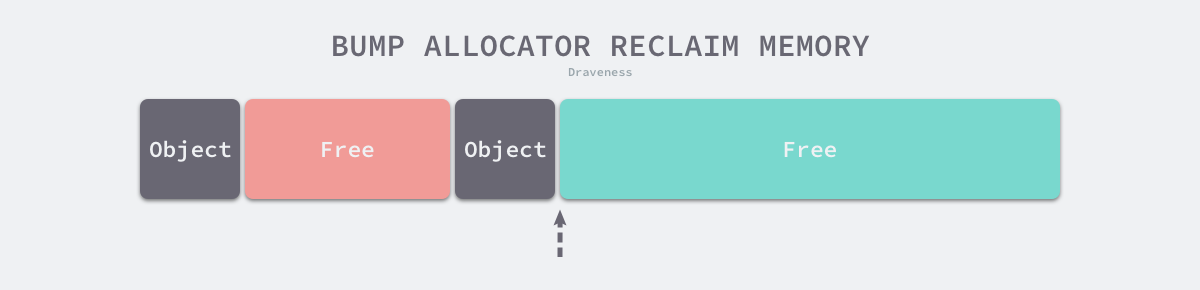

Although the linear allocator implementation brings it a faster execution speed and lower implementation complexity, the linear allocator cannot reuse memory when it is freed. As shown in the figure below, the linear allocator cannot reuse memory in red if it has been allocated and reclaimed.

Because linear allocators have these characteristics, they need to be used with appropriate garbage collection algorithms, such as Mark-Compact, Copying GC, and Generational GC, which sort out fragments of living objects by copying them and periodically merging free memory so that the efficiency of linear allocators can be used to improve the performance of memory allocators.

Because linear allocators need to work with garbage collection algorithms that have copy properties, languages such as C and C++ that need to expose pointers directly to the outside world cannot use this strategy, and we will detail the design principles of common garbage collection algorithms in the next section.

Free Link Allocator

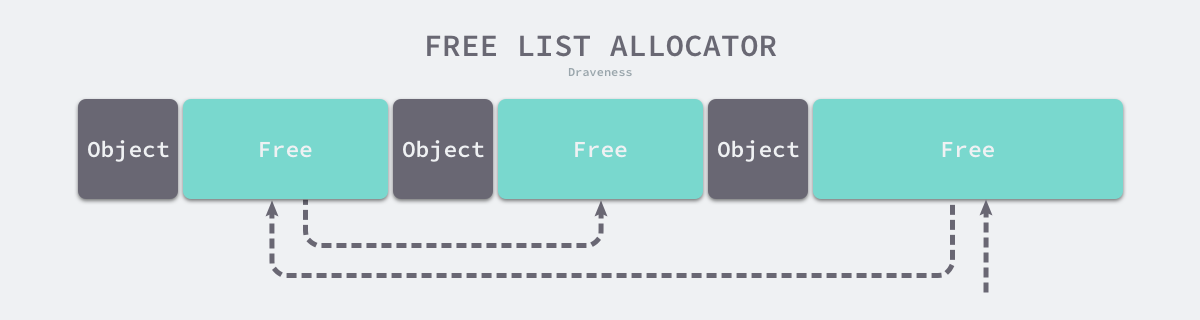

The Free-List Allocator reuses memory that has already been freed and maintains an internal data structure similar to a chain table. When a user program requests memory, the free-list allocator sequentially traverses the free memory blocks, finds a large enough memory, and then requests new resources and modifies the chain.

Since different memory blocks form a chain table through pointers, an allocator using this approach can reuse the reclaimed resources, but it has a time complexity of O(n)O(n) because it needs to traverse the chain table when allocating memory. The free link table allocator can choose different strategies to select among the memory blocks in the link table, the most common ones are the following four.

- First-Fit (First-Fit) - traversing from the head of the chain table and selecting the first memory block whose size is larger than the requested memory.

- Cyclic first adaptation (Next-Fit) - traversing from the end position of the last traversal and selecting the first memory block whose size is larger than the requested memory.

- Best-Fit - traverses the entire chain from the head of the chain and selects the most suitable block.

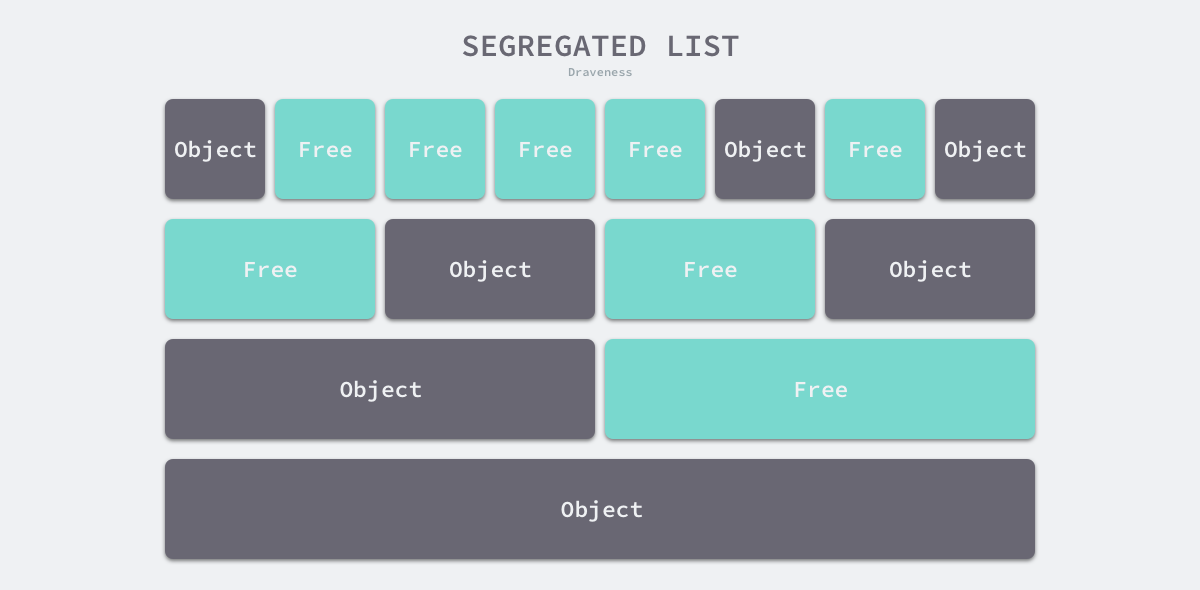

- Segregated-Fit - splitting the memory into multiple chained tables, each with memory blocks of the same size, and requesting memory by first finding the chained table that meets the conditions and then selecting the appropriate memory block from the chained table.

Without going into the first three of the four strategies mentioned above, the memory allocation strategy used by the Go language is somewhat similar to the fourth strategy, and we understand the principle of this strategy through the following diagram.

As shown above, this policy splits the memory into a chain of 4, 8, 16, and 32-byte memory blocks. When we request 8 bytes of memory from the memory allocator, it finds the free memory block that meets the conditions in the above figure and returns it. The isolation-adapted allocation strategy reduces the number of memory blocks to be traversed and improves the efficiency of memory allocation.

Hierarchical allocation

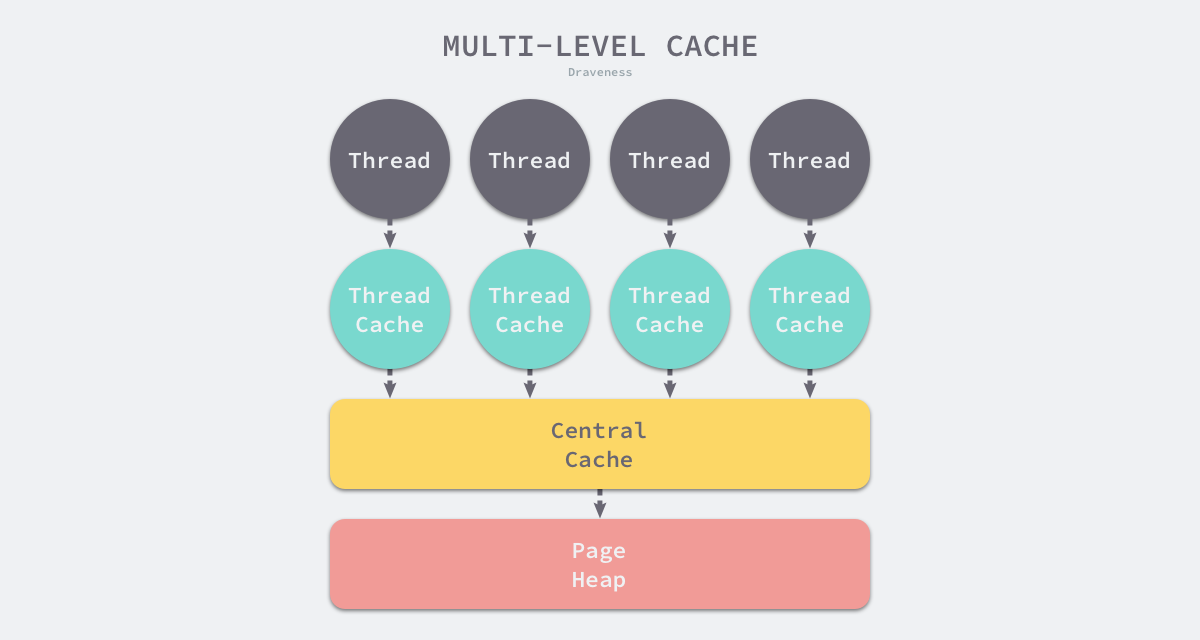

Thread-Caching Malloc (TCMalloc) is a mechanism for allocating memory that is much faster than malloc in glibc. The Go language memory allocator borrows from TCMalloc’s design for high-speed memory allocation. The core idea is to use a multi-level cache to sort objects by size and implement different allocation strategies by category.

Object Size

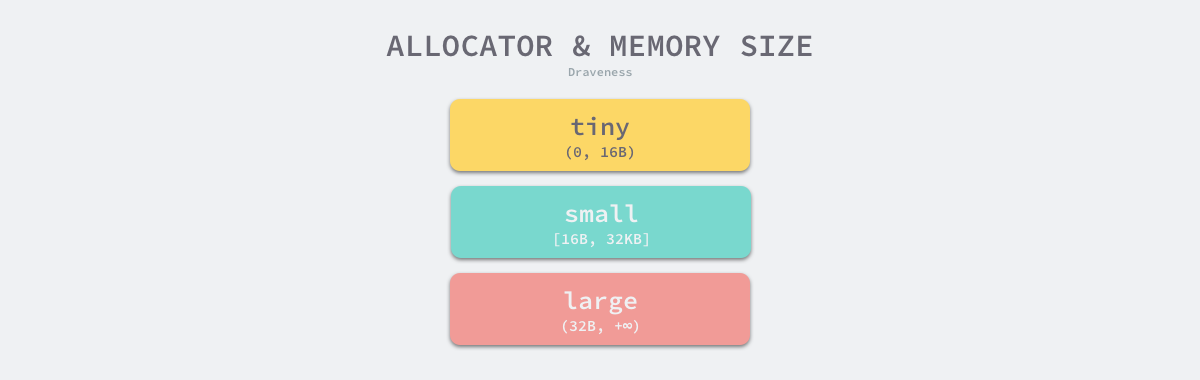

The memory allocator of the Go language selects different processing logic depending on the size of the memory requested to be allocated, and at runtime classifies objects into micro-objects, small objects, and large objects based on their size: the

| category | size |

|---|---|

| micro-objects | (0, 16B) |

| small objects | [16B, 32KB] |

| large objects | (32KB, +∞) |

Since the majority of objects in your program are under 32KB in size, and the size of the memory requested affects the process and overhead of allocating memory at Go runtime, it is beneficial to handle large and small objects separately to improve the performance of the memory allocator.

Multilevel caching

The memory allocator not only treats objects of different sizes differently, but also manages memory at different levels. Both the TCMalloc and Go runtime allocators introduce three components to manage memory hierarchically: Thread Cache, Central Cache, and Page Heap.

The thread cache belongs to each individual thread, and it can satisfy most of the memory allocation needs on the thread. Since it does not involve multiple threads, there is no need to use mutually exclusive locks to protect memory, which can reduce the performance loss caused by lock contention. When the thread cache cannot meet the demand, the runtime will use the central cache as a supplement to solve the memory allocation for small objects. When encountering objects over 32KB, the memory allocator will choose the page heap to allocate large memory directly.

This multi-level memory allocation design is somewhat similar to the multi-level cache in computer operating systems, because most objects are small objects and we can provide enough memory space through the thread cache and the central cache, and get more memory resources from higher-level components when resources are found to be insufficient.

Virtual memory layout

The design and evolution of the Go language’s heap memory address space will be described here. In versions of the Go language prior to 1.10, the heap memory space was contiguous; however, in version 1.11, the Go team used a sparse heap memory space instead of contiguous memory, addressing the limitations posed by contiguous memory and the problems that can arise in special scenarios.

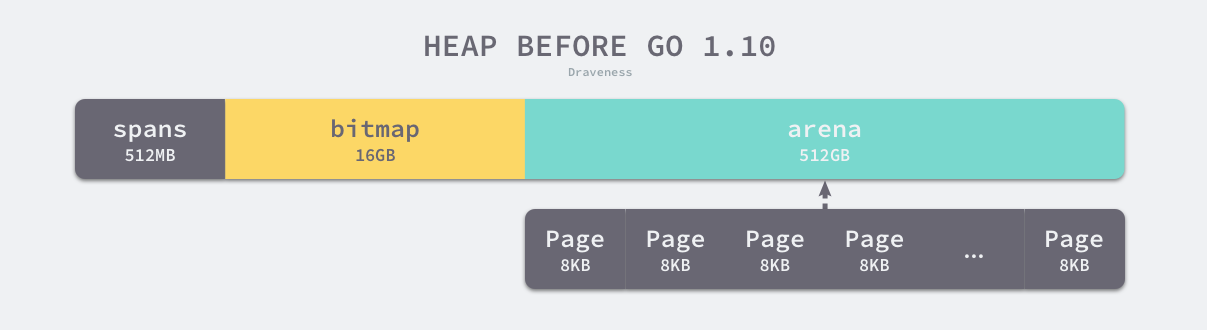

linear memory

The 1.10 version of the Go language program initializes an entire area of virtual memory at startup, with the following three areas spans, bitmap and arena reserved for 512MB, 16GB and 512GB of memory respectively, which are not really physical memory, but virtual memory.

- spans area stores pointers to memory management units runtime.mspan, each of which manages a few pages of memory space, each of 8KB in size.

- bitmap is used to identify those addresses in the arena area where objects are stored, and each byte in the bitmap indicates whether 32 bytes in the heap area are free or not.

- arena area is the real heap area and the 8KB will be considered as one page at runtime; these memory pages store all the objects initialized on the heap.

For any given address, we can calculate the number of pages in the arena based on the base address of the arena and get the runtime.mspan that manages that piece of memory from the spans array, where multiple contiguous locations may correspond to the same runtime.mspan structure.

The Go language uses the procedure described in the previous paragraph to find the runtime.mspan that manages an object in the heap based on the address of the pointer, which is based on the assumption that the memory in the heap is contiguous. While this design is simple and convenient, it can cause crashes when used in a mix of C and Go:

- allocated memory addresses can conflict, causing heap initialization and expansion to fail.

- large chunks of memory that are not reserved may be allocated as C binaries, resulting in a discontinuous heap after expansion.

Linear heap memory requires a large block of memory space to be reserved, but it is impractical to request a large block of memory space and not use it, while not reserving memory space can cause programs to crash in special scenarios. Although contiguous memory is relatively simple to implement, there is no way to ignore these issues.

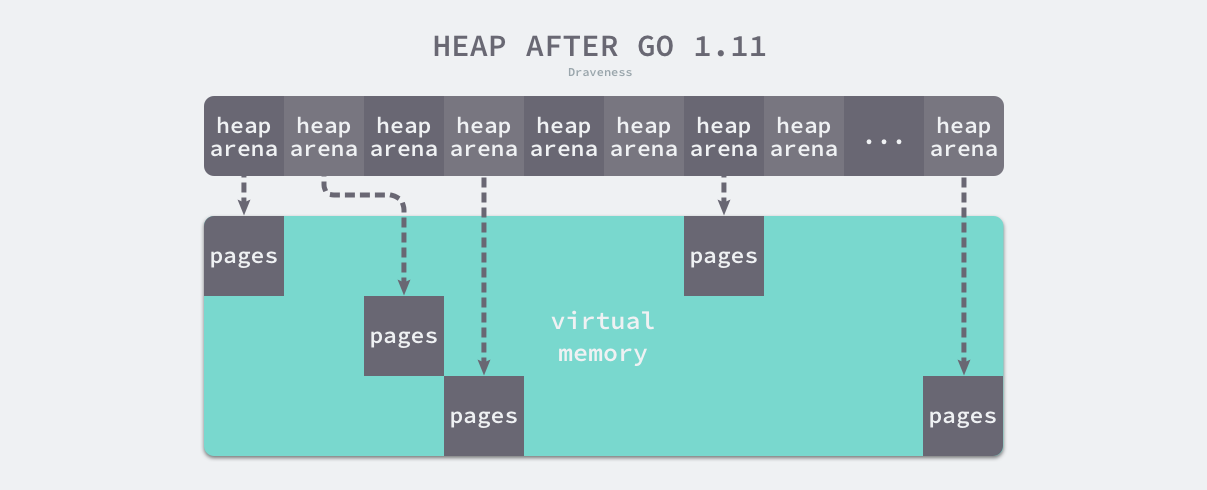

Sparse memory

Sparse memory is a solution proposed by the Go language in 1.11. Using a sparse memory layout not only removes the upper limit on heap size), but also solves address space conflicts when using a mix of C and Go. However, because memory management based on sparse memory loses the assumption of memory continuity, it also makes memory management more complex.

As shown above, the runtime uses a two-dimensional runtime.heapArena array to manage all memory, with each cell managing 64MB of memory space.

The bitmap and spans in this structure correspond to the bitmap and spans regions in linear memory, and the zeroedBase field points to the base address of the memory managed by this structure. The above design slices the original contiguous large memory into sparse small memory, and the meta-information used to manage this memory is also sliced into small pieces.

The size of the two-dimensional array may be completely different for different platforms and architectures; if our Go language service were running on Linux’s x86-64 architecture, the one-dimensional size of the two-dimensional array would be 1, while the two-dimensional size would be 4,194,304, and since each pointer takes up 8 bytes of memory space, the total size of the meta-information would be 32MB. since each runtime. heapArena manages 64MB of memory, the entire heap can manage up to 256TB of memory, which is several orders of magnitude more than the previous 512GB.

The Go team changed linear memory to sparse memory in version 1.11 with the following commits, removing the 512GB memory limit and the assumption of memory continuity in the heap area.

- runtime: use sparse mappings for the heap

- runtime: fix various contiguous bitmap assumptions

- runtime: make the heap bitmap sparse

- runtime: abstract remaining mheap.spans access

- runtime: make span map sparse

- runtime: eliminate most uses of mheap_.arena_*

- runtime: remove non-reserved heap logic

- runtime: move comment about address space sizes to malloc.go

The above changes have a slight impact on garbage collection as memory management becomes more complex, increasing the garbage collection overhead by about 1%, but this is the cost we have to pay to solve the existing problem.

Address space

Because all memory is ultimately requested from the operating system, the Go language runtime builds the operating system’s memory management abstraction layer, which divides the address space managed by the runtime into the following four states

| Status | Explanation |

|---|---|

| None | Memory is not reserved or mapped and is the default state of the address space |

| Reserved | Runtime holds this address space, but accessing this memory will result in an error |

| Prepared | Memory is reserved and generally does not have a physical memory counterpart. The behavior of accessing this memory is undefined and can be quickly transitioned to the Ready state |

| Ready | can be safely accessed |

Each different operating system will contain a specific set of methods for managing memory that will allow the memory address space to transition between different states, which we can understand in the following diagram.

The runtime contains several OS implementations of the state transition methods, all of which are contained in files starting with mem_. This section describes the Linux OS implementation of the methods in the above figure.

- runtime.sysAlloc will fetch a large chunk of free memory space from the operating system, which may be a few hundred KB or a few MB.

- runtime.sysFree will be called when a program runs out of memory (OOM) and return memory unconditionally.

- runtime.sysReserve reserves an area of memory in the operating system, access to which will trigger an exception.

- runtime.sysMap ensures that the memory region can be quickly transitioned to a ready state.

- runtime.sysUsed notifies the operating system that the application needs to use the memory area, ensuring that the memory area can be safely accessed.

- runtime.sysUnused notifies the operating system that the physical memory corresponding to the virtual memory is no longer needed and that the physical memory can be reused.

- runtime.sysFault converts the memory region into a reserved state, mainly for runtime debugging.

The runtime uses system calls such as mmap, munmap and madvise provided by Linux to implement the memory management abstraction layer of the operating system, smoothing out the differences between different operating systems and providing a more convenient interface for the runtime.

Memory management components

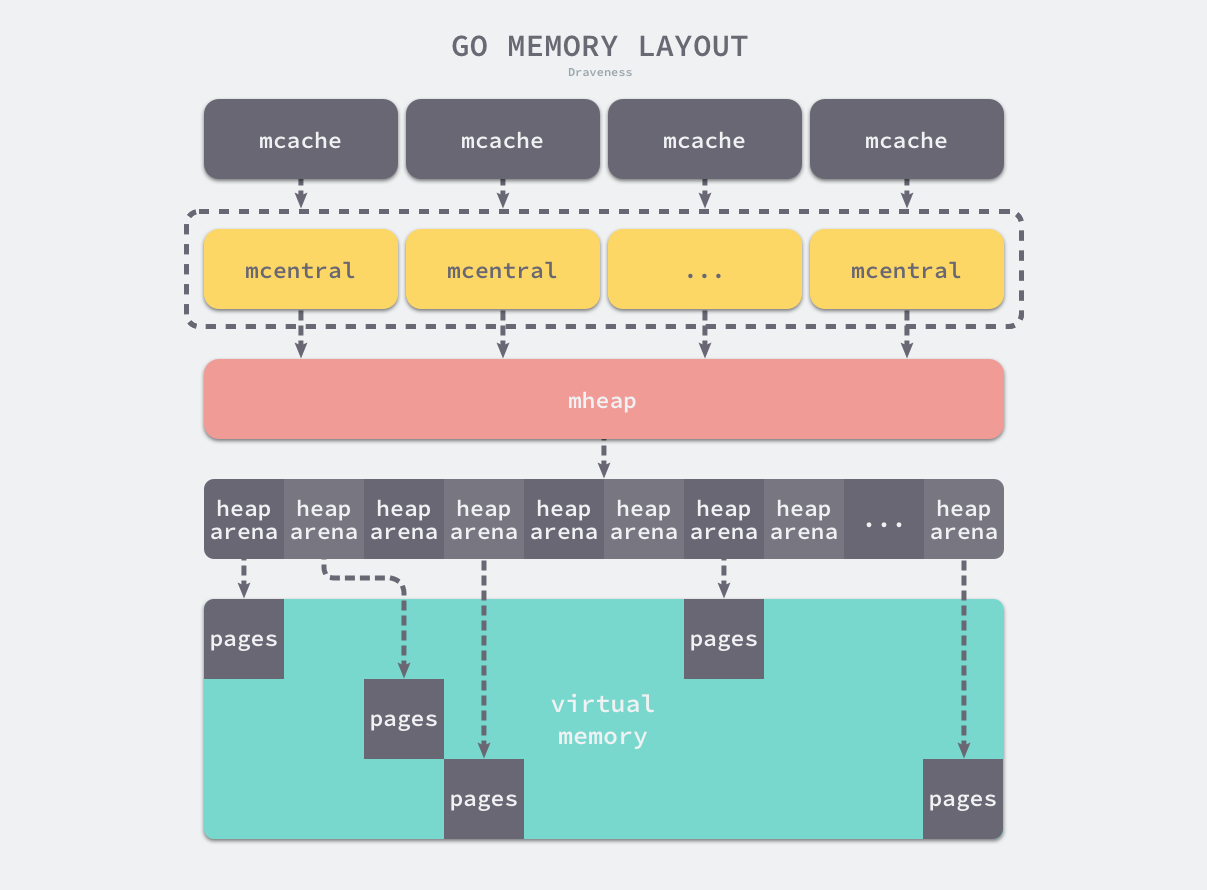

The memory allocator in Go contains several important components: memory management unit, thread cache, central cache, and page heap. This section will introduce the data structures runtime.mspan, runtime.mcache, runtime.mcentral, and runtime.mheap that correspond to these most important components. We will describe in detail their role in the memory allocator and their implementation.

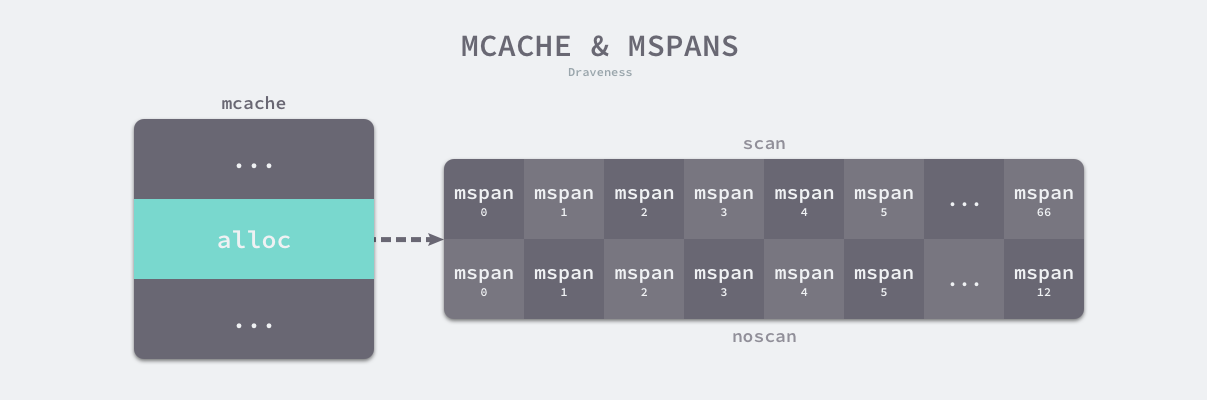

All Go language programs initialize the memory layout shown above at startup. Each processor allocates a thread cache, runtime.mcache, to handle the allocation of micro- and small objects, and they hold the memory management unit runtime.mspan.

Each type of memory management unit manages objects of a specific size, and when there are no free objects in the memory management unit, they fetch new memory units from the 134 central cache runtime.mcentral held by runtime.mheap, which belongs to the global heap structure runtime.mheap and which will It requests memory from the operating system.

On an amd64 Linux operating system, runtime.mheap holds 4,194,304 runtime.heapArena, each of which manages 64MB of memory, for a maximum of 256TB of memory for a single Go language program.

Memory management unit

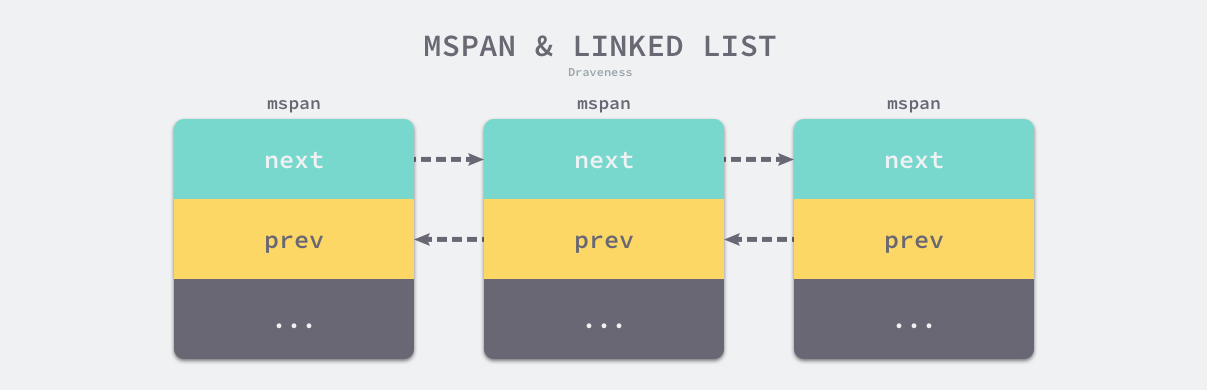

runtime.mspan is the basic Go language memory management unit. The structure contains two fields, next and prev, which point to the previous and next runtime.mspan, respectively.

The above structures are concatenated to form a bi-directional chain table as follows. The runtime.mSpanList stores the head and tail nodes of the bi-directional chain table and uses them in the thread cache and the central cache.

Because adjacent management units refer to each other, we can access other nodes in a bidirectional chain table from any of the structures.

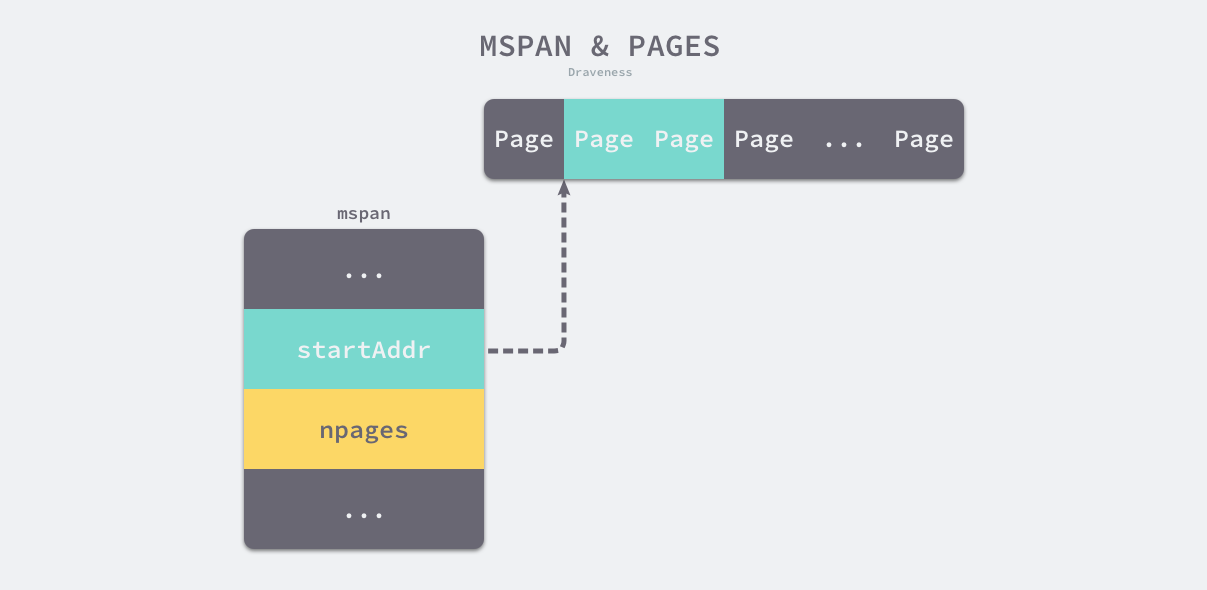

Pages and memory

Each runtime.mspan manages npages of 8KB in size, which are not OS memory pages, they are integer multiples of OS memory pages, and the structure will manage the allocation and reclamation of memory pages using the following fields.

- startAddr and npages - identifies the memory where the multiple pages managed by this structure are located, each with a size of 8KB.

- freeindex - the initial index of the free object in the scanned page.

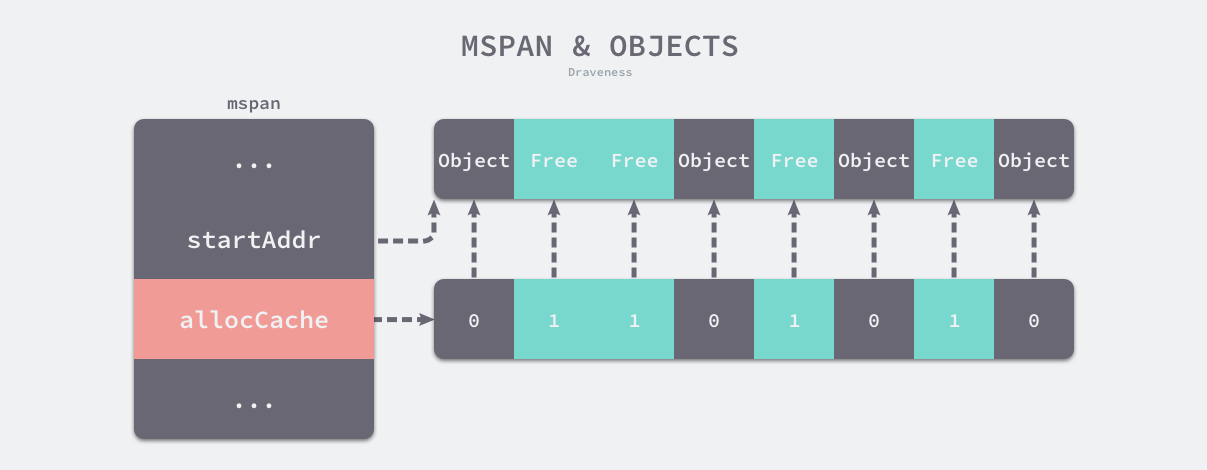

- allocBits and gcmarkBits - used to mark memory occupancy and recycling, respectively.

- allocCache - the complement of allocBits, which can be used to quickly find unused memory in memory.

runtime.mspan takes two different views of managed memory, and when a structure is running low on managed memory, the runtime will request memory from the heap on a page-by-page basis.

When a user program or thread requests memory from runtime.mspan, it uses the allocCache field to quickly find the space to be allocated in managed memory on an object-by-object basis.

If we can find a free memory cell in memory it is returned directly, when memory does not contain free memory, the higher level component runtime.mcache updates the memory management cell for the call to runtime.mcache.refill to meet the need to allocate memory for more objects.

state

The runtime uses runtime.mSpanStateBox to store the state of the memory management unit runtime.mSpanState

This state may be in four cases: mSpanDead, mSpanInUse, mSpanManual and mSpanFree. When runtime.mspan is in the free heap, it will be in the mSpanFree state; when runtime.mspan has been allocated, it will be in the mSpanInUse, mSpanManual state, and the runtime will follow the following rules to convert this state.

- Possible conversion from mSpanFree to mSpanInUse and mSpanManual during any phase of garbage collection.

- Possible conversion from mSpanInUse and mSpanManual to mSpanFree during the purge phase of garbage collection.

- may not be converted from mSpanInUse and mSpanManual to mSpanFree during the mark phase of garbage collection.

The operation to set the runtime.mspan state must be atomic to avoid the thread contention problem caused by garbage collection.

Span Classes

runtime.spanClass is the spanning class of [runtime.mspan that determines the size and number of objects stored in the memory management unit.

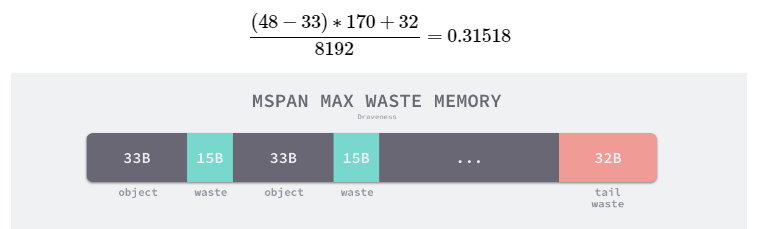

The Go memory management module contains 67 spanning classes, each of which stores a specific size of object and contains a specific number of pages and objects, all of which are preselected and stored in variables such as runtime.class_to_size and runtime.class_to_allocnpages.

| class | bytes/obj | bytes/span | objects | tail waste | max waste |

|---|---|---|---|---|---|

| 1 | 8 | 8192 | 1024 | 0 | 87.50% |

| 2 | 16 | 8192 | 512 | 0 | 43.75% |

| 3 | 24 | 8192 | 341 | 0 | 29.24% |

| 4 | 32 | 8192 | 256 | 0 | 46.88% |

| 5 | 48 | 8192 | 170 | 32 | 31.52% |

| 6 | 64 | 8192 | 128 | 0 | 23.44% |

| 7 | 80 | 8192 | 102 | 32 | 19.07% |

| … | … | … | … | … | … |

| 67 | 32768 | 32768 | 1 | 0 | 12.50% |

The above table shows the size, number of objects stored, and memory space wasted for 67 span classes ranging in size from 8B to 32KB. Take the fourth span class in the table as an example, the maximum object size in runtime.mspan with span class 5 is 48 bytes, manages 1 page, and can store up to 170 objects. Because memory needs to be managed by page, 32 bytes of memory are wasted at the end, and when the objects stored in the page are all 33 bytes, at most 31.52% of the resources are wasted.

In addition to the 67 span classes mentioned above, the runtime also contains a special span class with ID 0, which can manage special objects larger than 32KB. We will describe the allocation process for large objects in more detail later, so we will not expand on it here.

In addition to storing the ID of the class, the span class also stores a noscan token bit that indicates whether the object contains a pointer. We can understand the underlying storage of IDs and tokens by looking at the following functions and methods.

runtime.spanClass is an integer of type uint8, the first 7 bits of which store the ID of the span class and the last bit indicates whether it contains a pointer or not. The two methods provided by this type can help us get the corresponding field quickly.

Thread cache

runtime.mcache is the thread cache in Go language, it will be bound to the processor on the thread one by one, mainly used to cache tiny objects requested by the user program. Each thread cache holds 68 * 2 runtime.mspan, and these memory management units are stored in the alloc field of the structure.

The thread cache is not initialized with runtime.mspan, and only when the user application requests memory does it get a new runtime.mspan from a higher-level component to meet the memory allocation requirements.

initialization

The runtime initializes the processor by calling runtime.allocmcache, which initializes the new runtime.mcache structure on the system stack using the thread cache allocator in runtime.mheap.

|

|

As we mentioned above, all runtime.mspan in the initialized runtime.mcache is the empty placeholder emptymspan.

replacement

runtime.mcache.refill will acquire a memory management unit for the thread cache of the specified span class. The unit being replaced cannot contain free memory space, and the acquired unit needs to contain at least one free object for memory allocation:

As shown in the code above, this method requests a new runtime.mspan from the central cache to be stored in the thread cache, which is the only way to insert a memory management unit into the thread cache.

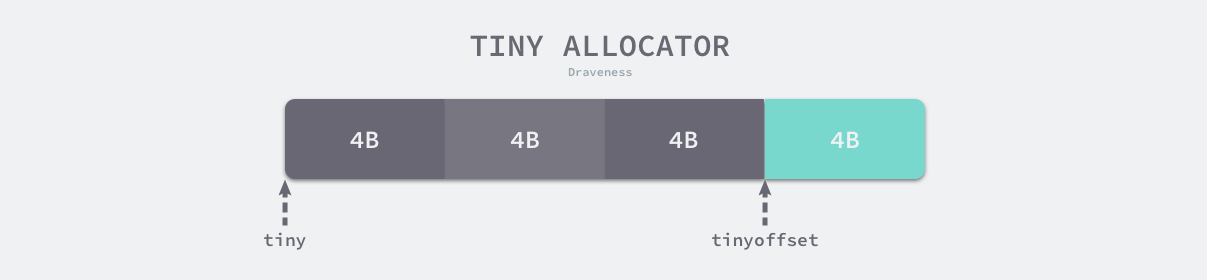

microallocators

The thread cache also contains several fields for allocating micro-objects. The following three fields make up the micro-object allocator, which is dedicated to managing objects of less than 16 bytes.

The microallocator will only be used to allocate non-pointer types of memory. tiny of the three fields above will point to a slice of memory in the heap, tinyOffset is the offset where the next free memory is located, and the final local_tinyallocs will keep track of the number of objects allocated in the memory allocator.

Central cache

runtime.mcentral is the central cache of the memory allocator. Unlike the thread cache, access to the memory management units in the central cache requires the use of a mutex lock:

Each central cache manages memory management units for a span class, and it holds both runtime.spanSet, which stores memory management units containing free objects and those not containing free objects.

Memory Management Unit

The thread cache will get the new memory management unit through the runtime.mcentral.cacheSpan method of the central cache, which has a complex implementation that we can divide into the following parts.

- call runtime.mcentral.partialSwept to find a usable memory management unit from a cleared runtime.spanSet structure containing free space.

- call runtime.mcentral.partialUnswept to find a memory management unit that can be used from the uncleared runtime.spanSet structure that has free objects.

- calling runtime.mcentral.fullUnswept to get the memory management unit from an uncleared runtime.spanSet that does not contain free space and clearing its memory space with runtime.mspan.sweep.

- calling runtime.mcentral.grow to request a new memory management unit from the heap.

- updating fields such as allocCache of the memory management unit to help allocate memory quickly.

First we look for available runtime.mspan in the free set of the central cache, and the runtime will always start by fetching the cleaned memory management units first and checking the uncleared memory management units later.

|

|

When a memory unit is found that needs to be reclaimed, the runtime triggers runtime.mspan.sweep to clean it up. If no managed unit is found in the set containing free space, then the runtime attempts to fetch from the uncleared set.

|

|

If runtime.mcentral does not find a free unit through either of these stages, it calls runtime.mcentral.grow to trigger an expansion to request new memory from the heap.

Regardless of the method through which the memory cell is acquired, the method ends by updating the fields such as allocBits and allocCache of the memory cell, allowing the runtime to quickly find free objects when allocating memory.

Scaling

The central cache’s expand method, runtime.mcentral.grow, gets the number of pages to be allocated and the span class based on the pre-calculated class_to_allocnpages and class_to_size and calls runtime.mheap.alloc to get the new runtime.mspan structure.

|

|

After getting runtime.mspan, we initialize the limit field and clear the bitmap corresponding to this structure on the heap in the above method.

Page heap

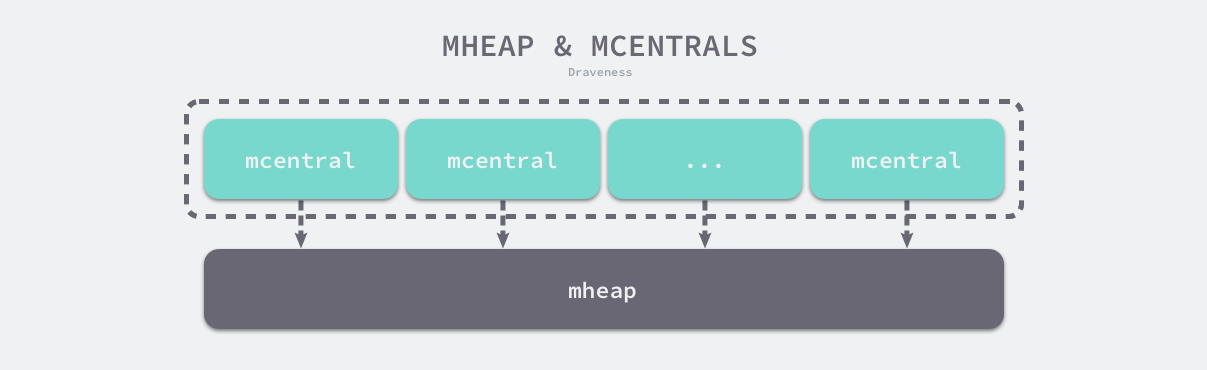

runtime.mheap is the core structure for memory allocation, which is stored as a global variable by Go language programs, and all objects initialized on the heap are managed by this structure. This structure contains two very important sets of fields, one of which is the global central cache list central, and the other is the arenas and related fields that manage the memory area of the heap.

The page heap contains a runtime.mcentral array of length 136, of which 68 are central caches for span classes that require scan, and the other 68 are central caches for noscan.

We have already described in the Design Principles section that all memory space in Go is managed by a two-dimensional matrix runtime.heapArena as follows, which can be discontinuous.

In 64-bit operating systems other than Windows, each runtime.heapArena manages 64MB of memory space. The following table shows the size of the heap area managed by Go language programs on different platforms and the memory space occupied by runtime.heapArena.

| Platform | Address Bits | Arena Size | One Dimensional Size | Two Dimensional Size |

|---|---|---|---|---|

| */64-bit | 48 | 64MB | 1 | 4M (32MB) |

| windows/64-bit | 48 | 4MB | 64 | 1M (8MB) |

| */32-bit | 32 | 4MB | 1 | 1024 (4KB) |

| */mips(le) | 31 | 4MB | 1 | 512 (2KB) |

This section describes the initialization of the page heap, memory allocation, and memory management unit allocation processes that help us understand how the global variable page heap relates to other components and the way it manages memory.

Initialization

The heap area is initialized using the runtime.mheap.init method, which we can see initializes a very large number of structures and fields, but two of the more important types of variables are initialized.

- spanalloc, cachealloc, and arenaHintAlloc free chain table allocators of type runtime.fixalloc.

- central cache of type runtime.mcentral in the central slice.

|

|

The multiple free link allocator initialized in the heap is not much different from the allocator mentioned in the design principles. When we call runtime.fixalloc.init to initialize the allocator, we need to pass in information such as the size of the structure to be initialized, which will help the allocator to partition the memory to be allocated, and it provides the following two methods for allocating and releasing memory.

- runtime.fixalloc.alloc - get the next free memory space.

- runtime.fixalloc.free - free the memory space pointed to by the pointer.

In addition to these free chain table allocators, we will also initialize all the central caches in this method. These central caches will maintain the global memory management units, and individual threads will fetch new memory units through the central caches.

Memory management units

runtime.mheap is the core component of the memory allocator and will fetch new runtime.mspan units on the system stack via its runtime.mheap.alloc method.

To stop the large memory footprint and heap growth, we need to call the runtime.mheap.reclaim method to reclaim a portion of memory before allocating the corresponding number of pages, and then the runtime allocates a new memory management unit via runtime.mheap.allocSpan, the execution of which we will split into two parts.

- allocate a new memory page and memory management unit runtime.mspan from the heap.

- initialize the memory management unit and add it to the list of memory units held by runtime.mheap.

First we need to request the number of npages of memory pages on the heap and initialize runtime.mspan.

|

|

The above methods request memory from the heap through either the processor’s page cache, runtime.pageCache, or the global page allocator, runtime.pageAlloc.

- if the requested memory is relatively small, obtains the processor requesting the memory and attempts to call runtime.pageCache.alloc to obtain the base address and size of the memory area.

- if the requested memory is large or the thread is running low on memory in the page cache, it will request memory on the page heap via runtime.pageAlloc.alloc.

- if insufficient memory is found on the page heap, attempts to expand and re-call runtime.pageAlloc.alloc to request memory via runtime.mheap.grow.

- if the memory is requested, it means the expansion is successful.

- if no memory is requested, it means that the expansion failed and the host may not have free memory and will simply abort the current program when it runs.

Whichever way the memory page is obtained, we allocate the new runtime.mspan structure in this function; the remainder of the method initializes its multiple fields with parameters such as the number of pages, memory space, and the span class.

|

|

In the above code, we initialize the just-allocated runtime.mspan structure by calling runtime.mspan.init to set the parameters and establish the connection between the page heap and the memory cell via runtime.mheaps.setSpans.

expand

runtime.mheap.grow will request more memory space from the operating system. The number of pages passed in is aligned to get the desired memory size, and we can divide the execution of this method into the following parts.

- obtain the size of the memory space expected to be allocated and the base address of the memory from the number of pages passed in.

- calling runtime.mheap.sysAlloc to request more memory from the operating system if there is not enough space in the arena area.

- expanding the arena area held by runtime.mheap and updating the page allocator’s meta information.

- in some scenarios, calling runtime.pageAlloc.scavenge to reclaim free memory pages that are no longer in use.

During the page heap expansion, runtime.mheap.sysAlloc is the method used by the page heap to request virtual memory, and we will describe the implementation of this method in several parts. First, the method attempts to request memory in a pre-reserved area.

The above code calls runtime.linearAlloc.alloc of the linear allocator to request a usable space in the pre-reserved memory. If no space is available, we will try to expand it at the target address based on the arenaHints of the page heap.

|

|

runtime.sysReserve and runtime.sysMap are the core parts of the above code that request memory from the operating system and convert it to the Prepared state.

|

|

The runtime.mheap.sysAlloc method at the end initializes a new runtime.heapArena to manage the memory space just requested, which is added to the two-dimensional matrix of the page heap.

Memory allocation

All objects on the heap are allocated memory by calling the runtime.newobject function, which calls runtime.mallocgc to allocate memory space of the specified size, which is the mandatory function for user programs to request memory space on the heap.

|

|

The above code uses runtime.gomcache to get the thread cache and determine if the requested memory type is a pointer. We can see from this code snippet that runtime.mallocgc performs different allocation logic depending on the size of the object. As also described in the previous section, the runtime classifies objects into micro, small and large objects based on their size, and here different allocation logic is chosen based on size.

- micro-object (0, 16B) - use the micro-allocator first, then try to allocate memory using the thread cache, the central cache and the heap in that order.

- small objects [16B, 32KB] - try to allocate memory using the thread cache, the central cache and the heap, in that order.

- large objects (32KB, +∞) - allocate memory directly on the heap.

We will introduce the process of allocating micro-objects, small objects and large objects at runtime in turn to sort out the core execution flow of memory allocation.

Microobjects

The Go language runtime classifies objects smaller than 16 bytes as microobjects, and it improves the performance of microobject allocation using a microallocator on the thread cache, which we mainly use to allocate smaller strings and escaped temporary variables. The microallocator can combine multiple smaller memory allocation requests into the same memory block, and the whole memory may be reclaimed only when all objects in the memory block need to be reclaimed.

The objects managed by the microallocator cannot be of pointer type. The size of the memory block for managing multiple objects, maxTinySize, is adjustable, and by default the memory block size is 16 bytes. The larger the value of maxTinySize, the higher the possibility of combining multiple objects and the more memory is wasted; the smaller the maxTinySize, the less memory is wasted. However, no matter how you tune it, a multiple of 8 is a good choice.

As shown above, the microallocator has allocated 12 bytes of objects in a 16-byte block of memory. If the next object to be allocated is less than 4 bytes, it will use the remainder of the above block directly, reducing memory fragmentation, although the block will only be reclaimed if all objects are marked as garbage.

The tiny field in the thread cache runtime.mcache points to a block of size maxTinySize, which will be fetched and returned by the runtime with the base address and offset if the current block still contains free memory of the right size.

|

|

When the memory block does not contain free memory, this code below will first find the memory management unit runtime.mspan corresponding to the span class from the thread cache and call runtime.nextFreeFast to get the free memory; when there is no free memory, we will call runtime.mcache.nextFree to get the free memory from the central cache or the page heap to get an allocatable block of memory:

|

|

After acquiring the new free memory block, the above code clears the data in the free memory, updates the tiny and tinyoffset fields that make up the micro-object allocator, and returns the new free memory.

Small objects

Small objects are objects of size 16 bytes to 32,768 bytes and all objects of pointer type less than 16 bytes, the allocation of small objects can be divided into the following three steps.

- determine the size of the allocated object and the span class runtime.spanClass.

- fetching memory management units from the thread cache, central cache, or heap and finding free memory space from the memory management units.

- calling runtime.memclrNoHeapPointers to clear all data in free memory.

Determining the size of the object to be allocated and the span class requires the use of the pre-calculated size_to_class8, size_to_class128 and class_to_size dictionaries, which help us to quickly obtain the corresponding values and construct runtime.spanClass.

|

|

In the above code snippet, we will focus on the implementation of two methods, runtime.nextFreeFast and runtime.mcache.nextFree, which help us to get free memory space. runtime.nextFreeFast uses the memory management unit’s allocCache field in the memory management unit to quickly find the number of bits in that field that are 1. We described above that 1 means that the memory space corresponding to that bit is free.

|

|

Once we find a free object, we can update the allocCache, freeindex, etc. fields of the memory management unit and return that piece of memory; if we don’t find a free memory, the runtime will find a new memory management unit via runtime.mcache.nextFree.

|

|

In the above method, if we do not find an available memory management unit in the thread cache, we replace the structure that no longer has an available object with a memory management unit from the central cache via runtime.mcache.refill as described earlier, which calls runtime.mspan.nextFreeIndex of the new structure to get free memory and returns it.

Large objects

Large objects larger than 32KB are handled separately by the runtime. Instead of fetching memory management units from the thread cache or central cache, we call runtime.mcache.allocLarge to allocate large chunks of memory directly.

|

|

runtime.mcache.allocLarge calculates the number of pages needed to allocate the object, and it requests memory on the heap in multiples of 8KB.

|

|

The memory request creates a runtime.spanClass with a span class of 0 and calls runtime.mheap.alloc to allocate a management unit to manage the corresponding memory.

Summary

Memory allocation is the core logic of Go language runtime memory management. The runtime memory allocator uses a TCMalloc-like allocation strategy to classify objects according to their size and designs multi-level components to improve the performance of the memory allocator. This section not only introduces the design and implementation principles of memory allocators in Go language, but also introduces common designs of memory allocators to help us understand the different choices made by different programming languages when designing memory allocators.

Although the memory allocator is very important, it only solves the problem of how to allocate memory. We have omitted a lot of code related to garbage collection in this section and have not analyzed the implementation principles of runtime garbage collection, in the next section we will analyze the design and implementation principles of Go language garbage collection in detail.