I remember that a dozen or so years ago, when I was still using early versions of Windows, the system would periodically become very sluggish. You then had to open the disk defragmentation program that shipped with the system, and once defragmentation finished, the system felt slightly smoother.

In a file system, defragmentation is the process of reducing fragmentation. It rearranges the contents of each file so that they are stored contiguously and in order on the disk, and compacts the files to remove the gaps between them, somewhat like the mark-compact algorithm in garbage collection.
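The rearranging and compacting described above can be sketched in a few lines. This is only a toy model under stated assumptions: the disk is a Python list of clusters, each used cluster is a hypothetical `(file_name, block_index)` tuple, and `None` marks a free cluster. A real defragmenter also has to move the data safely and update the file system's metadata, which this sketch ignores.

```python
def defragment(disk):
    """Return a new layout in which every file is contiguous and in order.

    Used clusters are sorted by (file_name, block_index); ordering files by
    name is an arbitrary choice made for this sketch. All free clusters
    (None) end up at the end of the disk.
    """
    used = sorted(cluster for cluster in disk if cluster is not None)
    return used + [None] * (len(disk) - len(used))


# Hypothetical fragmented layout: files A and B are interleaved with gaps.
disk = [("A", 0), ("B", 1), None, ("B", 0), ("A", 1), None]
compacted = defragment(disk)
print(compacted)
```

After the call, the blocks of A come first, followed by the blocks of B, and the two free clusters sit together at the end.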

I have not used Windows for many years, and have been using macOS since college, seven or eight years now. I don't know whether Windows still needs disk defragmentation today, but there is no similar tool on either Linux or macOS, which made me want to look into the reasons behind this. In summary, there are two main reasons why operating systems need defragmentation.

- The design of the file system leaves many fragments behind after resources are released.

- The random read and write performance of mechanical hard drives is several orders of magnitude worse than their sequential read and write performance.

File System

Early Windows used a very simple file system, the File Allocation Table (FAT), whose design was the root cause of disk fragmentation. Before analyzing that design, let's briefly go over the history of this file system.

FAT was originally designed in 1977 for floppy disks, a very old storage medium whose drives have largely disappeared from today's computers. Floppy disks had very little storage space at that time, and each time a file was written, the file system would search for available space from the beginning of the disk without checking how large that free space was.

Not long after the file system came into use, mechanical hard disk drives (HDDs) became cheaper and more widely used, and Microsoft chose to extend FAT to support larger capacities in DOS and the Windows 9x series. Disk-sensitive applications such as databases also quickly became very popular.

Fragmentation could occur on every write, but this was acceptable at the time because floppy disks had so little storage space. As storage media grew larger, random writes had to be introduced to improve efficiency, and the FAT variants that supported random writes were still simple file systems.

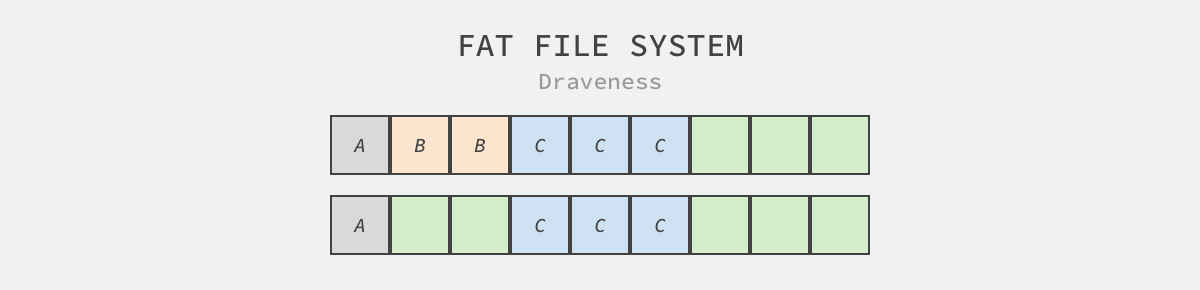

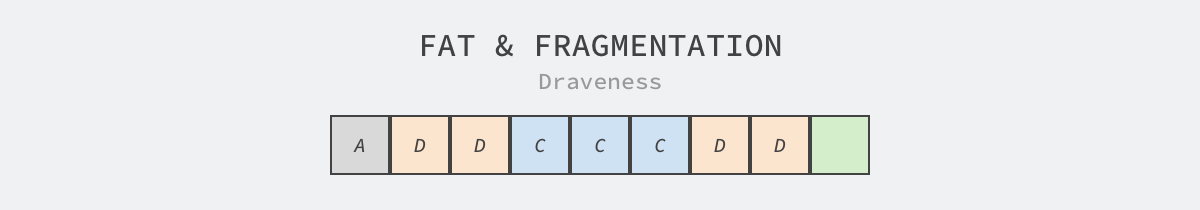

As you can see above, if we write multiple files A, B, and C to a new hard drive, the FAT file system stores them sequentially, without any fragmentation between them. However, if we then delete file B and write a larger file D to the file system, a more interesting situation arises.

The FAT file system finds the two free spots left on the disk after deleting B and writes part of file D into them, then finds more free space after file C and writes the rest of file D there. As a result, file D is scattered across the drive, and reading it triggers multiple random reads.
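The scenario above can be reproduced with a small simulation. This is a minimal sketch, not FAT's actual on-disk format: the disk is assumed to be a list of 12 clusters, file sizes are made up for illustration, and `write_file`, `delete_file`, and `extents` are hypothetical helper names. The only behavior borrowed from the text is that a write scans from the start of the disk and takes free clusters as it finds them, without checking whether they are contiguous.

```python
FREE = "."  # marker for an unused cluster


def write_file(disk, name, size):
    """Take the first `size` free clusters found when scanning from the start."""
    free = [i for i, owner in enumerate(disk) if owner == FREE]
    if len(free) < size:
        raise ValueError("not enough free clusters")
    for i in free[:size]:
        disk[i] = name


def delete_file(disk, name):
    """Mark every cluster owned by `name` as free again."""
    for i, owner in enumerate(disk):
        if owner == name:
            disk[i] = FREE


def extents(disk, name):
    """Return (start, length) for each contiguous run of clusters owned by `name`."""
    runs, start = [], None
    for i, owner in enumerate(disk + [None]):  # sentinel closes a trailing run
        if owner == name and start is None:
            start = i
        elif owner != name and start is not None:
            runs.append((start, i - start))
            start = None
    return runs


disk = [FREE] * 12
write_file(disk, "A", 3)
write_file(disk, "B", 2)
write_file(disk, "C", 3)
layout_before = "".join(disk)   # AAABBCCC....

delete_file(disk, "B")
write_file(disk, "D", 5)        # larger than the hole left by B
layout_after = "".join(disk)    # AAADDCCCDDD.
d_extents = extents(disk, "D")  # [(3, 2), (8, 3)]

print(layout_before, layout_after, d_extents)
```

File D ends up in two separate extents, one in the hole left by B and one after C, so reading it sequentially requires at least one extra seek.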

FAT is a very simple, primitive file system that looks poorly designed and implemented from today's point of view. Not only does it cause file fragmentation by not checking the size of free space each time a file is written, it also has no built-in fragmentation management, so over time the user has to trigger disk defragmentation manually. This is a very poor design and user experience.

Mechanical Hard Disk Drive

A mechanical hard disk drive (HDD) is an electromechanical, non-volatile data storage device that uses magnetic storage to store and retrieve data on rotating platters. When data is read or written, the head attached to the drive's mechanical arm reads and writes bits on the platter surface.

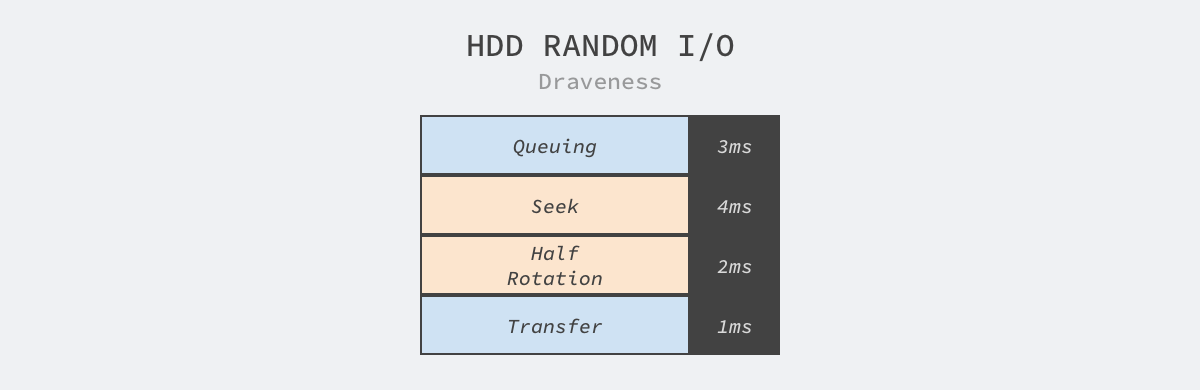

Because a disk has a complex mechanical structure, reading and writing take a long time, and the read and write performance of a database depends largely on the performance of the disk. If we query a random piece of data in a database stored on a mechanical hard disk, the query may trigger random disk I/O, and reading data from disk into memory is very expensive. An ordinary (non-SSD) disk has to go through queuing, seeking, rotation, and transfer to load the data, which takes about 10 ms.

When we read a file on disk, if its contents are scattered across different locations, several random I/Os may be needed to fetch the entire file, which is a significant additional overhead for a mechanical disk. If the contents of the file are stored contiguously, only one random I/O is needed when reading the file, and all subsequent reads can use sequential I/O at a speed of about 40 MB/s, which significantly reduces the time needed to read the file.
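A rough back-of-the-envelope calculation makes the difference concrete. The sketch below uses the two figures quoted above (about 10 ms per random I/O and about 40 MB/s for sequential reads); the 4 MB file size and the count of 100 fragments are assumptions chosen only for illustration, and the model ignores caching and request queuing.

```python
RANDOM_IO_SECONDS = 0.010    # ~10 ms per random I/O (figure from the text)
SEQUENTIAL_MB_PER_S = 40.0   # ~40 MB/s sequential read (figure from the text)


def read_time_seconds(file_mb, fragments):
    """Estimate read time: one random I/O per fragment plus sequential transfer."""
    seek_cost = fragments * RANDOM_IO_SECONDS
    transfer_cost = file_mb / SEQUENTIAL_MB_PER_S
    return seek_cost + transfer_cost


# Hypothetical 4 MB file, stored contiguously vs. split into 100 fragments.
contiguous = read_time_seconds(4, 1)
fragmented = read_time_seconds(4, 100)

print(f"contiguous: {contiguous:.2f} s")
print(f"fragmented: {fragmented:.2f} s")
```

Under these assumptions the contiguous file takes roughly 0.11 s to read while the fragmented one takes roughly 1.1 s, with almost all of the extra time spent on seeking and rotation rather than on transferring data.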

Fragmented files cause more serious performance problems on mechanical drives. Ideally we would like the disk to reach its upper limit of read/write bandwidth, but in practice frequent random I/Os cause the disk to spend most of its time seeking and rotating, preventing it from working at full capacity. An SSD, which is purely electronic, tolerates a fragmented file system better than a mechanical drive, and defragmenting it can actually shorten its lifespan.

Summary

I believe many engineers used Windows before entering the industry, since early Windows was almost the only choice for a desktop system. I have a rather special attachment to today's topic. Before studying file systems in operating systems, I had never thought about this question, until the term "file system fragmentation" reminded me of my experience more than a decade ago. That feeling of sudden clarity is one I still rarely experience today. Let's briefly summarize the two reasons why early Windows needed defragmentation.

- Early Windows systems used the simple FAT file system, in which frequent write and delete operations left large files scattered all over the disk.

- Mechanical hard drives were the dominant storage devices more than a decade ago, and their mechanical construction means that reading or writing random locations on the disk requires physical seeking and rotation, which makes the process extremely slow.