Kubernetes Ingress is just an ordinary resource object in Kubernetes. To resolve Ingress rules and expose services to the outside, it needs a corresponding Ingress Controller, such as ingress-nginx. That controller is essentially just an Nginx Pod that forwards requests to other services. This Pod is itself exposed through a Kubernetes Service, most commonly of type LoadBalancer.

Again, this article aims to give you a simple and clear overview of what is behind Kubernetes Ingress, so that Ingress is easier to understand when you use it.

Why should I use Ingress?

We can use Ingress to expose internal services outside the cluster. It saves valuable static IPs, because you don't need to declare multiple LoadBalancer services, and it allows for additional configuration. Here is a simple example to illustrate Ingress.

Simple HTTP server

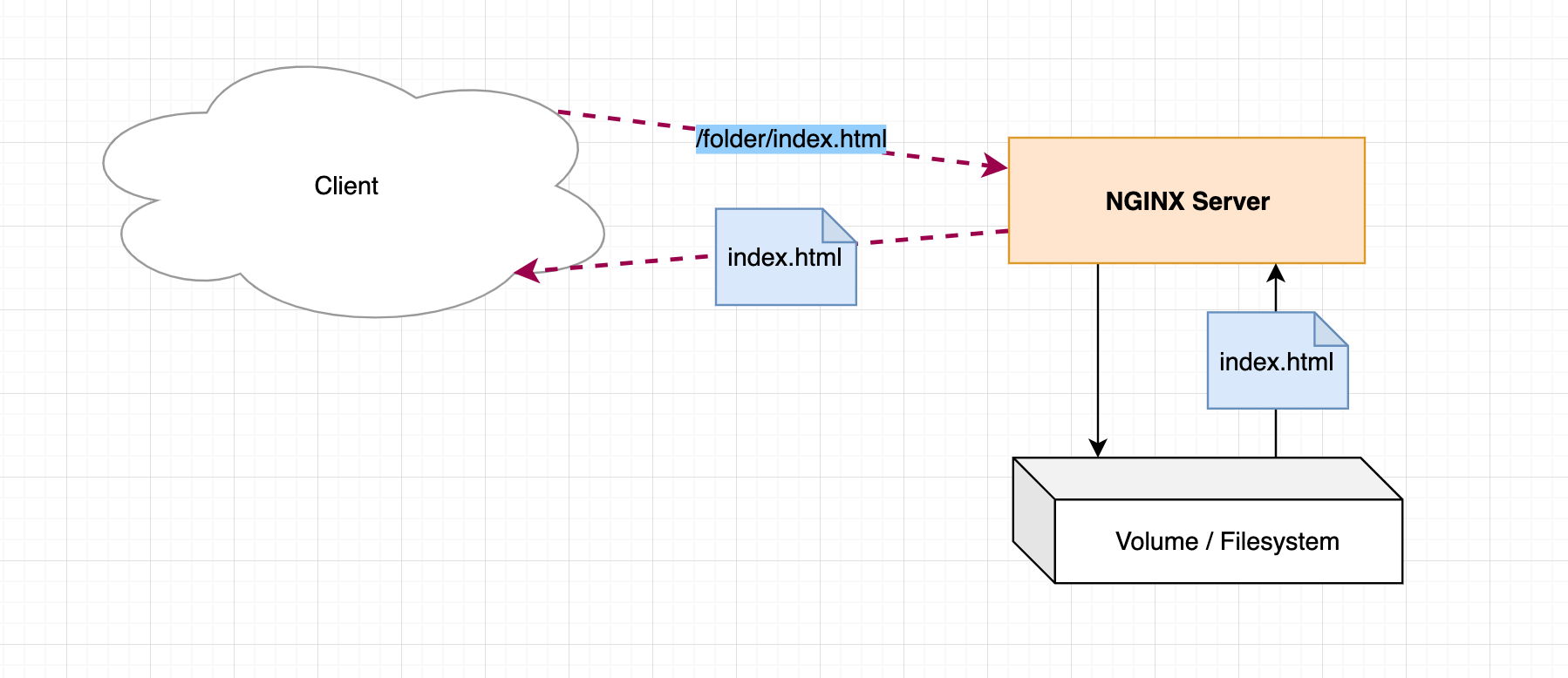

First, let’s go back to the days before containers and Kubernetes.

Back then, we used an HTTP server such as Nginx to host our services. It receives a request for a specific file path over HTTP, looks up that path in the filesystem, and returns the file if it exists.
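The lookup step can be sketched in a few lines of Python. This is a minimal illustration, not how Nginx is implemented: the function name `resolve_static` and the convention that `None` stands for a 404 response are assumptions made for the example.

```python
from pathlib import Path
from typing import Optional


def resolve_static(root: str, url_path: str) -> Optional[Path]:
    """Map a request path to a file below root; None means "respond with 404"."""
    base = Path(root).resolve()
    # Strip the leading "/" so the URL path is joined below the document root.
    candidate = (base / url_path.lstrip("/")).resolve()
    # Refuse paths that escape the root, such as "/../etc/passwd".
    if candidate != base and base not in candidate.parents:
        return None
    # Only existing regular files are served.
    return candidate if candidate.is_file() else None
```

For example, with a document root of `/var/www`, a request for `/index.html` resolves to `/var/www/index.html` if that file exists, and to `None` otherwise.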

For example, in Nginx, we can do this with the following configuration.

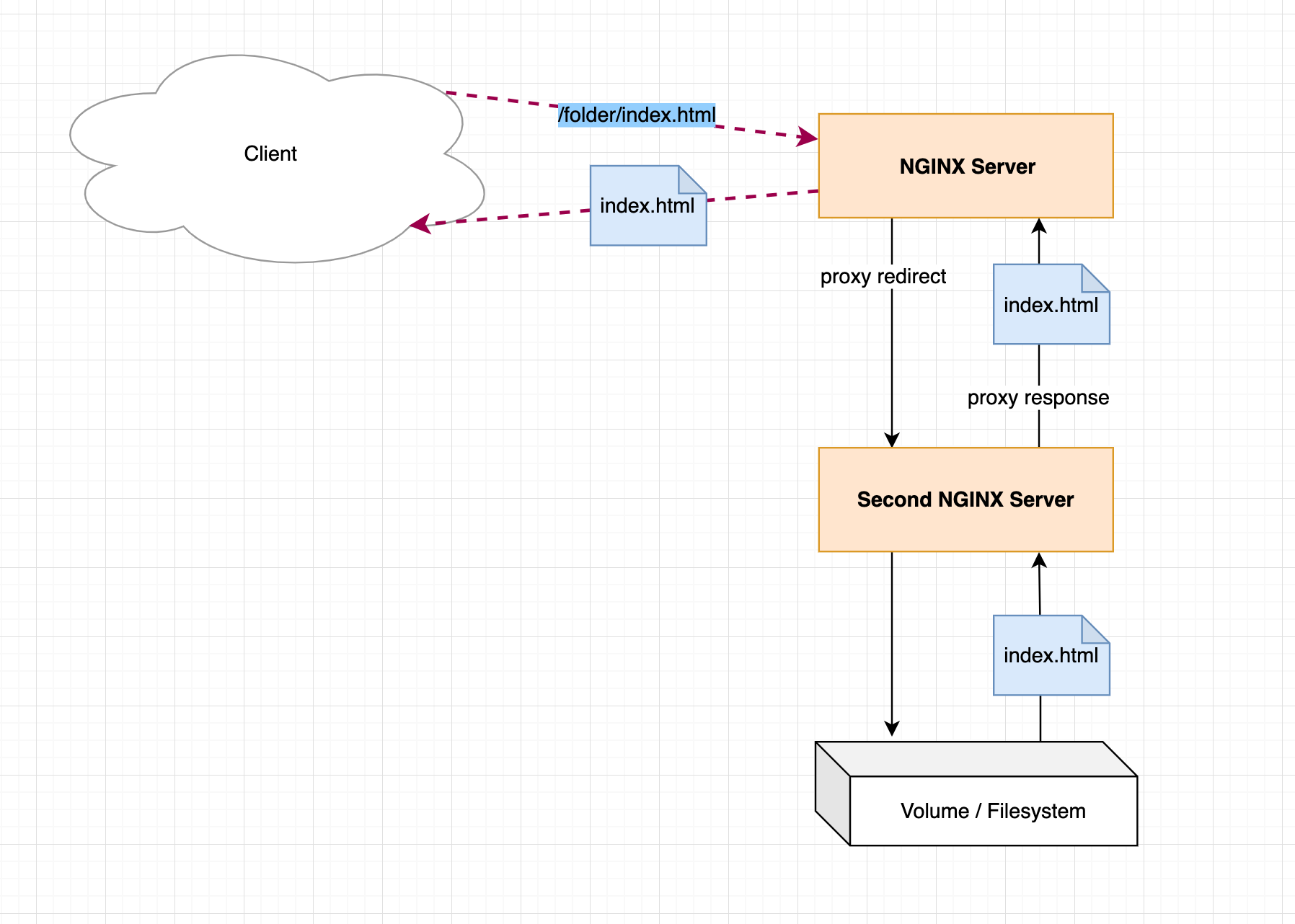

Besides serving files, when the HTTP server receives a request it can also forward that request to another server (meaning it acts as a proxy) and then relay that server's response back to the client. For the client, nothing changes: the result it receives is still the requested file (if it exists).

In Nginx, this kind of forwarding is configured with the `proxy_pass` directive.

This means that Nginx can either serve files from the filesystem or forward requests to other servers and return their responses as a proxy.
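To make the proxy behaviour concrete, here is a minimal reverse proxy built only on Python's standard library. It is a sketch, not Nginx, and it handles GET requests only; the helper names `make_proxy_handler` and `start_proxy`, the 5-second timeout, and the 502 response for an unreachable upstream are assumptions chosen for illustration.

```python
import http.client
import http.server
import threading


def make_proxy_handler(upstream_host: str, upstream_port: int):
    """Build a handler class that forwards every GET to one upstream server."""

    class ProxyHandler(http.server.BaseHTTPRequestHandler):
        def do_GET(self):
            conn = http.client.HTTPConnection(upstream_host, upstream_port, timeout=5)
            try:
                # Forward the original path (including any query string) unchanged.
                conn.request("GET", self.path)
                resp = conn.getresponse()
                status, body = resp.status, resp.read()
                content_type = resp.getheader("Content-Type", "application/octet-stream")
            except OSError:
                # Upstream unreachable: answer 502 as a proxy typically does.
                self.send_error(502, "Bad Gateway")
                return
            finally:
                conn.close()
            # Relay the upstream response; the client never talks to the upstream directly.
            self.send_response(status)
            self.send_header("Content-Type", content_type)
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, format, *args):
            pass  # keep the sketch quiet

    return ProxyHandler


def start_proxy(upstream_host: str, upstream_port: int, listen_port: int = 0):
    """Run the proxy in a background thread; port 0 lets the OS pick a free port."""
    server = http.server.HTTPServer(
        ("127.0.0.1", listen_port), make_proxy_handler(upstream_host, upstream_port)
    )
    threading.Thread(target=server.serve_forever, daemon=True).start()
    return server
```

Requesting a path from the proxy returns the upstream server's response unchanged, which is the "nothing changes for the client" property described above.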

A simple Kubernetes example

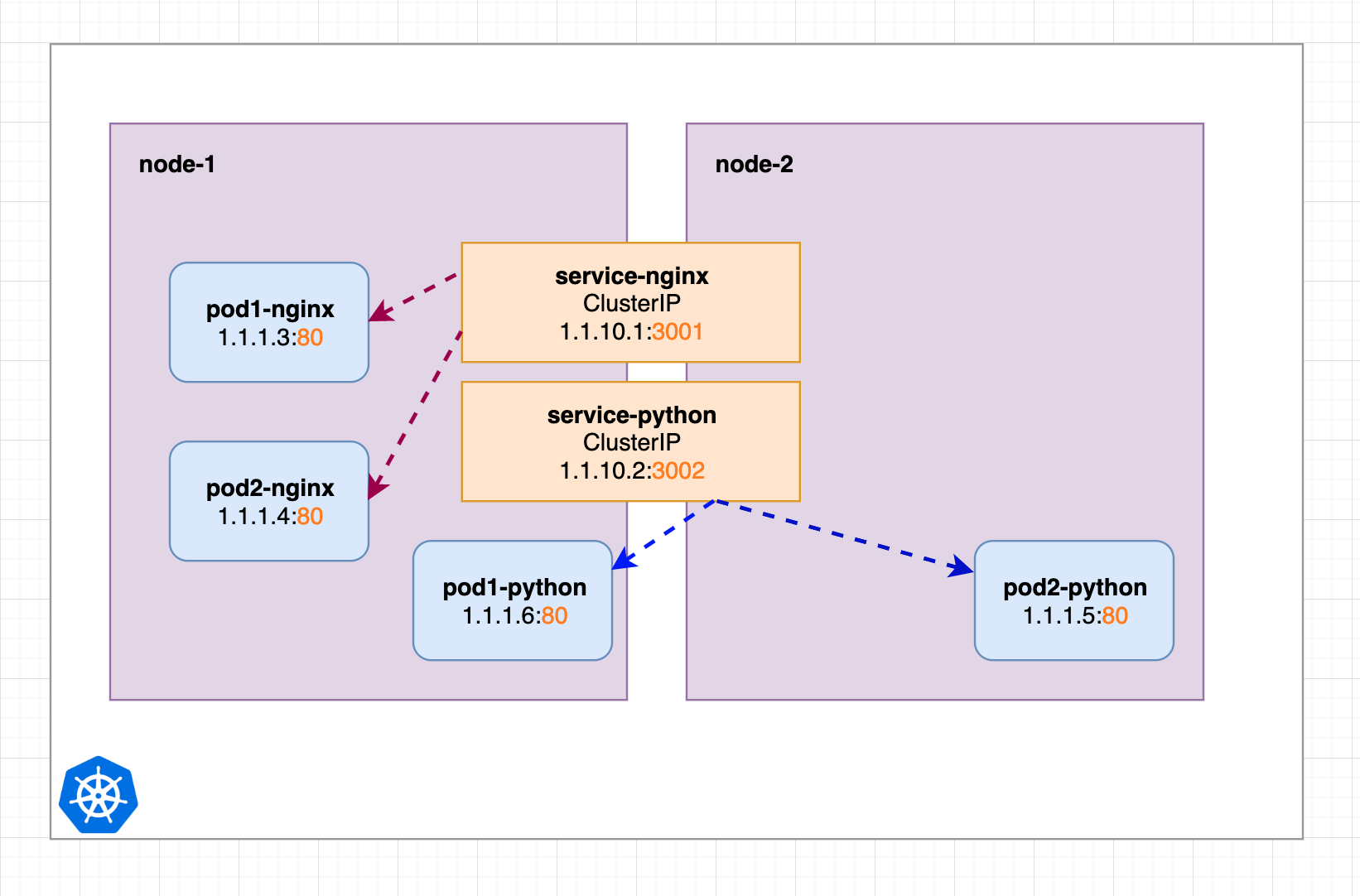

Using ClusterIP Services

After deploying an application in Kubernetes, we first expose it through a Kubernetes Service (explained in the previous article). For example, suppose we have two worker nodes and two Services, service-nginx and service-python, that point to different Pods. These Services are not tied to any specific node; the Pods behind them may run on any node, as shown in the figure.

Inside the cluster we can reach the Nginx Pods and Python Pods through their Services. Now we want to make these Services accessible from outside the cluster as well, and as mentioned before, that means converting them into LoadBalancer Services.

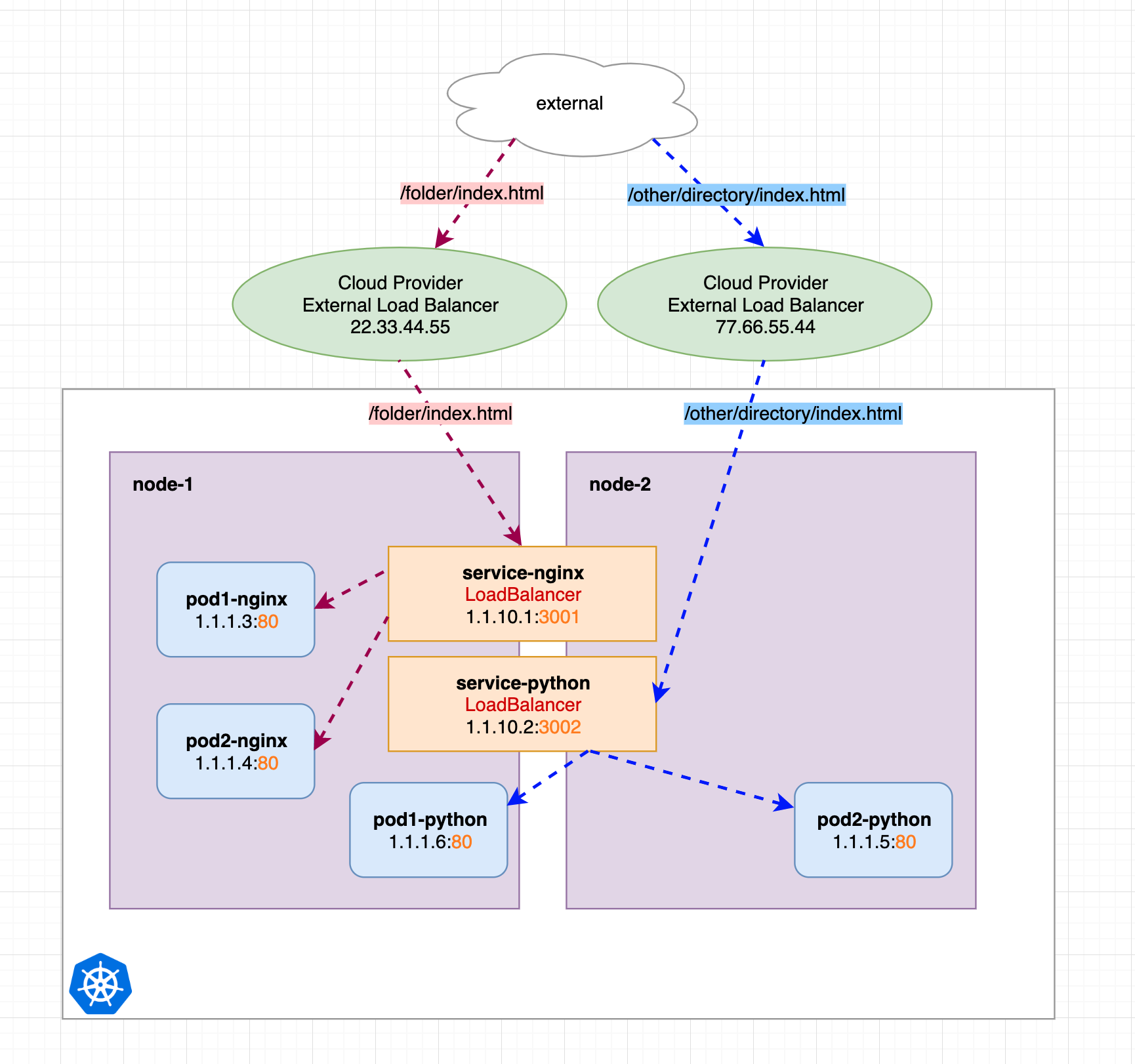

Using the LoadBalancer service

Of course, using LoadBalancer Services requires that our cluster's hosting provider supports them. If it does, we can convert the ClusterIP Services above into LoadBalancer Services. This creates two external load balancers that forward requests to our node IPs, from where they reach the internal ClusterIP Services.

We can see that each LoadBalancer has its own IP. A request sent to 22.33.44.55 is forwarded to the internal service-nginx Service, and a request sent to 77.66.55.44 is forwarded to the internal service-python Service.

This is really convenient, but be aware that IP addresses are scarce and not cheap. Imagine that our cluster has not just two Services but many: with one LoadBalancer per Service, the cost grows linearly with every Service we expose.

So is there another solution that allows us to use just one LoadBalancer to forward requests to our internal services? Let’s explore this first in a manual (non-Kubernetes) way.

Manually configure the Nginx proxy service

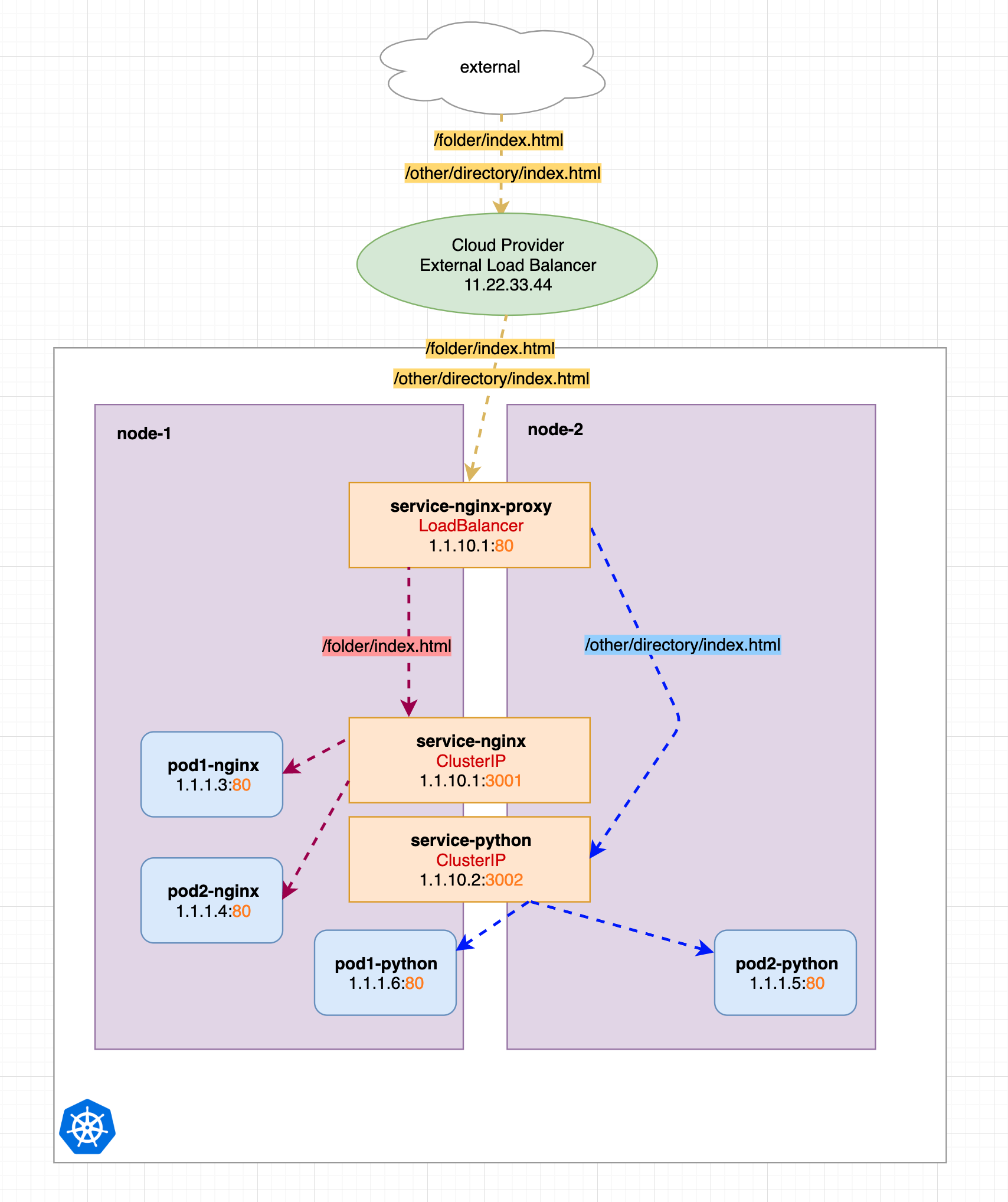

We know that Nginx can act as a proxy, so the obvious idea is to run an Nginx instance that proxies our services. As shown in the figure, we add a new Service called service-nginx-proxy, which is now our only LoadBalancer Service. service-nginx-proxy points to one or more Nginx Pod endpoints (not shown in the figure), while the other two Services are now plain ClusterIP Services.

We have assigned only one load balancer, with IP address 11.22.33.44. The yellow arrows mark different HTTP requests: they all have the same target but different request URLs.

The service-nginx-proxy service will decide which service they should redirect the request to based on the request URL.

In the image above there are two Services behind the proxy, marked in red and blue: red requests are forwarded to the service-nginx Service and blue requests to the service-python Service.
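The routing decision the proxy makes can be sketched as a prefix lookup. In this hypothetical route table, the paths `/folder` and `/other/directory` come from this article's examples, but which path goes to which Service is an assumption. The segment-wise matching mirrors the `Prefix` path type of Kubernetes Ingress rather than plain Nginx `location` matching.

```python
from typing import Dict, Optional

# Hypothetical route table: the paths come from this article, the pairing is assumed.
ROUTES: Dict[str, str] = {
    "/folder": "service-nginx",
    "/other/directory": "service-python",
}


def matches_prefix(prefix: str, path: str) -> bool:
    """Segment-wise prefix match: /folder matches /folder/a but not /folderx."""
    prefix = prefix.rstrip("/")
    return path == prefix or path.startswith(prefix + "/")


def route(path: str, routes: Dict[str, str] = ROUTES) -> Optional[str]:
    """Return the Service for the longest matching prefix, or None (404)."""
    candidates = [p for p in routes if matches_prefix(p, path)]
    if not candidates:
        return None
    return routes[max(candidates, key=len)]
```

Preferring the longest matching prefix lets a general rule such as `/` coexist with more specific ones.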

Currently, we have to configure the service-nginx-proxy service manually. For example, if we add a request path that must be routed to another Service, we have to update the Nginx configuration and reload it. This is a viable solution, just a bit of a pain.

Kubernetes Ingress is designed to make this configuration easier, smarter, and more manageable, so in Kubernetes clusters we use Ingress to expose services outside the cluster instead of the manual setup above.

Using Kubernetes Ingress

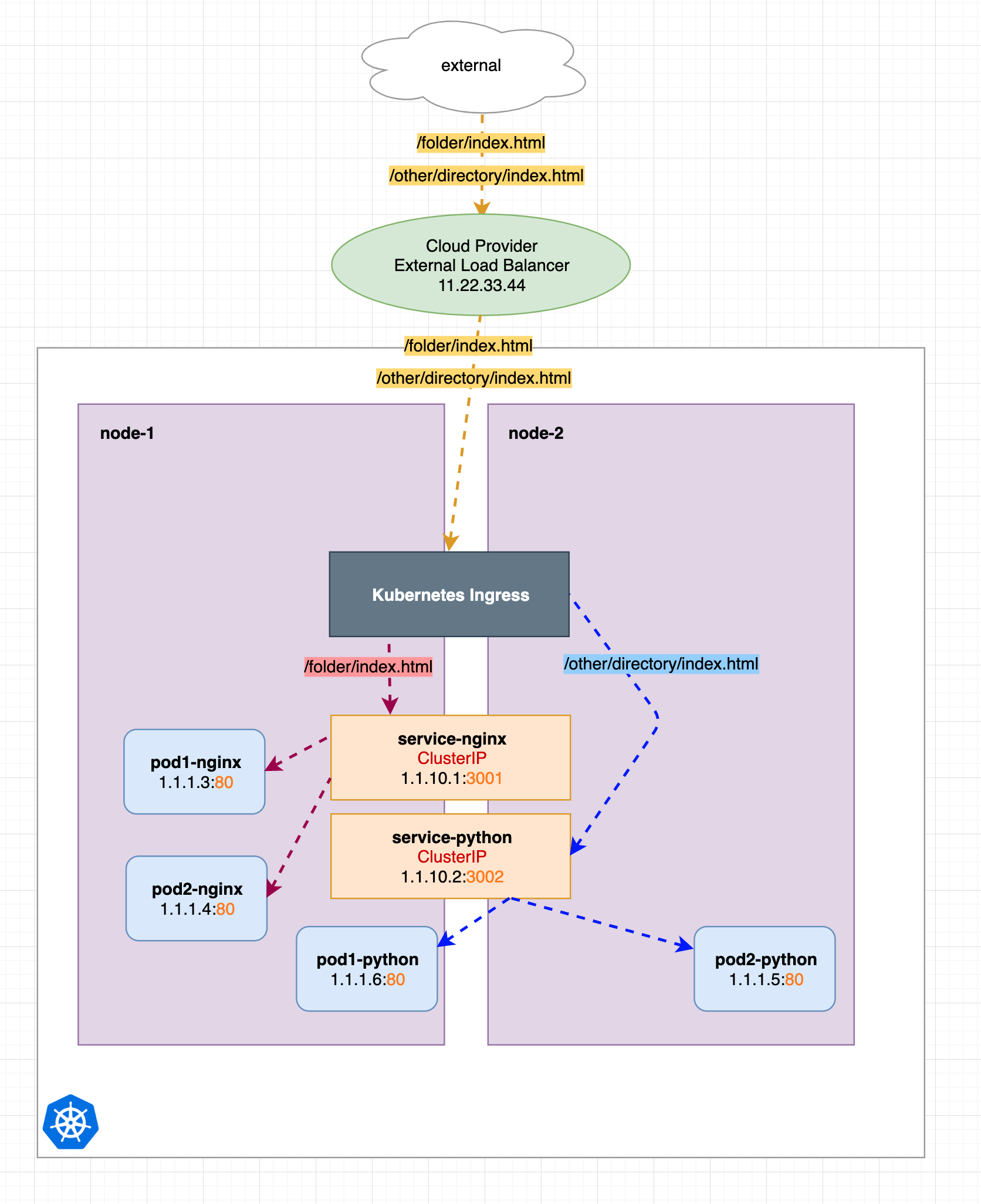

Now we switch from the manual proxy configuration above to the Kubernetes Ingress approach. As shown in the figure, we simply use a preconfigured Nginx (the Ingress controller) that already does all the proxy forwarding for us, which saves a lot of manual configuration work.

This actually explains what Kubernetes Ingress is, so let’s take a look at some configuration examples below.

Installing the Ingress Controller

Ingress is just a resource object in Kubernetes in which we configure our service routing rules. To actually recognize Ingress objects and provide the proxy routing, we need to install a corresponding controller.

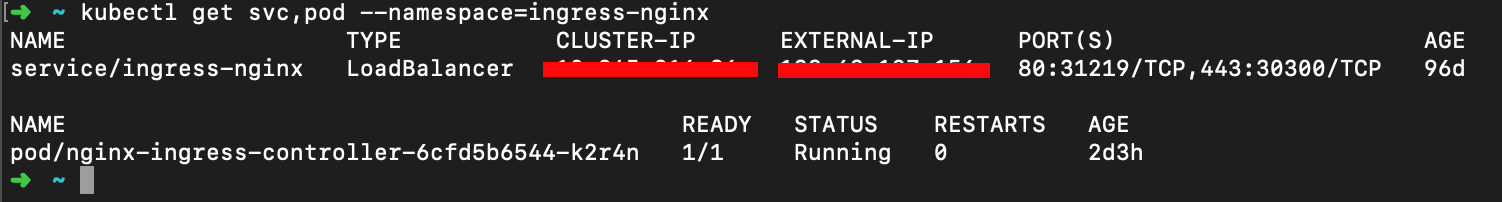

Use the following command to see the Kubernetes resources installed in the ingress-nginx namespace.

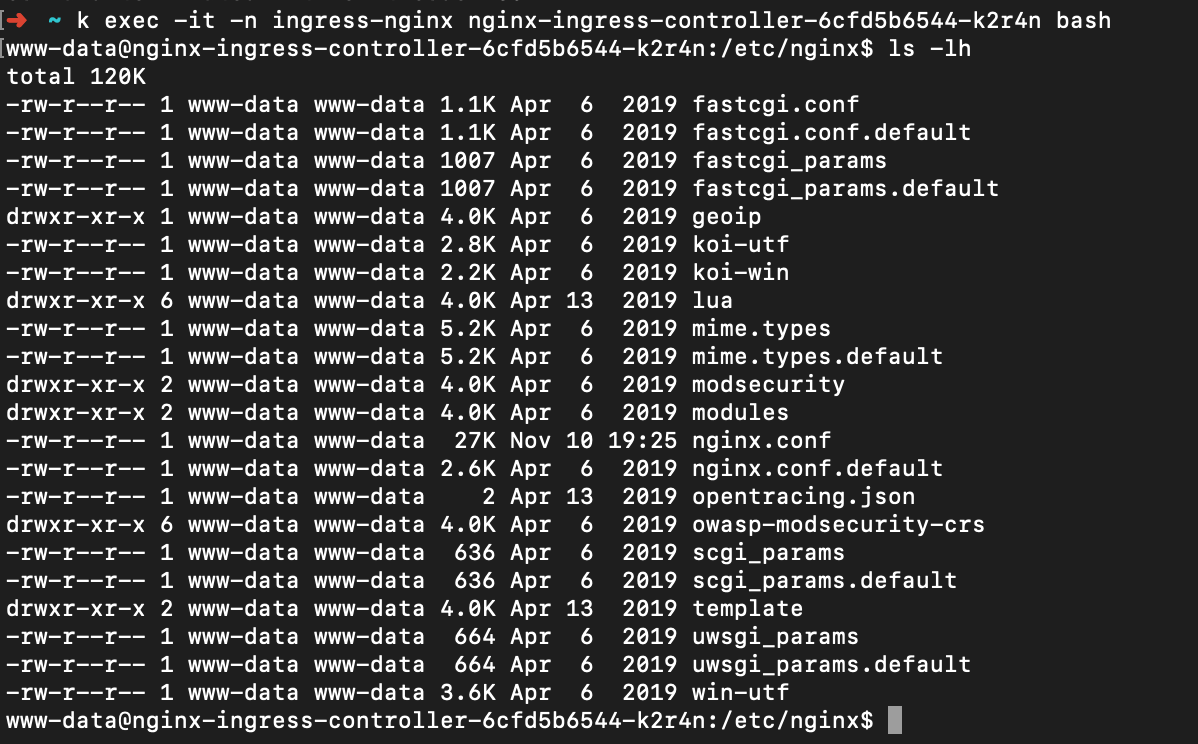

We can see an ordinary LoadBalancer Service with an external IP, and the Pod it points to. We can open a shell in that Pod with kubectl exec; it contains a preconfigured Nginx server.

The nginx.conf file contains various proxy redirection settings and other related configurations.

Ingress Configuration Example

The Ingress resource itself is written in YAML like any other Kubernetes object: it lists rules that map request paths to backend Services.

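As a sketch of what such a resource contains, the following Python builds an Ingress for the `networking.k8s.io/v1` API as a plain dict and prints it as JSON, which `kubectl create -f` also accepts. The resource name, namespace, ports, the helper name `make_ingress`, and the ingress class `nginx` are assumptions for the example.

```python
import json
from typing import Dict, Tuple


def make_ingress(name: str, namespace: str,
                 rules: Dict[str, Tuple[str, int]],
                 ingress_class: str = "nginx") -> dict:
    """Build a networking.k8s.io/v1 Ingress; rules maps path -> (service, port)."""
    paths = [
        {
            "path": path,
            "pathType": "Prefix",
            "backend": {"service": {"name": service, "port": {"number": port}}},
        }
        for path, (service, port) in rules.items()
    ]
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "Ingress",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            # Selects which installed controller handles this Ingress (assumed "nginx").
            "ingressClassName": ingress_class,
            "rules": [{"http": {"paths": paths}}],
        },
    }


if __name__ == "__main__":
    ingress = make_ingress(
        "example-ingress", "default",  # hypothetical name and namespace
        {"/folder": ("service-nginx", 80), "/other/directory": ("service-python", 80)},
    )
    # Save the output as ingress.json and apply it with: kubectl create -f ingress.json
    print(json.dumps(ingress, indent=2))
```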

As with other resource objects, you can create it with kubectl create -f ingress.yaml; the Ingress controller installed above then converts it into the corresponding Nginx configuration.

What if one of the internal Services that the Ingress should forward to is in a different namespace? Ingress resources are namespaced, so an Ingress can only forward to Services in its own namespace.

If you define multiple Ingress resources, a single Ingress controller combines them into one Nginx configuration, which means they all share the same LoadBalancer IP.
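The conversion and merging steps can be illustrated with a deliberately simplified Python sketch that turns the path rules of several Ingress dicts into a single Nginx `server` block. It is not the ingress-nginx template: the real controller renders a far more elaborate configuration and by default sends traffic to the Service's Pod endpoints, whereas this sketch proxies to the Service's cluster DNS name. The keep-the-first-rule policy for duplicate paths and the function name `render_nginx` are this sketch's own choices.

```python
from typing import Dict, List


def render_nginx(ingresses: List[dict]) -> str:
    """Merge the path rules of several Ingress dicts into one Nginx server block."""
    locations: Dict[str, str] = {}
    for ing in ingresses:
        namespace = ing["metadata"]["namespace"]
        for rule in ing["spec"]["rules"]:
            for p in rule["http"]["paths"]:
                svc = p["backend"]["service"]
                # Backends are always looked up in the Ingress's own namespace.
                upstream = (f"http://{svc['name']}.{namespace}.svc.cluster.local"
                            f":{svc['port']['number']}")
                # Sketch policy: the first Ingress that claims a path keeps it.
                locations.setdefault(p["path"], upstream)
    lines = ["server {", "    listen 80;"]
    for path, upstream in locations.items():
        lines += [f"    location {path} {{",
                  f"        proxy_pass {upstream};",
                  "    }"]
    lines.append("}")
    return "\n".join(lines)
```

All rules end up in one `server` block, which is why every Ingress handled by the controller is reached through the same LoadBalancer IP.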

Configuring Ingress Nginx

Sometimes we need to fine-tune the configuration of Ingress Nginx, which we can do through annotations on the Ingress resource. With them we can set many of the options that are normally available directly in Nginx.


Annotations also make more fine-grained rule configuration possible.

These annotations are converted into Nginx configuration, which you can inspect by connecting to the Nginx Pod manually (kubectl exec).
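The translation from annotations to Nginx directives can be sketched for a handful of ingress-nginx annotations. The mapping covers only a small subset, the function name `directives_from_annotations` is hypothetical, and the value formatting (appending `s` to timeouts given in seconds) is a simplification of what the controller actually does.

```python
from typing import Dict, List, Tuple

PREFIX = "nginx.ingress.kubernetes.io/"

# A small subset of ingress-nginx annotations and the Nginx directive each one sets.
# The second element is a unit suffix: these timeout annotations take seconds.
ANNOTATION_TO_DIRECTIVE: Dict[str, Tuple[str, str]] = {
    "proxy-body-size": ("client_max_body_size", ""),
    "proxy-connect-timeout": ("proxy_connect_timeout", "s"),
    "proxy-read-timeout": ("proxy_read_timeout", "s"),
    "proxy-send-timeout": ("proxy_send_timeout", "s"),
}


def directives_from_annotations(annotations: Dict[str, str]) -> List[str]:
    """Translate known ingress-nginx annotations into Nginx directive lines."""
    directives = []
    for key, value in annotations.items():
        if not key.startswith(PREFIX):
            continue  # annotation meant for something else
        entry = ANNOTATION_TO_DIRECTIVE.get(key[len(PREFIX):])
        if entry is None:
            continue  # not covered by this sketch
        directive, suffix = entry
        directives.append(f"{directive} {value}{suffix};")
    return directives
```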

For more information on ingress-nginx configuration, see the official documentation:

- https://github.com/kubernetes/ingress-nginx/tree/master/docs/user-guide/nginx-configuration

- https://github.com/kubernetes/ingress-nginx/blob/master/docs/user-guide/nginx-configuration/annotations.md#lua-resty-waf

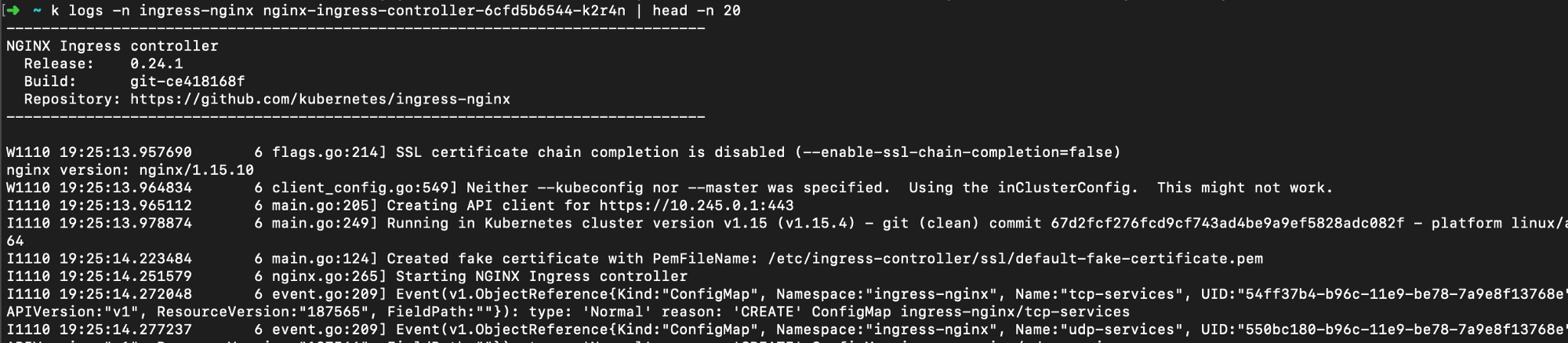

View ingress-nginx logs

To troubleshoot problems, it is helpful to look through the Ingress controller’s logs.
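When the logs are long, a small filter helps. This Python sketch assumes that access log lines start with the combined-log-style prefix of ingress-nginx's default log format (remote address, user, time, request line, status) and ignores the rest of each line; the function names are hypothetical.

```python
import re
from typing import Iterable, Iterator, Optional, Tuple

# Assumed prefix of an access log line: addr - user [time] "METHOD path PROTO" status
ACCESS_PREFIX = re.compile(
    r'^(?P<remote_addr>\S+) - (?P<remote_user>\S+) \[(?P<time>[^\]]+)\] '
    r'"(?P<method>[A-Z]+) (?P<path>\S+) (?P<protocol>[^"]+)" (?P<status>\d{3}) '
)


def parse_access_line(line: str) -> Optional[dict]:
    """Return the leading fields of an access log line, or None for other lines."""
    match = ACCESS_PREFIX.match(line)
    if match is None:
        return None  # e.g. the controller's own status messages
    entry = match.groupdict()
    entry["status"] = int(entry["status"])
    return entry


def failed_requests(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (status, path) for every 4xx or 5xx response."""
    for line in lines:
        entry = parse_access_line(line)
        if entry is not None and entry["status"] >= 400:
            yield entry["status"], entry["path"]
```

For example, the output of kubectl logs for the controller Pod could be piped into a script that iterates over `failed_requests(sys.stdin)`.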

Testing with Curl

If we want to test Ingress routing rules, it is better to use curl -v yourhost.com instead of a browser, to avoid problems caused by caching and the like.

Redirection rules

In the examples in this article we use paths such as /folder and /other/directory to route to different Services. We can also distinguish requests by hostname, for example by routing api.myurl.com and site.myurl.com to different internal ClusterIP Services.

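Host-based routing can be sketched the same way. The Service names in `HOST_ROUTES` and the helper names are hypothetical, and the `*.myurl.com` entry illustrates the Ingress wildcard rule, where `*` matches exactly one DNS label. Preferring exact hosts over wildcards follows Nginx's `server_name` precedence.

```python
from typing import Dict, Optional

# Hypothetical host rules; the Service names are made up for this example.
HOST_ROUTES: Dict[str, str] = {
    "api.myurl.com": "service-api",
    "site.myurl.com": "service-site",
    "*.myurl.com": "service-catch-all",
}


def host_matches(pattern: str, host: str) -> bool:
    """Exact match, or an Ingress-style wildcard where * covers exactly one label."""
    if pattern.startswith("*."):
        suffix = pattern[1:]  # e.g. ".myurl.com"
        if not host.endswith(suffix):
            return False
        label = host[: -len(suffix)]
        return label != "" and "." not in label
    return pattern == host


def route_by_host(host_header: str,
                  routes: Dict[str, str] = HOST_ROUTES) -> Optional[str]:
    """Pick a Service from the Host header; exact hosts win over wildcards."""
    # Drop an optional ":port" and normalize case (host names are case-insensitive).
    host = host_header.split(":", 1)[0].lower()
    if host in routes:
        return routes[host]
    for pattern, service in routes.items():
        if host_matches(pattern, host):
            return service
    return None
```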

SSL/HTTPS

We may want the site to use secure HTTPS. Kubernetes Ingress also provides simple TLS termination: it handles all SSL traffic, decrypts the SSL requests, and then sends the decrypted requests on to the internal services.

If multiple internal services use the same (possibly wildcard) SSL certificate, we only need to configure it once on the Ingress instead of on each internal service. Ingress reads the SSL certificate from a configured TLS Kubernetes Secret.


Note, however, that if you have Ingress resources in several namespaces, the TLS Secret must exist in every namespace that contains one of those Ingress resources.
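The Secret and the `tls` section of an Ingress can be sketched as Python dicts. The Secret type `kubernetes.io/tls` and the data keys `tls.crt` and `tls.key` are the standard ones; the Secret name, namespaces, and helper functions are hypothetical, and the certificate bytes would come from your own PEM files.

```python
import base64
from typing import Iterable, List


def tls_secret(name: str, namespace: str, cert_pem: bytes, key_pem: bytes) -> dict:
    """A Secret of type kubernetes.io/tls; data values must be base64-encoded."""
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name, "namespace": namespace},
        "type": "kubernetes.io/tls",
        "data": {
            "tls.crt": base64.b64encode(cert_pem).decode("ascii"),
            "tls.key": base64.b64encode(key_pem).decode("ascii"),
        },
    }


def ingress_tls(hosts: Iterable[str], secret_name: str) -> List[dict]:
    """The spec.tls entry of an Ingress, referring to the Secret by name."""
    return [{"hosts": list(hosts), "secretName": secret_name}]


def secrets_for_namespaces(name: str, namespaces: Iterable[str],
                           cert_pem: bytes, key_pem: bytes) -> List[dict]:
    """Copy one (e.g. wildcard) certificate into every namespace that has an Ingress."""
    return [tls_secret(name, ns, cert_pem, key_pem) for ns in sorted(set(namespaces))]
```

The Ingress in each of those namespaces would then set `spec.tls` to something like `ingress_tls(["site.myurl.com"], "myurl-tls")`, where `myurl-tls` is a hypothetical Secret name.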

Summary

Here we briefly described how Kubernetes Ingress works. In short, it is nothing more than a way to easily configure an Nginx server that forwards requests to other internal services. This saves us valuable static IPs and LoadBalancer resources.

Also note that there are other Ingress controllers that don't run Nginx internally but use other proxy technologies to do all of the above as well.