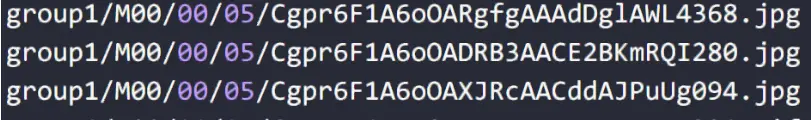

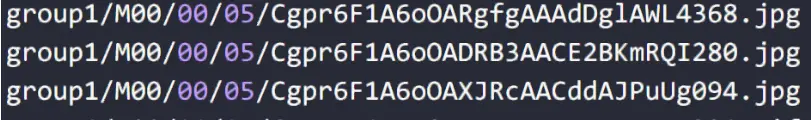

FastDFS does not retain the original file name when a file is uploaded. Instead, the upload returns a file ID in the format shown below, which contains the group the file belongs to, the two-level subdirectory, and a Base64-encoded file name generated from the source storage server's IP address, a timestamp, and the file size. The file ID is stored in the upload client's database, and a file can only be accessed by its file ID. If other systems want to read files from FastDFS, they have to fetch the file ID from the upload client's database. This increases coupling between systems and makes later storage migration and maintenance harder.

However, since FastDFS stores files directly on local disk and does not chunk or merge them, we can let nginx serve the files from local disk directly, without querying the client database for file IDs and without going through FastDFS.

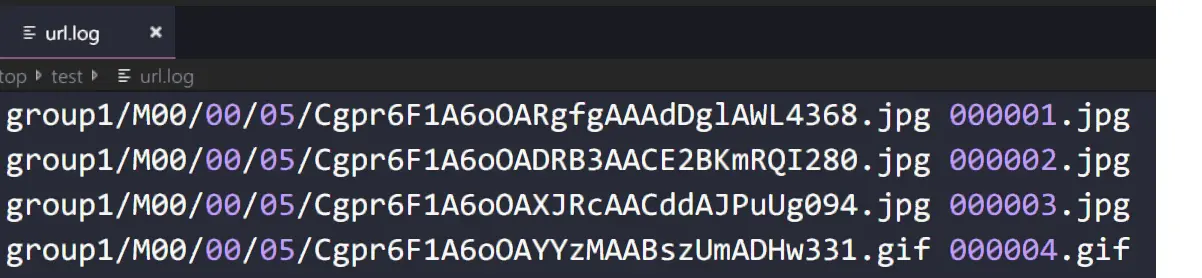

Composition of the file ID

- group1 is the name of the group the file belongs to

- M00 is the storage path (virtual disk) on the storage server that holds the file; M00 corresponds to store_path0 in the storage configuration

- 00/05 is the first-level subdirectory/second-level subdirectory, which together form the real directory of the file on disk

- Cgpr6F1A7O6ASWv9AAA-az6haWc850.jpg is the newly generated file name
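To make the layout concrete, here is a minimal sketch that splits a file ID into the parts listed above. The function name parse_file_id is hypothetical, and the code assumes the five-segment layout group/Mxx/dir1/dir2/filename; anything else is rejected.

```shell
# Split a FastDFS file ID into its components (hypothetical helper).
# The body runs in a subshell, so IFS and the positional parameters
# of the caller are left untouched.
parse_file_id() (
    set -f            # no glob expansion while splitting
    IFS=/
    set -- $1         # split the file ID on "/"
    if [ "$#" -ne 5 ]; then
        echo "unexpected file ID layout" >&2
        return 1
    fi
    printf 'group=%s\nstore_path=%s\nsubdir=%s/%s\nfilename=%s\n' \
        "$1" "$2" "$3" "$4" "$5"
)
```

Calling it with group1/M00/00/05/Cgpr6F1A7O6ASWv9AAA-az6haWc850.jpg prints one name=value line per component.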

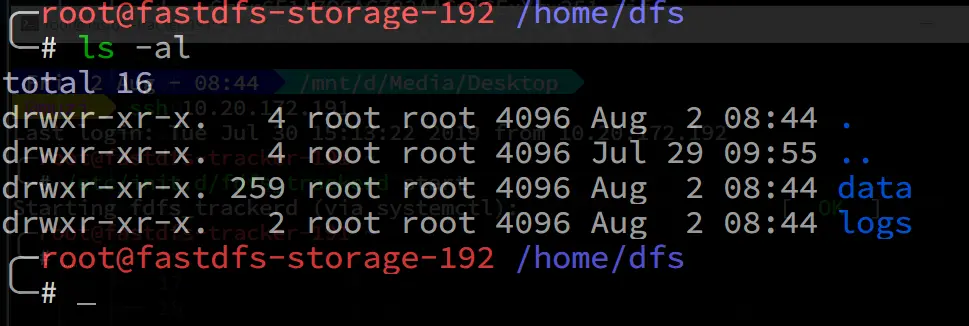

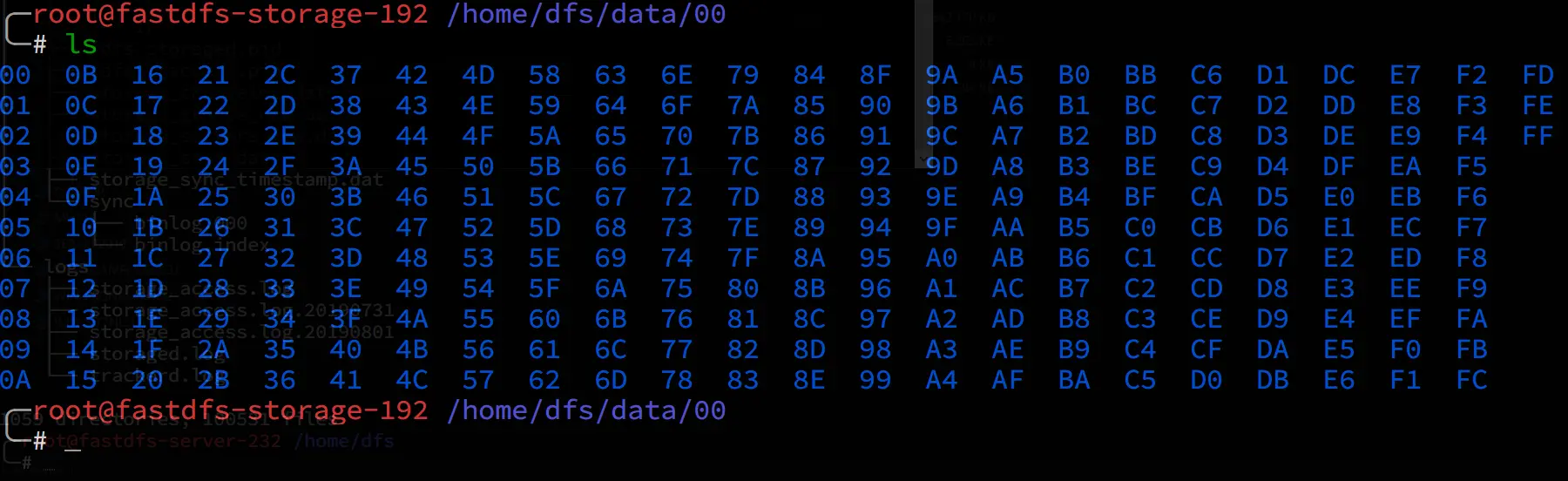

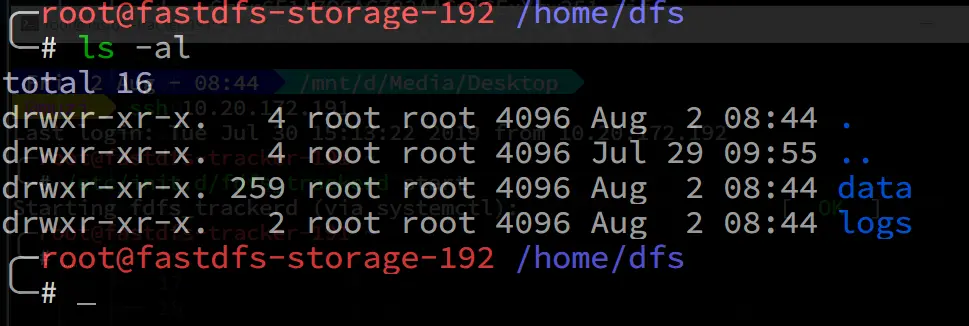

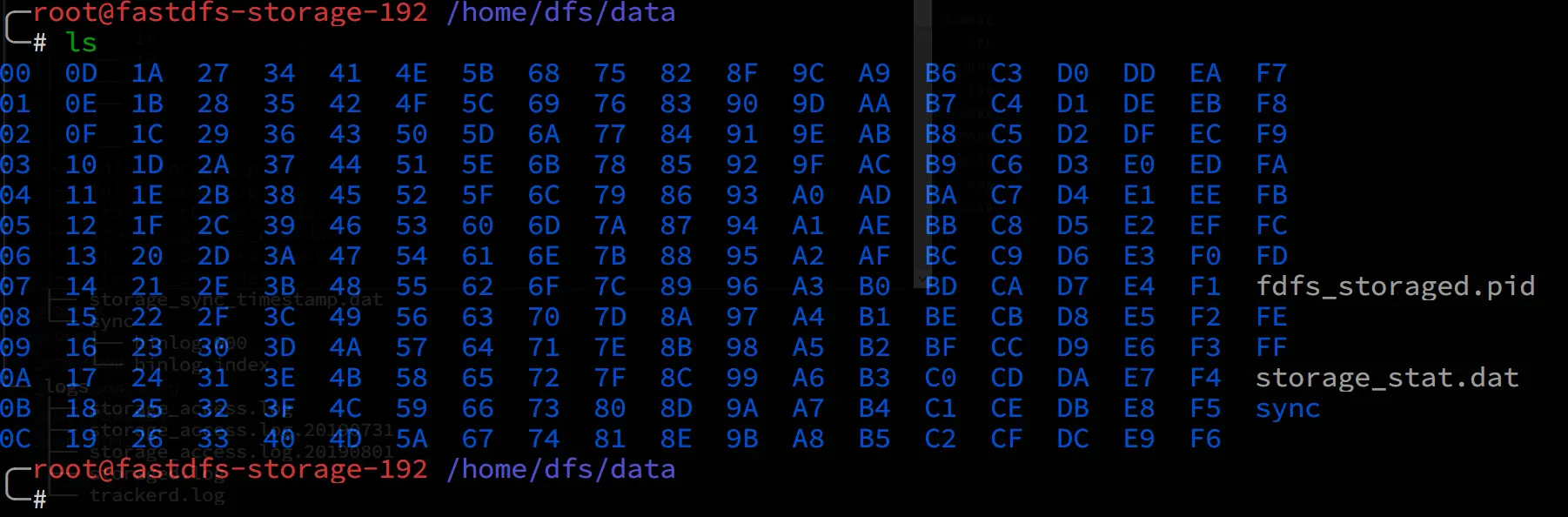

The root directory for file storage is set by the base_path= configuration parameter. Under it, the data directory holds the stored files and the logs directory holds the logs.

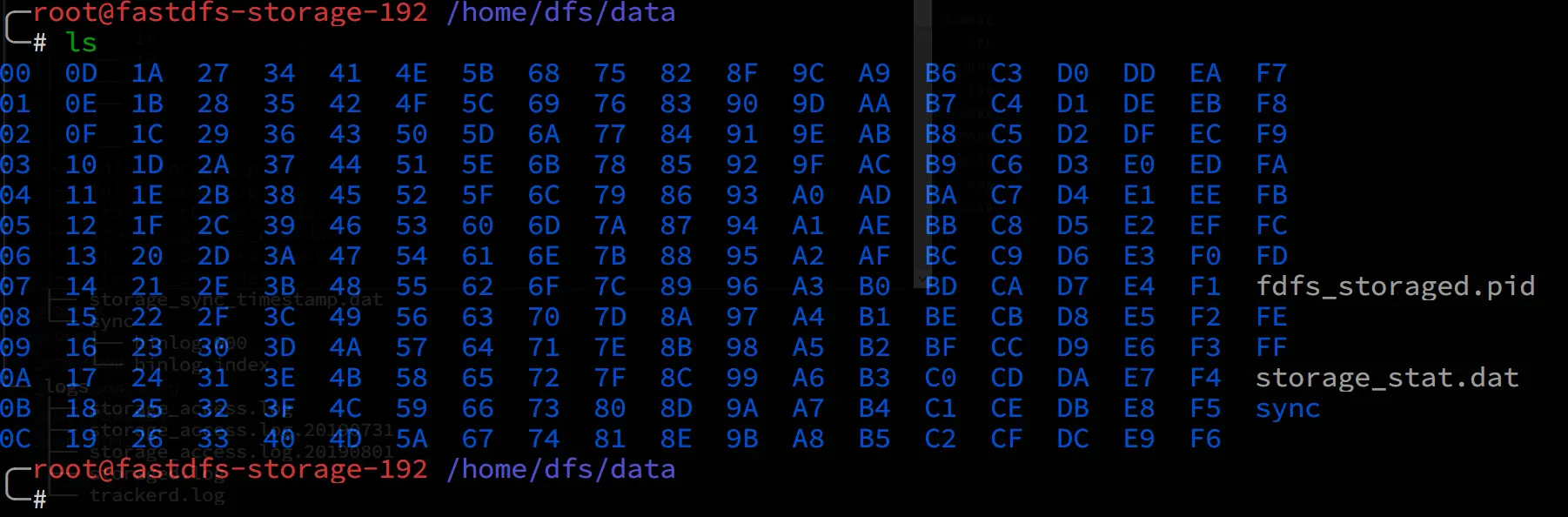

First-level subdirectories for file storage

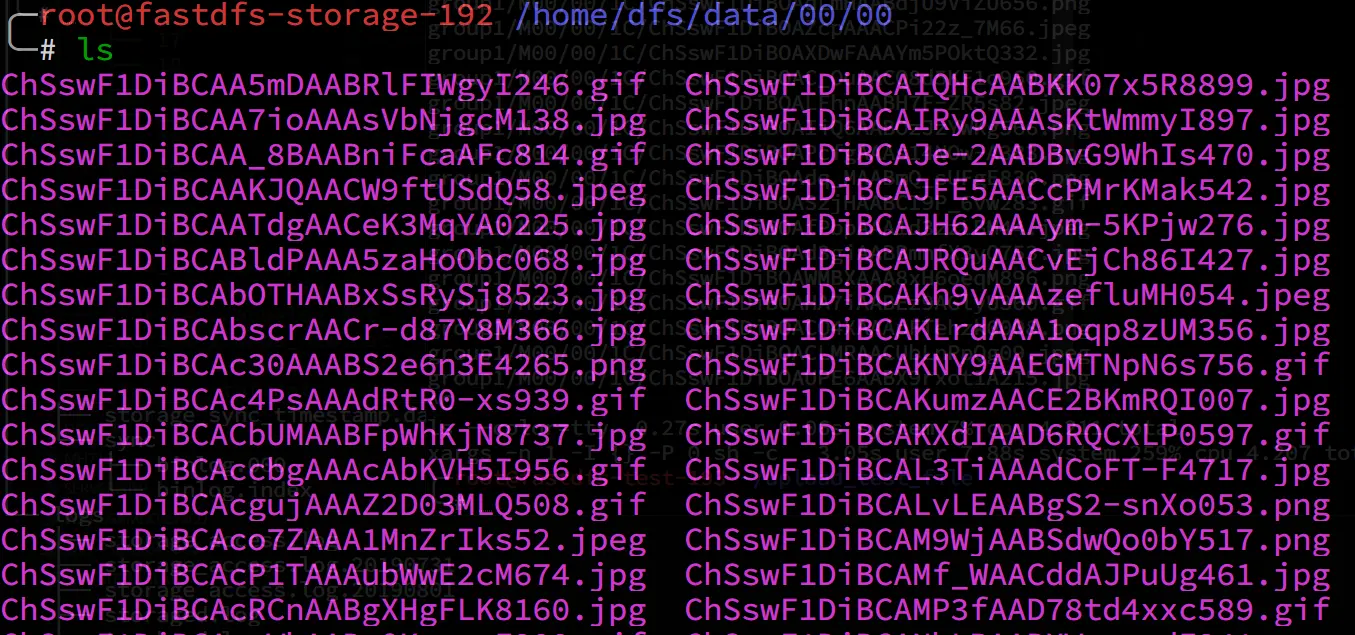

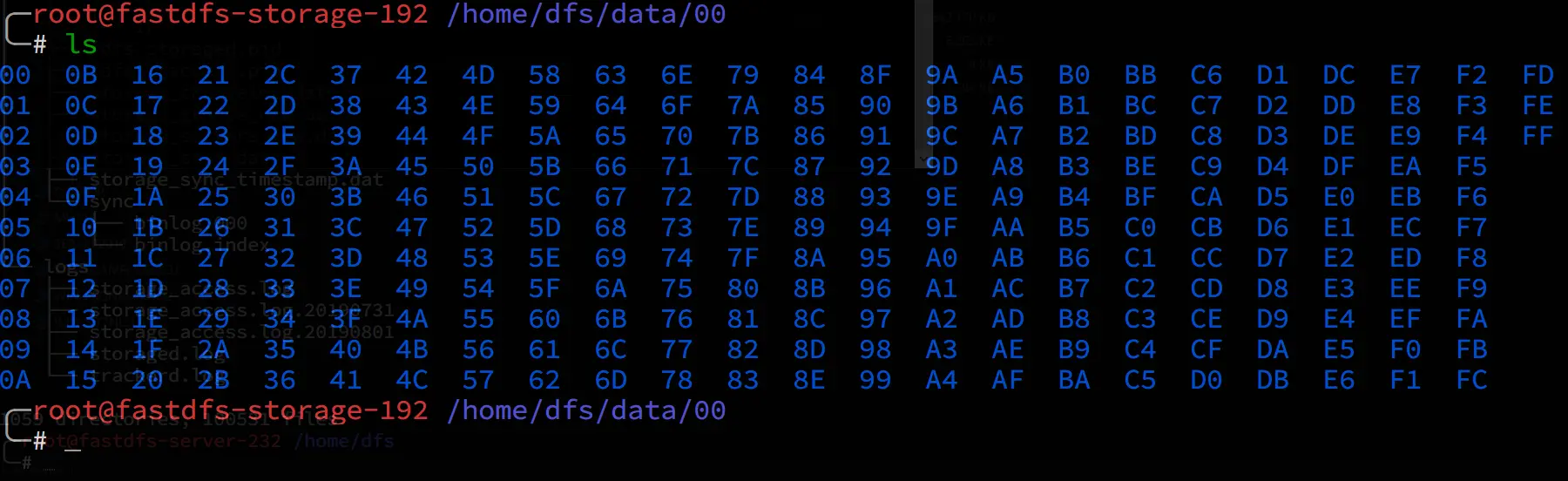

Secondary subdirectories for file storage

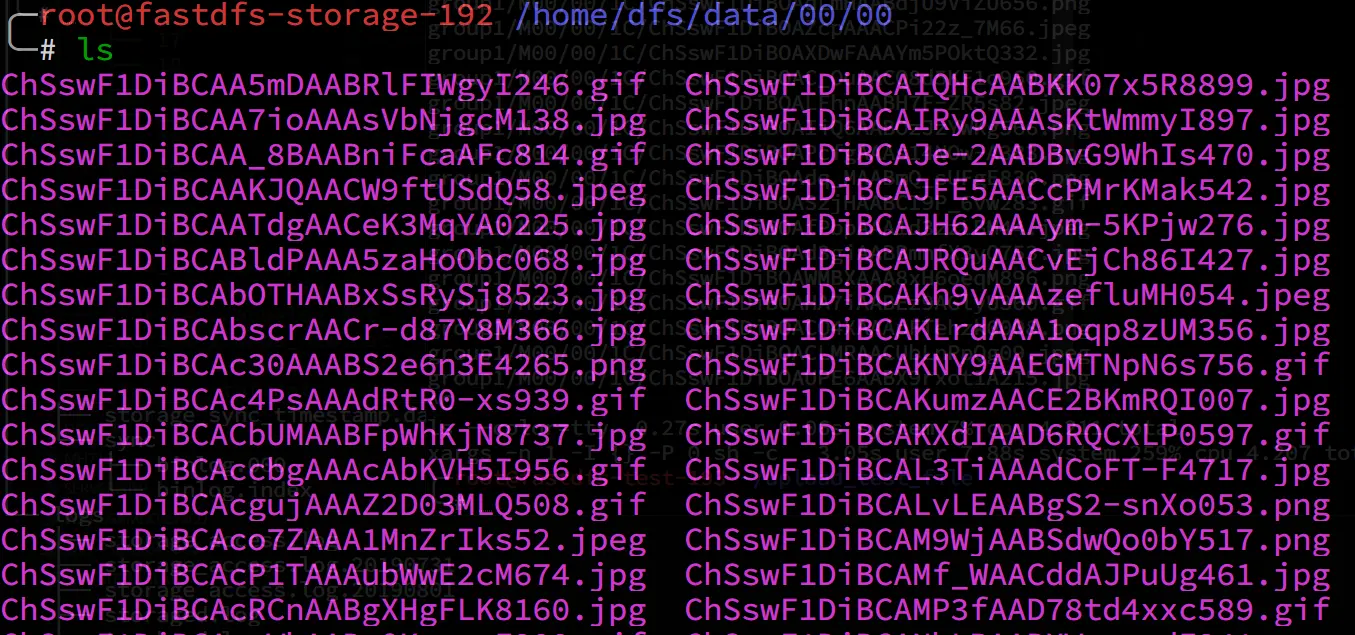

FastDFS stores each file as a real file on disk, without chunking or merging
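Because files are stored as-is, the location of a file on disk can be derived from its file ID alone. The sketch below shows one way to do that. The helper name file_id_to_path is hypothetical, and it assumes that the storage path M00 maps to the data directory passed as the first argument (/home/dfs/data is the root used in the nginx configuration later in this article).

```shell
# Map a file ID to the path of the file on the storage server.
# $1: data directory of the storage path (assumption: the directory M00 maps to)
# $2: file ID, e.g. group1/M00/00/05/<name>
file_id_to_path() (
    rest=${2#*/}          # drop the group name, e.g. "group1/"
    rest=${rest#*/}       # drop the storage path, e.g. "M00/"
    printf '%s/%s\n' "${1%/}" "$rest"
)
```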

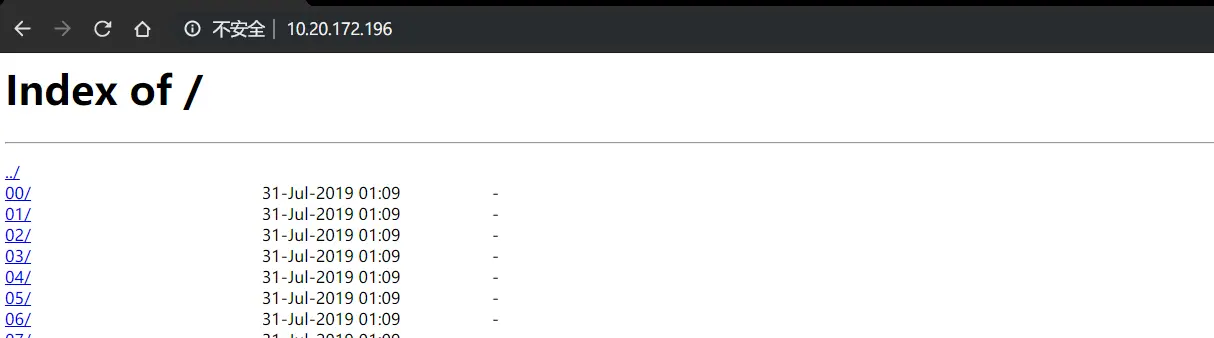

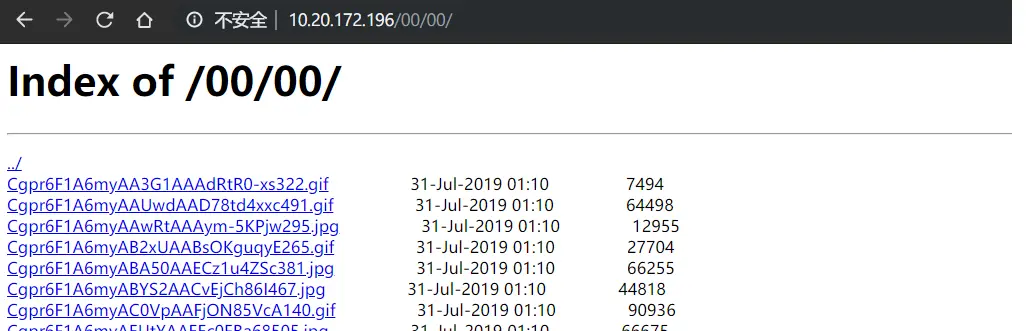

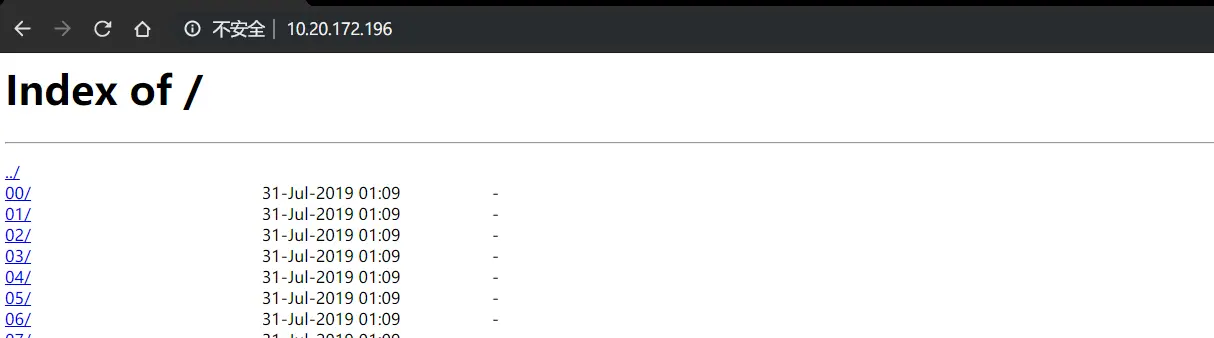

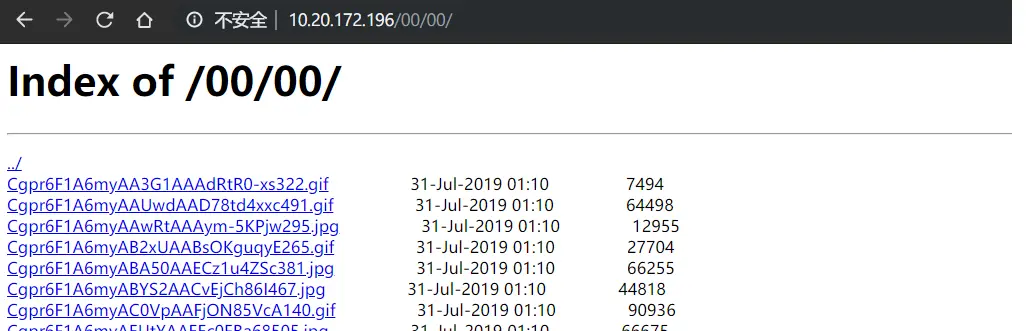

For testing purposes, nginx directory listing (autoindex) is turned on here

Implementation process

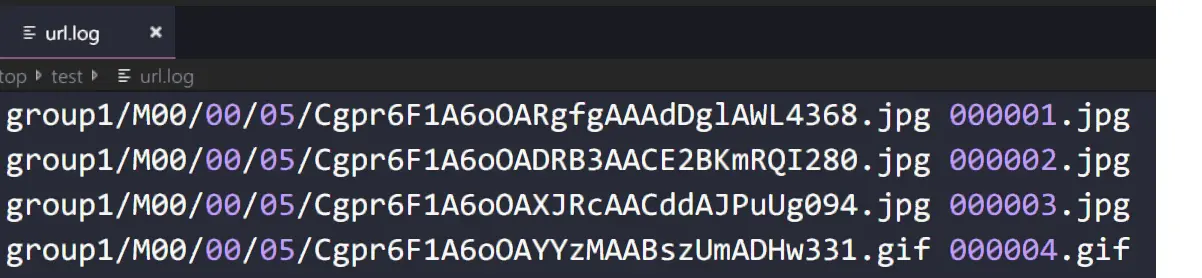

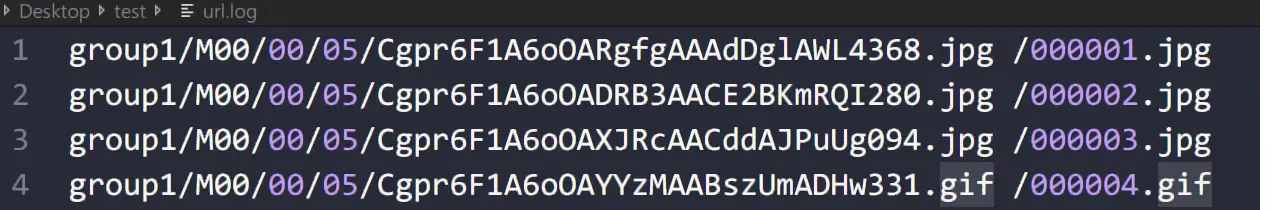

Get the original file name and the newly generated file ID

The records below are extracted from the log of the C client; for other client versions the same information is available in the client database. Each record contains the original file name and the file ID that the FastDFS storage service generated for the upload.

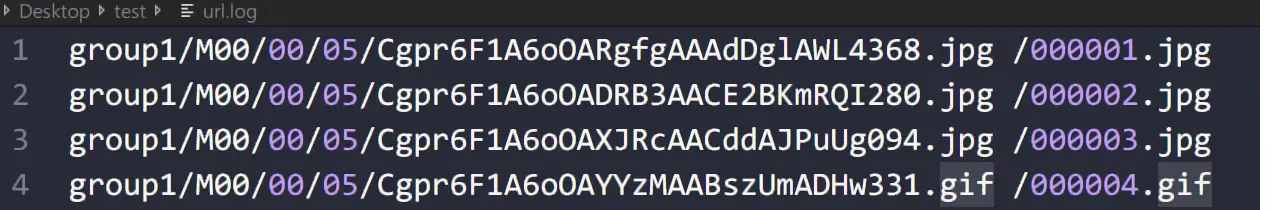

Prefix the original file name with a / so that it matches the request URI. The converted format is as follows

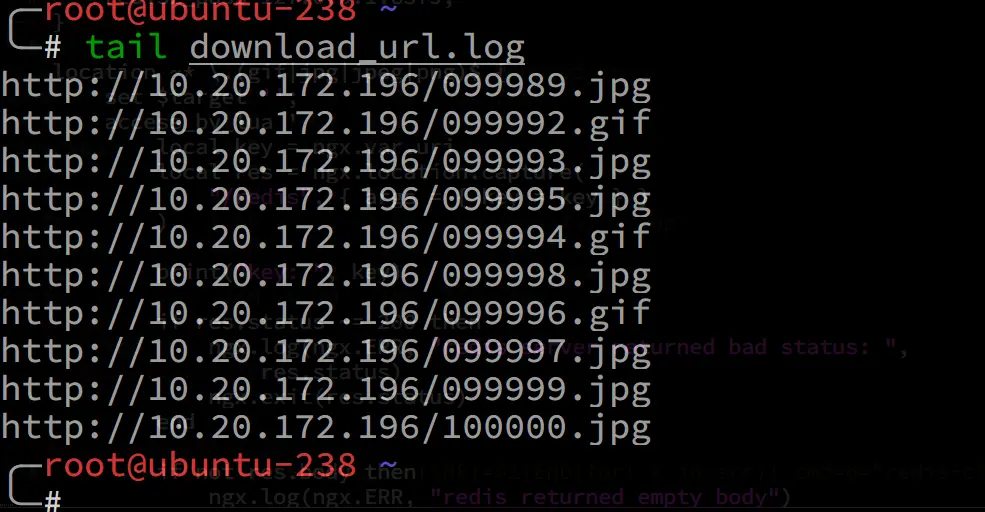
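The conversion can be sketched with a short awk filter. This assumes each input record has the file ID in the first field and the original file name in the last field, which is the field order the import commands in the next section read; the function name add_uri_prefix is hypothetical.

```shell
# Read "<file ID> <original name>" records on stdin and print
# "<file ID> /<original name>", adding the leading slash only if missing.
add_uri_prefix() {
    awk '{
        name = $NF
        if (name !~ /^\//) name = "/" name
        print $1, name
    }'
}
```

For example, `add_uri_prefix < upload.log > url.log` would produce the file consumed by the import step, where upload.log is a placeholder for the extracted client log.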

Importing Data into Redis

Import the data into Redis with the original file name (or a custom name) as the KEY and the file ID as the VALUE, either with an awk one-liner or with a shell script

    awk '{ arr[$NF] = $1 } END { for (k in arr) { cmd = "redis-cli set " k " " arr[k]; system(cmd) } }' url.log

    #!/bin/bash
    # Import via a script.
    # Each line of the input file is "<file ID> <key>".
    while read -r value key _
    do
        # keep redis-cli from consuming the loop's input
        redis-cli set "$key" "$value" < /dev/null
    done < "$1"
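Both commands above start one redis-cli process per key, which gets slow for large files. As a hedged alternative, the records can be converted to the Redis protocol and streamed through `redis-cli --pipe`. The helper names resp_set and to_resp are hypothetical, and the input is assumed to be the same "<file ID> <key>" format as url.log.

```shell
# Emit one SET command in the Redis protocol (RESP).
resp_set() (
    LC_ALL=C    # lengths in RESP are byte counts
    printf '*3\r\n$3\r\nSET\r\n$%d\r\n%s\r\n$%d\r\n%s\r\n' \
        "${#1}" "$1" "${#2}" "$2"
)

# Convert "<file ID> <key>" lines on stdin into a RESP stream.
to_resp() (
    while read -r value key rest; do
        # skip blank or incomplete lines
        [ -n "$key" ] && resp_set "$key" "$value"
    done
    return 0
)
```

A possible invocation is `to_resp < url.log | redis-cli --pipe`, which sends all commands over a single connection.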

    # 100,000 key/value pairs occupy less than 15 MB of memory
    ╭─root@ubuntu-238 /tmp
    ╰─# redis-cli
    127.0.0.1:6379> info
    # Memory
    used_memory:14690296
    used_memory_human:14.01M
    used_memory_rss:18280448
    used_memory_rss_human:17.43M
    used_memory_peak:18094392
    used_memory_peak_human:17.26M
    used_memory_peak_perc:81.19%
    used_memory_overhead:5881110
    used_memory_startup:782504
    used_memory_dataset:8809186
    used_memory_dataset_perc:63.34%
    total_system_memory:4136931328
    total_system_memory_human:3.85G
    used_memory_lua:37888
    used_memory_lua_human:37.00K
    maxmemory:0
    maxmemory_human:0B
    maxmemory_policy:noeviction
    mem_fragmentation_ratio:1.24
    mem_allocator:jemalloc-3.6.0
    active_defrag_running:0
    lazyfree_pending_objects:0
    127.0.0.1:6379> get /000001.jpg
    "group1/M00/00/05/Cgpr6F1A6oOARgfgAAAdDglAWL4368.jpg"
    127.0.0.1:6379>
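The figures above give a rough per-pair cost. The sketch below subtracts used_memory_startup from used_memory and divides by the number of pairs; it assumes the instance held only the 100,000 imported pairs, so treat the result as an estimate. The function name bytes_per_pair is hypothetical.

```shell
# Approximate memory cost per key/value pair, in bytes (integer division).
# $1: used_memory  $2: used_memory_startup  $3: number of pairs
bytes_per_pair() {
    echo $(( ($1 - $2) / $3 ))
}

bytes_per_pair 14690296 782504 100000   # prints 139
```

At roughly 139 bytes per pair, even several million mappings would stay well within the memory of a small server.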

Compiling nginx with the Lua and lua-redis modules

Compiler Environment

    yum install -y gcc gcc-c++ zlib zlib-devel openssl openssl-devel pcre pcre-devel

Compile luajit

    # Compile and install LuaJIT
    wget http://luajit.org/download/LuaJIT-2.1.0-beta2.tar.gz
    tar zxf LuaJIT-2.1.0-beta2.tar.gz
    cd LuaJIT-2.1.0-beta2
    make PREFIX=/usr/local/luajit
    make install PREFIX=/usr/local/luajit

Download the ngx_devel_kit (NDK) module

    wget https://github.com/simpl/ngx_devel_kit/archive/v0.2.19.tar.gz
    tar -xzvf v0.2.19.tar.gz

Download the lua-nginx-module module

    wget https://github.com/openresty/lua-nginx-module/archive/v0.10.2.tar.gz
    tar -xzvf v0.10.2.tar.gz

Compiling nginx

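    tar zxvf nginx-1.15.1.tar.gz
    cd nginx-1.15.1/

    # tell nginx's build system where to find LuaJIT 2.1:
    export LUAJIT_LIB=/usr/local/luajit/lib
    export LUAJIT_INC=/usr/local/luajit/include/luajit-2.1

    # The lua-nginx-module path assumes the v0.10.2 archive was extracted next to ngx_devel_kit
    ./configure --prefix=/usr/local/nginx --with-ld-opt="-Wl,-rpath,/usr/local/luajit/lib" --with-http_stub_status_module --with-http_ssl_module --with-http_realip_module --with-http_gzip_static_module --with-debug \
        --add-module=/usr/local/src/nginx_lua_tools/ngx_devel_kit-0.2.19 \
        --add-module=/usr/local/src/nginx_lua_tools/lua-nginx-module-0.10.2

    # Recompiling an existing installation: reuse the previous configure arguments and add the modules.
    # The paths after --add-module depend on where you extracted the archives.
    ./configure (previous configure arguments) --with-ld-opt="-Wl,-rpath,/usr/local/luajit/lib" --add-module=/path/to/ngx_devel_kit --add-module=/path/to/lua-nginx-module

    make -j4 && make install

    # The debug log (--with-debug) is disabled by default because it adds considerable runtime overhead and makes nginx noticeably slower.
    # After rebuilding a debug version of nginx with --with-debug, you also need to set the error log to the debug level
    # (the lowest log level) with the standard error_log directive in the configuration file.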
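After installation it is worth checking that the Lua module was actually compiled in. `nginx -V` prints the configure arguments to stderr, so a simple filter over that output is enough. The helper name has_lua_module is hypothetical, and the check assumes the module directory passed to --add-module contains the string lua-nginx-module.

```shell
# Succeeds if the text on stdin mentions lua-nginx-module.
has_lua_module() {
    grep -q -- 'lua-nginx-module'
}
```

A typical call would be `/usr/local/nginx/sbin/nginx -V 2>&1 | has_lua_module && echo "lua module present"`.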

Configuring nginx

    server {
        listen 80;

        # Serve FastDFS files directly from local disk
        location ~/group[0-9]/ {
            autoindex on;
            root /home/dfs/data;
        }

        # Internal location that queries Redis.
        # set_unescape_uri is provided by set-misc-nginx-module,
        # redis2_query and redis2_pass by redis2-nginx-module.
        location = /redis {
            internal;
            set_unescape_uri $key $arg_key;
            redis2_query get $key;
            redis2_pass 127.0.0.1:6379;
        }

        # Write the regular expression here according to business needs; it must match the KEYs stored in Redis
        location ~/[0-9].*\.(gif|jpg|jpeg|png)$ {
            set $target '';
            access_by_lua '
                -- use the nginx variable ngx.var.uri to get the request URI, e.g. /000001.jpg
                local key = ngx.var.uri

                -- use the matched KEY to fetch the file ID (path and file name) from Redis
                local res = ngx.location.capture(
                    "/redis", { args = { key = key } }
                )

                if res.status ~= 200 then
                    ngx.log(ngx.ERR, "Redis server returned bad status: ",
                        res.status)
                    ngx.exit(res.status)
                end

                if not res.body then
                    ngx.log(ngx.ERR, "Redis returned empty body")
                    ngx.exit(500)
                end

                -- redis.parser is provided by the lua-redis-parser library
                local parser = require "redis.parser"
                local filename, typ = parser.parse_reply(res.body)
                if typ ~= parser.BULK_REPLY or not filename then
                    ngx.log(ngx.ERR, "bad Redis response: ", res.body)
                    ngx.exit(500)
                end

                ngx.var.target = filename
            ';

            proxy_pass http://10.20.172.196/$target;
        }
    }
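When debugging the mapping it can help to reproduce the lookup outside nginx. The sketch below mirrors the Lua logic using url.log as the lookup table instead of Redis: it resolves a request URI to the upstream URL that proxy_pass would receive, and fails when the key is unknown (the case where the Lua code answers 500). The function name resolve_target is hypothetical.

```shell
# $1: mapping file with "<file ID> <key>" lines
# $2: request URI, e.g. /000001.jpg
# $3: upstream base URL, e.g. http://10.20.172.196
resolve_target() (
    file_id=$(awk -v k="$2" '$2 == k { print $1; exit }' "$1")
    [ -n "$file_id" ] || return 1     # unknown key
    printf '%s/%s\n' "${3%/}" "$file_id"
)
```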

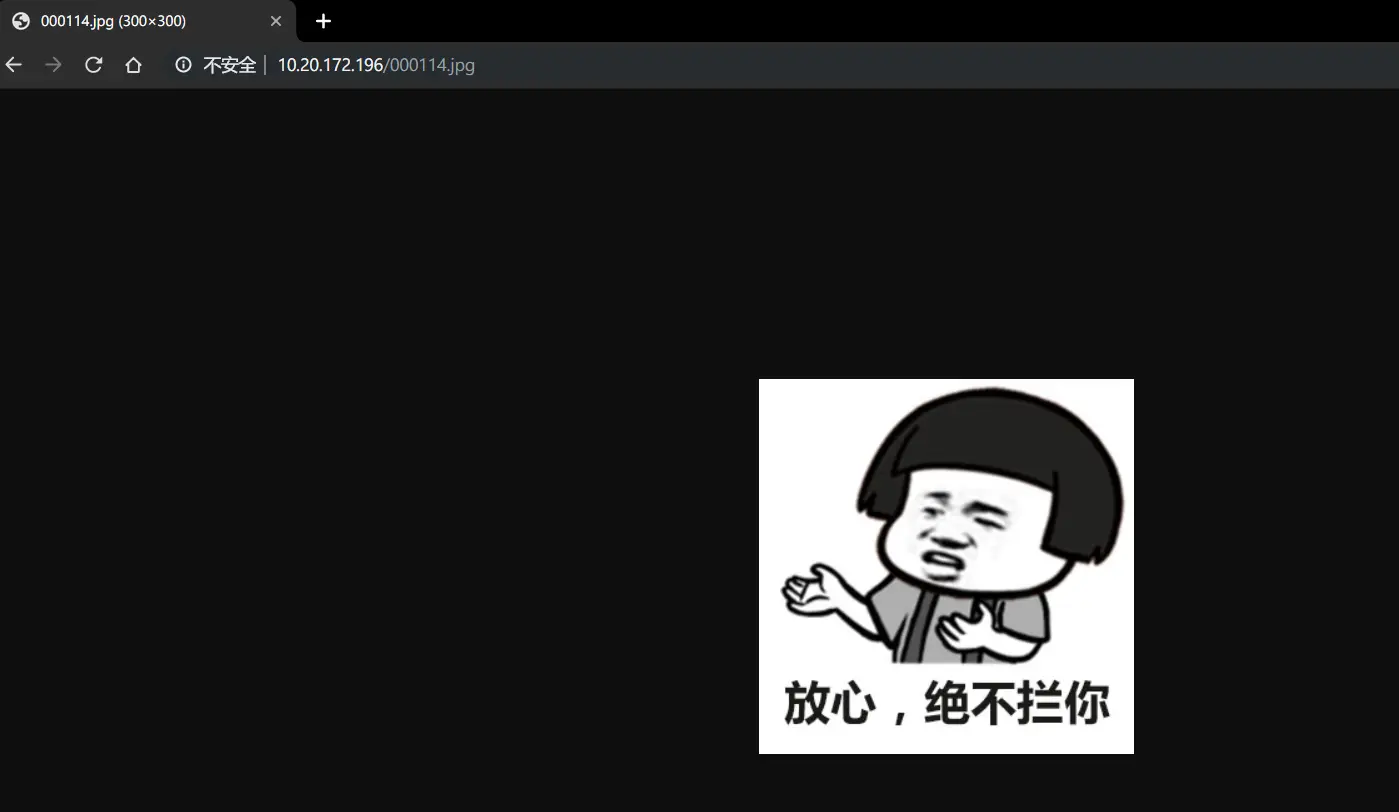

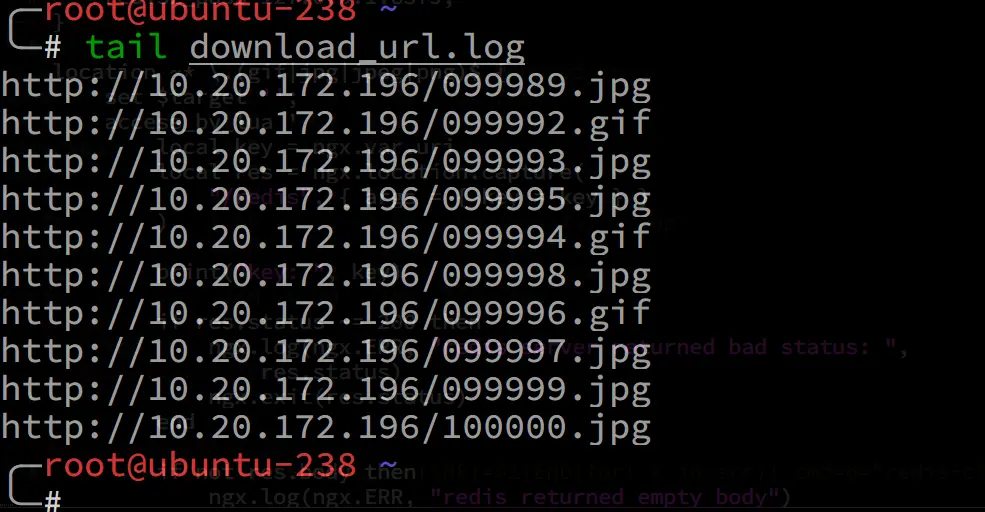

Test Access

The concatenated URL of the image file

Access via browser

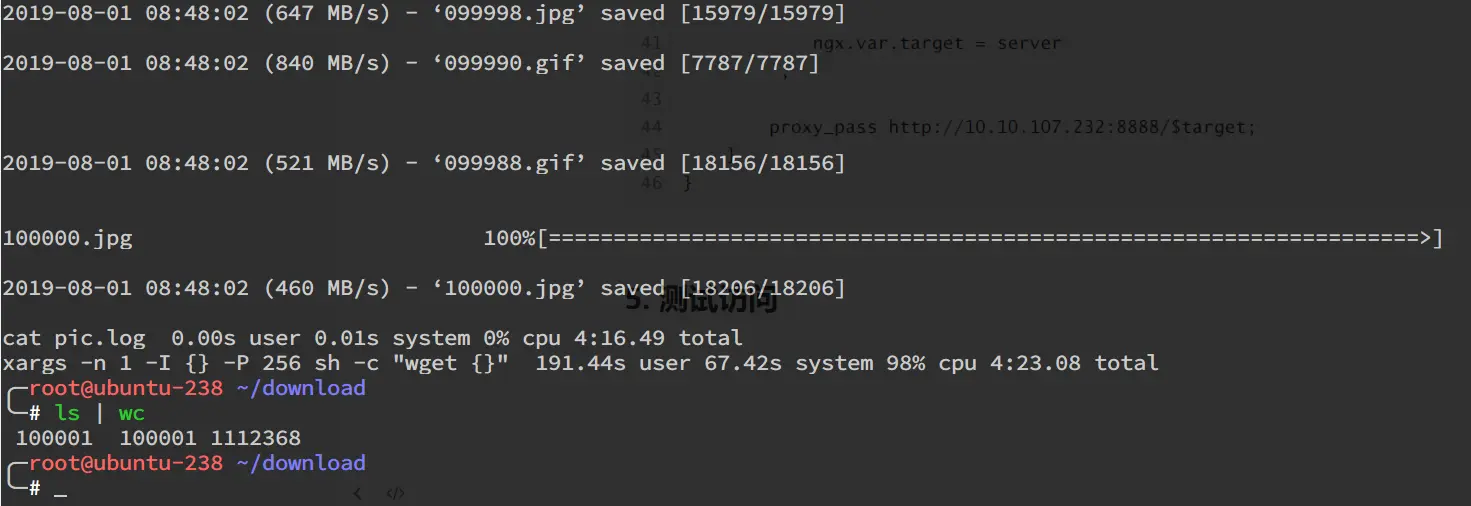

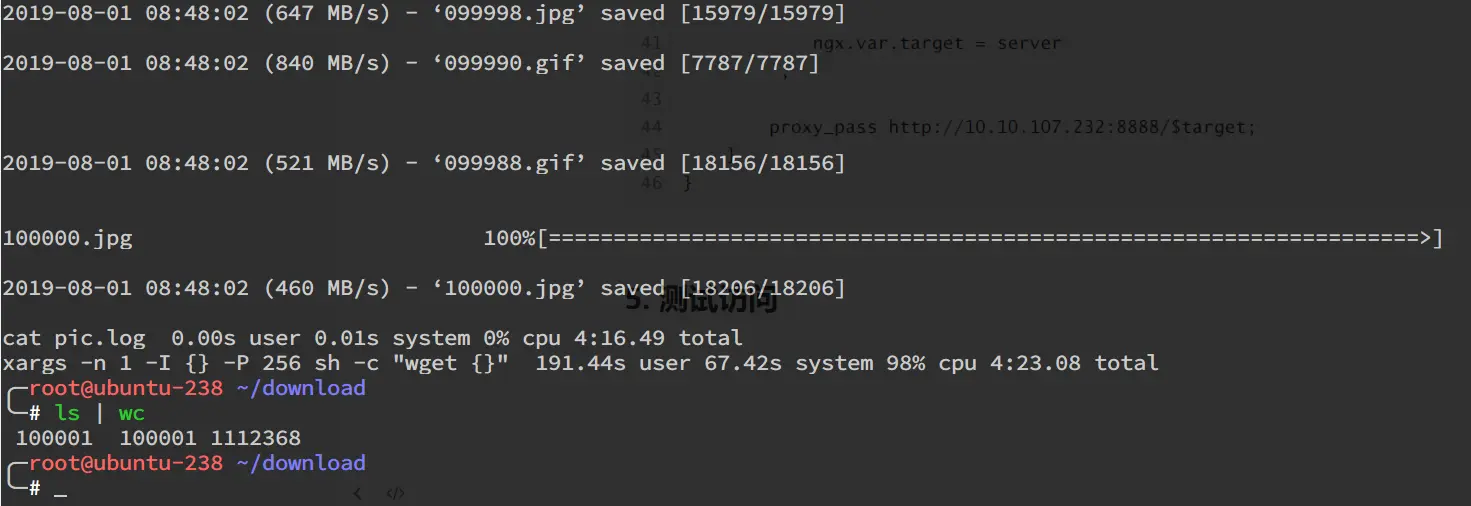

Parallel download using wget and xargs
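A minimal sketch of such a parallel download test follows. The helper names gen_urls and parallel_fetch are hypothetical, the six-digit zero-padded .jpg names are an assumption based on the /000001.jpg key shown earlier, and http://nginx.example stands in for the address of the nginx server.

```shell
# Print one URL per sequentially numbered image.
# $1: base URL  $2: first number  $3: last number
gen_urls() (
    i=$2
    while [ "$i" -le "$3" ]; do
        printf '%s/%06d.jpg\n' "${1%/}" "$i"
        i=$((i + 1))
    done
)

# Download the URLs read from stdin, one wget process per URL.
# $1: number of parallel processes  $2: target directory
parallel_fetch() {
    xargs -P "$1" -n 1 wget -q -P "$2"
}
```

For example, `gen_urls http://nginx.example 1 1000 | parallel_fetch 8 /tmp/fdfs-test` would fetch the first thousand images with eight wget processes at a time.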

Shortcomings and improvement options

Advantages

The advantage of this solution is that uploaded files can be accessed by a custom file name chosen according to business needs, with a matching regular expression written in the nginx location block. This decouples file access from the upload client: files can be read without going through FastDFS, which reduces O&M costs. Because the lookup uses Redis and an internal nginx subrequest, it is transparent to the accessing client and the performance loss is almost negligible.

Disadvantages

- Since the data in Redis has to be imported from the client logs or database, Redis cannot be updated in real time: if an uploaded file is modified or deleted, the change is not reflected in Redis.

- You need to recompile and install nginx, add the lua-nginx module, and install the Redis database.

Improvements

- Modify the FastDFS log output to add an original file name field, then add, delete, modify, and query Redis entries based on the logged operations

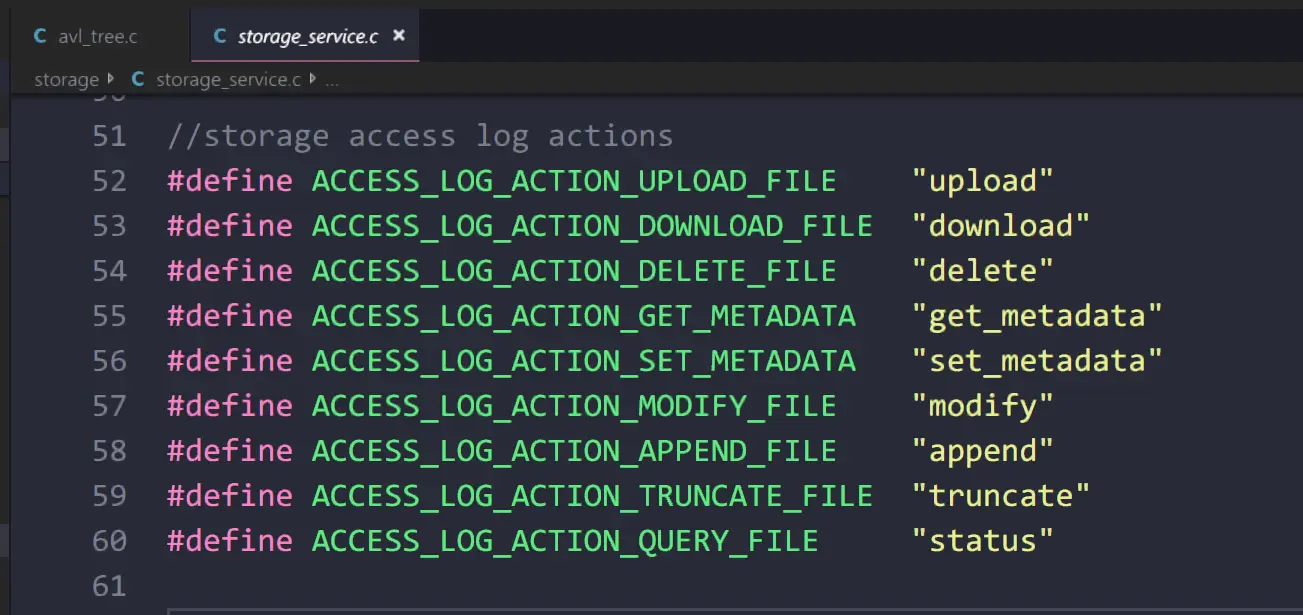

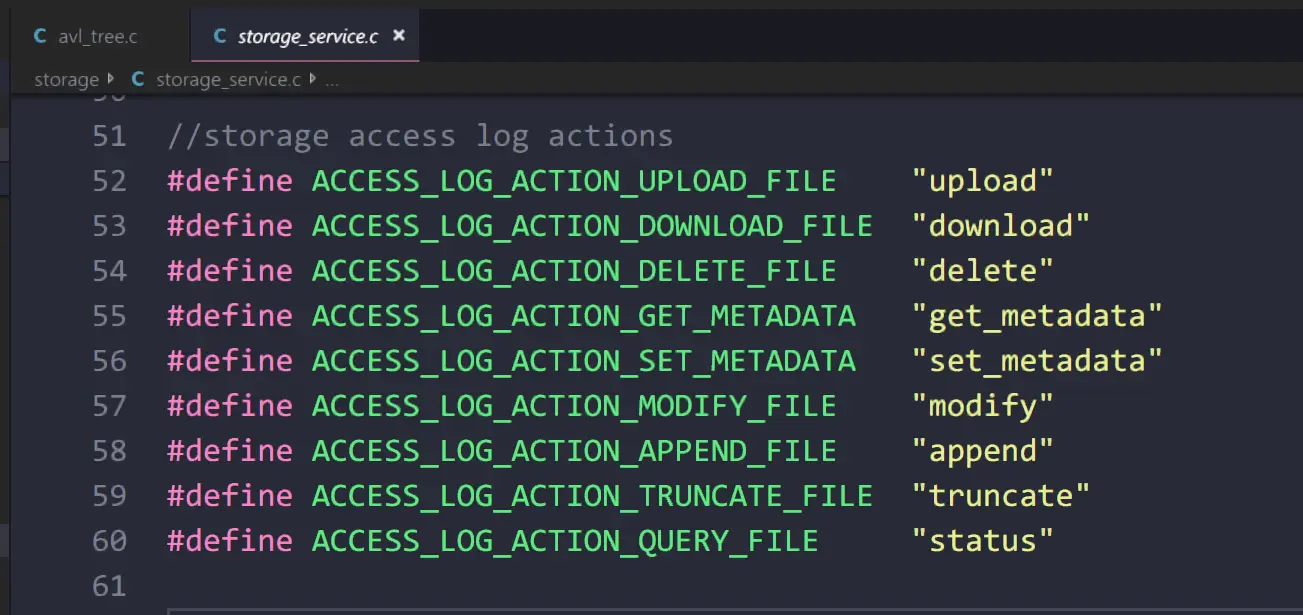

As the source code shows, FastDFS records the operation type of each file operation in its logs, so you can monitor the log output and add, delete, modify, or query Redis entries according to these types.

    //storage access log actions
    #define ACCESS_LOG_ACTION_UPLOAD_FILE "upload"
    #define ACCESS_LOG_ACTION_DOWNLOAD_FILE "download"
    #define ACCESS_LOG_ACTION_DELETE_FILE "delete"
    #define ACCESS_LOG_ACTION_GET_METADATA "get_metadata"
    #define ACCESS_LOG_ACTION_SET_METADATA "set_metadata"
    #define ACCESS_LOG_ACTION_MODIFY_FILE "modify"
    #define ACCESS_LOG_ACTION_APPEND_FILE "append"
    #define ACCESS_LOG_ACTION_TRUNCATE_FILE "truncate"
    #define ACCESS_LOG_ACTION_QUERY_FILE "status"

- After reading the source code of the FastDFS storage module, I found that the FastDFS server does not store the original file name, and the original file name is not included in the corresponding file attribute structure. The source code needs to be modified to output the original file name to the log, which is difficult.

    typedef struct
    {
        bool if_gen_filename; //if upload generate filename
        char file_type; //regular or link file
        bool if_sub_path_alloced; //if sub path alloced since V3.0
        char master_filename[128];
        char file_ext_name[FDFS_FILE_EXT_NAME_MAX_LEN + 1];
        char formatted_ext_name[FDFS_FILE_EXT_NAME_MAX_LEN + 2];
        char prefix_name[FDFS_FILE_PREFIX_MAX_LEN + 1];
        char group_name[FDFS_GROUP_NAME_MAX_LEN + 1]; //the upload group name
        int start_time; //upload start timestamp
        FDFSTrunkFullInfo trunk_info;
        FileBeforeOpenCallback before_open_callback;
        FileBeforeCloseCallback before_close_callback;
    } StorageUploadInfo;

    typedef struct
    {
        char op_flag;
        char *meta_buff;
        int meta_bytes;
    } StorageSetMetaInfo;

    typedef struct
    {
        char filename[MAX_PATH_SIZE + 128]; //full filename
        /* FDFS logic filename to log not including group name */
        char fname2log[128+sizeof(FDFS_STORAGE_META_FILE_EXT)];
        char op; //w for writing, r for reading, d for deleting etc.
        char sync_flag; //sync flag log to binlog
        bool calc_crc32; //if calculate file content hash code
        bool calc_file_hash; //if calculate file content hash code
        int open_flags; //open file flags
        int file_hash_codes[4]; //file hash code
        int64_t crc32; //file content crc32 signature
        MD5_CTX md5_context;
        union
        {
            StorageUploadInfo upload;
            StorageSetMetaInfo setmeta;
        } extra_info;
        int dio_thread_index; //dio thread index
        int timestamp2log; //timestamp to log
        int delete_flag; //delete file flag
        int create_flag; //create file flag
        int buff_offset; //buffer offset after recv to write to file
        int fd; //file description no
        int64_t start; //the start offset of file
        int64_t end; //the end offset of file
        int64_t offset; //the current offset of file
        FileDealDoneCallback done_callback;
        DeleteFileLogCallback log_callback;
        struct timeval tv_deal_start; //task deal start tv for access log
    } StorageFileContext;

- Update the Redis database in real time according to the file operation types recorded in the FastDFS logs
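A rough sketch of what such a log-driven update could look like is given below. It is only an illustration: the record layout "<action> <file ID> <original name>" is hypothetical, because stock FastDFS does not log the original file name, and the function name log_to_redis_cmd is made up for this example. Only the action strings come from the source code quoted above.

```shell
# Translate one log record into a Redis command on stdout.
# $1: action  $2: file ID  $3: original file name (hypothetical log field)
log_to_redis_cmd() {
    case $1 in
        upload) printf 'SET /%s %s\n' "${3#/}" "$2" ;;
        delete) printf 'DEL /%s\n' "${3#/}" ;;
        *)      return 0 ;;   # download, status, metadata: nothing to sync
    esac
}
```

Fed from something like `tail -F` on the modified log, with each record passed to this function and the output piped into `redis-cli`, this would keep the mapping close to real time.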