Pods in a StatefulSet each get their own DNS record. For example, if a StatefulSet is named etcd and its associated Headless Service is named etcd-headless, CoreDNS serves an A record for each of its Pods, as follows.

- etcd-0.etcd-headless.default.svc.cluster.local

- etcd-1.etcd-headless.default.svc.cluster.local

- ……

So can Pods that are not managed by a StatefulSet get DNS records like these too?

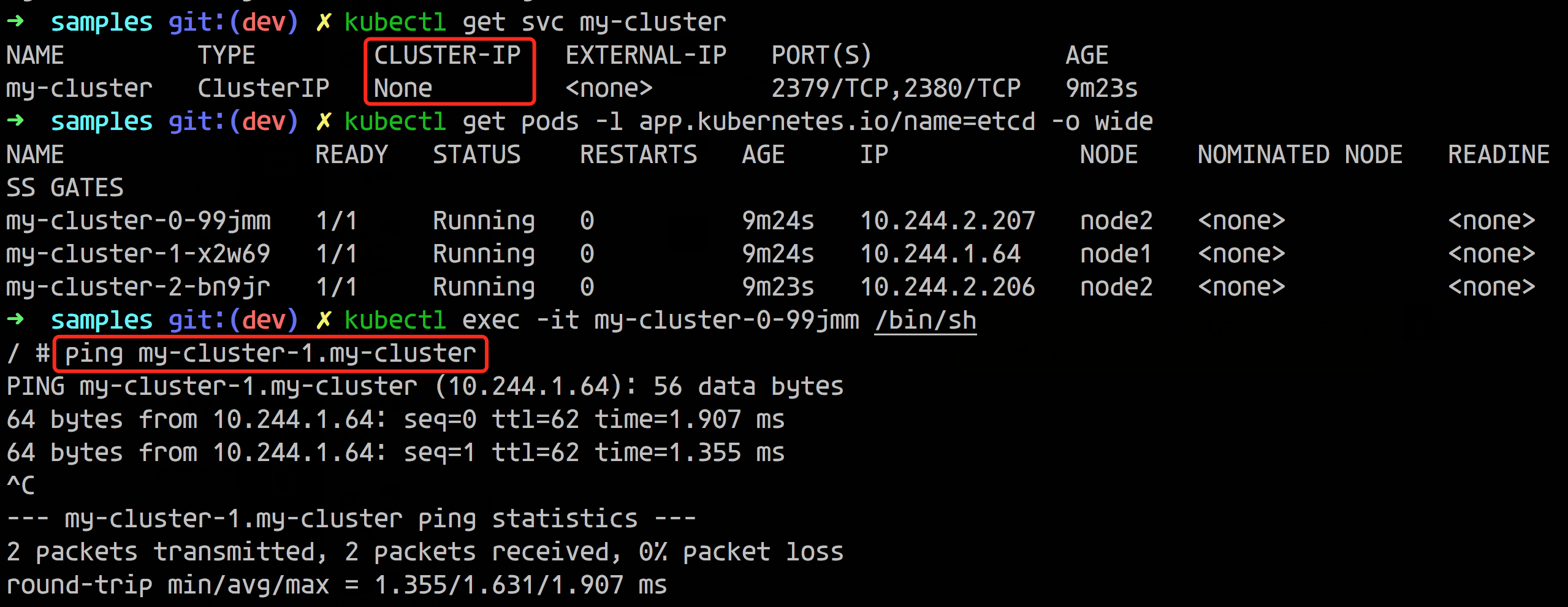

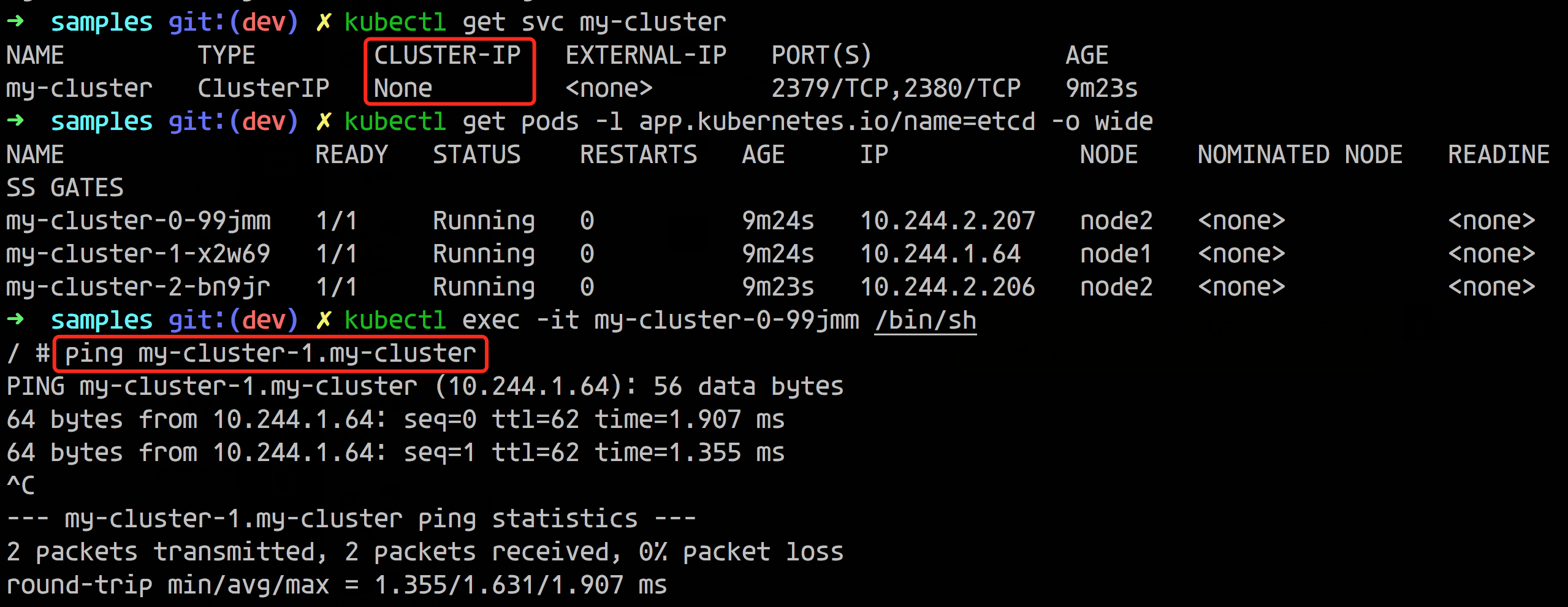

As the screenshots above show, there is only a Headless Service here, and the Pods are managed by a ReplicaSet rather than a StatefulSet, yet they appear to have resolution records just like those of a StatefulSet.

How is this done? Records like these would normally suggest Pods managed by a StatefulSet, but these Pods belong to a ReplicaSet. It works because any Pod can have its own DNS record, so we can get resolution records similar to those of StatefulSet Pods.

First, let’s deploy an ordinary application managed by a Deployment, defined as follows.

```yaml
# nginx.yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      containers:
        - name: nginx
          image: nginx:1.7.9
          ports:
            - containerPort: 80
```

Two Pods were created after deployment.

```shell
$ kubectl apply -f nginx.yaml
deployment.apps/nginx created
$ kubectl get pod -l app=nginx -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
nginx-5d59d67564-2cwdz   1/1     Running   0          19s   10.244.1.68    node1   <none>           <none>
nginx-5d59d67564-bp5br   1/1     Running   0          19s   10.244.2.209   node2   <none>           <none>
```

Then define the Headless Service as follows:

```yaml
# service.yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx
spec:
  clusterIP: None
  ports:
    - name: http
      port: 80
      protocol: TCP
  selector:
    app: nginx
  type: ClusterIP
```

Create the service and try to resolve the service DNS.

```shell
$ kubectl apply -f service.yaml
service/nginx created
$ kubectl get svc
NAME         TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)   AGE
kubernetes   ClusterIP   10.96.0.1    <none>        443/TCP   38d
nginx        ClusterIP   None         <none>        80/TCP    7s
$ dig @10.96.0.10 nginx.default.svc.cluster.local
; <<>> DiG 9.9.4-RedHat-9.9.4-73.el7_6 <<>> @10.96.0.10 nginx.default.svc.cluster.local
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 2573
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx.default.svc.cluster.local. IN A
;; ANSWER SECTION:
nginx.default.svc.cluster.local. 30 IN A 10.244.2.209
nginx.default.svc.cluster.local. 30 IN A 10.244.1.68
;; Query time: 19 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Nov 25 11:44:41 CST 2020
;; MSG SIZE rcvd: 154
```

Querying the FQDN of the nginx Service with dig returns multiple A records, one for each Pod.

The 10.96.0.10 used in the dig command above is the cluster IP of kube-dns, which you can look up in the kube-system namespace:

```shell
$ kubectl -n kube-system get svc
NAME       TYPE        CLUSTER-IP   EXTERNAL-IP   PORT(S)         AGE
kube-dns   ClusterIP   10.96.0.10   <none>        53/UDP,53/TCP   52m
```

Next, let’s prefix the Service name with a Pod name and ask kube-dns to resolve it.

```shell
$ dig @10.96.0.10 nginx-5d59d67564-bp5br.nginx.default.svc.cluster.local
; <<>> DiG 9.9.4-RedHat-9.9.4-73.el7_6 <<>> @10.96.0.10 nginx-5d59d67564-bp5br.nginx.default.svc.cluster.local
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 10485
;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1
;; WARNING: recursion requested but not available
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx-5d59d67564-bp5br.nginx.default.svc.cluster.local. IN A
;; AUTHORITY SECTION:
cluster.local. 30 IN SOA ns.dns.cluster.local. hostmaster.cluster.local. 1606275807 7200 1800 86400 30
;; Query time: 4 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Nov 25 11:47:31 CST 2020
;; MSG SIZE rcvd: 176
```

As you can see, the name does not resolve: the status is NXDOMAIN and the ANSWER section is empty. The official documentation covers this in its description of the Pod’s hostname and subdomain fields.

The Pod spec has an optional hostname field that can be used to specify the Pod’s hostname. When this field is set, it takes precedence over the Pod’s name as the Pod’s hostname. For example, given a Pod with hostname set to "my-host", the Pod’s hostname will be set to "my-host". The Pod spec also has an optional subdomain field that can be used to specify the Pod’s subdomain. For example, if a Pod in the namespace "my-namespace" has hostname set to "foo" and subdomain set to "bar", its fully qualified domain name (FQDN) is "foo.bar.my-namespace.svc.cluster-domain.example".
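
The naming rule quoted above can be sketched in a few lines of Python. This is only an illustration of how the FQDN is composed; the helper name `pod_fqdn` and the default cluster domain `cluster.local` are assumptions made for this example, not part of any Kubernetes API.

```python
def pod_fqdn(hostname: str, subdomain: str, namespace: str,
             cluster_domain: str = "cluster.local") -> str:
    """Compose <hostname>.<subdomain>.<namespace>.svc.<cluster-domain>.

    Hypothetical helper for illustration only.
    """
    return ".".join([hostname, subdomain, namespace, "svc", cluster_domain])


# The example from the documentation paragraph quoted above.
print(pod_fqdn("foo", "bar", "my-namespace", "cluster-domain.example"))

# The StatefulSet example from the start of this article follows the same
# pattern, with the Pod name as hostname and the Headless Service as subdomain.
print(pod_fqdn("etcd-0", "etcd-headless", "default"))
```

Seen this way, a StatefulSet Pod name such as etcd-0.etcd-headless.default.svc.cluster.local is just one instance of the general hostname/subdomain scheme.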

Now let’s edit nginx.yaml and add subdomain to test it.

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx
spec:
  selector:
    matchLabels:
      app: nginx
  replicas: 2
  template:
    metadata:
      labels:
        app: nginx
    spec:
      subdomain: nginx
      containers:
        - name: nginx
          image: nginx:1.7.9
          ports:
            - containerPort: 80
```

Update the Deployment, then try to resolve the Pod DNS name again.

```shell
$ kubectl apply -f nginx.yaml
$ kubectl get pod -l app=nginx -o wide
NAME                     READY   STATUS    RESTARTS   AGE   IP             NODE    NOMINATED NODE   READINESS GATES
nginx-78f58d8bcb-6kctm   1/1     Running   0          8s    10.244.2.210   node2   <none>           <none>
nginx-78f58d8bcb-6tbnv   1/1     Running   0          15s   10.244.1.69    node1   <none>           <none>
$ dig @10.96.0.10 nginx-78f58d8bcb-6kctm.nginx.default.svc.cluster.local
; <<>> DiG 9.9.4-RedHat-9.9.4-73.el7_6 <<>> @10.96.0.10 nginx-78f58d8bcb-6kctm.nginx.default.svc.cluster.local
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NXDOMAIN, id: 34172
;; flags: qr aa rd; QUERY: 1, ANSWER: 0, AUTHORITY: 1, ADDITIONAL: 1
;; WARNING: recursion requested but not available
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx-78f58d8bcb-6kctm.nginx.default.svc.cluster.local. IN A
;; AUTHORITY SECTION:
cluster.local. 30 IN SOA ns.dns.cluster.local. hostmaster.cluster.local. 1606276303 7200 1800 86400 30
;; Query time: 2 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Nov 25 11:52:18 CST 2020
;; MSG SIZE rcvd: 176
```

As you can see, the name still does not resolve, so let’s try the example from the official documentation and create the Pods directly, without a Deployment. The manifest below sets both hostname and subdomain on each Pod.

```yaml
# individual-pods-example.yaml
apiVersion: v1
kind: Service
metadata:
  name: default-subdomain
spec:
  selector:
    name: busybox
  clusterIP: None
  ports:
    - name: foo # Actually, no port is needed.
      port: 1234
      targetPort: 1234
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox1
  labels:
    name: busybox
spec:
  hostname: busybox-1
  subdomain: default-subdomain
  containers:
    - image: busybox:1.28
      command:
        - sleep
        - "3600"
      name: busybox
---
apiVersion: v1
kind: Pod
metadata:
  name: busybox2
  labels:
    name: busybox
spec:
  hostname: busybox-2
  subdomain: default-subdomain
  containers:
    - image: busybox:1.28
      command:
        - sleep
        - "3600"
      name: busybox
```

Deploy it, then try to resolve the Pod DNS name. Note that the hostname differs from the Pod name here: the hostname busybox-1 has an extra hyphen that the Pod name busybox1 does not.

```shell
$ kubectl apply -f individual-pods-example.yaml
$ dig @10.96.0.10 busybox-1.default-subdomain.default.svc.cluster.local
; <<>> DiG 9.11.3-1ubuntu1.5-Ubuntu <<>> @10.96.0.10 busybox-1.default-subdomain.default.svc.cluster.local
; (1 server found)
;; global options: +cmd
;; Got answer:
;; WARNING: .local is reserved for Multicast DNS
;; You are currently testing what happens when an mDNS query is leaked to DNS
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 12636
;; flags: qr aa rd ra; QUERY: 1, ANSWER: 1, AUTHORITY: 0, ADDITIONAL: 1
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
; COOKIE: 5499ded915cf1ff2 (echoed)
;; QUESTION SECTION:
;busybox-1.default-subdomain.default.svc.cluster.local. IN A
;; ANSWER SECTION:
busybox-1.default-subdomain.default.svc.cluster.local. 5 IN A 10.44.0.6
;; Query time: 0 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Fri Apr 19 15:27:38 CST 2019
;; MSG SIZE rcvd: 163
```

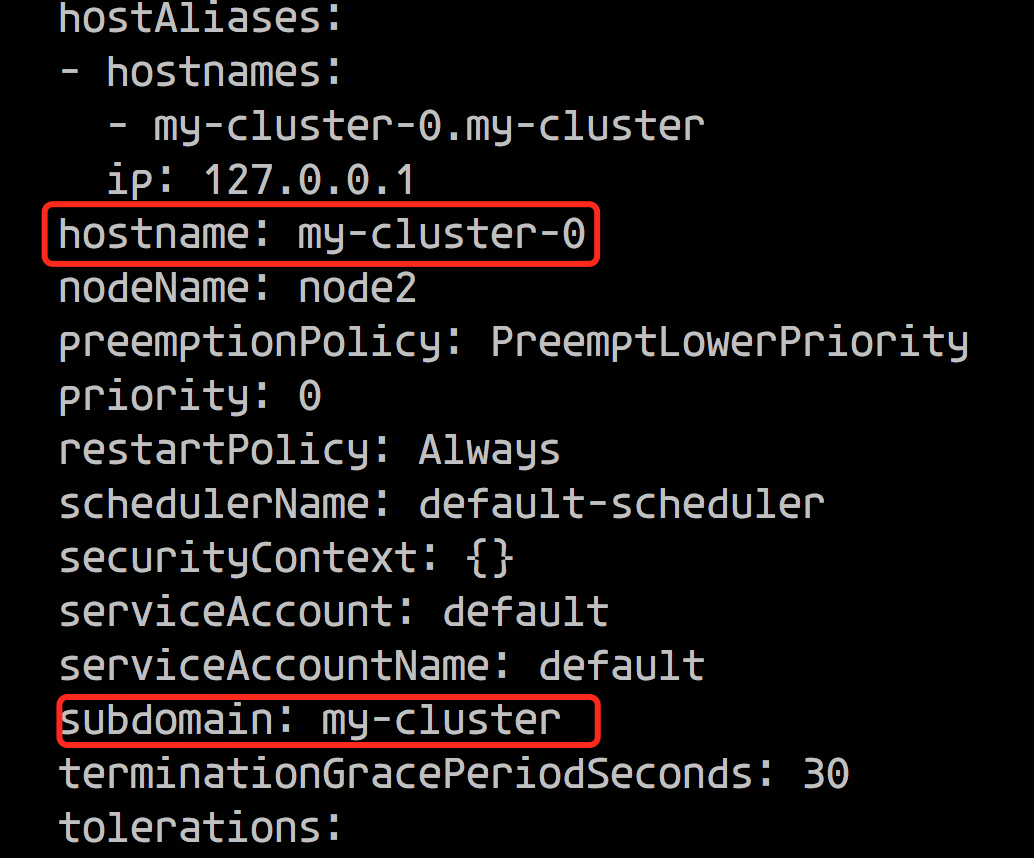

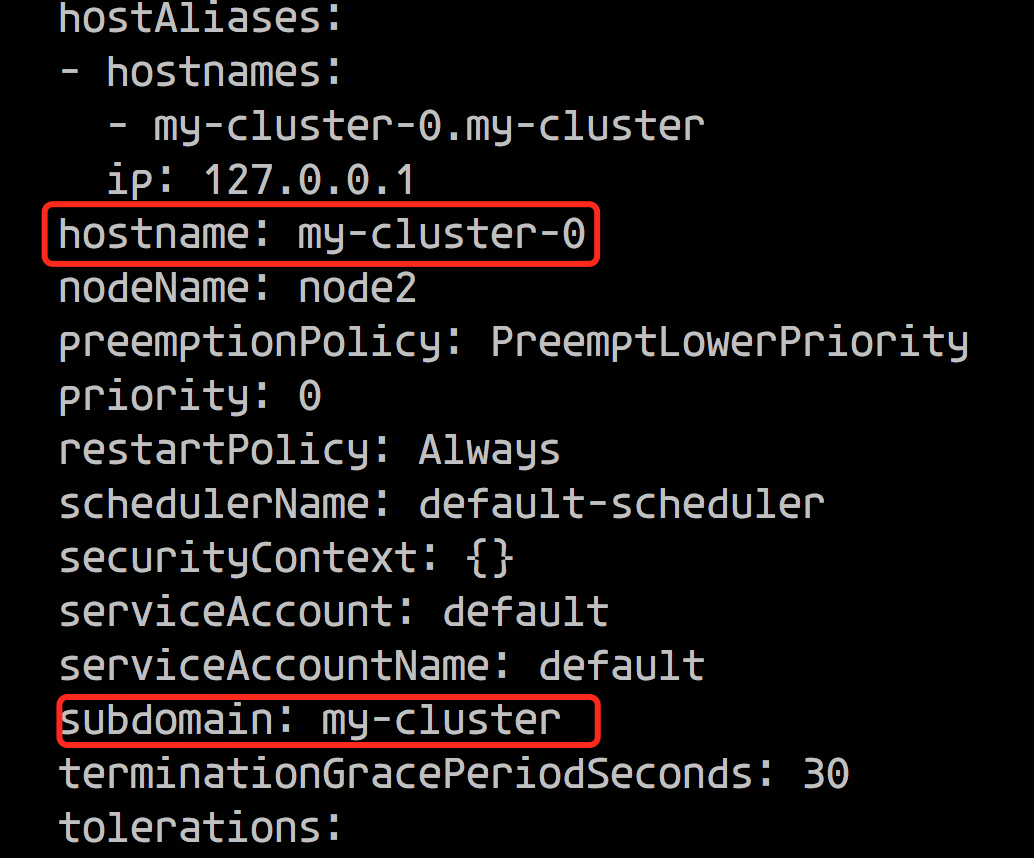

Now the ANSWER section contains a record. Both hostname and subdomain must be specified explicitly; neither can be omitted. The setup in the screenshot at the beginning works the same way.

Now let’s go back to the earlier nginx Deployment, add hostname: nginx to the Pod template alongside the subdomain, and resolve again.

```shell
$ dig @10.96.0.10 nginx.nginx.default.svc.cluster.local
; <<>> DiG 9.9.4-RedHat-9.9.4-73.el7_6 <<>> @10.96.0.10 nginx.nginx.default.svc.cluster.local
; (1 server found)
;; global options: +cmd
;; Got answer:
;; ->>HEADER<<- opcode: QUERY, status: NOERROR, id: 21127
;; flags: qr aa rd; QUERY: 1, ANSWER: 2, AUTHORITY: 0, ADDITIONAL: 1
;; WARNING: recursion requested but not available
;; OPT PSEUDOSECTION:
; EDNS: version: 0, flags:; udp: 4096
;; QUESTION SECTION:
;nginx.nginx.default.svc.cluster.local. IN A
;; ANSWER SECTION:
nginx.nginx.default.svc.cluster.local. 30 IN A 10.244.2.211
nginx.nginx.default.svc.cluster.local. 30 IN A 10.244.1.70
;; Query time: 1 msec
;; SERVER: 10.96.0.10#53(10.96.0.10)
;; WHEN: Wed Nov 25 11:55:37 CST 2020
;; MSG SIZE rcvd: 172
```

The name now resolves, but a Deployment cannot assign a different hostname to each Pod, so both Pods share the same hostname and the name resolves to two IPs, which is not what we intended.
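
To make the collision concrete, here is a deliberately simplified Python model of the behaviour observed in the experiments above. It is not how CoreDNS is implemented. It only encodes the rule this article arrives at: a Pod gets a per-Pod name when both hostname and subdomain are set and the subdomain matches the Headless Service. The `Pod` type, the `pod_a_records` function and the Pod names are hypothetical; the IPs are taken from the dig output above.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple, Optional


class Pod(NamedTuple):
    name: str
    ip: str
    hostname: Optional[str] = None
    subdomain: Optional[str] = None


def pod_a_records(pods: List[Pod], service: str, namespace: str = "default",
                  cluster_domain: str = "cluster.local") -> Dict[str, List[str]]:
    """Group Pod IPs by per-Pod DNS name (simplified model, see lead-in)."""
    records: Dict[str, List[str]] = defaultdict(list)
    for pod in pods:
        # Skip Pods that lack a hostname or whose subdomain is not the Service.
        if pod.hostname and pod.subdomain == service:
            fqdn = f"{pod.hostname}.{service}.{namespace}.svc.{cluster_domain}"
            records[fqdn].append(pod.ip)
    return dict(records)


# Two Pods from one Deployment template share hostname "nginx" (names made up).
deployment_pods = [
    Pod("nginx-a", "10.244.2.211", hostname="nginx", subdomain="nginx"),
    Pod("nginx-b", "10.244.1.70", hostname="nginx", subdomain="nginx"),
]
print(pod_a_records(deployment_pods, service="nginx"))
```

Because the name is keyed on the hostname rather than on the Pod name, every Pod stamped out from the same template lands under the same FQDN.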

Knowing this, though, we can write an Operator that manages Pods directly, giving each Pod a different hostname and setting its subdomain to the name of a Headless Service, which is equivalent to the per-Pod resolution that a StatefulSet provides.
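
As a rough sketch of the manifest-generation part of such an Operator, the Python below builds Pod manifests as plain dictionaries, each with a distinct hostname and a shared subdomain. The function name `build_pods`, the `<name>-<index>` naming scheme and the `app` label are assumptions chosen for illustration. A real Operator would also have to create these Pods through the Kubernetes API and reconcile them, which is not shown here.

```python
from typing import Any, Dict, List


def build_pods(name: str, service: str, image: str,
               replicas: int) -> List[Dict[str, Any]]:
    """Return Pod manifests with hostnames <name>-0, <name>-1, ... (hypothetical)."""
    pods = []
    for i in range(replicas):
        pod_name = f"{name}-{i}"
        pods.append({
            "apiVersion": "v1",
            "kind": "Pod",
            "metadata": {"name": pod_name, "labels": {"app": name}},
            "spec": {
                "hostname": pod_name,  # unique per Pod
                "subdomain": service,  # name of the Headless Service
                "containers": [{"name": name, "image": image}],
            },
        })
    return pods


# Print the DNS name each generated Pod would be expected to get in "default".
for pod in build_pods("nginx", "nginx", "nginx:1.7.9", 2):
    spec = pod["spec"]
    print(f'{spec["hostname"]}.{spec["subdomain"]}.default.svc.cluster.local')
```

With unique hostnames, each name maps to exactly one Pod, which avoids the two-IP result seen with the Deployment.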