Kubernetes users often need to share clusters (multi-tenancy) to simplify operations and reduce costs while meeting the needs of multiple teams and customers. Kubernetes does not provide multi-tenancy directly, but it offers a set of features on which multi-tenancy can be built, and a number of projects in the Kubernetes community implement multi-tenancy on top of those features. This article covers the existing implementation mechanisms and optimization options for Kubernetes multi-tenancy, and how enterprises should choose between multi-tenancy (shared clusters) and multi-cluster solutions.

Kubernetes Isolation Techniques

Control Plane Isolation

Kubernetes provides three mechanisms to achieve control plane isolation: namespace, RBAC, and quota.

Every Kubernetes user has likely encountered namespaces, which provide separate naming scopes: objects in different namespaces can have the same name. Namespaces also limit the scope of RBAC rules and quotas.

RBAC limits the access that users and workloads have to the API. With appropriate RBAC rules, each tenant's access to API resources can be isolated.
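As a minimal sketch of such a rule, the following Python snippet builds a Role and a RoleBinding that grant one user read-only access to pods in a single namespace. The namespace `team-a`, the user `alice`, and the object names are illustrative assumptions, not values from any particular cluster. The manifest is printed as JSON, which kubectl accepts alongside YAML.

```python
import json


def namespaced_read_only_access(namespace, user):
    """Build a Role and RoleBinding that let `user` read pods in one namespace."""
    role = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "Role",
        "metadata": {"name": "pod-reader", "namespace": namespace},
        "rules": [
            {
                "apiGroups": [""],  # "" denotes the core API group
                "resources": ["pods"],
                "verbs": ["get", "list", "watch"],
            }
        ],
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "pod-reader-binding", "namespace": namespace},
        "subjects": [
            {"kind": "User", "name": user, "apiGroup": "rbac.authorization.k8s.io"}
        ],
        "roleRef": {
            "kind": "Role",
            "name": role["metadata"]["name"],
            "apiGroup": "rbac.authorization.k8s.io",
        },
    }
    return [role, binding]


if __name__ == "__main__":
    # "team-a" and "alice" are hypothetical example values.
    manifest = {
        "apiVersion": "v1",
        "kind": "List",
        "items": namespaced_read_only_access("team-a", "alice"),
    }
    print(json.dumps(manifest, indent=2))
```

Because both objects are namespaced, the permission does not extend to any other tenant's namespace.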

ResourceQuota limits the resources a namespace can consume, preventing one namespace from taking up too many cluster resources and affecting applications in other namespaces. It comes with a restriction, however: once a quota covers compute resources such as CPU or memory, every container in the namespace must specify the corresponding request or limit, or pod creation may be rejected. A LimitRange can supply defaults for containers that omit them.
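The sketch below shows what such a quota might look like, paired with a LimitRange that fills in default requests and limits for containers that do not declare them. All names and numeric values are assumptions chosen for illustration.

```python
import json


def tenant_quota(namespace, cpu, memory, max_pods):
    """Build a ResourceQuota capping total CPU, memory, and pod count in a namespace."""
    return {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "tenant-quota", "namespace": namespace},
        "spec": {
            "hard": {
                "requests.cpu": cpu,
                "requests.memory": memory,
                "limits.cpu": cpu,
                "limits.memory": memory,
                "pods": str(max_pods),
            }
        },
    }


def default_limits(namespace):
    """Build a LimitRange that gives containers default requests and limits."""
    return {
        "apiVersion": "v1",
        "kind": "LimitRange",
        "metadata": {"name": "tenant-defaults", "namespace": namespace},
        "spec": {
            "limits": [
                {
                    "type": "Container",
                    # Applied when a container omits resources.requests
                    "defaultRequest": {"cpu": "100m", "memory": "128Mi"},
                    # Applied when a container omits resources.limits
                    "default": {"cpu": "500m", "memory": "512Mi"},
                }
            ]
        },
    }


if __name__ == "__main__":
    # Example values only; size them to the tenant's actual needs.
    items = [tenant_quota("team-a", "8", "16Gi", 50), default_limits("team-a")]
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": items}, indent=2))
```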

While these three mechanisms provide some degree of control plane isolation, they do not solve the problem completely. Cluster-scoped resources such as CRDs, for example, cannot be isolated well.

Data Plane Isolation

Data plane isolation is divided into three main areas: container runtime, storage, and network.

Containers share a kernel with the host, so attackers can exploit vulnerabilities in applications or the host system to break out of the container boundary and attack the host or other containers. The usual solution is to run the container in an isolated environment such as a virtual machine or a user-space kernel; the former approach is represented by Kata Containers and the latter by gVisor.
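In Kubernetes, a sandboxed runtime is typically selected per pod through a RuntimeClass. The sketch below builds a RuntimeClass and a pod that references it. The handler name `runsc` is an assumption: it must match whatever handler is configured in the container runtime on the nodes. The pod name, namespace, and image are likewise placeholders.

```python
import json


def runtime_class(name, handler):
    """Build a RuntimeClass that maps a name to a node-level runtime handler."""
    return {
        "apiVersion": "node.k8s.io/v1",
        "kind": "RuntimeClass",
        "metadata": {"name": name},
        "handler": handler,
    }


def sandboxed_pod(name, namespace, image, runtime_class_name):
    """Build a Pod that asks to be run by the given RuntimeClass."""
    return {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": name, "namespace": namespace},
        "spec": {
            "runtimeClassName": runtime_class_name,
            "containers": [{"name": name, "image": image}],
        },
    }


if __name__ == "__main__":
    # Assumed handler name; it depends on how the nodes are configured.
    rc = runtime_class("gvisor", "runsc")
    pod = sandboxed_pod("web", "tenant-a", "nginx:1.25", rc["metadata"]["name"])
    print(json.dumps({"apiVersion": "v1", "kind": "List", "items": [rc, pod]}, indent=2))
```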

Storage isolation should ensure that volumes are not accessed across tenants. Since StorageClass is a cluster-wide resource, its reclaimPolicy should be set to Delete so that a PV is removed along with its claim and cannot be reused by another tenant. In addition, volume types such as hostPath should be prohibited to avoid misuse of the node’s local storage.
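Both recommendations can be sketched in a few lines. The first function builds a StorageClass with `reclaimPolicy: Delete`; the second reports hostPath volumes in a pod manifest, which is the kind of check an admission policy would perform. The provisioner name `csi.example.com` and the function names are hypothetical.

```python
def tenant_storage_class(name, provisioner):
    """Build a StorageClass whose volumes are deleted when their claim is released."""
    return {
        "apiVersion": "storage.k8s.io/v1",
        "kind": "StorageClass",
        "metadata": {"name": name},
        "provisioner": provisioner,
        "reclaimPolicy": "Delete",
    }


def hostpath_volumes(pod):
    """Return the names of volumes in a Pod manifest that use hostPath."""
    volumes = pod.get("spec", {}).get("volumes", [])
    return [v["name"] for v in volumes if "hostPath" in v]


if __name__ == "__main__":
    # "csi.example.com" is a placeholder provisioner name.
    print(tenant_storage_class("tenant-standard", "csi.example.com"))

    pod = {
        "apiVersion": "v1",
        "kind": "Pod",
        "metadata": {"name": "demo"},
        "spec": {
            "containers": [{"name": "demo", "image": "busybox"}],
            "volumes": [
                {"name": "scratch", "emptyDir": {}},
                {"name": "node-logs", "hostPath": {"path": "/var/log"}},
            ],
        },
    }
    offending = hostpath_volumes(pod)
    if offending:
        print("rejected: hostPath volumes not allowed:", offending)
```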

Network isolation is usually ensured by NetworkPolicy. By default, all pods within Kubernetes are allowed to communicate with each other. Using NetworkPolicy, you can limit the range of pods that a pod can communicate with to prevent accidental network access. In addition, service meshes typically provide more advanced network isolation capabilities.
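As an illustration, the following sketch builds a NetworkPolicy that only admits ingress traffic from pods in the same namespace, a common baseline for namespace-based tenants. The policy and namespace names are assumptions, and the policy only takes effect if the cluster's network plugin enforces NetworkPolicy.

```python
import json


def same_namespace_only(namespace):
    """Build a NetworkPolicy allowing ingress only from pods in the same namespace."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "allow-same-namespace", "namespace": namespace},
        "spec": {
            # An empty podSelector applies the policy to every pod in the namespace.
            "podSelector": {},
            "policyTypes": ["Ingress"],
            # A podSelector without a namespaceSelector matches pods
            # in the policy's own namespace only.
            "ingress": [{"from": [{"podSelector": {}}]}],
        },
    }


if __name__ == "__main__":
    # "tenant-a" is an example namespace.
    print(json.dumps(same_namespace_only("tenant-a"), indent=2))
```

Traffic from other namespaces is dropped because no rule in the policy admits it; egress is left unrestricted in this sketch.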

Multi-Tenancy Options

The control plane and data plane isolation features mentioned above are relatively discrete and fragmented features within Kubernetes that fall short of a full multi-tenancy solution and require considerable effort to organize. However, there are a number of open source projects in the Kubernetes community dedicated to solving the multi-tenancy problem. In terms of general direction, they fall into two categories. One category uses namespace as a boundary to divide tenants, and the other provides a virtual control plane for tenants.

Tenant Segmentation by Namespace

RBAC and ResourceQuota in Kubernetes’ control plane isolation are bounded by namespaces, so dividing tenants by namespace is a natural idea. In practice, however, restricting a tenant to a single namespace has limitations. For example, the tenant cannot be further subdivided by team or by application, which makes it difficult to manage. To address this, the Kubernetes community provides the Hierarchical Namespace Controller (HNC) under kubernetes-sigs, which supports hierarchical namespaces.

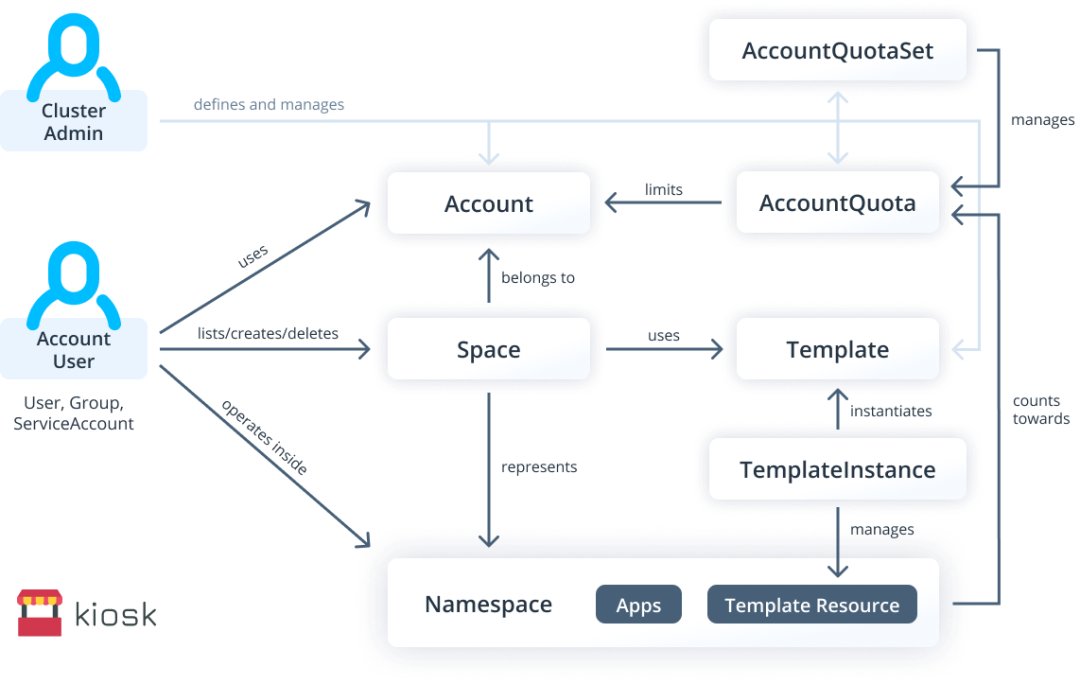
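The idea behind hierarchical namespaces can be illustrated with a toy model in which a policy attached to a parent namespace is inherited by all of its descendants. This is a conceptual sketch only: the `Namespace` class and its methods are hypothetical and do not reflect the API of the hierarchical namespace controller.

```python
class Namespace:
    """Toy model of a namespace that inherits role bindings from its ancestors."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent
        self.own_bindings = set()  # (user, role) pairs defined directly here

    def bind(self, user, role):
        self.own_bindings.add((user, role))

    def effective_bindings(self):
        """Bindings defined here plus everything inherited from ancestors."""
        inherited = self.parent.effective_bindings() if self.parent else set()
        return inherited | self.own_bindings


if __name__ == "__main__":
    # Hypothetical tenant -> team -> application hierarchy.
    tenant = Namespace("tenant-a")
    team = Namespace("team-1", parent=tenant)
    app = Namespace("app-x", parent=team)

    tenant.bind("alice", "admin")  # tenant-wide administrator
    team.bind("bob", "edit")       # team-level developer

    for ns in (tenant, team, app):
        print(ns.name, sorted(ns.effective_bindings()))
```

A tenant administrator defined once at the top of the tree thus gains access to every team and application namespace below it, without repeating the binding in each one.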

In addition, third-party open source projects such as Capsule and kiosk provide richer multi-tenancy support.

Virtual Control Plane

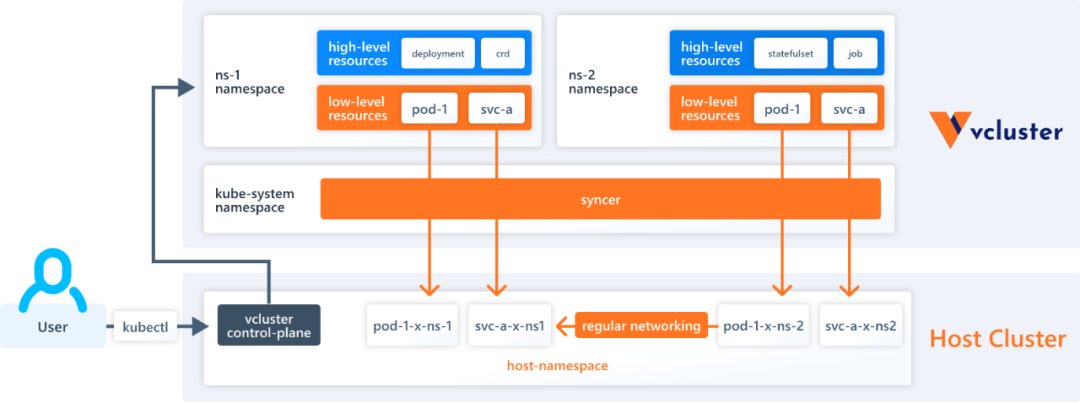

Another multi-tenant implementation option is to provide a separate virtual control plane for each tenant to completely isolate the tenant’s resources. A virtual control plane is typically implemented by running a separate apiserver for each tenant and using a controller to synchronize resources from the tenant apiserver to the original Kubernetes cluster. Each tenant can only access its own apiserver, while the apiserver of the original Kubernetes cluster is typically not exposed publicly.

This type of solution comes at the cost of additional apiserver overhead, but allows for more complete control plane isolation. Combined with data plane isolation techniques, the virtual control plane allows for a more thorough and secure multi-tenant solution. This type of solution is represented by the vcluster project.
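The synchronization step described above can be pictured with a toy model: each tenant sees its own namespaces and names, while a syncer copies objects into the shared host cluster under translated names so that tenants never collide. The naming scheme and function names below are hypothetical and chosen only for illustration; they are not taken from any specific project.

```python
def translate_name(tenant, namespace, name):
    """Map a tenant-side (namespace, name) to a unique host-side name.

    Hypothetical scheme: embed the tenant and namespace in the host name.
    """
    return f"{name}-x-{namespace}-x-{tenant}"


def sync_to_host(tenant, tenant_objects, host_store):
    """Copy a tenant's objects into the shared host store under translated names."""
    for (namespace, name), spec in tenant_objects.items():
        host_store[translate_name(tenant, namespace, name)] = {
            "tenant": tenant,
            "spec": spec,
        }
    return host_store


if __name__ == "__main__":
    host = {}
    # Two tenants each create a pod called "web" in their own "default" namespace.
    sync_to_host("tenant-a", {("default", "web"): {"image": "nginx"}}, host)
    sync_to_host("tenant-b", {("default", "web"): {"image": "httpd"}}, host)
    for host_name, obj in sorted(host.items()):
        print(host_name, obj)
```

Both tenants keep the illusion of owning a `default` namespace, while the host cluster stores two distinct objects.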

How do I choose?

The choice between dividing tenants by namespace and using a virtual control plane should depend on the multi-tenancy scenario. In general, tenancy by namespace offers somewhat less isolation and flexibility, but has the advantage of being lightweight. For scenarios where multiple teams share a cluster, tenancy by namespace is more appropriate. For scenarios where multiple customers share a cluster, a virtual control plane usually provides better isolation.

Multi-Cluster Solutions

As the sections above show, sharing a Kubernetes cluster is not easy. Kubernetes does not natively support multi-tenancy; it only provides fine-grained building blocks for it. Supporting a multi-tenant scenario requires additional projects to isolate tenants on both the control plane and the data plane, which imposes a significant learning and adaptation cost. As a result, many users currently choose multi-cluster solutions rather than shared clusters.

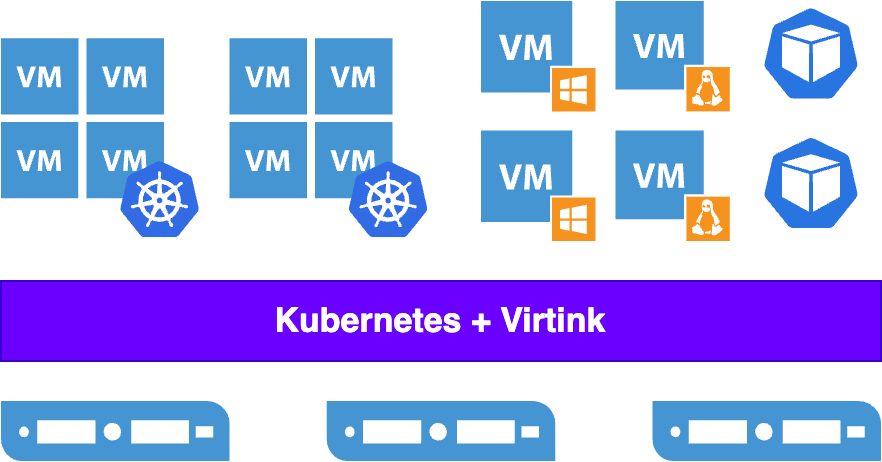

Compared to shared clusters, multi-cluster solutions offer strong isolation and clear boundaries, at the cost of higher resource overhead and higher operation and maintenance costs. Because each cluster requires its own control plane and worker nodes, Kubernetes clusters are often built on virtual machines to improve utilization of the physical cluster. However, traditional virtualization products tend to be large, full-featured, and expensive because they must cover a wider range of scenarios, which makes them a poor fit for hosting virtualized Kubernetes clusters.

Based on this, it can be argued that the ideal virtualization platform to support a virtualized Kubernetes cluster should have the following characteristics:

- Lightweight. It does not need to cover scenarios such as desktop virtualization and can focus on server virtualization, dropping all unnecessary features and overhead.

- Efficient. It makes full use of paravirtualized I/O technologies like virtio to improve efficiency.

- Secure. It minimizes the possibility of attacks on the host.

- Kubernetes native. The virtualization platform itself should ideally be a Kubernetes cluster to reduce learning and operations costs.

These are exactly the characteristics of the Virtink virtualization engine that SmartX released some time ago.

Virtink is based on the Cloud Hypervisor project and provides the ability to orchestrate lightweight, modern virtual machines on Kubernetes. Compared to the QEMU- and libvirt-based KubeVirt project, Virtink reduces the additional overhead by at least 100MB per VM. It also makes full use of virtio to improve I/O efficiency. In terms of security, Cloud Hypervisor is written in Rust for safer memory management, and by dropping obsolete and unnecessary peripheral support it minimizes the attack surface exposed to VMs, improving host security. Lighter, more efficient, and more secure, Virtink provides more desirable and cost-effective virtualization support for running Kubernetes in Kubernetes, minimizing the overhead of the virtualization layer.

In addition, to create and maintain virtualized Kubernetes clusters on Virtink, SmartX has developed the knest command-line tool, which helps users create clusters and scale them up and down in one click. Creating a virtualized Kubernetes cluster on a Virtink cluster is as simple as executing the “knest create” command. Subsequent scaling of the cluster can also be done in one click with the knest tool.

Summary

Kubernetes does not have multi-tenancy capabilities built in, but it provides fine-grained features that support them. These features, combined with third-party tools, can enable multi-tenant sharing of clusters. At the same time, these tools bring additional learning, operation, and maintenance costs, so multiple virtualized clusters are still the solution of choice for many users. The SmartX Virtink open source virtualization engine is based on the efficient and secure Cloud Hypervisor and provides the ability to orchestrate lightweight virtual machines on Kubernetes, minimizing the resource overhead of virtualizing Kubernetes clusters. The companion knest command-line tool supports one-click cluster creation and maintenance, effectively reducing the cost of operating and maintaining multiple clusters.

References

- kubernetes-sigs / hierarchical-namespaces https://github.com/kubernetes-sigs/hierarchical-namespaces

- clastix / capsule https://github.com/clastix/capsule

- loft-sh / kiosk https://github.com/loft-sh/kiosk

- loft-sh / vcluster https://github.com/loft-sh/vcluster

- smartxworks / virtink https://github.com/smartxworks/virtink

- smartxworks / knest https://github.com/smartxworks/knest